The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Satellite Data Acquisition and Preprocessing interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Satellite Data Acquisition and Preprocessing Interview

Q 1. Explain the different types of satellite sensors and their applications.

Satellite sensors are the eyes in the sky, collecting data about Earth’s surface and atmosphere. They come in various types, each designed for specific applications. Broadly, we categorize them by the type of energy they detect.

- Optical Sensors: These sensors capture reflected sunlight, similar to how a camera works. They are further subdivided into:

- Multispectral: Capture images in multiple, distinct wavelength bands (e.g., red, green, blue, near-infrared). Landsat and Sentinel-2 are examples, used for land cover classification, vegetation monitoring, and precision agriculture.

- Hyperspectral: Capture images in many narrow, contiguous wavelength bands, providing very detailed spectral information. Useful for mineral identification, pollution monitoring, and advanced vegetation analysis.

- Panchromatic: Capture images in a single, broad band, typically covering most of the visible spectrum and sometimes extending into the near-infrared, resulting in high-resolution grayscale images. Often used for creating high-resolution base maps or for sharpening lower-resolution multispectral images (pan-sharpening).

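- Microwave Sensors: These sensors operate at microwave wavelengths, which pass through clouds and, at longer wavelengths such as L-band, can partially penetrate vegetation canopies, enabling data acquisition regardless of weather conditions. They fall into two groups: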

- Synthetic Aperture Radar (SAR): These active sensors emit their own microwaves and measure the backscattered signal. Excellent for mapping topography, monitoring sea ice, and detecting changes in land use, even at night or through clouds.

- Passive Microwave Sensors: These sensors measure the naturally emitted microwave radiation from the Earth’s surface. Useful for monitoring soil moisture, sea surface temperature, and atmospheric water vapor.

- Thermal Infrared Sensors: These sensors detect heat emitted by objects on Earth’s surface. Applications include monitoring volcanic activity, tracking wildfires, and measuring land surface temperature.

The choice of sensor depends largely on the application. For example, if you need to monitor deforestation regardless of cloud cover, SAR would be ideal. If you need detailed spectral information for mineral exploration, hyperspectral imagery would be the best choice.

Q 2. Describe the process of satellite data acquisition, from planning to downlink.

Satellite data acquisition is a complex process involving several stages. It begins with careful mission planning.

- Mission Planning: This includes defining the objectives, selecting the appropriate satellite and sensors, determining the area of interest, and scheduling the data acquisition. Factors like sun angle, cloud cover probability, and revisit time need meticulous consideration.

- Data Acquisition: The satellite’s sensors capture the data as it orbits the Earth. The process is automated, with onboard computers controlling sensor operations and data storage.

- Onboard Processing: Some satellites perform initial processing onboard to reduce the volume of data transmitted to the ground. This is mainly data compression, although some missions also perform tasks such as screening out cloudy scenes or applying basic corrections.

- Telemetry and Tracking: Ground stations track the satellite’s position and receive the telemetry data, which provides information on the satellite’s health and status.

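- Downlink: The data is transmitted from the satellite to ground stations by radio (commonly in the X-band for Earth observation missions). Transmission is only possible while the satellite is in view of a station, so pass duration limits how much data can be downlinked per contact. The achievable data rate is set by the link budget. Transmitter power, the gain of the ground station’s antenna (which grows with dish size), and the slant range between satellite and station all matter, so larger antennas and shorter distances support faster downlinks.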

- Data Reception and Archiving: Ground stations receive and record the data. The data is then archived for future use. Often, data is initially stored in proprietary formats and later converted to standard formats for easier access and processing.

Imagine it like taking a really high-resolution photograph of the Earth: you need to carefully plan your shot (mission planning), take the picture (data acquisition), potentially do some basic adjustments on the camera itself (onboard processing), and then get the developed picture to your computer (downlink and data reception).
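The downlink point about antenna size and distance can be made concrete with two standard link-budget terms. The first is free-space path loss, which grows with slant range. The second is the gain of a parabolic ground antenna, which grows with dish diameter. This is a minimal sketch. The 8.2 GHz X-band frequency, the 0.6 aperture efficiency, and the example distances and dish sizes are illustrative assumptions, not parameters of any particular mission.

```python
import math

# Illustrative sketch; function names are hypothetical, not a library API.
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def free_space_path_loss_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss, FSPL = (4 * pi * d * f / c)^2, expressed in dB."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


def dish_gain_dbi(diameter_m: float, freq_hz: float, efficiency: float = 0.6) -> float:
    """Gain of a parabolic dish, G = efficiency * (pi * D / wavelength)^2, in dBi.

    The default efficiency of 0.6 is an assumed, typical-order value.
    """
    wavelength = SPEED_OF_LIGHT / freq_hz
    return 10.0 * math.log10(efficiency * (math.pi * diameter_m / wavelength) ** 2)


if __name__ == "__main__":
    freq = 8.2e9  # assumed X-band downlink frequency
    for slant_range_km in (1000, 2000):
        loss = free_space_path_loss_db(slant_range_km * 1e3, freq)
        print(f"slant range {slant_range_km} km: path loss {loss:.1f} dB")
    for dish_m in (3.0, 6.0):
        print(f"{dish_m:.0f} m dish: gain {dish_gain_dbi(dish_m, freq):.1f} dBi")
```

Doubling the slant range costs about 6 dB of received signal, and doubling the dish diameter recovers about 6 dB. A higher received signal-to-noise ratio lets the link use a faster modulation and coding scheme, which is why antenna size and distance translate into downlink speed.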

Q 3. What are the common geometric distortions in satellite imagery, and how are they corrected?

Geometric distortions in satellite imagery arise from various factors related to the satellite’s position, the Earth’s curvature, and sensor characteristics. These distortions need correction for accurate geographic referencing and analysis.

- Earth Curvature: The Earth’s surface is curved (closely approximated by an ellipsoid), so representing it in a flat image causes distortion that grows toward the edges of wide-swath images. This is addressed with geometric corrections based on a reference ellipsoid such as WGS84.

- Platform Instability: Variations in the satellite’s attitude (roll, pitch, and yaw) and altitude introduce distortions. These are corrected using attitude data from star trackers and the onboard inertial measurement unit (IMU), together with position data from onboard GNSS receivers.

- Sensor Characteristics: Internal distortions within the sensor itself, such as lens distortions or detector misalignments, can create systematic errors. These are corrected using calibration parameters provided by the sensor manufacturer.

- Relief Displacement: Elevated terrain and tall objects are displaced radially away from the nadir point. The displacement grows with their height and with the off-nadir viewing angle, so it is particularly noticeable in mountainous areas. Correcting it requires a digital elevation model (DEM).

Geometric correction involves transforming the image coordinates to a map coordinate system (e.g., a UTM projection, or latitude/longitude on the WGS84 datum) using various methods, including:

- Orthorectification: Uses a sensor model and a DEM to remove relief displacement along with the other distortions, producing an image with a uniform map scale. It is considered best practice for quantitative work, especially in rugged terrain.

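- Polynomial Transformation and Rubber Sheeting: These methods use ground control points (GCPs) to adjust the image geometry. GCPs are locations with known coordinates in both the image and a map, identified visually or by automated matching. The simplest case is a first-order (affine) polynomial, X = a0 + a1·col + a2·row (and likewise for Y), which needs at least three GCPs. "Rubber sheeting" usually refers to piecewise warps (for example, over a triangulation of the GCPs) that can follow local distortions. Because these methods ignore terrain height, they cannot fully correct relief displacement.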

The accuracy of the correction depends on the availability and quality of reference data, like DEMs and GCPs.
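Fitting a first-order polynomial to GCPs is an ordinary least-squares problem. The same fit also yields the RMSE that is commonly used to report geometric accuracy. The sketch below is a minimal pure-Python illustration under the assumption that GCPs are given as ((col, row), (X, Y)) pairs. The function names are hypothetical. Production work would normally use a library such as GDAL.

```python
import math

# Illustrative sketch; function names are hypothetical, not a library API.


def _solve(matrix, rhs):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def fit_affine(gcps):
    """Least-squares first-order polynomial from GCPs.

    gcps: list of ((col, row), (X, Y)) pairs, at least 3 and not collinear.
    Returns (ax, ay) with X = ax[0] + ax[1]*col + ax[2]*row, likewise for Y.
    """
    ata = [[0.0] * 3 for _ in range(3)]  # normal-equation matrix A^T A
    atx, aty = [0.0] * 3, [0.0] * 3      # right-hand sides A^T X and A^T Y
    for (col, row), (x, y) in gcps:
        basis = (1.0, col, row)
        for i in range(3):
            atx[i] += basis[i] * x
            aty[i] += basis[i] * y
            for j in range(3):
                ata[i][j] += basis[i] * basis[j]
    return _solve(ata, atx), _solve(ata, aty)


def apply_affine(coeffs, col, row):
    """Map image (col, row) to map (X, Y) with fitted coefficients."""
    ax, ay = coeffs
    return (ax[0] + ax[1] * col + ax[2] * row,
            ay[0] + ay[1] * col + ay[2] * row)


def rmse(coeffs, points):
    """Root mean square planar error of the fitted transform at the given points."""
    total = 0.0
    for (col, row), (x, y) in points:
        px, py = apply_affine(coeffs, col, row)
        total += (px - x) ** 2 + (py - y) ** 2
    return math.sqrt(total / len(points))
```

In practice, the RMSE should be computed at independent checkpoints that were not used in the fit. RMSE measured on the fitting GCPs alone gives an optimistic picture of accuracy.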

Q 4. Explain the concept of atmospheric correction in satellite image preprocessing.

Atmospheric correction is crucial in satellite image preprocessing because sunlight passes through the atmosphere twice. It crosses once on its way down to the surface and again, after reflection, on its way up to the sensor. Both passes alter the spectral signature of the signal, which leads to inaccurate interpretations if left uncorrected.

The atmosphere absorbs and scatters light, and the extent of this depends on factors like atmospheric pressure, water vapor content, aerosol concentration, and the wavelength of light.

Atmospheric correction aims to remove or reduce these atmospheric effects so that pixel values represent surface reflectance. Common methods include:

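- Dark Object Subtraction (DOS): Assumes that the darkest pixels in the image (e.g., deep clear water or dense shadow) should have near-zero reflectance. Any signal recorded there is attributed to atmospheric scattering (path radiance) and subtracted from the whole band. This method is simple and needs only the image itself, but it corrects only this additive haze component and ignores absorption.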

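- Empirical Line Method: Uses field- or laboratory-measured reflectance of at least one bright and one dark calibration target in the scene. These measurements are used to fit a linear relationship (gain and offset) between image values and surface reflectance for each band. The method requires suitable, well-characterized targets and assumes that atmospheric conditions are uniform across the scene.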

- Radiative Transfer Models (RTMs): These physics-based models simulate how the atmosphere scatters and absorbs light under given conditions. Models like MODTRAN and 6S are commonly used. They require inputs such as aerosol optical thickness and water vapor content, which may come from ground instruments, other atmospheric sensors, or the image itself. They are more computationally intensive but offer higher accuracy.

Without atmospheric correction, analysis of satellite imagery can produce inaccurate results, especially for vegetation indices or land surface temperature. For instance, haze scatters more light at shorter wavelengths, so it inflates red-band values more than near-infrared values and depresses vegetation indices such as NDVI. Clouds, by contrast, cannot be corrected this way and are normally masked out.
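Dark Object Subtraction is simple enough to sketch in a few lines. The sketch below is minimal and assumes each band is a list of rows of at-sensor radiance or reflectance values. It estimates the haze value from the darkest pixels and subtracts it. The optional percentile (instead of the absolute minimum) is an assumed variant meant to reduce sensitivity to noisy pixels. An NDVI helper is included so the effect of the correction on a vegetation index can be checked. All function names are hypothetical.

```python
# Illustrative sketch; function names are hypothetical, not a library API.


def dark_object_subtraction(band, percentile=0.0):
    """Subtract an estimated haze value from every pixel of one band.

    The haze value is taken from the darkest pixels: the minimum by default,
    or a low percentile (e.g. 0.1) to reduce sensitivity to noisy pixels.
    Results are clipped at zero. Returns (corrected_band, haze_value).
    """
    values = sorted(v for row in band for v in row)
    index = round(percentile / 100.0 * (len(values) - 1))
    haze = values[index]
    corrected = [[max(v - haze, 0.0) for v in row] for row in band]
    return corrected, haze


def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red), with None where both bands are zero."""
    return [[(n - r) / (n + r) if (n + r) else None for n, r in zip(nir_row, red_row)]
            for nir_row, red_row in zip(nir, red)]


if __name__ == "__main__":
    # Hypothetical reflectance-like values; the first pixel is a dark water body.
    red = [[0.04, 0.09], [0.12, 0.30]]
    nir = [[0.02, 0.45], [0.30, 0.35]]
    red_c, _ = dark_object_subtraction(red)
    nir_c, _ = dark_object_subtraction(nir)
    print("NDVI before:", ndvi(nir, red))
    print("NDVI after: ", ndvi(nir_c, red_c))
```

In this toy example, the NDVI of the vegetated pixel rises after correction. This is consistent with haze adding more to the red band than to the near-infrared band.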

Q 5. Discuss different radiometric corrections applied to satellite imagery.

Radiometric correction addresses variations in the brightness values of satellite imagery caused by sensor noise, atmospheric effects (partially addressed in atmospheric correction), and variations in illumination. The goal is to ensure that the pixel values accurately represent the reflectance or radiance of the Earth’s surface.

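- Sensor Calibration: This corrects for sensor-specific biases and inconsistencies using calibration coefficients (gains and offsets) supplied with the data, typically in the image metadata. It converts Digital Numbers (DNs) to at-sensor radiance, which can then be converted to top-of-atmosphere reflectance. Obtaining surface reflectance additionally requires atmospheric correction.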

- Noise Reduction: Digital filters (e.g., median filter, Gaussian filter) can reduce random noise in the imagery. These techniques work by smoothing the data, so they trade some spatial detail for lower noise. Median filters are particularly effective against isolated "salt-and-pepper" errors.

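- Normalization: This involves adjusting pixel values to a common scale to facilitate comparisons between images or sensors. One approach is simple rescaling (for example, to the range 0 to 1). For multi-date work, another is relative radiometric normalization, which adjusts one image to match a reference image using pseudo-invariant features, such as rooftops or deep water, that are assumed not to have changed between dates.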

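- Illumination Correction: This corrects for variations in solar illumination caused by terrain (slopes facing toward or away from the sun) and by differences in sun angle between acquisitions. The cosine correction, for example, scales each pixel by the ratio of the cosine of the solar zenith angle to the cosine of the local solar incidence angle computed from a DEM.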

Proper radiometric correction ensures that the analysis is based on consistent and reliable data. For example, comparing vegetation reflectance between images acquired in different seasons requires careful radiometric correction, because differences in sun elevation alone change the measured brightness.
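Two of these corrections can be written down directly. The DN-to-reflectance step below follows the linear rescaling used for Landsat 8 Level-1 products, where the multiplicative and additive coefficients and the sun elevation are read from the scene metadata. The coefficient values used in the test (2.0e-05 and -0.1) are of the form found in those files, but they should be checked against the actual metadata. The cosine correction assumes slope and aspect have been derived from a DEM. This is a sketch, and the function names are hypothetical.

```python
import math

# Illustrative sketch; function names are hypothetical, not a library API.


def toa_reflectance(dn, mult, add, sun_elevation_deg):
    """Convert a quantized DN to top-of-atmosphere reflectance.

    rho' = mult * DN + add            (rescaling coefficients from metadata)
    rho  = rho' / sin(sun elevation)  (correction for the solar angle)
    """
    return (mult * dn + add) / math.sin(math.radians(sun_elevation_deg))


def cosine_topographic_correction(value, sun_zenith_deg, sun_azimuth_deg,
                                  slope_deg, aspect_deg):
    """Cosine correction: value * cos(sun zenith) / cos(local incidence angle).

    cos(i) = cos(z)cos(s) + sin(z)sin(s)cos(sun azimuth - aspect),
    where z is the solar zenith angle and s the terrain slope.
    """
    z = math.radians(sun_zenith_deg)
    s = math.radians(slope_deg)
    rel_az = math.radians(sun_azimuth_deg - aspect_deg)
    cos_i = math.cos(z) * math.cos(s) + math.sin(z) * math.sin(s) * math.cos(rel_az)
    if cos_i <= 0:
        return None  # slope is in self-shadow; the correction is undefined
    return value * math.cos(z) / cos_i
```

On flat ground the cosine correction leaves values unchanged. Slopes facing the sun are darkened, and slopes facing away are brightened. The simple cosine form tends to over-correct weakly illuminated slopes, which is why variants such as the C-correction are also used.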

Q 6. What are the advantages and disadvantages of using different image formats (e.g., GeoTIFF, JPEG)?

Satellite imagery can be stored in various formats, each with its own advantages and disadvantages.

- GeoTIFF: A Tagged Image File Format (TIFF) file with embedded geospatial metadata. The image is therefore directly georeferenced: its location is defined in a coordinate reference system (e.g., UTM on the WGS84 datum). Advantages include direct use in GIS software, support for many bands and high bit depths, and easy integration with geospatial analysis tools. The main disadvantage is larger file sizes than lossy formats, although lossless internal compression such as LZW or DEFLATE reduces this.

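- JPEG: A common image format that uses lossy compression. Advantages include small file sizes and widespread compatibility. Disadvantages include loss of information due to compression, which makes it unsuitable for quantitative analysis where precise pixel values are essential. Standard JPEG is also typically limited to 8 bits per channel and to grayscale or three-band color. It does not contain geographic metadata itself, so georeferencing has to be supplied separately, for example in a sidecar world file.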

The choice depends on the application. GeoTIFF is preferred for applications requiring accurate georeferencing and precise pixel values, such as change detection or land cover classification. JPEG is suitable for applications where visual representation is the primary concern and a loss of some detail is acceptable, like quick viewing or web mapping. Other formats like HDF (Hierarchical Data Format) are used to store large volumes of data from satellite sensors, particularly those with many bands.
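When a JPEG, or any image without embedded georeferencing, has to be placed on a map, a common workaround is a plain-text sidecar "world file" (for example, .jgw for a JPEG). The world file holds the six coefficients of an affine pixel-to-map transform. The sketch below parses one and converts zero-based pixel indices to map coordinates. The function names and the example values are hypothetical. A world file carries no coordinate reference system, so that must be recorded separately.

```python
# Illustrative sketch; function names are hypothetical, not a library API.


def read_world_file(text):
    """Parse the six lines of a world file (.jgw, .tfw, ...) into coefficients.

    Line order: A (pixel size in x), D (rotation term), B (rotation term),
    E (pixel size in y, usually negative), C and F (map x and y of the
    centre of the upper-left pixel). Returned as (A, B, C, D, E, F).
    """
    a, d, b, e, c, f = (float(token) for token in text.split())
    return a, b, c, d, e, f


def pixel_to_map(coeffs, col, row):
    """Map coordinates of the centre of pixel (col, row), zero-based."""
    a, b, c, d, e, f = coeffs
    return a * col + b * row + c, d * col + e * row + f
```

For a north-up image the rotation terms are zero, so the transform reduces to an origin plus a pixel size in each direction.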

Q 7. How do you handle cloud cover in satellite imagery?

Cloud cover is a major challenge in satellite image analysis. Clouds obscure the Earth’s surface, making it difficult to extract information from the affected areas. Several strategies are used to address this:

- Image Selection: The simplest approach is to select cloud-free images for analysis. This might require waiting for suitable acquisition times or selecting images from a different time point in the data series. Data providers typically report an estimated cloud-cover percentage for each scene in its metadata, which helps with this selection.

- Cloud Masking: This involves identifying cloud-covered pixels and excluding them from analysis. Simple thresholding tests exploit the fact that clouds are bright in visible bands and cold in thermal infrared bands. A dedicated cirrus band near 1.375 µm (on Landsat 8 and Sentinel-2, for example) helps detect thin cirrus. More sophisticated algorithms, often based on machine learning, can improve detection accuracy, and many products ship with a ready-made cloud mask or quality band.

- Cloud Filling/Interpolation: This involves estimating the reflectance or radiance values of cloud-covered areas using data from surrounding cloud-free pixels. This is typically done using spatial or temporal interpolation techniques, but carries the inherent risk of introducing biases.

- Data Fusion: Combining data from multiple sources (e.g., using multiple satellite images from different dates, or fusing optical and SAR data) can help minimize the effects of cloud cover. If the cloud cover is partial, one can stitch together data from cloud-free portions.

The best approach depends on the extent of the cloud cover and the specific application. For time-series analysis, combining cloud masking with images from several dates is often crucial for accurate results.
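Cloud masking and multi-date compositing can be combined in a few lines. The sketch below is a minimal illustration under several assumptions. The images are co-registered, each holds a single band (for example, blue reflectance, where clouds are bright), and a fixed brightness threshold marks clouds. That threshold is a hypothetical, scene-dependent choice and a crude stand-in for operational cloud masks. The function names are hypothetical as well.

```python
from statistics import median

# Illustrative sketch; function names are hypothetical, not a library API.


def cloud_mask(band, threshold):
    """Flag pixels brighter than `threshold` as cloud (True = cloud)."""
    return [[value > threshold for value in row] for row in band]


def median_composite(images, threshold):
    """Per-pixel median of the cloud-free observations across dates.

    Pixels that are cloudy on every date are returned as None.
    """
    masks = [cloud_mask(img, threshold) for img in images]
    rows, cols = len(images[0]), len(images[0][0])
    result = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            clear = [img[r][c] for img, m in zip(images, masks) if not m[r][c]]
            if clear:
                result[r][c] = median(clear)
    return result
```

The median is used rather than the mean so that a missed cloud edge or shadow on one date has limited influence on the composite. Pixels returned as None are the ones that would need gap filling or data from another source, such as SAR.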

Q 8. Explain the process of orthorectification.

Orthorectification is a geometric correction process that transforms satellite imagery from its original sensor geometry into an orthographic map projection, as if every point were viewed from directly above. Think of it like straightening a slightly warped photograph to make it perfectly aligned with a map. This process removes geometric distortions caused by terrain relief, sensor viewing angle, and Earth curvature. The result is an image in which ground features are in their correct geographic location and at a uniform scale.

The process typically involves several steps:

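- Sensor Model Acquisition: Obtaining a model that relates ground coordinates to image coordinates. This is either a rigorous physical model built from the satellite’s position, attitude, and sensor geometry, or rational polynomial coefficients (RPCs) supplied with the imagery.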

- Digital Elevation Model (DEM) Acquisition: Using a DEM, a 3D representation of the Earth’s surface, to account for terrain variations.

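- Geometric Correction: For each cell of the output map grid, the ground position and its DEM elevation are projected through the sensor model to find the corresponding location in the original image. Because the DEM height enters this calculation, relief displacement is removed, which a simple polynomial transformation cannot do.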

- Resampling: Assigning pixel values to the corrected image grid. Different resampling methods (like nearest neighbor, bilinear, or cubic convolution) can be applied, each with trade-offs in accuracy and computational cost.

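- Image Registration: Verifying the alignment of the orthorectified image with reference data in the target coordinate system, and refining it with GCPs if needed. Positional accuracy is usually reported at independent checkpoints.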

For example, orthorectifying a Landsat image over a mountainous region ensures that roads, rivers, and field boundaries appear in their correct positions rather than shifted by the terrain. This is critical for accurate measurements, feature extraction, and map production.
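The indirect (output-to-input) logic behind these steps can be sketched with a deliberately simplified, hypothetical sensor model. The model assumes a north-up image with square pixels, in which a ground point at elevation Z is displaced across-track by Z·tan(view angle). For each output map cell, the code looks up the DEM height, projects the point into the image, and samples the nearest pixel. Real workflows use a rigorous or RPC sensor model. The function names here are illustrative only.

```python
import math

# Illustrative sketch; the sensor model and function names are hypothetical.


def make_toy_sensor_model(x0, y0, gsd, view_angle_deg):
    """Return a hypothetical ground-to-image function (X, Y, Z) -> (col, row).

    Assumes the image's upper-left corner is at map (x0, y0), square pixels of
    size `gsd`, and an across-track view angle, so a point at elevation Z
    appears shifted in x by Z * tan(view angle) (sign chosen arbitrarily).
    """
    shift_per_metre = math.tan(math.radians(view_angle_deg))

    def ground_to_image(x, y, z):
        col = (x - x0 + z * shift_per_metre) / gsd
        row = (y0 - y) / gsd
        return col, row

    return ground_to_image


def orthorectify(image, dem, ground_to_image, out_x0, out_y0, out_gsd):
    """Indirect orthorectification onto the DEM's grid, nearest-neighbour sampling.

    `dem` defines the output grid (upper-left corner out_x0, out_y0, cell size
    out_gsd). Cells that project outside the source image are left as None.
    """
    out = [[None] * len(dem[0]) for _ in dem]
    for r, dem_row in enumerate(dem):
        for c, z in enumerate(dem_row):
            # Map coordinates of the centre of output cell (r, c).
            x = out_x0 + (c + 0.5) * out_gsd
            y = out_y0 - (r + 0.5) * out_gsd
            col, row = ground_to_image(x, y, z)
            ci, ri = math.floor(col), math.floor(row)
            if 0 <= ri < len(image) and 0 <= ci < len(image[0]):
                out[r][c] = image[ri][ci]
    return out
```

With a flat DEM (all zeros), the output simply copies the image. Raising the terrain shifts where each map cell is sampled, which is exactly the relief displacement a DEM-based correction accounts for.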

Q 9. What are the different resampling techniques used in image processing, and when would you use each?

Resampling is crucial in satellite image processing whenever you need to change the image’s spatial resolution or project it into a different coordinate system. Imagine you’re resizing a digital photo – resampling determines how pixel values are assigned to create the new image size. Several techniques exist, each with its advantages and disadvantages:

- Nearest Neighbor: The simplest method. It assigns the value of the nearest pixel in the original image. This method is fast and never creates new values, but it can produce a blocky, jagged appearance and positional errors of up to half a pixel. It is the method of choice when original pixel values must be preserved, as in classified (categorical) maps, or when speed is a priority.

- Bilinear Interpolation: Computes a distance-weighted average of the four nearest pixels (a 2 × 2 neighborhood). It is faster than cubic convolution and smoother than nearest neighbor, but it blurs edges, especially in areas with high contrast.

- Cubic Convolution: Uses a weighted average of the sixteen nearest pixels (a 4 × 4 neighborhood). It gives sharper results than bilinear interpolation, with less blurring. However, it is computationally more expensive and can overshoot near sharp edges, producing values outside the original range.

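- Bicubic Interpolation: Often used as another name for cubic convolution. Where software distinguishes the two, "bicubic" usually denotes a different cubic kernel (for example, a cubic spline) over the same 4 × 4 neighborhood, with broadly similar quality and cost.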

The choice of resampling method depends on the specific application. For categorical data such as land-cover maps, nearest neighbor is the appropriate choice, because interpolation would create meaningless intermediate class values. Nearest neighbor is also suitable when speed is critical and minor detail loss is acceptable. For high-quality resampling of continuous imagery, cubic convolution is generally preferred. For applications requiring precise measurements, the selection should be guided by a careful evaluation of the error each method introduces.
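The three main kernels can be compared directly. In this minimal pure-Python sketch, (x, y) are continuous column/row positions with pixel centres at integer coordinates. Edge pixels are repeated beyond the image border. Both conventions are assumptions, since libraries differ. The cubic kernel uses the common parameter a = -0.5, and the function names are hypothetical.

```python
import math

# Illustrative sketch; function names are hypothetical, not a library API.


def _pixel(image, r, c):
    """Read a pixel, clamping indices to the image edge."""
    r = min(max(r, 0), len(image) - 1)
    c = min(max(c, 0), len(image[0]) - 1)
    return image[r][c]


def nearest(image, x, y):
    """Nearest neighbour: copy the value of the closest pixel centre."""
    return _pixel(image, math.floor(y + 0.5), math.floor(x + 0.5))


def bilinear(image, x, y):
    """Distance-weighted average of the surrounding 2 x 2 pixels."""
    c0, r0 = math.floor(x), math.floor(y)
    fx, fy = x - c0, y - r0
    top = (1 - fx) * _pixel(image, r0, c0) + fx * _pixel(image, r0, c0 + 1)
    bottom = (1 - fx) * _pixel(image, r0 + 1, c0) + fx * _pixel(image, r0 + 1, c0 + 1)
    return (1 - fy) * top + fy * bottom


def _cubic_weight(t, a=-0.5):
    """Cubic convolution kernel (Keys); a = -0.5 is a common choice."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t**3 - (a + 3) * t**2 + 1
    if t < 2:
        return a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return 0.0


def cubic_convolution(image, x, y):
    """Weighted sum over the surrounding 4 x 4 (16) pixels."""
    c0, r0 = math.floor(x), math.floor(y)
    fx, fy = x - c0, y - r0
    total = 0.0
    for j in range(-1, 3):
        wy = _cubic_weight(fy - j)
        for i in range(-1, 3):
            total += wy * _cubic_weight(fx - i) * _pixel(image, r0 + j, c0 + i)
    return total
```

Sampling a step edge such as [0, 0, 100, 100] just before the jump with cubic convolution returns a small negative value, which lies outside the range of the input data. Bilinear interpolation stays within the range of its neighbours, and nearest neighbour only ever returns existing values.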

Q 10. Describe your experience with different image processing software (e.g., ENVI, ERDAS IMAGINE, QGIS).

My experience spans several leading image processing software packages. I’ve extensively used ENVI for advanced image analysis tasks, such as atmospheric correction, spectral unmixing, and classification. ENVI’s powerful tools and extensive libraries are particularly well-suited for complex research projects. ERDAS IMAGINE has been valuable for its robust orthorectification and mosaicking of large datasets, and its interface simplifies work with massive imagery. Finally, QGIS provides a free, open-source alternative for visualization, basic image processing, and geospatial analysis. In a recent project involving land-use change detection, I leveraged ENVI for atmospheric correction and classification, ERDAS IMAGINE for orthorectification and mosaicking, and QGIS for creating maps and visual presentations.

Q 11. How do you assess the quality of satellite imagery?

Assessing satellite imagery quality involves evaluating several factors that can affect the reliability and accuracy of the data. This includes:

- Spatial Resolution: How fine the detail is; higher resolution means better detail.

- Spectral Resolution: The number and width of spectral bands; more bands allow for more detailed spectral analysis.

- Radiometric Resolution: The number of bits used to represent the brightness value of a pixel; higher bit depth (e.g., 16-bit) provides better sensitivity to subtle variations in reflectance.

- Geometric Accuracy: How well the image aligns with geographic coordinates. This is evaluated using the root mean square error (RMSE) at independent checkpoints.

- Atmospheric Effects: Presence of haze, clouds, or atmospheric scattering can significantly impact image quality. Atmospheric correction techniques can help mitigate these.

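- Sensor Degradation: Aging detectors and optics may introduce artifacts such as striping or dead pixels, or gradual radiometric drift. Hardware failures can also cause systematic gaps. For example, Landsat 7 images acquired after the 2003 failure of its Scan Line Corrector contain wedge-shaped data gaps.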

Often, I use image statistics (mean, standard deviation, histogram) and visual inspection to assess basic quality. For rigorous evaluation, I’d employ quantitative metrics like RMSE for geometric accuracy and compare the imagery against ground truth data or reference imagery.
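The statistics-based checks mentioned above are easy to script. The sketch below assumes a band held as a list of rows, plus an optional no-data value and saturation level. Both are hypothetical parameters whose correct values depend on the product. The function reports the fraction of missing and saturated pixels along with the mean, standard deviation, range, and a coarse equal-width histogram. The function name is hypothetical.

```python
from statistics import mean, pstdev

# Illustrative sketch; function name is hypothetical, not a library API.


def band_quality_report(band, nodata=None, saturation_value=None, bins=4):
    """Basic quality statistics for one band (a list of rows of numbers).

    nodata: value marking missing pixels (excluded from the statistics).
    saturation_value: value at which the sensor saturates, e.g. the maximum DN.
    """
    values = [v for row in band for v in row]
    valid = [v for v in values if v != nodata]
    report = {
        "pixels": len(values),
        "nodata_fraction": 1 - len(valid) / len(values),
        "mean": mean(valid),
        "std": pstdev(valid),
        "min": min(valid),
        "max": max(valid),
    }
    if saturation_value is not None:
        report["saturated_fraction"] = sum(v >= saturation_value for v in valid) / len(valid)
    # Equal-width histogram over the valid range (width 1 if all values are equal).
    lo, hi = report["min"], report["max"]
    width = (hi - lo) / bins or 1
    counts = [0] * bins
    for v in valid:
        counts[min(int((v - lo) / width), bins - 1)] += 1
    report["histogram"] = counts
    return report
```

A high saturated fraction or a histogram piled against one end of the range is a quick signal that a scene needs closer inspection before quantitative use.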

Q 12. Explain the concept of spatial resolution and spectral resolution in satellite imagery.

Spatial and spectral resolution are fundamental characteristics of satellite imagery that greatly influence its application. Consider taking photos with a camera. Spatial resolution is akin to how much detail the camera can resolve, which determines image sharpness. Spectral resolution is akin to how finely the camera separates colors, that is, the number and width of the wavelength bands its sensor records.

Spatial Resolution: This refers to the size of a single pixel on the ground. A higher spatial resolution (e.g., 0.5-meter) means that each pixel represents a smaller area on the Earth’s surface, resulting in a sharper image with more detail. Lower spatial resolution (e.g., 30-meter) means each pixel covers a larger area and details are less clear.

Spectral Resolution: This describes the number and width of spectral bands the sensor records. Each band represents a specific range of wavelengths in the electromagnetic spectrum (e.g., visible, near-infrared, thermal infrared). A higher spectral resolution (more numerous, narrower bands) provides more detailed information about the spectral characteristics of objects, allowing for better discrimination between different land cover types. For example, a hyperspectral sensor provides very fine spectral resolution, useful in identifying minerals or vegetation types.
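The practical cost of finer resolution is easy to quantify. Halving the pixel size quadruples the pixel count, and every extra band multiplies the data volume again. The quick sketch below assumes an uncompressed, square area. All parameters are illustrative, and the function names are hypothetical.

```python
import math

# Illustrative sketch; function names are hypothetical, not a library API.


def pixel_count(extent_m, pixel_size_m):
    """Pixels needed to cover a square area `extent_m` on a side."""
    per_side = math.ceil(extent_m / pixel_size_m)
    return per_side * per_side


def data_volume_bytes(extent_m, pixel_size_m, bands, bits_per_sample):
    """Uncompressed size of a square scene (samples rounded up to whole bytes)."""
    return pixel_count(extent_m, pixel_size_m) * bands * math.ceil(bits_per_sample / 8)
```

For a 10 km × 10 km area, 30 m pixels give about 112 thousand pixels per band, while 0.5 m pixels give 400 million.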

Q 13. What are the different types of satellite data projections, and how do you choose the appropriate one?

Map projections define how the curved surface of the Earth is represented on a 2D plane. Every projection distorts some combination of area, shape, distance, and direction, so choosing the appropriate one is crucial for accurate analysis. Several are commonly used for satellite data:

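- Geographic (Latitude/Longitude): Not strictly a projection, since the coordinates are angles on the ellipsoid. It is simple and convenient for global datasets. However, distances and areas cannot be measured directly in degrees, because the ground length of a degree of longitude shrinks with latitude (roughly 111 km at the equator, approaching zero at the poles).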

- UTM (Universal Transverse Mercator): Divides the Earth between 80°S and 84°N into 60 zones, each 6° of longitude wide, keeping distortion small within each zone. It is well suited to local and regional studies that fit within one zone.

- Albers Equal-Area Conic: Preserves area. It is suited to small-scale mapping of large mid-latitude regions with a wide east-west extent, such as continents or large countries.

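- Plate Carrée (Equirectangular): Maps longitude and latitude directly to x and y. It is simple, but distortion grows severe toward the poles. Unprojected latitude/longitude data are usually displayed this way.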

The selection of the appropriate projection depends heavily on the study area’s extent and the specific objectives. For a small, localized area, UTM is usually a good choice because it keeps distortion low. For a large area, like a continent, Albers Equal-Area Conic is more suitable if area preservation is a high priority. Geographic coordinates are fine for storing and exchanging data, but they should be avoided for distance and area calculations unless those calculations account for the Earth’s curvature.
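For the common case of choosing a UTM zone, the zone number follows directly from longitude. The EPSG codes for WGS 84 / UTM follow a simple pattern: 326xx for the northern hemisphere and 327xx for the southern. The sketch also shows why degrees are a poor unit for measurement, since the ground length of a degree of longitude shrinks with the cosine of latitude. It uses a spherical Earth and ignores the special zone exceptions around Norway and Svalbard. Both are simplifying assumptions, and the function names are hypothetical.

```python
import math

# Illustrative sketch; function names are hypothetical, not a library API.
EARTH_MEAN_RADIUS_M = 6_371_008.8  # spherical approximation


def utm_zone(lon_deg):
    """Standard 6-degree UTM zone number (1-60) for a longitude.

    Ignores the special zone exceptions around Norway and Svalbard.
    """
    zone = int(math.floor((lon_deg + 180.0) / 6.0)) + 1
    return min(max(zone, 1), 60)


def utm_epsg(lat_deg, lon_deg):
    """EPSG code of the WGS 84 / UTM zone: 326xx north, 327xx south."""
    return (32600 if lat_deg >= 0 else 32700) + utm_zone(lon_deg)


def km_per_degree_longitude(lat_deg):
    """Ground length of one degree of longitude on a sphere, in km."""
    return 2 * math.pi * EARTH_MEAN_RADIUS_M * math.cos(math.radians(lat_deg)) / 360 / 1000
```

At 60° latitude a degree of longitude covers only half the ground distance it does at the equator. An area computed in square degrees would therefore be badly biased for a study area that spans a wide range of latitudes.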

Q 14. How do you perform image classification using satellite data?

Image classification uses satellite data to categorize pixels into different classes representing land cover types (e.g., forest, water, urban). Several approaches exist:

- Supervised Classification: Requires training data – sample pixels with known classes. Algorithms like Maximum Likelihood, Support Vector Machines (SVM), and Random Forest learn from this training data to classify the remaining pixels.

- Unsupervised Classification: Doesn’t require training data. Algorithms like K-means clustering group pixels based on their spectral similarity. The resulting classes need to be interpreted by the analyst.

- Object-Based Image Analysis (OBIA): Groups pixels into meaningful objects (segments) based on spectral and spatial properties before classification. This approach can improve classification accuracy, especially in heterogeneous areas.

The choice of classification method depends on the data availability and the level of detail required. Supervised classification generally provides more accurate results but requires significant effort in preparing training data. Unsupervised classification is faster but may require more manual interpretation. OBIA is more computationally intensive but can yield better results for complex landscapes. In a project involving mapping agricultural land, I successfully used a supervised classification approach (Random Forest) with Landsat data to identify different crop types, achieving a high classification accuracy.

Q 15. Explain the difference between supervised and unsupervised classification.

Supervised and unsupervised classification are two fundamental approaches in satellite image analysis used to categorize pixels based on their spectral characteristics. Think of it like sorting a pile of colorful rocks: supervised is like having a labeled sample of each type of rock (e.g., ‘red granite’, ‘grey basalt’) to train your sorting system, while unsupervised is like letting the system figure out the rock types on its own by identifying clusters based on color and texture.

- Supervised Classification: This method requires labeled training data. We provide the algorithm with samples of each land cover class (e.g., forest, water, urban) and their corresponding spectral signatures. The algorithm then learns the relationship between spectral values and class labels and uses this knowledge to classify the entire image. Common algorithms include Maximum Likelihood Classification (MLC) and Support Vector Machines (SVM).

- Unsupervised Classification: This approach does not require labeled data. The algorithm automatically groups pixels with similar spectral characteristics into clusters. The analyst then interprets these clusters based on their spectral characteristics and spatial context to assign land cover classes. K-means clustering is a popular unsupervised technique.

The choice between supervised and unsupervised classification depends on the availability of training data and the research objectives. If accurate labeled data is available, supervised classification is generally preferred for higher accuracy. If labeled data is scarce or unavailable, unsupervised classification provides a valuable exploratory tool.
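K-means, the unsupervised method described above, alternates two steps. First, each pixel is assigned to its nearest cluster centre. Second, each centre is moved to the mean of its pixels. The sketch below is minimal and pure Python. Its simplifying choices are pixel spectra as tuples of band values, randomly chosen initial centres, and a fixed number of iterations. The function name is hypothetical. The output is cluster numbers, not land-cover names, which is why the analyst’s interpretation step remains necessary.

```python
import random

# Illustrative sketch; function name is hypothetical, not a library API.


def kmeans(pixels, k, iterations=20, seed=0):
    """Cluster pixel spectra (tuples of band values) into k groups.

    Returns (centroids, labels). Initial centroids are k randomly chosen pixels.
    """
    rng = random.Random(seed)
    centroids = rng.sample(pixels, k)
    labels = [0] * len(pixels)
    for _ in range(iterations):
        # Assignment step: nearest centroid by squared Euclidean distance.
        for i, p in enumerate(pixels):
            labels[i] = min(range(k), key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centroids[j])))
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(pixels, labels) if lab == j]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[j] = tuple(sum(band) / len(members) for band in zip(*members))
    return centroids, labels
```

In practice, k is usually set higher than the number of expected land-cover classes. The analyst then merges clusters that correspond to the same class.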


Q 16. Describe your experience with different classification algorithms (e.g., Maximum Likelihood, Support Vector Machines).

I have extensive experience with various classification algorithms, particularly MLC and SVM.

- Maximum Likelihood Classification (MLC): This is a widely used supervised technique that assumes the spectral values of each class follow a multivariate normal distribution. MLC estimates each class’s mean vector and covariance matrix from the training data. It then calculates the likelihood of each pixel belonging to each class and assigns the pixel to the class with the highest likelihood, optionally weighted by prior probabilities. I’ve used MLC successfully in projects mapping agricultural land use, where the distinct spectral signatures of different crops allow for accurate classification.

- Support Vector Machines (SVM): SVMs are powerful algorithms capable of handling complex relationships between spectral values and class labels. They find the hyperplane that separates the classes with the maximum margin in feature space, and kernel functions let them draw non-linear boundaries. I’ve employed SVMs in classifying highly heterogeneous urban areas, where the spectral signatures can be quite mixed and challenging to separate using simpler methods. The ability of SVMs to handle high dimensionality and non-linearity is key here.

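In addition to these, I am familiar with other techniques such as Random Forest, an ensemble of decision trees trained on bootstrap samples of the data. It handles large datasets and many input features well and is much less prone to overfitting than a single decision tree.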
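The maximum likelihood rule described above can be sketched in pure Python. The sketch assumes equal prior probabilities for all classes. It also assumes at least (bands + 1) non-degenerate training pixels per class, so that each covariance matrix is invertible. Each class gets a multivariate normal model, and a pixel goes to the class with the highest log-likelihood, computed up to a constant shared by all classes. The function names and the example training data in the test are hypothetical.

```python
import math

# Illustrative sketch; function names are hypothetical, not a library API.


def _mean_cov(samples):
    """Sample mean vector and covariance matrix of a list of spectra."""
    n, d = len(samples), len(samples[0])
    mu = [sum(s[i] for s in samples) / n for i in range(d)]
    cov = [[sum((s[i] - mu[i]) * (s[j] - mu[j]) for s in samples) / (n - 1)
            for j in range(d)] for i in range(d)]
    return mu, cov


def _solve_logdet(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination; also return log|det(matrix)|."""
    d = len(rhs)
    m = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    logdet = 0.0
    for col in range(d):
        piv = max(range(col, d), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        logdet += math.log(abs(m[col][col]))
        for r in range(col + 1, d):
            f = m[r][col] / m[col][col]
            for c in range(col, d + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * d
    for r in range(d - 1, -1, -1):
        x[r] = (m[r][d] - sum(m[r][c] * x[c] for c in range(r + 1, d))) / m[r][r]
    return x, logdet


def train_mlc(training):
    """training: {class_name: [spectrum, ...]} -> {class_name: (mean, covariance)}."""
    return {name: _mean_cov(samples) for name, samples in training.items()}


def classify_mlc(model, pixel):
    """Return the class with the highest Gaussian log-likelihood (equal priors)."""
    def log_likelihood(mu, cov):
        diff = [p - m for p, m in zip(pixel, mu)]
        sol, logdet = _solve_logdet(cov, diff)
        mahalanobis_sq = sum(a * b for a, b in zip(diff, sol))
        return -0.5 * (logdet + mahalanobis_sq)
    return max(model, key=lambda name: log_likelihood(*model[name]))
```

The covariance term is what distinguishes MLC from simpler distance-based rules. A class with a tight spectral distribution claims only pixels very close to its mean, while a variable class accepts a wider spread.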

Q 17. How do you evaluate the accuracy of a classification result?

Evaluating the accuracy of a classification result is crucial for ensuring reliability. Several metrics are used, often in combination, to provide a comprehensive assessment.

- Error Matrix (Confusion Matrix): This matrix cross-tabulates the classified labels against reference labels for a sample of validation pixels, showing correct and incorrect assignments for each class. From it we calculate three measures:
  - Overall accuracy.
  - Producer’s accuracy: the proportion of reference pixels of a class that were correctly classified (the complement of omission error).
  - User’s accuracy: the proportion of pixels mapped as a class that truly belong to it (the complement of commission error).

  A high overall accuracy is a good indicator, but examining the individual class accuracies is important to identify potential weaknesses.

- Kappa Coefficient: This statistic measures the agreement between the classification result and the reference data, accounting for the possibility of chance agreement. A Kappa value close to 1 indicates strong agreement, while a value close to 0 suggests agreement is no better than random.

- Visual Inspection: While quantitative metrics are vital, visual inspection of the classified image alongside the reference data is equally important. This allows for identification of systematic errors or areas where the classification performed poorly.

The choice of evaluation metrics depends on the specific application and the type of reference data available. In many cases, a combination of quantitative metrics and visual inspection provides the most comprehensive assessment.
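The error-matrix statistics above reduce to a few sums. This sketch assumes two equal-length lists of class labels for the same validation pixels, one from the reference data and one from the map. It uses the convention of map classes in rows and reference classes in columns. Conventions vary between software packages, so it is worth checking which one a tool uses before comparing numbers. The function name is hypothetical.

```python
# Illustrative sketch; function name is hypothetical, not a library API.


def accuracy_report(reference, predicted):
    """Error matrix, overall/producer's/user's accuracy and Cohen's kappa.

    Matrix rows are map (predicted) classes; columns are reference classes.
    """
    classes = sorted(set(reference) | set(predicted))
    index = {name: i for i, name in enumerate(classes)}
    k = len(classes)
    matrix = [[0] * k for _ in range(k)]
    for ref, pred in zip(reference, predicted):
        matrix[index[pred]][index[ref]] += 1
    n = len(reference)
    row_totals = [sum(row) for row in matrix]                             # mapped as each class
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]  # reference per class
    diagonal = [matrix[i][i] for i in range(k)]
    overall = sum(diagonal) / n
    producers = {c: (diagonal[i] / col_totals[i] if col_totals[i] else None)
                 for c, i in index.items()}
    users = {c: (diagonal[i] / row_totals[i] if row_totals[i] else None)
             for c, i in index.items()}
    # Chance agreement from the row and column marginals.
    expected = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2
    kappa = (overall - expected) / (1 - expected) if expected < 1 else None
    return {"classes": classes, "matrix": matrix, "overall": overall,
            "producers": producers, "users": users, "kappa": kappa}
```

Kappa compares the observed overall accuracy with the agreement expected by chance, given how often each class appears in the map and in the reference data.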

Q 18. Explain the concept of change detection using satellite imagery.

Change detection using satellite imagery involves identifying and analyzing differences in land cover or land use over time. Imagine comparing two photos of the same location taken years apart; change detection pinpoints the transformations, such as deforestation, urbanization, or agricultural expansion.

The process typically involves acquiring satellite images from multiple time points, pre-processing them to ensure geometric and radiometric consistency, and then applying a change detection algorithm. Common algorithms include:

- Image differencing: subtracts the pixel values of one image from those of the other. Differences beyond a chosen threshold, often a multiple of the standard deviation of the difference image, indicate change.
- Image ratioing: divides the pixel values of the two images. Ratios far from 1 suggest change.
- Post-classification comparison: classifies each image separately and then compares the class maps. This directly shows the type of change (e.g., forest to urban), although errors from both classifications accumulate in the result.

The accuracy of change detection depends on several factors, including the spatial and temporal resolution of the imagery, the accuracy of the pre-processing steps, and the appropriateness of the chosen algorithm.
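Image differencing with a statistical threshold, plus a band ratio for comparison, can be sketched as follows. The sketch assumes two co-registered, radiometrically consistent single-band images. The threshold of k = 2 standard deviations from the mean difference is an arbitrary choice that is usually tuned per study. The function names are hypothetical.

```python
from statistics import mean, pstdev

# Illustrative sketch; function names are hypothetical, not a library API.


def difference_change_mask(before, after, k=2.0):
    """Flag pixels whose difference departs from the mean difference by more than k std devs."""
    diff = [[a - b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(after, before)]
    flat = [d for row in diff for d in row]
    mu, sigma = mean(flat), pstdev(flat)
    return [[abs(d - mu) > k * sigma for d in row] for row in diff]


def ratio_image(before, after):
    """Band ratio after / before; values far from 1 suggest change (None where before is 0)."""
    return [[(a / b) if b else None for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(after, before)]
```

Subtracting the mean difference first means that a uniform offset between the two dates, such as residual calibration differences, is not flagged as change.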

Q 19. How do you handle large satellite datasets?

Handling large satellite datasets requires a strategic approach that combines efficient data management, optimized processing techniques, and potentially the use of high-performance computing resources.

- Data Storage and Management: Efficient storage solutions are critical. Cloud-based storage services are often preferred for their scalability and cost-effectiveness. Organizing data using a hierarchical structure with clear naming conventions is essential for easy access and retrieval.

- Parallel Processing: Large datasets are often processed in parallel, distributing the workload across multiple processors or machines. GDAL’s support for reading and writing image windows makes tile-based parallel workflows straightforward. Cloud tools such as AWS ParallelCluster can provision high-performance computing clusters on which to run them.

- Data Subsetting and Mosaicking: Processing the entire dataset at once may be computationally infeasible. Subsetting the data into smaller, manageable tiles allows for parallel processing and reduces memory requirements. Once processed, the tiles can be mosaicked back together to form the complete processed dataset.

- Data Compression: Lossless or lossy compression techniques can significantly reduce the storage space required for satellite data. The choice of compression method depends on the acceptable level of data loss and the requirements of downstream applications.

Careful planning and optimization are key to efficiently manage and process large satellite datasets. Choosing the right tools and techniques can significantly reduce processing time and computational costs.
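The tile-based pattern can be sketched with the standard library alone. The sketch assumes a single band held in memory as a list of rows and a per-pixel operation. It splits the image into windows, processes them concurrently, and writes the results back into one mosaic. Real workflows would read each window from disk (for example, with GDAL’s windowed reads). For neighbourhood operations such as filters, they would also add an overlap so tile edges are handled correctly. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

# Illustrative sketch; function names are hypothetical, not a library API.


def tile_windows(height, width, tile):
    """Yield (row_off, col_off, rows, cols) windows covering the image; edge tiles may be smaller."""
    for r0 in range(0, height, tile):
        for c0 in range(0, width, tile):
            yield r0, c0, min(tile, height - r0), min(tile, width - c0)


def _process_window(image, window, func):
    """Apply a per-pixel function to one window and return it with its placement."""
    r0, c0, h, w = window
    block = [[func(v) for v in row[c0:c0 + w]] for row in image[r0:r0 + h]]
    return window, block


def process_in_tiles(image, tile, func, workers=4):
    """Process an image tile by tile in parallel, then mosaic the results."""
    height, width = len(image), len(image[0])
    out = [[None] * width for _ in range(height)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(_process_window, image, win, func)
                   for win in tile_windows(height, width, tile)]
        for future in futures:
            (r0, c0, h, w), block = future.result()
            for i in range(h):
                out[r0 + i][c0:c0 + w] = block[i]
    return out
```

Threads keep the example portable. For CPU-heavy pure-Python work, swapping in `ProcessPoolExecutor` from the same module is the usual choice, because threads share a single interpreter lock.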

Q 20. Describe your experience with cloud computing platforms (e.g., AWS, Google Cloud) for processing satellite data.

I possess considerable experience leveraging cloud computing platforms like AWS and Google Cloud for processing satellite data. These platforms offer significant advantages in terms of scalability, cost-effectiveness, and access to high-performance computing resources.

- AWS: I have utilized AWS services such as EC2 (for virtual machines), S3 (for data storage), and Lambda (for serverless computing) to process large satellite datasets. The scalability of AWS allows for easy scaling of computing resources based on the demands of the processing tasks. For example, I’ve used EC2 instances with GPUs to accelerate computationally intensive tasks like image classification.

- Google Cloud: Google Cloud’s offerings, including Compute Engine, Cloud Storage, and Dataflow, have also been instrumental in my work. Google Earth Engine is particularly useful for working with large geospatial datasets due to its integrated processing capabilities and vast archive of satellite imagery. I’ve used Google Earth Engine for time-series analysis and change detection projects.

The choice between AWS and Google Cloud often depends on specific project needs and existing infrastructure. Both platforms provide robust solutions for efficient and scalable satellite data processing.

Q 21. What are the ethical considerations related to using satellite data?

Ethical considerations are paramount when working with satellite data. The potential for misuse and the implications for privacy and security necessitate careful consideration.

- Privacy: High-resolution satellite imagery can reveal sensitive details about individuals and their properties. Using this data requires careful adherence to privacy regulations and ethical guidelines, ensuring anonymization or de-identification techniques are employed where necessary.

- Security: Satellite data is a valuable asset, and its security must be protected from unauthorized access or manipulation. Secure data storage and transmission protocols are essential.

- Bias and Fairness: Algorithms used to process satellite data can perpetuate or amplify existing biases, leading to unfair or discriminatory outcomes. Careful consideration of potential biases in the data and algorithms is essential to ensure fairness and equity.

- Transparency and Accountability: The methods used to acquire, process, and interpret satellite data should be transparent and auditable. This ensures accountability and fosters trust in the results.

- Environmental Impact: The use of satellite data can facilitate environmental monitoring and conservation efforts, but we must acknowledge the environmental footprint of the satellites themselves and strive for responsible and sustainable practices.

Ethical considerations are not just an afterthought; they should be integrated throughout the entire process of working with satellite data, from acquisition to dissemination of results.

Q 22. How do you ensure the security and integrity of satellite data?

Ensuring the security and integrity of satellite data is paramount. It involves a multi-layered approach encompassing physical, technical, and procedural safeguards. Think of it like protecting a valuable artwork – you need multiple layers of security to prevent theft or damage.

- Physical Security: This involves securing the data centers housing the satellite data archives, controlling access to the facilities, and employing environmental controls to protect against damage from natural disasters or power outages.

- Technical Security: This includes robust cybersecurity measures such as encryption (both at rest and in transit), access control lists (ACLs) limiting access to authorized personnel, regular security audits, and intrusion detection systems. We often use encryption algorithms like AES-256 to protect the data’s confidentiality; authenticated modes such as AES-GCM also detect tampering, which protects integrity.

- Procedural Security: This focuses on establishing clear data handling protocols, regular backups, version control systems, and a robust chain of custody to track data access and modifications. We maintain detailed logs of all data access and modifications to enable audits and ensure traceability.

- Data Validation and Quality Control: This is crucial for integrity. We employ various techniques, including checksums and data consistency checks, to verify data accuracy and detect any corruption that might have occurred during transmission or storage.
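The checksum verification mentioned above can be sketched with Python's standard `hashlib` module. This assumes the data provider (or your own ingest step) publishes a SHA-256 digest for each file; some archives publish MD5 instead, in which case `hashlib.md5` is the drop-in swap. The function names are illustrative.

```python
import hashlib


def sha256sum(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # Read fixed-size chunks so multi-gigabyte scenes never sit fully in memory
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, expected_hex):
    """Return True if the file's SHA-256 digest matches the published checksum."""
    return sha256sum(path) == expected_hex.strip().lower()
```

Running `verify` right after download and again before processing catches corruption in transit and in storage separately, which helps locate where a problem occurred.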

For example, in one project involving sensitive environmental data, we implemented end-to-end encryption using AES-256, coupled with multi-factor authentication for access to the data repository, significantly reducing the risk of unauthorized access or data breaches.

Q 23. Explain your experience with data visualization and presentation using satellite data.

Data visualization is key to extracting meaningful insights from satellite data. My experience involves using a variety of tools and techniques to present complex information clearly and effectively. I’m proficient in using GIS software like ArcGIS and QGIS, along with programming languages like Python with libraries such as Matplotlib and Seaborn, to create compelling visualizations.
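Before raw band values are handed to Matplotlib, they usually need a contrast stretch, because satellite bands rarely fill the display range. A minimal sketch, assuming NumPy and the common (but arbitrary) 2nd to 98th percentile cut-offs; the function names are illustrative:

```python
import numpy as np


def percentile_stretch(band, low=2, high=98, nodata=None):
    """Linearly stretch a band to 0-255 between its low/high percentiles.

    Pixels equal to `nodata` are ignored when computing the percentiles
    and are set to 0 in the output.
    """
    data = band.astype(np.float64)
    valid = np.ones(band.shape, dtype=bool) if nodata is None else band != nodata
    lo, hi = np.percentile(data[valid], [low, high])
    scaled = (data - lo) / max(hi - lo, np.finfo(float).eps)  # avoid divide-by-zero
    out = (np.clip(scaled, 0, 1) * 255).astype(np.uint8)
    out[~valid] = 0
    return out


def true_color(red, green, blue):
    """Stack stretched bands into a (rows, cols, 3) uint8 array for display."""
    return np.dstack([percentile_stretch(b) for b in (red, green, blue)])
```

`plt.imshow(true_color(red, green, blue))` would then display a natural-color composite, where the three band arrays are whatever your reader returns. Stretching each band independently, as here, can shift colors; a shared stretch across bands is the alternative when color fidelity matters.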

For instance, I once worked on a project analyzing deforestation patterns in the Amazon rainforest. Using Landsat data, I processed the imagery, calculated deforestation rates over time, and then created animated maps showing the progression of deforestation. These visualizations were critical in presenting our findings to stakeholders and influencing policy decisions. I also built interactive dashboards that let users explore the data themselves, which improved the understanding and communication of complex results.

In another project, I used Python to generate interactive 3D visualizations of elevation models derived from satellite altimetry data, providing a powerful way to represent complex terrain data and identify key features.

Q 24. How do you manage and organize metadata associated with satellite imagery?

Metadata management is crucial for finding and using satellite imagery efficiently. Think of metadata as the ‘table of contents’ for your data, providing essential information about its origin, processing, and quality. Effective metadata management makes data discoverable, keeps analyses reproducible, and facilitates collaborative work.

- Standardized Metadata Schemas: I utilize established standards like the ISO 19115 standard for geospatial metadata to ensure consistency and interoperability across different datasets and software platforms.

- Metadata Catalogs: We use dedicated metadata catalogs (e.g., those integrated within GIS platforms or cloud storage services) to store and manage the metadata associated with satellite images. This enables efficient searching and retrieval based on various criteria such as sensor type, acquisition date, geographic location, or processing level.

- Database Management Systems (DBMS): For large-scale projects, a relational database (e.g., PostgreSQL) or NoSQL database can be used to manage metadata efficiently, allowing for advanced querying and analysis of the metadata itself.

- Metadata Validation: Regular validation checks are performed to ensure metadata consistency and completeness, preventing errors and improving data quality.

For example, in a recent project, we used a custom-built PostgreSQL database to manage metadata for hundreds of thousands of satellite images, enabling rapid search and retrieval based on numerous parameters, significantly speeding up our workflow.
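A stripped-down version of such a metadata catalog can be sketched with Python's built-in `sqlite3` module standing in for PostgreSQL. The table layout, column names, and bounding-box footprint are illustrative assumptions. In PostgreSQL, the PostGIS extension would normally replace the four bounding-box columns with a geometry column and a spatial index.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS scenes (
    scene_id  TEXT PRIMARY KEY,
    sensor    TEXT NOT NULL,
    acquired  TEXT NOT NULL,  -- ISO 8601 date, so string comparison orders correctly
    cloud_pct REAL,
    min_lon REAL, min_lat REAL, max_lon REAL, max_lat REAL  -- footprint bounding box
);
CREATE INDEX IF NOT EXISTS idx_sensor_date ON scenes (sensor, acquired);
"""


def open_catalog(path=":memory:"):
    """Open (or create) a catalog database and ensure the schema exists."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def find_scenes(conn, sensor, start, end, lon, lat, max_cloud=100.0):
    """Return IDs of `sensor` scenes acquired in [start, end] whose bounding box
    contains the point (lon, lat) and whose cloud cover is <= max_cloud."""
    rows = conn.execute(
        """SELECT scene_id FROM scenes
           WHERE sensor = ? AND acquired BETWEEN ? AND ?
             AND min_lon <= ? AND max_lon >= ? AND min_lat <= ? AND max_lat >= ?
             AND cloud_pct <= ?
           ORDER BY acquired""",
        (sensor, start, end, lon, lon, lat, lat, max_cloud),
    )
    return [r[0] for r in rows]
```

The composite index on `(sensor, acquired)` matches the most common query pattern (one sensor, one date range). Further indexes would follow whichever criteria your team actually searches on.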

Q 25. Describe your experience with programming languages (e.g., Python, R) for satellite data processing.

Python is my primary programming language for satellite data processing. Its extensive libraries like GDAL, Rasterio, and scikit-image provide powerful tools for handling various geospatial data formats, performing image processing tasks, and analyzing the data. I’m also proficient in using R for statistical analysis and visualization of satellite data, especially for ecological and environmental studies.

Example (Python): The following code snippet demonstrates how to open a GeoTIFF image using Rasterio and access its metadata:

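```python
import rasterio

# Open the GeoTIFF read-only; the file is closed when the block exits
with rasterio.open('image.tif') as src:
    print(src.profile)  # driver, dtype, width, height, count, crs, transform, nodata, ...
```

This code provides access to the metadata (like projection, resolution, number of bands, etc.) embedded within the GeoTIFF file.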

My experience includes writing custom scripts for automating tasks like geometric correction, atmospheric correction, cloud masking, and change detection, significantly improving efficiency and reproducibility. I’ve also integrated these scripts into larger workflows managed through workflow management systems.
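As an example of the cloud-masking scripts mentioned above, the sketch below decodes a bit-packed quality band with NumPy. The bit positions are an assumption that follows the Landsat Collection 2 `QA_PIXEL` layout (bit 0 fill, 1 dilated cloud, 2 cirrus, 3 cloud, 4 cloud shadow). Verify them against the product guide for your sensor and collection before relying on the mask.

```python
import numpy as np

# Assumed bit positions (Landsat Collection 2 QA_PIXEL style); check the product guide
FILL, DILATED_CLOUD, CIRRUS, CLOUD, CLOUD_SHADOW = 0, 1, 2, 3, 4


def cloud_mask(qa, bits=(FILL, DILATED_CLOUD, CIRRUS, CLOUD, CLOUD_SHADOW)):
    """Return a boolean mask that is True where any of the given QA bits is set."""
    flags = sum(1 << b for b in bits)  # combine the chosen bits into one bitmask
    return (np.asarray(qa, dtype=np.uint16) & flags) != 0


def apply_mask(band, mask):
    """Return a float copy of `band` with masked pixels set to NaN."""
    out = band.astype(np.float32)
    out[mask] = np.nan
    return out
```

Passing a narrower `bits` tuple (for example, only `CLOUD`) gives a less conservative mask, which trades more usable pixels for a higher risk of residual cloud contamination.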

Q 26. Explain your understanding of different coordinate reference systems (CRS).

Coordinate Reference Systems (CRS) are fundamental to geospatial data. They define how coordinate values relate to locations on the Earth’s surface. Understanding different CRS is crucial for accurate spatial analysis and data integration. Think of it like using different maps – one might show distances accurately in a local area (like a city map), while another shows the global picture (like a world map), but they represent the same locations differently.

- Geographic Coordinate Systems (GCS): These use latitude and longitude to define locations on the Earth’s surface using a spherical or ellipsoidal model. WGS 84 is a commonly used GCS.

- Projected Coordinate Systems (PCS): These project the spherical or ellipsoidal surface onto a flat plane, introducing distortions depending on the projection method used. Common projections include UTM (Universal Transverse Mercator) and Albers Equal-Area Conic. The choice of projection impacts the accuracy of area, distance, and shape measurements.

- Datum: A datum defines the reference surface (e.g., ellipsoid) and how it is positioned relative to the Earth, which fixes the origin of the coordinate system. Coordinates for the same location can differ between datums by anything from a few centimeters to hundreds of meters.

Understanding the CRS of a dataset is critical before performing any spatial analysis. Incorrect CRS handling can lead to misalignment of datasets and inaccurate results. I routinely use tools in GIS software and programming libraries to reproject data into a consistent CRS for seamless integration and analysis.
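When the target CRS is UTM, the first practical step is picking the zone. A minimal sketch, assuming WGS 84 and the standard 6-degree zones, maps a longitude/latitude to the matching EPSG code: 326xx for northern-hemisphere zones and 327xx for southern ones. It deliberately ignores the Norway and Svalbard zone exceptions. The function name is illustrative.

```python
def utm_epsg(lon, lat):
    """Return the EPSG code of the WGS 84 / UTM zone containing (lon, lat).

    Uses the standard 6-degree zones and ignores the Norway/Svalbard exceptions.
    """
    if not -80.0 <= lat <= 84.0:
        raise ValueError("UTM covers 80 deg S to 84 deg N; use UPS near the poles")
    # Zone 1 starts at 180 deg W; the modulo wraps lon = 180 back into zone 1
    zone = int((lon + 180.0) // 6.0) % 60 + 1
    return (32600 if lat >= 0 else 32700) + zone
```

The resulting code can then be handed to a reprojection tool, for example `pyproj.Transformer.from_crs("EPSG:4326", f"EPSG:{code}", always_xy=True)`, where `always_xy=True` keeps the longitude-first axis order used here.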

Q 27. What are the common challenges encountered in satellite data acquisition and preprocessing?

Satellite data acquisition and preprocessing present numerous challenges. These can range from technical limitations to environmental factors. Think of it as a complex puzzle with many pieces that need to fit together perfectly.

- Data Acquisition Challenges: These include atmospheric effects (clouds, aerosols), sensor limitations (noise, geometric distortions), data gaps due to sensor failures or unfavorable weather conditions, and data volume, especially with high-resolution sensors.

- Preprocessing Challenges: These include geometric correction (aligning images to a common coordinate system), atmospheric correction (removing atmospheric effects), orthorectification (removing relief displacement), radiometric calibration (converting raw digital numbers to physical units such as radiance or reflectance and correcting for sensor variations), cloud masking (identifying and excluding cloud-covered pixels), and handling data inconsistencies across multiple datasets.

For example, cloud cover is a persistent problem affecting the availability of useful cloud-free satellite imagery. We employ techniques like cloud masking and temporal compositing (combining multiple images to create a single cloud-free image) to mitigate this. Also, geometric distortions introduced during image acquisition require sophisticated correction techniques involving ground control points (GCPs) and digital elevation models (DEMs).
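Temporal compositing can be sketched as a per-pixel median over a time stack that skips observations flagged by the cloud mask. This assumes the scenes are already co-registered and radiometrically consistent. The median is one common compositing rule (others pick, for example, the observation with the highest NDVI or the best quality score), chosen here because it tolerates residual clouds and shadows that the mask missed.

```python
import warnings

import numpy as np


def median_composite(stack, cloud_masks):
    """Per-pixel median over time, ignoring cloud-masked observations.

    stack:       (time, rows, cols) array of one band from co-registered scenes
    cloud_masks: boolean array of the same shape, True where a pixel is cloudy
    Returns the composite (NaN where every observation was cloudy) and the
    number of clear observations per pixel.
    """
    data = np.where(cloud_masks, np.nan, stack.astype(np.float64))
    with warnings.catch_warnings():
        # nanmedian warns "All-NaN slice encountered" for always-cloudy pixels
        warnings.simplefilter("ignore", category=RuntimeWarning)
        composite = np.nanmedian(data, axis=0)
    valid_count = (~cloud_masks).sum(axis=0)
    return composite, valid_count
```

Returning `valid_count` alongside the composite makes it easy to flag pixels built from only one or two clear observations, which deserve less trust.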

Q 28. How would you approach troubleshooting a problem with satellite data acquisition or processing?

Troubleshooting satellite data acquisition or processing problems requires a systematic approach. It’s like diagnosing a medical condition: you work methodically from the symptoms to the root cause.

- Identify the Problem: Clearly define the problem. Is it related to data acquisition (e.g., missing data, corrupted files), or preprocessing (e.g., incorrect geometric correction, inaccurate atmospheric correction)?

- Examine the Data: Inspect the raw data and intermediate processing products for anomalies. Check metadata for inconsistencies or errors. Visual inspection of the imagery can often reveal problems.

- Check the Processing Steps: Review the processing workflow for errors or inconsistencies. Ensure that all necessary parameters have been set correctly. Run individual processing steps separately to isolate potential problems.

- Consult Documentation: Refer to the sensor documentation, software manuals, and relevant literature to troubleshoot specific issues. Look for known problems or solutions.

- Seek Expert Advice: If the problem persists, seek assistance from experts or online communities dedicated to satellite data processing. Many forums and resources are available.

- Reproduce the Problem: Try to reproduce the problem in a simpler scenario to isolate the root cause. This can help narrow down the scope of the problem and identify potential solutions.

For example, if I encounter geometric distortions in processed imagery, I’d carefully check the GCPs used for geometric correction, investigate potential errors in the DEM used for orthorectification, and verify the accuracy of the projection parameters. A systematic approach helps pinpoint the exact step where the error occurred and facilitates an effective solution.
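One concrete way to check the GCPs is to fit the transform and inspect each point's residual together with the overall RMSE. A minimal sketch with NumPy, assuming a first-order (affine) polynomial model. This is only one of the models used in practice; orthorectification with a DEM relies on a rigorous or RPC sensor model instead. The function names are illustrative.

```python
import numpy as np


def fit_affine(src_xy, dst_xy):
    """Least-squares affine transform from image (col, row) to map (x, y).

    Returns a 3x2 coefficient matrix A such that [col, row, 1] @ A ~= [x, y].
    """
    src = np.asarray(src_xy, dtype=float)
    design = np.column_stack([src, np.ones(len(src))])
    coeffs, *_ = np.linalg.lstsq(design, np.asarray(dst_xy, dtype=float), rcond=None)
    return coeffs


def gcp_residuals(src_xy, dst_xy, coeffs):
    """Per-GCP residual distance (map units) and overall RMSE of the fit."""
    src = np.asarray(src_xy, dtype=float)
    predicted = np.column_stack([src, np.ones(len(src))]) @ coeffs
    errors = np.linalg.norm(predicted - np.asarray(dst_xy, dtype=float), axis=1)
    rmse = float(np.sqrt(np.mean(errors ** 2)))
    return errors, rmse
```

A GCP whose residual is much larger than the rest is the first candidate for a misplaced or mistyped point. Refitting without it and comparing the RMSE shows whether it was driving the distortion.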

Key Topics to Learn for Satellite Data Acquisition and Preprocessing Interview

- Satellite Orbits and Sensor Characteristics: Understanding different orbital types (e.g., LEO, GEO, MEO) and their impact on data acquisition, along with the specifics of various sensor technologies (e.g., optical, radar, hyperspectral) and their capabilities.

- Data Acquisition Planning and Scheduling: Learn the practical aspects of mission planning, including target selection, data volume estimation, and scheduling to optimize data collection based on factors like weather conditions and sun angles.

- Radiometric and Geometric Correction: Mastering techniques to correct for atmospheric effects, sensor biases, and geometric distortions to ensure accurate and reliable data. This includes understanding concepts like atmospheric correction models and geometric transformations.

- Data Format and File Structures: Familiarity with common satellite data formats (e.g., GeoTIFF, HDF) and their associated metadata is crucial. Understanding how to navigate and interpret these file structures is essential for efficient preprocessing.

- Preprocessing Techniques: Develop a strong understanding of various preprocessing steps, including orthorectification, mosaicking, and atmospheric correction. Be prepared to discuss the advantages and disadvantages of different algorithms and approaches.

- Cloud Masking and Noise Reduction: Learn techniques for identifying and removing cloud cover and other noise sources from satellite imagery to improve data quality and analysis. This includes understanding various algorithms and their limitations.

- Data Quality Assessment and Validation: Develop the ability to assess the quality of preprocessed data, identify potential errors, and implement validation procedures to ensure data accuracy and reliability. This often involves statistical analysis and visual inspection.

- Software and Tools: Gain proficiency in commonly used software packages and tools for satellite data acquisition and preprocessing, such as desktop GIS applications and geospatial programming libraries.

Next Steps

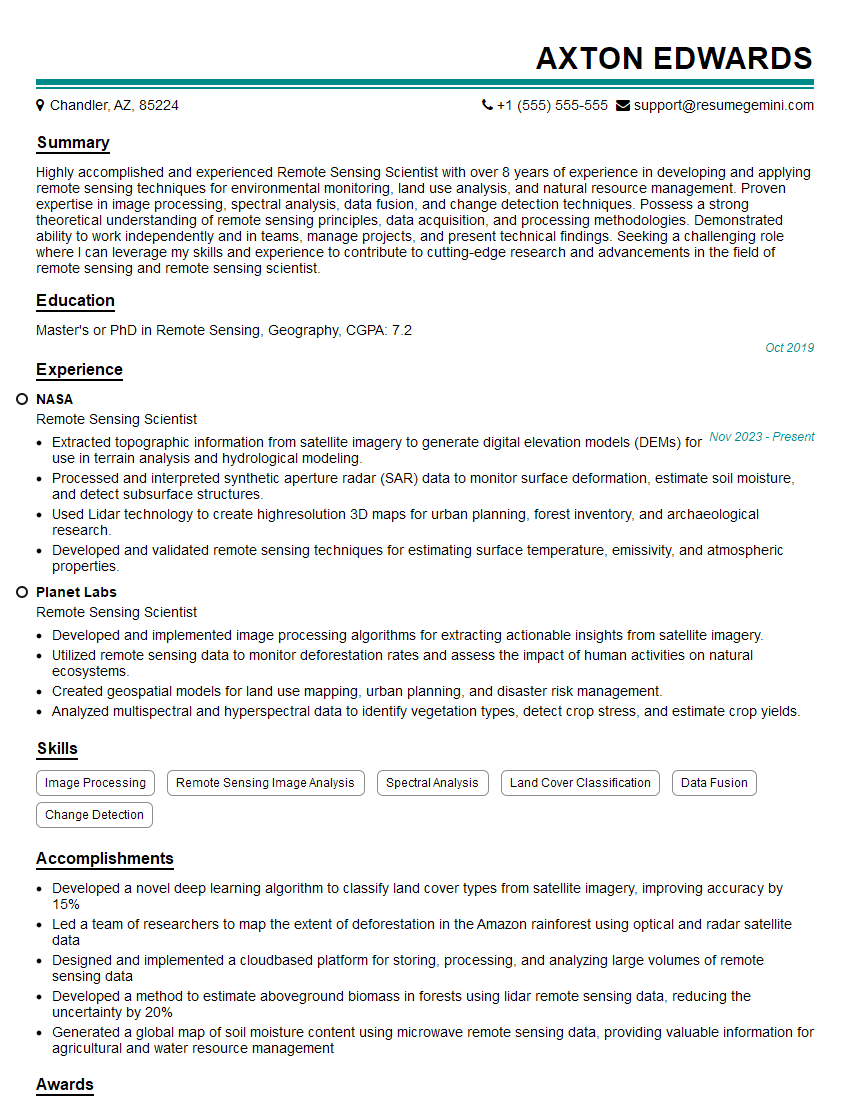

Mastering Satellite Data Acquisition and Preprocessing is vital for a successful and rewarding career in the geospatial industry. These skills are highly sought after, opening doors to diverse and impactful roles. To maximize your job prospects, create a compelling and ATS-friendly resume that effectively showcases your expertise. ResumeGemini is a trusted resource that can help you build a professional resume tailored to your specific skills and experience. Examples of resumes specifically tailored for roles in Satellite Data Acquisition and Preprocessing are available to help you get started.
