Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Metrology and Measurement Equipment Calibration interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Metrology and Measurement Equipment Calibration Interviews

Q 1. Explain the concept of traceability in metrology.

Traceability in metrology is the ability to demonstrate an unbroken chain of comparisons between the measurement result and a known standard. Think of it like a family tree for your measurement – it shows how your measurement result is linked back to a national or international standard, providing confidence in its accuracy. This chain typically involves a series of calibrations, where each instrument is calibrated against a more accurate standard, eventually leading to the highest level of national or international standards maintained by metrology institutes like NIST (National Institute of Standards and Technology) or similar organizations globally.

For example, imagine calibrating a thermometer. You wouldn’t just guess its accuracy. You’d compare it to a more accurate thermometer, which was itself calibrated against a yet more accurate thermometer, and so on, until you reach a primary standard that realizes the temperature scale directly, for example through a defining fixed point such as the triple point of water.

Without traceability, your measurements lack credibility and comparability. You wouldn’t know how accurate your measurement truly is, making it difficult or impossible to compare your results with those obtained by others.
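One way to picture the chain is as a linked list in which each instrument points to the standard it was calibrated against. The following is only an illustrative sketch: the `Standard` class, the instrument names, and the uncertainty values are all hypothetical and not taken from any real certificate.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Standard:
    """One link in a traceability chain (hypothetical model)."""
    name: str
    uncertainty_c: float  # standard uncertainty in degrees Celsius (made-up values)
    calibrated_against: Optional["Standard"] = None


def traceability_chain(item: Standard) -> List[str]:
    """Walk upward from an instrument to the top of its calibration chain."""
    chain = []
    current: Optional[Standard] = item
    while current is not None:
        chain.append(current.name)
        current = current.calibrated_against
    return chain


def uncertainty_never_improves(item: Standard) -> bool:
    """Check that no link claims a smaller uncertainty than the standard above it."""
    current = item
    while current.calibrated_against is not None:
        if current.uncertainty_c < current.calibrated_against.uncertainty_c:
            return False
        current = current.calibrated_against
    return True


# Hypothetical four-level chain for a shop-floor thermometer.
fixed_point = Standard("Triple-point-of-water cell (national institute)", 0.0001)
reference = Standard("Laboratory reference thermometer", 0.002, fixed_point)
working = Standard("Working standard thermometer", 0.01, reference)
shop_floor = Standard("Shop-floor thermometer", 0.05, working)

chain = traceability_chain(shop_floor)
print(" -> ".join(chain))
```

If any link in the chain is missing (an instrument with no documented calibration against a higher standard), the walk stops early and the measurement can no longer be called traceable.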

Q 2. Describe the different types of calibration standards used.

Calibration standards are artifacts or instruments with known and documented values used to verify or adjust the accuracy of other measuring instruments. They come in various types, each with a specific purpose and accuracy level. We can broadly categorize them as:

- Primary Standards: These are the most accurate standards, often defined by fundamental physical constants or highly precise measurements, and are used to calibrate secondary standards. They are rarely used directly for routine calibration.

- Secondary Standards: These are calibrated against primary standards and are used to calibrate working standards.

- Working Standards: These are the most commonly used standards in day-to-day calibration activities. They are calibrated against secondary standards and used to verify the accuracy of measuring instruments.

- Reference Standards: These are high-accuracy standards maintained within an organization for internal use, providing traceability within the organization.

The choice of standard depends on the accuracy required for the instrument being calibrated and the level of uncertainty acceptable for the measurement. For instance, a high-precision measuring instrument used in a laboratory might require calibration against a secondary or even a primary standard, whereas a less critical instrument might only require calibration against a working standard.

Q 3. What is the importance of uncertainty analysis in calibration?

Uncertainty analysis is crucial in calibration as it quantifies the doubt associated with a measurement result. It expresses the range within which the true value of the measurand is likely to lie. This is not about the error itself but rather the degree of confidence we have in the measurement. A small uncertainty indicates a high degree of confidence, while a large uncertainty indicates more doubt.

Uncertainty analysis considers various factors that contribute to the overall uncertainty, including the uncertainty of the calibration standard, the resolution of the instrument, environmental conditions (temperature, humidity), and the operator’s skill. The individual contributions are combined into a combined standard uncertainty (equivalent to one standard deviation). This is then multiplied by a coverage factor k to give the expanded uncertainty: k = 2 corresponds to a confidence level of approximately 95%, and k = 3 to approximately 99.7%, assuming a normal distribution.

Why is this important? Without uncertainty analysis, a calibration certificate simply states a value without indicating the reliability of that value. Understanding the uncertainty allows users to make informed decisions about the acceptability of the calibration result for their specific application.
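As a rough illustration of how an uncertainty budget is combined, the sketch below adds the contributions in quadrature (root sum of squares) and applies a coverage factor of k = 2. It assumes the contributions are uncorrelated and all have a sensitivity coefficient of 1; the budget values are invented for the example.

```python
import math


def resolution_uncertainty(resolution: float) -> float:
    """Standard uncertainty of a digital display, modeled as a rectangular
    distribution of half-width resolution/2, i.e. resolution / sqrt(12)."""
    return resolution / math.sqrt(12)


def combined_standard_uncertainty(components: dict) -> float:
    """Root-sum-of-squares of uncorrelated standard uncertainties."""
    return math.sqrt(sum(u ** 2 for u in components.values()))


def expanded_uncertainty(u_c: float, k: float = 2.0) -> float:
    """Expanded uncertainty U = k * u_c (k = 2 gives roughly 95 % coverage)."""
    return k * u_c


# Hypothetical budget for a thermometer calibration, all values in degrees Celsius.
budget = {
    "reference standard": 0.030,
    "repeatability": 0.040,
    "display resolution": resolution_uncertainty(0.01),
}

u_c = combined_standard_uncertainty(budget)
U = expanded_uncertainty(u_c, k=2.0)
print(f"u_c = {u_c:.4f} degC, U (k=2) = {U:.4f} degC")
```

Because the terms are squared before summing, the largest contributions dominate the result, which is why reducing a small contributor rarely improves the expanded uncertainty much.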

Q 4. How do you select the appropriate calibration method for a specific instrument?

Selecting the appropriate calibration method involves considering several factors:

- Instrument type and specifications: The instrument’s manual will often specify recommended calibration procedures.

- Required accuracy: Higher accuracy demands a more rigorous calibration method and potentially higher-accuracy standards.

- Available resources: The availability of suitable calibration equipment, standards, and expertise will influence the choice of method.

- Calibration standards: The selection of standards has to be appropriate to the range and accuracy of the instrument being calibrated.

- Traceability requirements: The method must ensure traceability to national or international standards.

For instance, a simple digital multimeter might be calibrated using a comparison method against a known accurate standard. A more complex instrument like a spectrum analyzer may require a more involved calibration procedure involving multiple standards and specialized software.

In my experience, careful review of the instrument’s specifications and relevant standards is the first step, followed by consultation with relevant calibration standards and guidelines to choose the optimum method ensuring both accuracy and efficiency.

Q 5. Explain the difference between accuracy and precision.

Accuracy and precision are often confused, but they represent different aspects of measurement quality. Think of it like shooting arrows at a target:

- Accuracy refers to how close the measured values are to the true value. High accuracy means the arrows are clustered close to the bullseye.

- Precision refers to how close the measured values are to each other. High precision means the arrows are clustered tightly together, regardless of whether they are near the bullseye.

An instrument can be precise but not accurate (arrows clustered but far from the bullseye), accurate but not precise (arrows scattered around the bullseye), or both accurate and precise (arrows clustered around the bullseye). Calibration mainly improves accuracy by identifying and correcting systematic errors. Random errors, which limit precision, are not removed by calibration; they are reduced by repeated measurements and better-controlled conditions, and they are accounted for in the stated uncertainty.
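The distinction can be put into numbers: the bias (mean reading minus reference value) reflects accuracy, while the standard deviation of repeated readings reflects precision. A minimal sketch with invented readings of a 10.00-unit reference:

```python
import statistics


def bias_and_spread(readings, reference_value):
    """Return (bias, sample standard deviation) for repeated readings.

    bias   -> how far the average is from the reference (accuracy)
    spread -> how tightly the readings cluster (precision)
    """
    mean = statistics.fmean(readings)
    return mean - reference_value, statistics.stdev(readings)


REFERENCE = 10.00  # hypothetical reference value

# Tight cluster, far from the reference: precise but not accurate.
precise_not_accurate = [10.21, 10.20, 10.22, 10.21, 10.20]
# Scattered around the reference: accurate on average but not precise.
accurate_not_precise = [9.8, 10.2, 9.9, 10.1, 10.0]

bias_a, spread_a = bias_and_spread(precise_not_accurate, REFERENCE)
bias_b, spread_b = bias_and_spread(accurate_not_precise, REFERENCE)
print(f"precise, not accurate: bias={bias_a:+.3f}, spread={spread_a:.3f}")
print(f"accurate, not precise: bias={bias_b:+.3f}, spread={spread_b:.3f}")
```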

Q 6. What are the common sources of measurement error?

Measurement errors can stem from various sources, broadly classified as:

- Systematic Errors: These errors are consistent and repeatable, often caused by instrument limitations, environmental factors, or operator bias. Examples include instrument drift, incorrect calibration, or environmental influences like temperature variations.

- Random Errors: These errors are unpredictable and vary from one measurement to the next. They are due to various uncontrollable factors and contribute to the uncertainty. Examples include small variations in readings, environmental noise, or operator inconsistency.

Other sources include:

- Environmental factors: Temperature, humidity, pressure, and electromagnetic fields can influence measurements significantly.

- Instrument limitations: Resolution, linearity, and stability of the instrument itself are crucial factors.

- Operator errors: Incorrect reading, setup, or handling of the instrument can introduce errors.

A thorough understanding of these sources is essential to mitigate their effects and ensure the quality of measurements.

Q 7. Describe your experience with various calibration techniques (e.g., comparison, substitution).

Throughout my career, I’ve extensively used various calibration techniques, including:

- Comparison Calibration: This method involves comparing the instrument under test (IUT) with a known standard, often using a difference measurement. I’ve used this extensively for calibrating various instruments such as digital multimeters, thermometers, and pressure gauges. The simplicity and speed make it efficient for routine calibrations.

- Substitution Calibration: In substitution calibration, the standard and the IUT are measured in turn by the same measuring system under the same conditions, and the two readings are compared. Because both pass through the same setup, systematic errors of the measuring system largely cancel. This technique is beneficial when dealing with instruments where direct comparison is challenging.

- Functional Calibration: This method involves testing the instrument under various operating conditions, verifying if the instrument behaves as per its specifications. This is useful for complex instruments with multiple functions or operational modes, like oscilloscopes or signal generators. Functional calibration often involves specific test procedures and verification against pre-defined limits.

I also have experience with more specialized techniques for specific instrumentation. The selection of the appropriate technique is always guided by the instrument’s characteristics, the required accuracy level, and available resources. Furthermore, meticulous documentation and uncertainty analysis are integrated into each process to ensure the highest level of quality and traceability.
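To make the comparison method concrete, here is a small sketch that computes the error and the correction at each test point. The test points and readings are invented, and the function name is hypothetical; a real procedure would also record the uncertainty at each point.

```python
def comparison_calibration(points):
    """Compare IUT readings with standard values at each test point.

    points: iterable of (standard_value, iut_reading) pairs in the same unit.
    error      = IUT reading - standard value
    correction = -error (the value to add to the IUT reading)
    """
    results = []
    for standard_value, iut_reading in points:
        error = iut_reading - standard_value
        results.append({
            "standard": standard_value,
            "iut": iut_reading,
            "error": error,
            "correction": -error,
        })
    return results


# Hypothetical pressure gauge checked at three points (bar).
gauge_points = [(0.0, 0.02), (50.0, 50.10), (100.0, 100.25)]
results = comparison_calibration(gauge_points)
max_abs_error = max(abs(r["error"]) for r in results)

for r in results:
    print(f"{r['standard']:7.2f} bar: error {r['error']:+.2f}, correction {r['correction']:+.2f}")
```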

Q 8. How do you manage calibration certificates and records?

Managing calibration certificates and records is crucial for maintaining traceability and demonstrating compliance. We utilize a robust, multi-layered system. Firstly, all certificates are scanned and stored digitally in a secure, searchable database. This allows for easy retrieval and ensures redundancy in case of physical document loss. Secondly, a detailed record-keeping system tracks each instrument, its calibration history, and any associated maintenance. This often includes details like calibration dates, results, and the technician’s identification. Think of it like a meticulous medical chart for each piece of equipment. Finally, we implement a robust version control system to manage revisions and ensure we always work with the most current data. This is especially vital when dealing with instruments that might undergo significant repairs or modifications.

For instance, if a pressure gauge is recalibrated, the old certificate is archived, and the new one is clearly marked and indexed in the database. This way, we can always demonstrate a complete and unbroken chain of calibration history for every instrument, vital for audits and regulatory compliance.

Q 9. How do you ensure the proper maintenance and handling of calibration equipment?

Proper maintenance and handling of calibration equipment are paramount for accuracy and longevity. We follow a strict protocol, beginning with proper storage. Instruments are stored in controlled environments to protect them from dust, humidity, and temperature fluctuations – imagine keeping a fine watch in a climate-controlled safe, not a dusty attic. Regular cleaning and inspection are also part of the process, often according to manufacturer recommendations. This can include checking for physical damage, cleaning optical components, and verifying connections. For delicate instruments, specialized cleaning solutions and techniques might be employed.

Furthermore, we have a comprehensive preventative maintenance schedule. This ensures regular checks and calibrations of the equipment itself, keeping the calibrator calibrated, if you will. For example, a high-precision digital multimeter might require a check of its internal reference voltage periodically, to ensure it is giving accurate readings. This approach helps prevent unexpected equipment failures and costly downtime.

Q 10. Explain the significance of ISO 17025 in calibration laboratories.

ISO/IEC 17025 (commonly shortened to ISO 17025) is the international standard that sets out the general requirements for the competence of testing and calibration laboratories. It’s the gold standard, essentially, establishing the criteria for competence and impartiality. For calibration labs, achieving ISO 17025 accreditation demonstrates to clients and regulatory bodies that our processes are reliable and our results are trustworthy. The standard covers various aspects, including:

- Management system: Defining roles and responsibilities, documentation control, and corrective actions.

- Technical requirements: Specifying requirements for calibration methods, traceability to national standards, and uncertainty assessments.

- Personnel competence: Ensuring that staff are qualified and have the necessary skills and training.

- Equipment maintenance: Mandating appropriate procedures for calibrating and maintaining equipment used in the calibration process.

Imagine ISO 17025 as a rigorous quality control system, ensuring that the results we produce are accurate, consistent, and comparable globally. Accreditation proves our commitment to the highest standards of quality.

Q 11. How do you handle out-of-tolerance results during calibration?

Handling out-of-tolerance results during calibration is a critical step, requiring careful investigation and action. The first step is to verify the result. This might involve repeating the calibration procedure, checking for errors in the setup, or using a different, verified instrument for cross-checking. If the out-of-tolerance result is confirmed, a thorough investigation is needed to identify the root cause. This could involve examining the instrument’s history, maintenance records, and operating conditions. Consider this like diagnosing a medical issue; a single test result might not tell the whole story.

Depending on the severity of the deviation and the instrument’s purpose, actions might range from minor adjustments to major repairs or replacement. A detailed report documenting the findings, corrective actions, and the final status of the instrument is essential. This might involve updating the calibration certificate or issuing a non-conformance report. Throughout this process, maintaining meticulous documentation and transparent communication with stakeholders are crucial for traceability and accountability.
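The "verify first, then investigate" logic described above can be sketched as a simple decision function. The status strings and the rule that every repeat must also fail are assumptions made for illustration, not a prescribed decision rule.

```python
def classify_result(initial_error, repeat_errors, tolerance):
    """Classify a calibration point after repeat measurements.

    initial_error: error found in the first run (IUT reading - standard)
    repeat_errors: errors from repeated runs used to verify the finding
    tolerance:     permitted absolute error for the instrument
    """
    if abs(initial_error) <= tolerance:
        return "in tolerance"
    if not repeat_errors:
        return "unverified: repeat the measurement"
    if all(abs(e) > tolerance for e in repeat_errors):
        return "confirmed out of tolerance: investigate root cause"
    return "not reproduced: check setup and procedure"


# Hypothetical gauge with a tolerance of +/- 0.5 units.
print(classify_result(0.3, [], 0.5))
print(classify_result(0.8, [], 0.5))
print(classify_result(0.8, [0.7, 0.9], 0.5))
print(classify_result(0.8, [0.2, 0.1], 0.5))
```

A result that is not reproduced on repetition usually points to the setup or procedure rather than the instrument, which is why the repeat comes before any adjustment or repair.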

Q 12. Describe your experience with different types of measurement instruments (e.g., multimeters, pressure gauges).

My experience encompasses a wide range of measurement instruments. I’ve extensively worked with multimeters, from basic handheld units to high-precision benchtop models used in precision electronics calibration. I’m familiar with various measurement types, including voltage, current, resistance, capacitance, and frequency. With pressure gauges, I’ve worked on both analog and digital devices, covering various pressure ranges and applications, including pneumatic and hydraulic systems. This includes understanding the differences between different pressure types (absolute, gauge, differential) and selecting appropriate calibration techniques based on those differences.

Beyond multimeters and pressure gauges, I also have experience with temperature measurement devices (thermocouples, RTDs, thermistors), dimensional measuring instruments (calipers, micrometers), and various other specialized instruments depending on the specific application. Each instrument requires a unique understanding of its operating principles, potential sources of error, and suitable calibration methods. For example, the calibration of a thermocouple would involve its comparison to a traceable standard thermometer at multiple temperature points, while a pressure gauge calibration might utilize a deadweight tester.

Q 13. What software or systems are you familiar with for managing calibration data?

I’m proficient in several calibration management software systems, including both standalone applications and cloud-based solutions. These systems enable efficient management of calibration schedules, data entry, certificate generation, and report creation. For example, I’ve used systems that allow for automated email reminders for upcoming calibrations, reducing the chance of overdue maintenance. Some of the common features include the ability to search and retrieve calibration data based on instrument ID, calibration date, and other relevant criteria. These systems are designed to ensure compliance with industry standards and support traceability across the entire calibration process.

Beyond specialized software, I also have experience with database management systems like SQL, which allows for advanced data analysis and reporting. The choice of system depends on the scale of operations and the specific needs of the laboratory, ensuring the chosen solution seamlessly integrates into our existing infrastructure and workflows. The ultimate goal is to have a fully traceable, secure, and efficient calibration management system.
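As an example of the kind of SQL reporting mentioned here, the sketch below uses Python’s built-in `sqlite3` module with an in-memory database to list instruments whose most recent calibration has expired. The table layout, instrument IDs, and dates are hypothetical; a commercial calibration management system would have its own schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE calibrations (
           instrument_id TEXT,
           calibrated_on TEXT,  -- ISO 8601 dates sort correctly as text
           due_on        TEXT,
           result        TEXT
       )"""
)

# Hypothetical calibration history.
rows = [
    ("DMM-001", "2023-01-10", "2024-01-10", "pass"),
    ("DMM-001", "2024-01-08", "2025-01-08", "pass"),
    ("PG-014", "2023-06-01", "2024-06-01", "pass"),
    ("TC-007", "2023-03-15", "2023-09-15", "fail"),
]
conn.executemany("INSERT INTO calibrations VALUES (?, ?, ?, ?)", rows)


def overdue_instruments(connection, cutoff_date):
    """Return (instrument_id, latest_due) for instruments whose latest
    due date falls before cutoff_date, most overdue first."""
    query = """
        SELECT instrument_id, MAX(due_on) AS latest_due
        FROM calibrations
        GROUP BY instrument_id
        HAVING MAX(due_on) < ?
        ORDER BY latest_due
    """
    return connection.execute(query, (cutoff_date,)).fetchall()


overdue = overdue_instruments(conn, "2024-07-01")
print(overdue)
```

Grouping by instrument and taking the latest due date matters: without it, an instrument that has since been recalibrated would still appear as overdue because of its older records.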

Q 14. Explain the concept of calibration intervals and how they are determined.

Calibration intervals define the frequency of recalibration for a given instrument. They’re not arbitrary; they’re determined by several factors. The most critical is the instrument’s stability and its susceptibility to drift or degradation over time. A high-precision instrument used in a stable environment might have a longer interval than a less precise instrument subjected to harsh conditions. The manufacturer’s specifications often provide guidance, but this serves as a starting point.

Other factors include the instrument’s usage frequency and the criticality of its measurements. A frequently used instrument measuring a critical parameter, such as temperature in a pharmaceutical manufacturing process, will likely have shorter calibration intervals than an infrequently used instrument measuring a less crucial parameter. Furthermore, past calibration history plays a role. If an instrument shows significant drift or instability, the calibration interval might be shortened. Think of it as preventive maintenance; regular calibrations catch potential problems before they negatively impact accuracy. Regular review and adjustments of calibration intervals are essential to ensure optimum performance and reliability.
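The idea of adjusting an interval from calibration history can be sketched with a simple reactive rule: shorten the interval after an out-of-tolerance finding, and lengthen it cautiously after several consecutive in-tolerance results. The multipliers and limits below are arbitrary assumptions for illustration; a laboratory would set its own based on risk and documented policy.

```python
def next_interval(current_months, history, min_months=3, max_months=24):
    """Propose the next calibration interval in whole months.

    history: list of booleans, oldest first; True means the instrument
             was found in tolerance at that calibration.
    """
    if not history:
        return current_months  # no evidence yet, keep the starting interval
    if not history[-1]:
        proposed = current_months * 0.5   # assumed: halve after a failure
    elif len(history) >= 3 and all(history[-3:]):
        proposed = current_months * 1.25  # assumed: extend after 3 passes in a row
    else:
        proposed = current_months
    return max(min_months, min(max_months, round(proposed)))


print(next_interval(12, [True, True, False]))  # latest result was out of tolerance
print(next_interval(12, [True, True, True]))   # stable history
print(next_interval(12, [True, True]))         # not enough history to extend
```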

Q 15. How do you troubleshoot common issues encountered during calibration?

Troubleshooting calibration issues starts with understanding the specific problem. It’s like diagnosing a car problem – you need to systematically investigate. First, I’d check the equipment’s operational status, confirming power, connections, and any obvious physical damage. Then, I’d review the calibration procedure itself. Was it followed correctly? Are there any discrepancies between the documented procedure and what actually happened? Next, I’d examine the calibration data itself. Are there outliers or unexpected trends? This often points towards environmental factors, instrument drift, or even operator error. For example, if I’m calibrating a thermometer and consistently find it reading low, I might suspect a problem with the temperature probe or the reference standard. I might need to replace the probe, recalibrate the reference, or even investigate if the ambient temperature in the calibration lab is stable.

My approach often involves a process of elimination. I’d start with the simplest explanations (like a loose wire) and work my way up to more complex issues (like a faulty internal component). Documentation is key—meticulously recording all steps, observations, and corrective actions is crucial for traceability and future troubleshooting.

- Check the equipment: Power, connections, physical damage

- Review the procedure: Correctly followed? Discrepancies?

- Examine the data: Outliers? Trends?

- Process of elimination: Simple to complex issues

- Meticulous documentation: Traceability and future reference
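For the "examine the data" step, one common screening technique is the modified z-score based on the median absolute deviation (MAD), which is less distorted by the outlier itself than a mean-based test. This is one possible approach, not the only one; the readings are invented, and 3.5 is a commonly used rule-of-thumb cutoff rather than a fixed requirement.

```python
import statistics


def flag_outliers(readings, threshold=3.5):
    """Return readings whose modified z-score exceeds the threshold.

    modified z = 0.6745 * (x - median) / MAD
    """
    median = statistics.median(readings)
    mad = statistics.median([abs(x - median) for x in readings])
    if mad == 0:
        return []  # no spread around the median, so the score is undefined
    return [x for x in readings if abs(0.6745 * (x - median) / mad) > threshold]


# Hypothetical repeated readings of a 20 degC reference bath.
bath_readings = [20.01, 20.02, 19.99, 20.00, 20.45, 20.01]
outliers = flag_outliers(bath_readings)
print(outliers)
```

A flagged reading is a prompt to look at what happened during that measurement (a draft, a loose connection, a transcription slip), not a licence to discard it silently.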

Q 16. Describe your experience with statistical process control (SPC) in calibration.

Statistical Process Control (SPC) is vital in calibration for monitoring the stability and consistency of our measurement processes. Think of it as a ‘check-up’ for our calibration procedures. We use control charts, such as X-bar and R charts, to track key parameters over time. For instance, we might monitor the standard deviation of measurements taken on a specific piece of equipment during repeated calibrations. If the data points fall outside the established control limits, it signals a potential problem, like instrument drift or environmental changes affecting the measurement process. This allows us to detect shifts or trends before they lead to inaccurate measurements.

In my experience, SPC helps proactively identify issues, preventing costly rework and ensuring the reliability of our calibrated equipment. For example, if the control chart shows a consistent upward trend in the measurements of a pressure gauge, it suggests the gauge may need adjustment or repair, preventing potential errors in downstream processes. This proactive approach is far more efficient than only reacting to failures.
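A minimal sketch of X-bar and R chart limits is shown below, using the standard control chart constants for subgroups of five readings (A2 = 0.577, D3 = 0, D4 = 2.114). The check-standard readings are invented for illustration, and the function names are hypothetical.

```python
# Control chart constants for subgroup size n = 5.
A2, D3, D4 = 0.577, 0.0, 2.114
SUBGROUP_SIZE = 5


def xbar_r_limits(subgroups):
    """Compute (LCL, center, UCL) for the X-bar chart and the R chart."""
    if any(len(g) != SUBGROUP_SIZE for g in subgroups):
        raise ValueError("constants above are only valid for subgroups of 5")
    means = [sum(g) / len(g) for g in subgroups]
    ranges = [max(g) - min(g) for g in subgroups]
    grand_mean = sum(means) / len(means)
    mean_range = sum(ranges) / len(ranges)
    return {
        "xbar": (grand_mean - A2 * mean_range, grand_mean, grand_mean + A2 * mean_range),
        "r": (D3 * mean_range, mean_range, D4 * mean_range),
    }


def out_of_control(subgroup, limits):
    """True if the subgroup mean or range falls outside its control limits."""
    mean = sum(subgroup) / len(subgroup)
    rng = max(subgroup) - min(subgroup)
    x_lcl, _, x_ucl = limits["xbar"]
    r_lcl, _, r_ucl = limits["r"]
    return not (x_lcl <= mean <= x_ucl) or not (r_lcl <= rng <= r_ucl)


# Hypothetical baseline: four sessions of five readings on a 10.00-unit check standard.
baseline = [
    [10.01, 10.02, 9.99, 10.00, 9.98],
    [10.00, 10.03, 9.99, 10.01, 9.97],
    [9.99, 10.02, 10.00, 10.01, 9.98],
    [10.02, 10.00, 9.98, 10.01, 9.99],
]
limits = xbar_r_limits(baseline)

stable_session = [10.00, 10.01, 9.99, 10.00, 10.00]
drifted_session = [10.05, 10.06, 10.04, 10.05, 10.07]
print(out_of_control(stable_session, limits), out_of_control(drifted_session, limits))
```

In practice the limits are computed from a longer baseline (often 20 or more subgroups) before being used to judge new data; four subgroups are used here only to keep the example short.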

Q 17. How do you ensure the integrity of calibration data?

Ensuring the integrity of calibration data is paramount. We achieve this through a multi-layered approach. First, we use traceable standards: standards whose values are linked through an unbroken chain of calibrations to national or international measurement standards, such as those maintained by NIST, with the calibrations performed by laboratories accredited by bodies such as UKAS. This provides a clear line of traceability back to the SI units of measurement. Secondly, we maintain meticulous records. This includes comprehensive calibration certificates detailing all relevant parameters (date, equipment details, measurements, uncertainties). We also use validated calibration procedures, meaning procedures that have been verified for accuracy and repeatability. These procedures leave no room for ambiguity, ensuring everyone performs calibrations consistently.

Furthermore, we employ robust data management systems to safeguard data from loss or corruption. These systems often incorporate digital signatures and audit trails. This not only ensures data integrity but also provides evidence of compliance with quality standards. Finally, regular audits and internal checks verify the effectiveness of our processes and detect any inconsistencies in the data.
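To illustrate how an audit trail can make tampering evident, the sketch below chains each record to the previous one with a SHA-256 hash, so editing any earlier entry invalidates every hash after it. This is a simplified illustration of tamper evidence only; it is not a digital signature scheme, and the record fields are hypothetical.

```python
import copy
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash, record):
    """Hash the previous entry's hash together with this record's content."""
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_record(log, record):
    """Append a record, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev_hash": prev_hash,
                "hash": _digest(prev_hash, record)})


def verify_log(log):
    """Recompute every hash; return False if any entry was altered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_record(log, {"instrument": "PG-014", "date": "2024-06-01", "error_bar": 0.25})
append_record(log, {"instrument": "PG-014", "date": "2025-06-01", "error_bar": 0.31})
intact = verify_log(log)

# Simulate someone quietly editing an old result.
tampered = copy.deepcopy(log)
tampered[0]["record"]["error_bar"] = 0.0
tamper_detected = not verify_log(tampered)
print(intact, tamper_detected)
```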

Q 18. What is your experience with different calibration standards and bodies (e.g., NIST, UKAS)?

My experience spans work involving both NIST (National Institute of Standards and Technology) and UKAS (United Kingdom Accreditation Service). The two play different roles: NIST is the national metrology institute of the United States and maintains national measurement standards, while UKAS is the United Kingdom’s national accreditation body and assesses laboratories against standards such as ISO/IEC 17025. Working with NIST-traceable standards ensures our calibrations are linked back to the SI units as realized by NIST. Similarly, UKAS accreditation demonstrates our competence to perform calibrations to internationally recognized standards. I’m familiar with navigating the requirements and procedures associated with both organizations, including maintaining proper documentation and conducting calibrations according to specific guidelines. Understanding these roles is critical for ensuring the global acceptance and reliability of our calibration results. The core principles are consistency, traceability, and rigorous documentation.

Q 19. Explain the difference between internal and external calibration.

Internal calibration involves using equipment and standards within your own organization to calibrate instruments. It’s like a ‘self-check’ – you’re verifying the accuracy of your instruments against your internal reference standards. External calibration, on the other hand, involves sending your instruments to an accredited external laboratory for calibration. This is like getting a ‘professional opinion’ – an independent third-party verifies your equipment’s accuracy against their traceable standards. Both have their place. Internal calibration is cost-effective for routine checks and monitoring equipment stability, while external calibration provides an independent verification, ensuring accuracy and compliance with regulatory requirements, especially for critical equipment.

The choice often depends on factors like the criticality of the measurement, budget, and the availability of suitable internal resources and standards. For example, a simple multimeter might undergo internal calibration, while a sophisticated piece of analytical equipment requiring specialized knowledge and equipment would need external calibration.

Q 20. Describe your experience with root cause analysis in calibration processes.

Root cause analysis (RCA) is crucial for continuous improvement in calibration. When calibration failures occur, we don’t simply fix the immediate problem; we delve deeper to understand the underlying cause. Think of it like investigating a car accident – we need to understand why the accident happened to prevent similar incidents. We use various RCA methodologies, such as the ‘5 Whys’ technique (asking ‘why’ repeatedly to uncover the root cause) or fault tree analysis (mapping out potential failure modes). For instance, if repeated calibrations of a specific pressure gauge consistently show drift, the ‘5 Whys’ might lead us to uncover a faulty pressure sensor, which was initially overlooked during a previous maintenance check. The outcome of the RCA is documented and corrective actions are implemented to prevent recurrence. This is not simply about fixing a problem; it’s about improving the entire calibration process to prevent future issues.

Q 21. What is your experience with the calibration of electronic, mechanical, and optical equipment?

My experience encompasses calibration across various equipment types: electronic, mechanical, and optical. Electronic equipment calibration might involve verifying the accuracy of oscilloscopes, multimeters, and power supplies. This typically involves comparing the equipment’s readings to known standards, often using precision voltage sources, current sources, and frequency generators. Mechanical equipment calibration often focuses on dimensional measuring instruments such as micrometers, calipers, and coordinate measuring machines (CMMs). This involves verifying the accuracy of these instruments against traceable standards, such as gauge blocks, and using statistical methods to assess their performance.

Optical equipment calibration is more specialized, dealing with instruments like spectrometers, microscopes, and interferometers. This often requires specialized knowledge of optics and the use of standardized light sources and optical components. Each type of equipment requires specialized techniques, standards, and procedures to ensure accurate and reliable calibration results. For example, calibrating a microscope may involve checking its magnification, resolution, and field of view against standardized test slides. Across all types, my approach focuses on ensuring traceability, minimizing uncertainties, and documenting each step thoroughly.

Q 22. How do you handle discrepancies between calibration results?

Discrepancies between calibration results are a common occurrence in metrology. Handling them effectively involves a systematic approach that prioritizes identifying the root cause and ensuring accurate measurement. First, I carefully review the calibration data, checking for any procedural errors or outliers. This includes verifying the traceability of the standards used, examining the environmental conditions during the calibration, and checking for any damage or malfunction of the equipment under test.

If the discrepancy falls within the acceptable tolerance limits specified in the calibration procedure or the instrument’s specifications, it’s usually documented and the equipment is considered acceptable for use. However, if the discrepancy exceeds the tolerance, a thorough investigation is initiated. This might involve recalibrating the equipment, checking the calibration equipment itself, or even re-evaluating the measurement process. For example, if a digital multimeter shows a significant drift from its previous calibration, we might check the battery voltage, inspect the internal components, and even consider recalibration using a higher-accuracy standard. A detailed report documenting the findings and corrective actions is always prepared and maintained in line with our quality management system.

Q 23. Describe your experience working within a quality management system (e.g., ISO 9001).

I have extensive experience working within ISO 9001 compliant quality management systems. My roles have consistently involved ensuring the calibration process adheres to the documented procedures, including planning, execution, and recording. This involves maintaining calibration schedules, managing calibration certificates, and ensuring traceability to national or international standards. For instance, in my previous role, I was responsible for implementing a new calibration software that improved our efficiency and traceability. This involved training colleagues on the new system, developing standardized operating procedures, and integrating the software with our existing quality management system. My responsibilities extended to internal audits to ensure that all aspects of our calibration processes met ISO 9001 requirements. I’m proficient in conducting root cause analysis and corrective actions, ensuring that non-conformances are addressed immediately and effectively. I’m also comfortable participating in management reviews and providing reports on the performance of the calibration laboratory.

Q 24. Explain the importance of using appropriate safety precautions during calibration.

Safety is paramount during calibration. Ignoring safety precautions can lead to injuries, equipment damage, or even invalidate calibration results. The specific precautions depend heavily on the equipment being calibrated. For example, when calibrating high-voltage equipment, safety glasses, insulated gloves, and appropriate grounding techniques are mandatory. Similarly, when working with lasers, appropriate laser safety eyewear is essential. Working with hazardous materials, such as mercury, requires adhering to strict safety regulations. A thorough risk assessment is always conducted before initiating any calibration process. This assessment identifies potential hazards and outlines the necessary precautions. These precautions are documented in the calibration procedure itself, ensuring everyone involved understands and follows them. Furthermore, regular safety training is crucial for ensuring that all calibration personnel are aware of and comply with the safety procedures. It’s about more than just following a checklist—it’s a mindset of proactive safety awareness.

Q 25. How do you stay updated on the latest advancements in metrology and calibration techniques?

Staying updated in the dynamic field of metrology requires a multi-pronged approach. I regularly attend professional conferences and workshops, like those offered by NIST or other national metrology institutes. These events provide valuable insights into the latest advancements and best practices. I also actively engage with professional organizations such as the American Society of Mechanical Engineers (ASME) and similar international bodies, accessing their publications and resources. Subscribing to relevant journals and industry newsletters keeps me abreast of emerging technologies and techniques. Online courses and webinars offered by reputable institutions are also instrumental in expanding my knowledge base. Furthermore, I maintain a network of professional contacts, exchanging information and experiences with colleagues in the field. This collaborative approach ensures I stay at the forefront of advancements in metrology and calibration.

Q 26. How do you prioritize calibration tasks in a busy environment?

Prioritizing calibration tasks in a busy environment requires a well-defined system, and I typically combine several methods. Firstly, I rely on a risk-based calibration schedule that takes into account the criticality of the equipment, its frequency of use, and the consequences of inaccurate measurements. Equipment critical to safety or product quality receives the highest priority. Secondly, I use a computerized maintenance management system (CMMS) to track calibration due dates and generate alerts, ensuring timely scheduling. Finally, communication is key: open lines with end-users help me understand their immediate needs, allowing for efficient prioritization and better resource allocation. Think of it like a triage system in a hospital, where the most critical cases get immediate attention while others are addressed according to their urgency.
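To make the triage idea concrete, here is a minimal sketch of a priority score that combines criticality, time to due date, and usage. The `Instrument` fields, the 1 to 3 criticality scale, and the weights are all hypothetical choices for illustration. They do not come from any standard or from a particular CMMS.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Instrument:
    tag: str
    criticality: int    # hypothetical scale: 1 (low) to 3 (safety or quality critical)
    uses_per_week: int
    due: date           # next calibration due date


def priority_score(inst: Instrument, today: date) -> float:
    """Return a score between 0 and 1; higher means calibrate sooner."""
    days_left = (inst.due - today).days
    # Overdue or due-today items get full urgency; urgency decays as the due date moves out.
    urgency = 1.0 if days_left <= 0 else 1.0 / (1 + days_left / 7)
    # Cap usage so one heavily used instrument cannot dominate the score.
    usage = min(inst.uses_per_week, 50) / 50
    # Illustrative weights only: criticality 50%, urgency 30%, usage 20%.
    return 0.5 * (inst.criticality / 3) + 0.3 * urgency + 0.2 * usage


def prioritize(instruments, today):
    """Sort instruments from highest to lowest priority."""
    return sorted(instruments, key=lambda i: priority_score(i, today), reverse=True)


if __name__ == "__main__":
    today = date(2024, 1, 1)
    queue = [
        Instrument("torque-wrench-07", 1, 1, date(2024, 3, 31)),
        Instrument("cmm-01", 3, 10, date(2024, 1, 31)),
        Instrument("caliper-12", 1, 10, date(2023, 12, 31)),
    ]
    for inst in prioritize(queue, today):
        print(inst.tag, round(priority_score(inst, today), 3))
```

In practice the weights would be agreed with quality and production stakeholders, and an overdue instrument might be pulled from service outright rather than merely ranked higher.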

Q 27. Describe a situation where you had to resolve a complex calibration issue.

In one instance, we encountered a significant discrepancy during the calibration of a high-precision coordinate measuring machine (CMM). The CMM consistently produced measurements outside its tolerance limits, despite numerous recalibrations. After carefully reviewing the calibration procedure, environmental conditions, and the CMM’s operating parameters, we discovered that minute vibrations from nearby machinery were affecting the CMM’s accuracy. This wasn’t initially apparent because the vibrations were below the threshold of human perception. The solution involved isolating the CMM from the vibration source by installing vibration-damping pads and relocating the equipment to a more stable location in the lab. This ultimately resolved the issue, and it highlighted the importance of considering subtle environmental factors that can affect measurement accuracy. The experience reinforced the necessity of thorough investigation and a systematic approach to troubleshooting calibration problems.

Q 28. How do you ensure the effectiveness of the calibration process?

Ensuring the effectiveness of the calibration process requires a combination of strategies. Firstly, maintaining the traceability of our standards to national or international standards is essential, because it underpins the reliability and comparability of our calibration results. Secondly, we employ rigorous quality control measures throughout the process, including regular audits and reviews of our procedures and equipment. Our calibration technicians receive ongoing training to ensure competency and adherence to standards. Thirdly, we regularly analyze our calibration data to identify any trends or anomalies that might indicate problems in the process. This data analysis helps us to proactively address potential issues and improve the overall effectiveness of our calibration system. Finally, we maintain detailed records of all calibrations, including the results, any discrepancies found, and the corrective actions taken. This documentation provides a clear audit trail and supports continuous improvement in our calibration practices. It’s a continuous cycle of verification, validation, and refinement to ensure we maintain the highest level of accuracy and confidence in our calibration procedures.
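The trend analysis mentioned above can be sketched with simple control limits. The example below assumes a check standard that is measured repeatedly, uses a baseline set of readings to set limits at three standard deviations, and flags new readings outside them. The function names and the sample readings are hypothetical, and a real program would usually add run rules and a larger baseline.

```python
from statistics import mean, stdev


def control_limits(baseline):
    """Return (lower, center, upper) limits at +/- 3 sample standard deviations."""
    center = mean(baseline)
    sigma = stdev(baseline)
    return center - 3 * sigma, center, center + 3 * sigma


def out_of_control(baseline, new_readings):
    """Return (index, value) pairs for new readings outside the control limits."""
    lower, _, upper = control_limits(baseline)
    return [(i, x) for i, x in enumerate(new_readings) if x < lower or x > upper]


if __name__ == "__main__":
    # Hypothetical check-standard readings in millimetres.
    baseline = [10.00, 10.02, 9.98, 10.01, 9.99]
    new_readings = [10.01, 10.06, 9.97]
    print(control_limits(baseline))
    print(out_of_control(baseline, new_readings))
```

A flagged point is a prompt to investigate the instrument, the reference standard, and the environment before deciding whether an adjustment or a shorter calibration interval is needed.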

Key Topics to Learn for Metrology and Measurement Equipment Calibration Interview

- Measurement Uncertainty: Understanding sources of uncertainty, propagation of uncertainty, and methods for minimizing uncertainty in calibration processes. Consider practical examples like calculating uncertainty budgets for different measurement instruments.

- Calibration Standards and Traceability: Knowing the importance of calibration standards that are traceable to national or international standards through national metrology institutes (e.g., NIST). Be prepared to discuss the unbroken chain of calibrations and its implications for measurement validity.

- Calibration Methods and Procedures: Familiarity with various calibration techniques for different types of equipment (e.g., pressure gauges, temperature sensors, balances). Think about the steps involved in a typical calibration procedure and potential troubleshooting scenarios.

- Calibration Software and Data Management: Understanding the role of software in automating calibration processes, managing calibration data, and generating reports. Consider the importance of data integrity and regulatory compliance.

- Statistical Process Control (SPC) in Calibration: Applying SPC techniques to monitor calibration processes, identify trends, and prevent out-of-control situations. Prepare examples of how control charts can be used to improve calibration effectiveness.

- Calibration Intervals and Equipment Life Cycle Management: Determining appropriate calibration intervals based on equipment usage, environmental factors, and manufacturer recommendations. Discuss strategies for managing the entire life cycle of measurement equipment.

- Common Calibration Equipment: Demonstrate knowledge of different types of calibration equipment, such as calibrators, standards, and related instruments. Be ready to discuss their specific applications and limitations.

- ISO/IEC 17025 and other relevant standards: Understand the requirements and implications of ISO/IEC 17025 (or other relevant calibration standards) for calibration laboratories and processes.
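For the measurement uncertainty topic in the list above, a short worked example may help. The sketch below builds a simple uncertainty budget by converting each contribution to a standard uncertainty, combining them by root-sum-of-squares, and applying a coverage factor of k = 2. It assumes the contributions are uncorrelated and have sensitivity coefficients of 1. The component names and values are hypothetical.

```python
import math


def standard_uncertainty(value, distribution="normal", k=1.0):
    """Convert a stated uncertainty to a standard uncertainty.

    "rectangular": value is the half-width of the interval, divided by sqrt(3).
    "normal": value is an expanded uncertainty stated with coverage factor k.
    """
    if distribution == "rectangular":
        return value / math.sqrt(3)
    if distribution == "normal":
        return value / k
    raise ValueError(f"unsupported distribution: {distribution}")


def combined_uncertainty(components):
    """Root-sum-of-squares of uncorrelated standard uncertainties."""
    return math.sqrt(sum(u ** 2 for u in components))


if __name__ == "__main__":
    # Hypothetical budget for a length measurement, all values in millimetres.
    budget = {
        "reference standard (certificate, k=2)": standard_uncertainty(0.02, "normal", k=2),
        "instrument resolution (half-width)": standard_uncertainty(0.005, "rectangular"),
        "repeatability (standard deviation)": standard_uncertainty(0.004, "normal"),
    }
    u_c = combined_uncertainty(budget.values())
    expanded = 2 * u_c  # k = 2, roughly 95 % coverage for a normal distribution
    for name, u in budget.items():
        print(f"{name}: {u:.4f} mm")
    print(f"combined: {u_c:.4f} mm, expanded (k=2): {expanded:.4f} mm")
```

Being able to explain why the resolution term is divided by the square root of three, and why the largest component dominates the combined value, is a good way to show an interviewer that you understand the budget rather than just the arithmetic.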

Next Steps

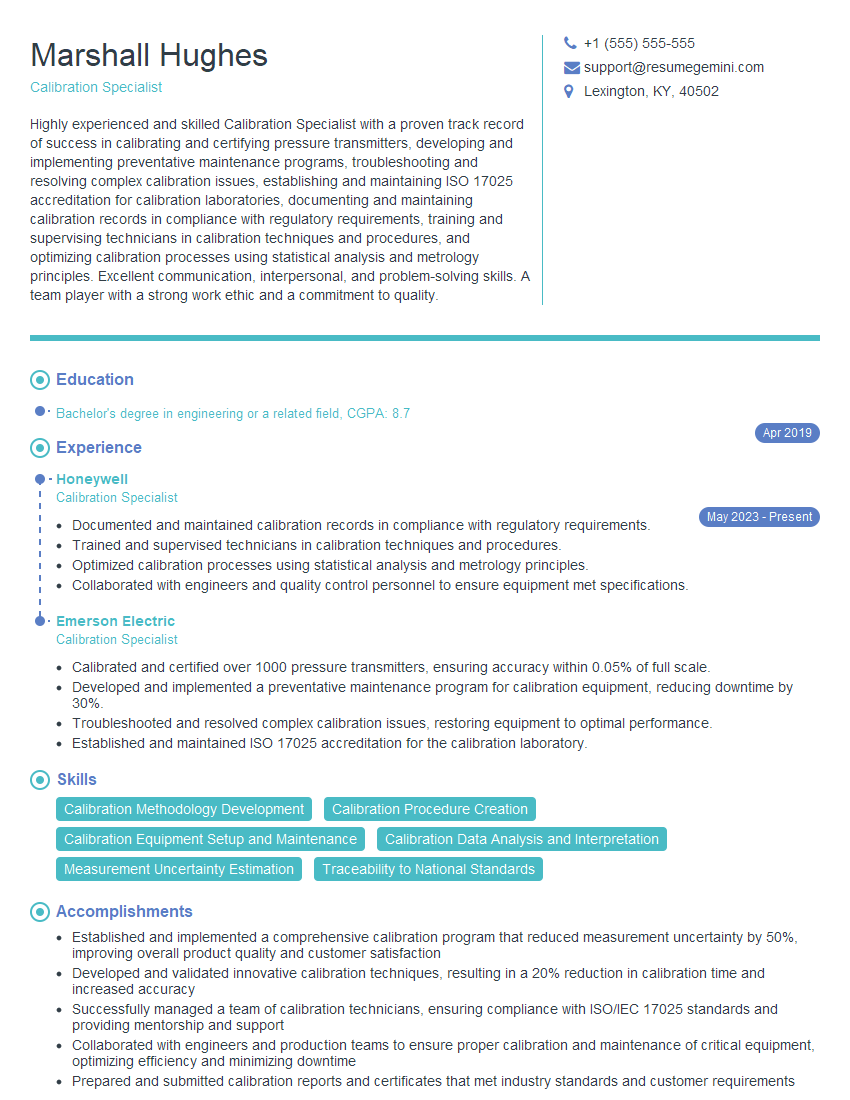

Mastering Metrology and Measurement Equipment Calibration opens doors to rewarding careers in quality control, manufacturing, and research. A strong understanding of these principles is highly valued by employers and significantly enhances your career prospects. To stand out in the job market, create a professional and ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource to help you build a compelling resume tailored to your specific industry. Leverage ResumeGemini’s expertise to craft a standout resume, and take advantage of the examples of resumes tailored to Metrology and Measurement Equipment Calibration provided to further enhance your application.
