Cracking a skill-specific interview, like one for Research databases (e.g., JSTOR, Web of Science), requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Research databases (e.g., JSTOR, Web of Science) Interview

Q 1. Explain the difference between JSTOR and Web of Science.

JSTOR and Web of Science are both prominent research databases, but they serve different purposes. Think of JSTOR as a massive digital library, primarily focusing on the full text of scholarly journals, books, and primary sources across various disciplines. Its strength lies in providing access to the complete articles themselves, allowing for in-depth reading and analysis. Web of Science, on the other hand, is a citation indexing service. It’s less about providing full-text articles and more about tracking the influence and relationships between publications. It excels at identifying highly cited papers, mapping research trends, and uncovering connections between different works. Essentially, JSTOR provides the content, while Web of Science provides the context and impact analysis.

In short: JSTOR = Full-text articles; Web of Science = Citation analysis and research mapping.

Q 2. Describe your experience using Boolean operators in database searches.

Boolean operators (AND, OR, NOT) are essential for refining database searches. They allow you to combine keywords to narrow or broaden your results. My experience involves using these operators extensively to build precise search strings. For example, if I’m researching the impact of social media on political polarization, I might use the search string: ("social media" OR "online platforms") AND ("political polarization" OR "political division"). Here, the OR operator broadens the scope within each parenthesis, while the AND operator ensures that both concepts are covered in the results. NOT excludes records that contain a term; for example, ("climate change" AND "mitigation") NOT "adaptation" drops every record that mentions adaptation. I use it sparingly, because it can also remove relevant papers that discuss both topics.

I’ve found that carefully constructed Boolean searches are crucial for efficiently navigating vast databases, significantly reducing the time spent sifting through irrelevant results. I always experiment with different combinations to optimize my search strategy. A poorly constructed search using only keywords will easily yield too many, or too few, results.
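As a rough illustration, the way such search strings are assembled can be sketched in Python. The helper name build_query is hypothetical and is not part of any database's API. The sketch assumes the target database accepts uppercase AND/OR/NOT and double-quoted phrases, which should be checked against each platform's search help.

```python
def build_query(concept_groups, exclude=None):
    """Join synonyms with OR inside each group, then join the groups with AND."""
    def quote(term):
        # Quote multi-word terms so they are searched as exact phrases.
        return f'"{term}"' if " " in term else term

    groups = ["(" + " OR ".join(quote(t) for t in group) + ")"
              for group in concept_groups]
    query = " AND ".join(groups)
    if exclude:
        # Each excluded term gets its own NOT clause.
        query += "".join(f" NOT {quote(t)}" for t in exclude)
    return query


query = build_query([
    ["social media", "online platforms"],
    ["political polarization", "political division"],
])
print(query)
```

Keeping each concept in its own list makes it easy to add or drop synonyms between search iterations without retyping the whole string.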

Q 3. How do you identify and mitigate bias in research results obtained from databases?

Identifying and mitigating bias is crucial in research. Database results aren’t immune; biases can stem from the selection of studies included, publication bias (studies with positive results are more likely to be published), or even the language used in the research itself. My approach involves a multi-step process:

- Awareness of Potential Biases: I start by being mindful of potential sources of bias, considering the demographics of the studied populations, the funding sources of the research, and the overall research methodology.

- Diversification of Search Strategies: I avoid relying on a single search strategy, exploring different keywords, databases, and search techniques. This helps to avoid a skewed sample of results.

- Critical Evaluation of Sources: I carefully examine the methodology of each study, looking for potential biases in sampling, data collection, and analysis. I pay attention to the limitations acknowledged by the authors.

- Seeking Diverse Perspectives: I actively search for studies that offer contrasting viewpoints or methodologies. This helps to identify potential limitations in existing research.

- Considering the Broader Context: I evaluate the findings in the context of the larger body of existing research, assessing whether the results align with the prevailing evidence or challenge existing understanding.

By employing these strategies, I aim to gain a more nuanced understanding of the research landscape and identify potential biases that might affect the interpretation of results.

Q 4. What are the key features of a well-structured database search strategy?

A well-structured database search strategy is akin to a well-crafted recipe—it requires careful planning and execution. Key features include:

- Clearly Defined Research Question: Begin with a specific and focused research question. This will guide the entire search process.

- Keyword Identification: Identify relevant keywords and synonyms, considering different terminology used in the field.

- Use of Boolean Operators: Utilize Boolean operators (AND, OR, NOT) to effectively combine keywords and refine search results.

- Controlled Vocabulary and Subject Headings: Employ subject headings or controlled vocabularies provided by the database to enhance precision.

- Iterative Search Refinement: Refine the search strategy based on initial results, adjusting keywords, operators, and search fields as needed. This is an iterative process, often needing multiple attempts.

- Documentation of Search Strategy: Meticulously record the search strategy used to ensure reproducibility and transparency.

Following these steps ensures a systematic and efficient approach to research, maximizing the chance of finding relevant and high-quality information.
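To make the documentation step concrete, here is a minimal sketch of a search log kept in Python. The class name SearchLogEntry, its fields, and the hit counts are all illustrative assumptions, not a standard schema or real search results.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class SearchLogEntry:
    database: str   # where the search was run
    query: str      # the exact search string used
    run_on: str     # ISO date of the search
    hits: int       # number of results returned
    filters: dict = field(default_factory=dict)  # date limits, subjects, etc.


# Placeholder entries: the hit counts are made up for illustration.
log = [
    SearchLogEntry("JSTOR", '"social media" AND "political polarization"',
                   "2024-01-15", 1200),
    SearchLogEntry("JSTOR", '("Twitter" OR "Facebook") AND "political polarization"',
                   "2024-01-15", 310, {"year_from": 2015}),
]

# Serialize the log so the strategy can be shared or rerun later.
log_json = json.dumps([asdict(entry) for entry in log], indent=2)
print(log_json)
```

Recording the exact string, the date, and the filters for every iteration is what lets someone else reproduce the search.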

Q 5. How do you evaluate the credibility and reliability of sources found in research databases?

Evaluating the credibility and reliability of sources is paramount in research. My approach considers several factors:

- Author Credibility: I assess the author’s expertise, affiliations, and publication history. Is the author a recognized expert in the field?

- Publication Venue: I examine the reputation and impact factor of the journal or publication where the source appeared. Is it a reputable, peer-reviewed journal?

- Methodology: I scrutinize the research methodology employed, evaluating the sample size, data collection methods, and statistical analysis. Are the methods rigorous and appropriate?

- Peer Review: I check if the source has undergone peer review, a crucial step in ensuring quality control.

- Evidence and Reasoning: I critically evaluate the evidence presented and the reasoning used to support the claims. Is the evidence compelling and logically sound?

- Date of Publication: I consider the recency of the publication, particularly in rapidly evolving fields. Older sources might be outdated.

- Citation Analysis: I examine the source’s citation count (if available) and the citation network to gauge its influence and impact.

By systematically evaluating these aspects, I can confidently determine the credibility and reliability of a source and its suitability for inclusion in my research.

Q 6. Explain the concept of citation indexing.

Citation indexing is a method of organizing and searching scholarly literature based on the citations between publications. Imagine a network where each paper is a node, and the links represent citations. Citation indexing databases, like Web of Science, track these citations, allowing researchers to trace the influence of a specific publication, identify related works, and map the evolution of research ideas over time. For instance, if a seminal paper on a particular topic is highly cited, the database can identify all subsequent publications that cited it, revealing the research lineage and evolution of understanding in that area. This allows researchers to understand the broader impact and context of a piece of research beyond just its individual merit.
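The network idea can be sketched in a few lines of Python. The paper identifiers and reference lists below are invented for illustration, and the function names are hypothetical. A real citation index such as Web of Science works at far larger scale, but the core step is the same: invert each paper's reference list into a "cited by" lookup.

```python
from collections import defaultdict

# Hypothetical reference lists: each paper maps to the papers it cites.
references = {
    "paper_B": ["paper_A"],
    "paper_C": ["paper_A", "paper_B"],
    "paper_D": ["paper_C"],
}


def build_citation_index(references):
    """Invert reference lists into a mapping: cited paper -> citing papers."""
    cited_by = defaultdict(set)
    for citing, cited_list in references.items():
        for cited in cited_list:
            cited_by[cited].add(citing)
    return cited_by


def descendants(cited_by, paper):
    """All papers that cite `paper` directly or through a chain of citations."""
    seen = set()
    stack = [paper]
    while stack:
        current = stack.pop()
        for citing in cited_by.get(current, ()):
            if citing not in seen:
                seen.add(citing)
                stack.append(citing)
    return seen


cited_by = build_citation_index(references)
direct = sorted(cited_by["paper_A"])                 # papers citing A directly
lineage = sorted(descendants(cited_by, "paper_A"))   # the full research lineage
print(direct, lineage)
```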

Q 7. What are the limitations of using keyword searches alone?

While keyword searches are a valuable starting point, relying on them alone has limitations. Keywords can be ambiguous, leading to irrelevant results. Different authors might use varying terminology to describe the same concept. Furthermore, keyword searches alone cannot capture the semantic relationships between concepts, potentially missing crucial studies. The lack of advanced search operators or the use of only simple keywords misses nuances in context. For example, searching for just “climate change” would yield vastly different results compared to a search using ("climate change" AND "economic impact"). The latter allows much more precise and relevant results for a more focused research need.

Therefore, a comprehensive search strategy should incorporate various techniques beyond basic keyword searches, including Boolean operators, subject headings, and citation tracking, to overcome these limitations and ensure comprehensive results.

Q 8. Describe your experience with different search engines (e.g., Google Scholar, PubMed).

My experience with research search engines spans years and includes extensive use of Google Scholar, PubMed, and specialized databases like JSTOR and Web of Science. Each platform offers a unique approach to searching. Google Scholar, for example, provides a broad, web-based search encompassing various academic disciplines, but its results require careful evaluation for quality and relevance. PubMed excels in biomedical literature, offering advanced filtering options based on publication type, date, and keywords. JSTOR focuses on archival material, providing access to a wealth of historical and scholarly journals. Web of Science offers a powerful citation indexing system, allowing for in-depth analysis of research impact and trends. I’m adept at leveraging the strengths of each platform based on my research needs; for a broad overview, I might start with Google Scholar, but for a focused search in a specific field, I’d directly utilize PubMed or JSTOR, refining my search strategy using advanced Boolean operators and filters.

For instance, when researching the impact of social media on political polarization, I might start with a Google Scholar search for general overview articles, then check PubMed for health-related and behavioral studies on the topic, and ultimately utilize JSTOR for historical context and potentially relevant case studies. I’m comfortable navigating the nuances of each engine’s interface and utilizing its advanced features to achieve optimal search results.

Q 9. How do you manage large datasets extracted from research databases?

Managing large datasets extracted from research databases requires a structured approach, combining efficient data management software with careful planning. I typically begin by exporting data in a standardized format like CSV or a database format such as SQLite. For very large datasets, I might work with relational database management systems (RDBMS) like PostgreSQL or MySQL, which can handle massive quantities of structured information efficiently. I utilize scripting languages like Python with libraries such as Pandas to manipulate, clean, and analyze the data. Pandas provides functions for data cleaning, filtering, sorting, and transformation, making it an indispensable tool for managing large datasets.

# Example Python code using Pandas to filter a large dataset
import pandas as pd

# 'large_dataset.csv', 'column1', and 'column2' are placeholder names
data = pd.read_csv('large_dataset.csv')

# Keep rows where column1 exceeds 10 and column2 equals 'value'
filtered_data = data[(data['column1'] > 10) & (data['column2'] == 'value')]

The key is to break down the process into manageable steps, regularly backing up your work and clearly documenting each stage. This allows for easy troubleshooting and collaboration. The choice of software will depend on the dataset size, storage capacity, and available computational resources. For example, smaller datasets might be managed using spreadsheet software, whereas larger datasets that require complex analysis may need a full-fledged RDBMS and specialized programming languages.

Q 10. Explain your experience with data cleaning and preprocessing techniques.

Data cleaning and preprocessing are crucial steps in any research project. My experience involves various techniques, tailored to the specific dataset. Common issues I address include handling missing values (through imputation or removal), dealing with inconsistencies in data formats (e.g., standardizing date formats), and identifying and removing outliers. I often utilize regular expressions to clean textual data and correct errors. For numerical data, I employ statistical methods to identify and handle outliers, considering the context of the research question.

For example, in a study analyzing survey responses, I might use mean/median imputation for missing values, ensuring the chosen method aligns with the nature of the data and research objectives. If there are inconsistencies in survey responses, I would carefully review them and potentially remove responses with multiple inconsistent answers. I often visualize the data using tools like matplotlib to identify patterns and areas needing cleaning. This iterative process helps me ensure data quality and accuracy, which is paramount for reliable research results.
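Two of the cleaning steps mentioned above, standardizing date formats and tidying text with regular expressions, might look like this in Python. The function names and the list of accepted date formats are assumptions for the sketch. Real data usually needs a longer format list and a decision about ambiguous day/month order.

```python
import re
from datetime import datetime

# Formats tried in order; "%d/%m/%Y" assumes day-first dates (an assumption).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y")


def normalize_date(raw):
    """Return an ISO 8601 date string, or None if no known format matches."""
    text = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean_text(raw):
    """Collapse runs of whitespace (including newlines) and trim the ends."""
    return re.sub(r"\s+", " ", raw).strip()


print(normalize_date("15/01/2024"))
print(clean_text("  Social   media\n use "))
```

Returning None for unparseable dates, rather than guessing, keeps the problem rows visible so they can be reviewed by hand.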

Q 11. How familiar are you with different database export formats (e.g., RIS, BibTeX)?

I am very familiar with various database export formats, including RIS, BibTeX, and EndNote. Each format serves a specific purpose. RIS (Research Information Systems) is a simple text-based format commonly used for exchanging citation information between different reference management software and databases. BibTeX is a widely used format, especially in LaTeX environments for creating bibliographies and managing references. EndNote is a proprietary format used by the EndNote reference management software. I can seamlessly convert between these formats using various tools and software, including dedicated converters and functionalities within reference management software. The choice of format depends on the intended use and compatibility requirements of the downstream applications or systems.

For example, when preparing a manuscript for publication, I’d typically export my references in BibTeX format if the journal uses LaTeX, or in RIS format if I’m using a different citation manager. Understanding these formats ensures smooth integration with various tools and prevents compatibility issues during the research workflow.
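To show how simple these text formats are, here is a minimal sketch that converts one RIS journal-article record to BibTeX. The sample record is invented, the function names are hypothetical, and only four common tags (AU, TI, JO, PY) are handled. In practice I would rely on a reference manager's own export for anything beyond a quick check.

```python
import re

# An RIS line is a two-character tag, two spaces, a hyphen, then the value.
RIS_LINE = re.compile(r"^([A-Z][A-Z0-9])  - ?(.*)$")

# Made-up record for illustration only.
SAMPLE_RIS = """TY  - JOUR
AU  - Doe, Jane
AU  - Roe, Richard
TI  - An Example Article Title
JO  - Journal of Examples
PY  - 2020
ER  - 
"""


def parse_ris(text):
    """Parse the first RIS record into a dict of tag -> list of values."""
    record = {}
    for line in text.splitlines():
        match = RIS_LINE.match(line)
        if not match:
            continue
        tag, value = match.group(1), match.group(2).strip()
        if tag == "ER":  # end-of-record marker
            break
        record.setdefault(tag, []).append(value)
    return record


def ris_to_bibtex(record, key):
    """Render a parsed journal-article record as a BibTeX @article entry."""
    fields = {
        "author": " and ".join(record.get("AU", [])),
        "title": record.get("TI", [""])[0],
        "journal": record.get("JO", [""])[0],
        "year": record.get("PY", [""])[0][:4],
    }
    body = ",\n".join(f"  {name} = {{{value}}}"
                      for name, value in fields.items() if value)
    return f"@article{{{key},\n{body}\n}}"


record = parse_ris(SAMPLE_RIS)
bibtex = ris_to_bibtex(record, "doe2020")
print(bibtex)
```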

Q 12. Describe your experience with database management systems (DBMS).

My experience with database management systems (DBMS) includes proficiency in relational databases like MySQL and PostgreSQL, as well as familiarity with NoSQL databases such as MongoDB. I understand the underlying concepts of database design, including normalization, indexing, and query optimization. I’m comfortable using SQL to query, manipulate, and manage data within these systems. This includes creating tables, defining relationships, writing complex queries (using joins, subqueries, and aggregate functions), and ensuring data integrity.

For example, in a project involving large-scale text analysis, I might use PostgreSQL to store and manage a corpus of text documents, utilizing indexing to speed up search operations. I’d use SQL queries to extract specific information, clean the data, and perform various analyses. My skills extend to optimizing database performance by implementing appropriate indexing strategies and tuning query execution plans.
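A small, self-contained sketch of these ideas follows, using Python's built-in sqlite3 module as a stand-in for PostgreSQL or MySQL. The table names, columns, and rows are invented for illustration. The SQL itself (a join, an aggregate, and an index on the filtered column) is standard and should carry over with little change.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database

# Normalized schema: authors are stored once and referenced by papers.
conn.executescript("""
    CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE papers (
        id        INTEGER PRIMARY KEY,
        title     TEXT NOT NULL,
        year      INTEGER NOT NULL,
        author_id INTEGER NOT NULL REFERENCES authors(id)
    );
    -- Index the column used in the WHERE clause below.
    CREATE INDEX idx_papers_year ON papers(year);
""")

# Placeholder rows for illustration only.
conn.executemany("INSERT INTO authors (id, name) VALUES (?, ?)",
                 [(1, "Author A"), (2, "Author B")])
conn.executemany("INSERT INTO papers (title, year, author_id) VALUES (?, ?, ?)",
                 [("Paper one", 2019, 1),
                  ("Paper two", 2021, 1),
                  ("Paper three", 2021, 2)])

# Join + aggregate: papers per author published in 2020 or later.
rows = conn.execute("""
    SELECT a.name, COUNT(*) AS n_papers
    FROM papers AS p
    JOIN authors AS a ON a.id = p.author_id
    WHERE p.year >= 2020
    GROUP BY a.name
    ORDER BY a.name
""").fetchall()
print(rows)
conn.close()
```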

Q 13. How do you handle copyright and licensing issues when using research databases?

Handling copyright and licensing issues when using research databases is crucial for ethical and legal compliance. I am meticulous in understanding the terms of use and licensing agreements associated with each database. This includes acknowledging the source of the material appropriately in my publications and reports. Databases often have varying licensing agreements, such as Creative Commons licenses or subscription-based access, which grant different levels of usage rights. I always adhere to these guidelines and ensure that any use falls within the permitted scope of the license.

For instance, when utilizing material from JSTOR, I carefully check the license associated with each article and ensure that my intended use aligns with the license terms. This might involve obtaining permission for uses that go beyond fair use and citing the material appropriately. I always prioritize responsible and ethical data usage to maintain academic integrity.

Q 14. How do you ensure data privacy and security when working with research data?

Data privacy and security are paramount in my research workflow. I adhere to ethical guidelines and regulations, such as GDPR and HIPAA, when handling sensitive data. My approach involves anonymizing or pseudonymizing data whenever possible, removing personally identifiable information before analysis and storage. I use secure storage methods, such as encrypted hard drives and cloud storage solutions with robust security features. I also employ access control measures, limiting access to sensitive data to only authorized personnel.

For sensitive data, I typically utilize data encryption both in transit and at rest. When working with datasets containing personally identifiable information, I would replace identifiers with pseudonyms to prevent re-identification. I prioritize responsible data management practices throughout the research lifecycle, from data collection to final analysis and archiving. This careful approach ensures the privacy and security of research participants and protects against data breaches.
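One common way to replace identifiers with pseudonyms is a keyed hash, sketched below with Python's standard hmac and hashlib modules. The key value, field names, and records are placeholders. In real use the key would be stored separately from the data, and whether this approach meets a given regulation's definition of pseudonymization is a question for the project's data protection review.

```python
import hashlib
import hmac

# Placeholder key: in practice, load it from a secret store, never from code.
SECRET_KEY = b"replace-with-a-key-stored-outside-the-dataset"


def pseudonymize(identifier, secret_key=SECRET_KEY):
    """Map an identifier to a stable pseudonym using HMAC-SHA256."""
    normalized = identifier.strip().lower()
    digest = hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256)
    # Truncated for readability; keep more characters for very large datasets.
    return "P-" + digest.hexdigest()[:12]


# Made-up records for illustration only.
records = [
    {"email": "participant1@example.org", "score": 4},
    {"email": "participant2@example.org", "score": 2},
    {"email": "Participant1@example.org ", "score": 5},  # same person, messy entry
]

pseudonymized = [
    {"participant": pseudonymize(r["email"]), "score": r["score"]}
    for r in records
]
print(pseudonymized)
```

Because the hash is keyed, the same participant always maps to the same pseudonym, so records can still be linked, but someone without the key cannot recompute the mapping from a list of known e-mail addresses.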

Q 15. Explain your experience using citation management software (e.g., Zotero, Mendeley).

Citation management software is indispensable for researchers. I’ve extensively used both Zotero and Mendeley, finding each offers unique strengths. Zotero, with its powerful browser extension, excels at quickly capturing citations directly from websites and PDFs. Its flexible organization system, using folders and collections, allows for highly customized management of research materials. Mendeley, on the other hand, offers a stronger collaborative aspect, facilitating easier sharing and group projects. I’ve found its built-in PDF annotation tools particularly useful for keeping track of notes and highlights directly within the research papers. My typical workflow involves using Zotero for initial citation capture and Mendeley for collaborative projects, leveraging the best features of both platforms. For instance, during my dissertation research, I used Zotero to manage my individual citations then later exported them to Mendeley to share annotated drafts with my advisor.

For larger projects, I’ve utilized the advanced features like creating custom citation styles and generating bibliographies in various formats. This ensures consistency and accuracy across different publications and projects. The ability to sync across devices is a crucial element, enabling seamless access to my citation library from anywhere.

Q 16. What are the ethical considerations of using research databases?

Ethical considerations when using research databases are paramount. They revolve around issues of copyright, plagiarism, and proper attribution. It’s crucial to respect intellectual property rights by only using materials as permitted by the database’s terms of service and copyright laws. This often includes adhering to fair use guidelines, considering the purpose and extent of the use. Furthermore, always properly cite any sources obtained from the database, accurately and completely, to avoid plagiarism. This includes not only direct quotations but also paraphrased information and ideas. Misrepresenting the source, even unintentionally, is unethical. Additionally, access to some databases comes at a cost either through institutional subscriptions or individual fees, hence respecting their terms of use, such as not sharing login credentials inappropriately, is essential for upholding the integrity of these resources.

For example, I once encountered a situation where a colleague was using figures from an article without proper attribution. I advised him on the importance of citing correctly, emphasizing that even slight alterations didn’t exempt him from the obligation. This highlights the responsibility researchers bear in upholding the ethical standards surrounding database usage.

Q 17. How do you stay up-to-date with new developments in research databases and technologies?

Keeping current with research database advancements is an ongoing effort. I subscribe to newsletters from major database providers (like Web of Science, JSTOR, etc.), which announce updates and new features. I regularly attend webinars and conferences focused on information science and library technologies, networking with other professionals in the field and learning about new database features and emerging research trends. I also actively follow relevant journals and blogs, such as those published by professional organizations like the American Library Association, which often cover technological developments impacting research databases. Finally, participating in online forums and professional groups on platforms like LinkedIn lets me learn about new tools and methodologies from other researchers and professionals.

A recent example is learning about the increasingly sophisticated search functionalities offered by many databases, including advanced Boolean operators and natural language processing techniques. This allows for more nuanced and effective research, a key factor in my productivity.

Q 18. Describe a time you had to troubleshoot a complex database search query.

During a literature review on the impact of social media on political polarization, I encountered a challenge in refining my search query on JSTOR. My initial search, using keywords like “social media” AND “political polarization,” yielded far too many irrelevant results. The problem was the broad nature of these terms and the absence of specific filters. To troubleshoot, I systematically refined my approach.

- Step 1: More Specific Keywords: I replaced “social media” with more precise terms like “Twitter” OR “Facebook” and refined “political polarization” with phrases like “political ideology” OR “election campaigns”.

- Step 2: Boolean Operators: I experimented with nested parentheses to control the order of operations. For example:

(("Twitter" OR "Facebook") AND ("political ideology" OR "election campaigns")) AND "United States"

- Step 3: Date Limiting: I added a date range to focus on recent studies, significantly reducing the number of irrelevant results.

- Step 4: Subject Filters: I used JSTOR’s subject headings to narrow down results to specific fields like political science or communication studies.

Through iterative refinement using these steps, I significantly improved the precision of my search results, obtaining highly relevant articles directly applicable to my research question. This experience reinforced the importance of a structured approach to database searching, combining precise keywords, Boolean logic, and the utilization of available filter options.

Q 19. How do you approach selecting the appropriate database for a specific research topic?

Choosing the right database depends on the research topic and its specific requirements. It’s akin to selecting the right tool for a specific job. Consider the following:

- Discipline: Databases specialize in specific fields. For example, PubMed is ideal for biomedical literature, while IEEE Xplore is excellent for engineering and computer science.

- Coverage: Some databases provide broad coverage, while others are more focused. JSTOR offers a vast archive across humanities and social sciences, whereas Web of Science concentrates heavily on citation data.

- Publication Type: Are you looking for journal articles, books, conference papers, or other materials? Different databases specialize in different types of publications.

- Search Features: Advanced search capabilities can be crucial. Features like Boolean operators, wildcard characters, and subject heading searches enhance search efficiency and precision.

For instance, researching the history of a specific painting would likely lead me to JSTOR’s art history collection, while research on a new algorithm would point me towards IEEE Xplore. A systematic approach, starting with an understanding of the subject and its literature, is key to selecting the optimal database.

Q 20. Explain the importance of metadata in research databases.

Metadata is data about data: the structured, descriptive information that makes research databases searchable and usable. Think of it as the library catalog system, providing details to help you find what you’re looking for. Without metadata, navigating the vast quantities of information in a database would be extremely difficult or even impossible. It includes elements such as:

- Title: The name of the article or book.

- Author(s): The individuals who created the work.

- Abstract: A summary of the work’s contents.

- Keywords: Descriptive terms identifying the work’s subject matter.

- Publication date: When the work was published.

- Journal title (for articles): Name of the journal where the article was published.

Accurate and complete metadata allows for effective searching and retrieval of relevant documents. It enables users to locate specific works efficiently, filtering by author, date, subject, etc. Well-structured metadata improves the discoverability of research and ensures its proper attribution, thus promoting scientific communication and advancement.
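The filtering that metadata makes possible can be sketched as follows. The Record class, its fields, and the sample entries are invented for illustration and do not reflect any particular database's schema.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    title: str
    authors: list
    year: int
    journal: str = ""
    keywords: list = field(default_factory=list)


def filter_records(records, author=None, year_from=None, keyword=None):
    """Return the records that match every criterion that was supplied."""
    matches = []
    for rec in records:
        if author and not any(author.lower() in a.lower() for a in rec.authors):
            continue
        if year_from is not None and rec.year < year_from:
            continue
        if keyword and keyword.lower() not in [k.lower() for k in rec.keywords]:
            continue
        matches.append(rec)
    return matches


# Made-up catalog entries for illustration only.
catalog = [
    Record("Example study one", ["Doe, Jane"], 2015,
           "Journal of Examples", ["polarization"]),
    Record("Example study two", ["Roe, Richard"], 2021,
           "Journal of Examples", ["social media", "polarization"]),
    Record("Example study three", ["Doe, Jane"], 2022,
           "Another Journal", ["climate"]),
]

recent_polarization = filter_records(catalog, year_from=2020, keyword="polarization")
by_doe = filter_records(catalog, author="doe")
print([r.title for r in recent_polarization], [r.title for r in by_doe])
```

If a record's keywords or year were missing or wrong, it would silently drop out of these results, which is why metadata quality matters so much for discoverability.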

Q 21. How do you assess the relevance of search results?

Assessing the relevance of search results requires a critical approach, going beyond simply looking at the title. I consider several factors:

- Abstract Review: I carefully read the abstract to determine if the paper addresses my research question or hypothesis. This provides a quick summary and helps to filter out irrelevant results.

- Keyword Matching: I check if the keywords used in the search query are present in the paper’s title, abstract, or keywords section.

- Author Expertise: The author’s reputation and publications in the field can indicate the reliability and relevance of the work.

- Publication Venue: The journal or publication where the work appeared holds significance. Reputable journals have a higher standard of peer review and can indicate greater reliability.

- Publication Date: The recency of the publication is important, especially in rapidly evolving fields. Older articles might be relevant but require careful assessment for their continued applicability.

- Citation Count: A high number of citations suggests the paper has had significant impact in the field, although this is not always an indicator of quality or relevance to my specific needs.

By systematically evaluating these factors, I can filter out irrelevant results and identify the most suitable articles for my research. This helps to manage the volume of information and focus on the studies that are most pertinent to my research objectives.

Q 22. How do you handle inconsistent or incomplete data in research databases?

Handling inconsistent or incomplete data is crucial for reliable research. Think of it like building a house – you can’t have a stable structure with weak foundations. In research databases, this means meticulously checking for errors and employing strategies to either correct or manage the problematic data.

My approach involves several steps:

- Data Cleaning: This involves identifying and correcting obvious errors like typos or inconsistencies in formatting (e.g., date formats). I use data cleaning tools within the database software or import the data into an environment such as R or Python for more extensive cleaning.

- Data Imputation: For missing values, I don’t simply delete the entire record. Instead, I consider appropriate imputation methods depending on the data type and the nature of the missingness. For example, I might use mean imputation for numerical data or mode imputation for categorical data, but I always acknowledge the limitations of these methods in my analysis.

- Data Validation: I rigorously check the data against known sources or established ranges. This helps identify outliers or values that are clearly incorrect. This often involves creating visualizations to spot anomalies.

- Sensitivity Analysis: When dealing with significant amounts of incomplete data, I perform sensitivity analyses to assess how much the results would change if I used different imputation methods or excluded incomplete data. This adds robustness to my findings.

For example, if I’m working with a database of clinical trial results and find missing dosages for some patients, I wouldn’t simply remove those patients. Instead, I’d explore imputation techniques – perhaps using the average dosage for similar patients – while transparently noting this limitation in my report.
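A bare-bones version of the sensitivity analysis described above compares the same estimate under different treatments of the missing values. The numbers below are invented for illustration, and the helper name impute is hypothetical. Real projects would typically use more principled methods, such as multiple imputation, alongside these simple ones.

```python
from statistics import mean, median

# Illustrative measurements; None marks a missing value.
values = [12.0, 15.0, None, 14.0, 40.0, None, 13.0]


def impute(values, fill):
    """Replace every missing value with a single fill value."""
    return [fill if v is None else v for v in values]


observed = [v for v in values if v is not None]

# The same statistic (the mean) under three ways of handling missing data.
estimates = {
    "complete_case": mean(observed),
    "mean_imputed": mean(impute(values, mean(observed))),
    "median_imputed": mean(impute(values, median(observed))),
}

for method, estimate in estimates.items():
    print(f"{method}: {estimate:.2f}")
```

Mean imputation leaves the mean unchanged by construction (while shrinking the variance), so comparing it with median imputation shows how much the outlier at 40 drives the result. If the conclusions differ noticeably across methods, that belongs in the limitations section.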

Q 23. Describe your experience with advanced search techniques in databases (e.g., proximity operators, wildcard characters).

Advanced search techniques are essential for efficiently retrieving relevant information from vast research databases. Think of them as powerful tools that let you precisely target your search, rather than relying on broad keywords.

My experience encompasses various techniques:

- Proximity Operators: These allow me to find results where specific terms appear within a certain distance of each other. For example, using NEAR/x in Web of Science (or ADJn on platforms such as Ovid) allows me to find documents where ‘climate change’ and ‘adaptation’ are mentioned close together, indicating a stronger relationship than if they were far apart.

- Wildcard Characters: These are incredibly useful when you’re unsure of the exact spelling or want to search for variations of a word. For example, using an asterisk (*) as a wildcard (e.g., organi*) in JSTOR would retrieve results containing ‘organic,’ ‘organizing,’ ‘organism,’ etc.

- Boolean Operators: These are foundational (AND, OR, NOT), allowing me to combine keywords to refine search results and improve precision. For instance, searching for ("climate change" AND "mitigation") NOT "carbon capture" will focus on climate change mitigation strategies that don’t involve carbon capture.

- Field Searching: Databases often allow you to specify the field in which to search (e.g., title, abstract, author). This significantly reduces irrelevant results. Targeting keywords to the abstract field, for example, is far more precise than a general search.

By combining these techniques, I can craft highly specific search strategies that maximize the relevance and minimize the number of irrelevant results. This is crucial when dealing with large databases with millions of records.
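To show what wildcard and proximity matching do under the hood, here is a small Python emulation. It is only a sketch of the concepts. The function names are hypothetical, and real databases tokenize text and count word distance according to their own rules, so results will not match any platform exactly.

```python
import re


def wildcard_to_regex(pattern):
    """Translate a truncation pattern such as 'organi*' into a whole-word regex."""
    escaped = re.escape(pattern).replace(r"\*", r"\w*")
    return re.compile(rf"\b{escaped}\b", re.IGNORECASE)


def within_n_words(text, term_a, term_b, n):
    """True if two single-word terms occur within n word positions of each other."""
    words = re.findall(r"\w+", text.lower())
    pos_a = [i for i, w in enumerate(words) if w == term_a.lower()]
    pos_b = [i for i, w in enumerate(words) if w == term_b.lower()]
    return any(abs(i - j) <= n for i in pos_a for j in pos_b)


sample = "Organic farming, organizing labor, and organisms in organ systems"
matches = [m.lower() for m in wildcard_to_regex("organi*").findall(sample)]
print(matches)

sentence = "Adaptation is central to climate policy"
print(within_n_words(sentence, "adaptation", "climate", 5))
print(within_n_words(sentence, "adaptation", "climate", 2))
```

Note that the wildcard skips "organ" because the pattern requires the letter "i" after "organ", which mirrors how truncation behaves in most databases.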

Q 24. What are the advantages and disadvantages of using different database types (e.g., relational, NoSQL)?

The choice between relational (SQL) and NoSQL databases depends heavily on the specific research project and data structure. Each has strengths and weaknesses.

- Relational Databases (SQL):

- Advantages: Excellent for structured data with well-defined relationships between entities; data integrity is well-maintained through constraints and ACID properties; mature technology with robust tools and support.

- Disadvantages: Scaling can be challenging and expensive; less flexible for handling unstructured or semi-structured data; schema rigidity can be limiting for evolving projects.

- NoSQL Databases:

- Advantages: Highly scalable and flexible; well-suited for large volumes of unstructured or semi-structured data (e.g., text, images); various models (document, key-value, graph) allow tailored data management.

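- Disadvantages: Data consistency can be more difficult to maintain, since many systems relax ACID guarantees in favor of scalability; tooling and query languages are less standardized than SQL's; complex queries, especially those that join data across collections, can be challenging.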

For example, a research project involving highly structured survey data with clear relationships between questions would likely benefit from a relational database. In contrast, a project dealing with large text corpora from social media might be better suited for a NoSQL document database due to its flexibility in handling semi-structured information.
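The contrast can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the table and field names are hypothetical, SQLite stands in for a relational database, and plain Python dictionaries stand in for the documents a NoSQL document store would hold.

```python
import json
import sqlite3

# Relational side: a fixed schema with constraints that protect data integrity.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript(
    """
    CREATE TABLE respondents (id INTEGER PRIMARY KEY, age INTEGER NOT NULL);
    CREATE TABLE answers (
        respondent_id INTEGER NOT NULL REFERENCES respondents(id),
        question TEXT NOT NULL,
        score INTEGER NOT NULL CHECK (score BETWEEN 1 AND 5)
    );
    """
)
conn.execute("INSERT INTO respondents (id, age) VALUES (1, 34)")
conn.execute("INSERT INTO answers VALUES (1, 'q1', 4)")

# The schema rejects an answer that points at a respondent who does not exist.
try:
    conn.execute("INSERT INTO answers VALUES (99, 'q1', 3)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

# Document side: each post is a self-describing record, and fields may differ per post.
posts = [
    {"user": "a", "text": "Great talk!", "hashtags": ["conf"]},
    {"user": "b", "text": "Photo from the lab", "media": {"type": "image"}},
]
with_media = [p for p in posts if "media" in p]

print(rejected, json.dumps(with_media))
```

The relational schema catches the bad row at insert time, while the document records accept new fields without any schema change. That trade-off between integrity and flexibility is the one described above.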

Q 25. How do you interpret and present findings from research databases effectively?

Interpreting and presenting findings effectively hinges on clear communication and a deep understanding of both the data and the research question. It’s not enough to simply report numbers; you need to tell a compelling story with the data.

My process involves:

- Data Summary: I start by summarizing key findings using descriptive statistics (mean, median, standard deviation, etc.) and relevant tables and charts. I use clear and concise language to avoid jargon.

- Statistical Analysis: I employ appropriate statistical tests to assess relationships between variables and to draw valid inferences. I carefully choose tests based on the data type and research question.

- Visualizations: I use graphs and charts to visually represent key findings and highlight patterns. I select visualizations appropriate to the data and the audience.

- Narrative Integration: I don’t simply present data; I weave it into a coherent narrative that addresses the research question and contextualizes the findings within the existing literature.

- Limitations Acknowledgment: It is crucial to acknowledge limitations of the data, methodology, and analysis. Transparency builds credibility.

For instance, rather than simply stating ‘correlation between X and Y is significant,’ I would explain the nature of the relationship, its practical implications, potential confounding factors, and how it contributes to the broader understanding of the research topic.
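To make the first two steps concrete, here is a minimal sketch using only Python's standard library. The numbers are invented for illustration, and Pearson's r is just one possible measure; the right test depends on the data type and research question.

```python
import math
import statistics


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: years since publication and citations for five articles.
years_since_publication = [1, 2, 3, 4, 5]
citations = [2, 4, 5, 4, 5]

# Data summary: descriptive statistics for the outcome variable.
summary = {
    "mean": statistics.fmean(citations),
    "median": statistics.median(citations),
    "stdev": statistics.stdev(citations),  # sample standard deviation
}

# Statistical analysis: strength and direction of the linear relationship.
r = pearson_r(years_since_publication, citations)

print(summary)
print(f"r = {r:.2f}")  # r = 0.77
```

A coefficient like this is only the starting point for the narrative: with five data points it says little on its own, which is exactly the kind of limitation worth stating alongside the result.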

Q 26. Describe your experience with data visualization techniques used to present information from databases.

Data visualization is key to making complex information easily understandable. I’ve used a range of techniques to effectively communicate insights from databases.

My experience includes:

- Bar charts and histograms: For comparing frequencies or distributions of categorical and numerical data.

- Scatter plots: To explore relationships between two numerical variables.

- Line graphs: To show trends over time.

- Heatmaps: To visualize correlations or other matrix-like data.

- Interactive dashboards: To allow users to explore the data themselves and create custom visualizations.

- Geographic Information Systems (GIS): To map spatial data and visualize geographic patterns.

Tools like Tableau, Power BI, and R’s ggplot2 are invaluable. The choice of visualization depends on the data and the message I want to convey. A cluttered or inappropriate visualization can obscure rather than clarify findings, so I always strive for clarity and simplicity.
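Whatever tool draws the chart, a histogram starts with the same step: grouping values into bins and counting them. The sketch below shows that step with the standard library and a plain-text rendering. The function name and the data are hypothetical, and a real project would hand the counts to one of the tools above.

```python
from collections import Counter


def histogram_bins(values: list[float], bin_width: float) -> dict[float, int]:
    """Count values per bin; each key is the lower edge of its bin."""
    counts = Counter((v // bin_width) * bin_width for v in values)
    return dict(sorted(counts.items()))


# Hypothetical data: publication years of the records a search returned.
years = [2003, 2007, 2011, 2012, 2015, 2016, 2018, 2019, 2021, 2022, 2023]

bins = histogram_bins(years, 10)
for lower_edge, count in bins.items():
    # One '#' per record, so the bar length shows the frequency per decade.
    print(f"{int(lower_edge)}s  {'#' * count}")
```

The bin width is a design choice: decades keep this chart simple, while narrower bins would show more detail at the cost of a noisier picture.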

Q 27. How do you collaborate with others when working on research projects that utilize databases?

Collaboration is essential for successful research projects. My approach emphasizes clear communication, shared understanding, and efficient workflows.

Key aspects of my collaboration strategy:

- Project Planning: We define clear roles, responsibilities, and timelines upfront. This prevents confusion and ensures everyone is on the same page.

- Data Sharing: We use version control systems (e.g., Git) and collaborative platforms (e.g., Google Drive, SharePoint) to securely share data and code. This promotes transparency and prevents data silos.

- Regular Meetings: We have regular meetings to discuss progress, address challenges, and maintain alignment on goals. These meetings are structured and action-oriented.

- Clear Communication: We utilize various communication tools (email, instant messaging, project management software) to ensure timely and effective communication.

- Documentation: We maintain meticulous documentation of the entire research process, including data cleaning, analysis methods, and interpretations. This enhances reproducibility and future collaboration.

For instance, on a recent project involving large genomic datasets, we used a cloud-based platform to securely store and share the data, and Git to track all code changes. We held weekly meetings to review analysis plans and ensure consistency in the methodology.

Q 28. Describe a time you had to learn a new database or software quickly.

During a sentiment analysis project on social media data, we needed to use a NoSQL database (MongoDB) that no one on our team had experience with. I approached this challenge systematically.

My strategy included:

- Online Tutorials and Documentation: I spent several days working through MongoDB’s official tutorials and documentation. This provided a foundational understanding of its core features.

- Hands-on Practice: I set up a small test instance of MongoDB and experimented with different queries and data manipulations to reinforce my learning.

- Community Resources: I utilized online forums and communities (like Stack Overflow) to address specific questions and challenges.

- Collaboration with Team: I shared my learning with the team and we collaboratively worked through examples, troubleshooting issues together.

Within a week, I was comfortable enough to contribute effectively to the project, helping design the database schema and performing data analysis. This experience highlighted the importance of self-directed learning and collaborative problem-solving when faced with new technologies.

Key Topics to Learn for Research Databases (e.g., JSTOR, Web of Science) Interview

- Database Search Strategies: Understanding Boolean operators, truncation, wildcard characters, and advanced search techniques to efficiently locate relevant research materials.

- Citation Management & Analysis: Proficiency in utilizing citation management tools and interpreting citation counts, impact factors, and other bibliometric indicators to assess research impact.

- Database-Specific Features: Familiarizing yourself with the unique features and functionalities of JSTOR and Web of Science, including their respective strengths and limitations for different research needs.

- Data Extraction & Analysis: Knowing how to effectively extract relevant data from these databases and potentially perform basic analysis using spreadsheet software or other tools.

- Ethical Considerations: Understanding issues related to plagiarism, copyright, and responsible use of research databases.

- Data Organization & Presentation: Demonstrating the ability to organize and present extracted data in a clear and concise manner, suitable for reports or presentations.

- Troubleshooting & Problem Solving: Developing the capacity to identify and resolve common issues encountered when using these databases, such as search errors or data inconsistencies.

- Different Research Methodologies & Database Applications: Connecting the use of databases to various research methodologies (qualitative, quantitative) and showing how the databases support these approaches.
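For the bibliometric indicators mentioned under Citation Management & Analysis, it can help to know how one is actually computed. As an example, the h-index (a widely used indicator, not one named in the list above) is the largest number h such that h publications each have at least h citations. A minimal sketch with invented citation counts:

```python
def h_index(citations: list[int]) -> int:
    """Largest h such that h publications have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Hypothetical citation counts for one author's five publications.
print(h_index([10, 8, 5, 4, 3]))  # 4
print(h_index([25, 8, 5, 3, 3]))  # 3
```

The second example shows why the indicator needs interpreting: one very highly cited paper does not raise the h-index on its own.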

Next Steps

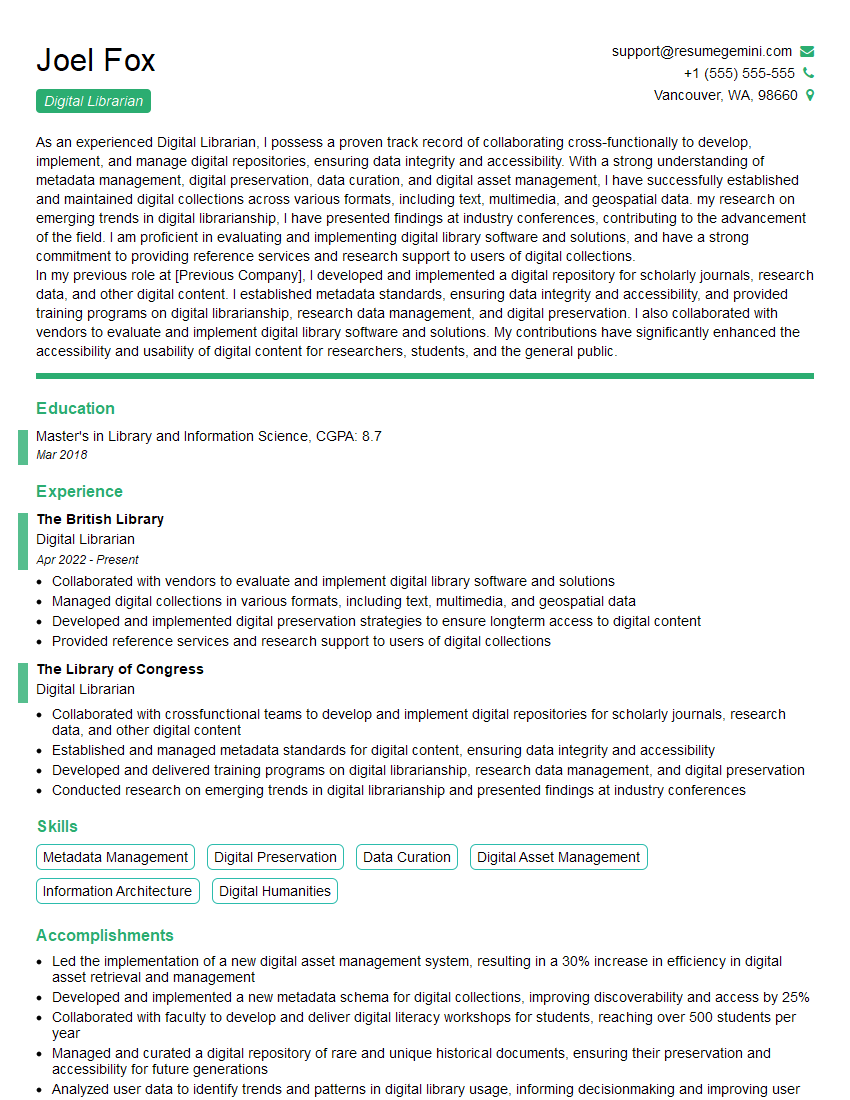

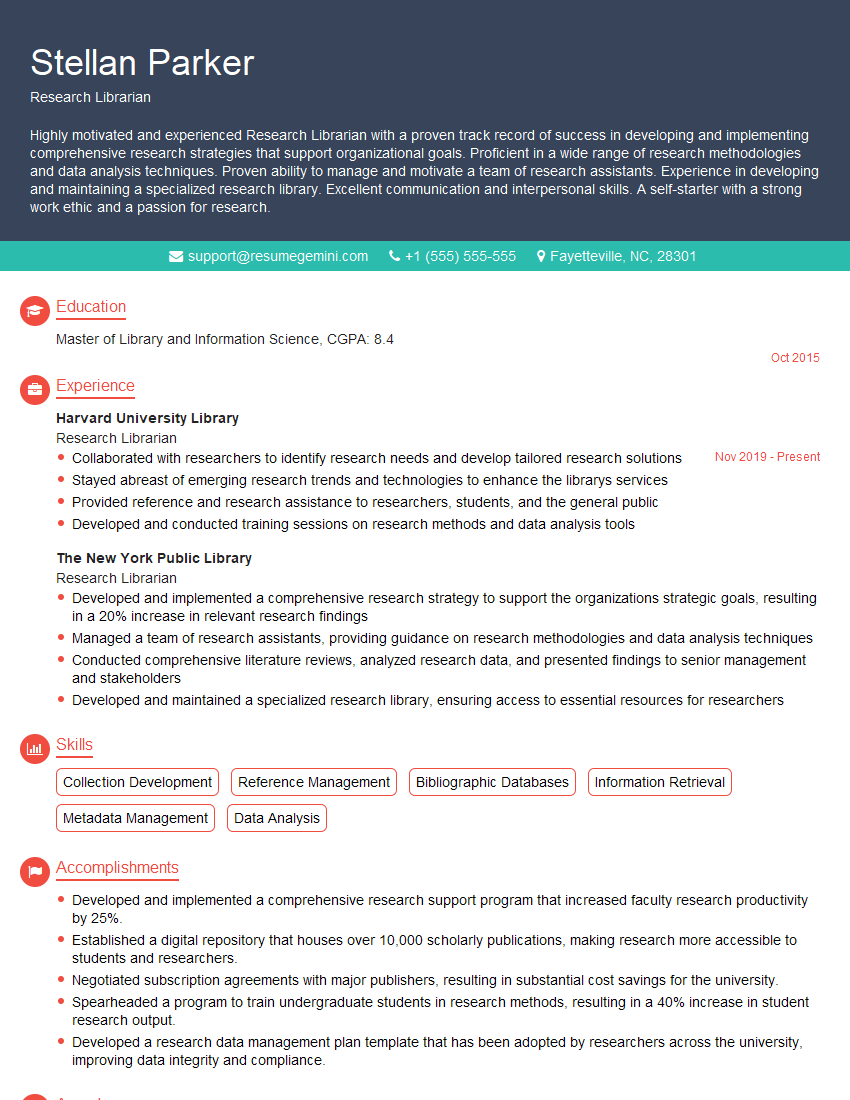

Mastering research databases like JSTOR and Web of Science is crucial for career advancement in academic research, data analysis, and information management. Proficiency in these tools demonstrates valuable skills and significantly enhances your research capabilities. To maximize your job prospects, it’s essential to present these skills effectively on your resume. Creating an ATS-friendly resume will help your application stand out. ResumeGemini is a trusted resource that can help you build a professional and impactful resume that showcases your skills effectively. Examples of resumes tailored to showcasing experience with research databases like JSTOR and Web of Science are available within ResumeGemini to help you build yours.
