Preparation is the key to success in any interview. In this post, we’ll explore crucial Troubleshooting Techniques and Root Cause Analysis interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Troubleshooting Techniques and Root Cause Analysis Interview

Q 1. Describe your approach to troubleshooting a complex technical issue.

My approach to troubleshooting complex technical issues is systematic and methodical. I follow a structured process that prioritizes understanding the problem before attempting solutions. It begins with clearly defining the problem: what is the specific error, what are the observable symptoms, and what is the impact? Next, I gather information by checking logs, monitoring system performance, and interviewing users or other relevant personnel. This data helps me formulate hypotheses about the root cause. Then, I test these hypotheses in a controlled manner, isolating variables and progressively narrowing down possibilities. I always document each step, including my findings, hypotheses, and actions taken. This documentation is crucial for future reference and collaboration. Finally, I verify the solution to ensure it resolves the problem completely and doesn’t introduce new issues. This entire process resembles a detective investigation – gathering clues, forming theories, testing them, and confirming the solution.

For instance, if a web application is slow, I wouldn’t immediately assume database issues. I’d first check server resource utilization (CPU, memory, disk I/O), network latency, and the application logs for error messages. Only after a systematic investigation would I focus on the database as a potential cause.

Q 2. Explain the 5 Whys technique and provide an example of its application.

The 5 Whys technique is a simple yet powerful iterative questioning technique used to explore cause-and-effect relationships. By asking “Why?” repeatedly, typically about five times (or until the root cause is identified), you progressively drill down to the fundamental reason behind a problem. It’s a great tool for uncovering underlying issues that might not be immediately apparent.

Example: Let’s say a website is down.

- Why? Because the web server isn’t responding.

- Why? Because the server’s hard drive is full.

- Why? Because the log files are consuming excessive space.

- Why? Because an error in the application is generating large, un-rotated log files.

- Why? Because there was a coding error in the latest deployment that resulted in excessive log entries.

The root cause: a coding error in the recent deployment. Addressing this coding error resolves the website downtime. Note that while five “Whys” is a guideline, the process stops when a satisfactory root cause is found, which may require more or fewer iterations.

Q 3. What are some common root cause analysis methodologies you’ve used?

Throughout my career, I’ve employed several root cause analysis (RCA) methodologies, including the 5 Whys (as described above), Fishbone Diagrams (Ishikawa diagrams), Fault Tree Analysis (FTA), and Pareto Analysis. Each has its strengths depending on the complexity and nature of the problem.

- Fishbone Diagrams: Visually represent potential causes categorized by factors like people, processes, materials, machines, environment, and methods. This facilitates brainstorming and collaborative problem-solving.

- Fault Tree Analysis: A top-down approach that uses a tree-like structure to identify potential causes that lead to a specific failure. It’s particularly effective for complex systems where multiple events can contribute to a failure.

- Pareto Analysis: Focuses on identifying the 20% of causes that contribute to 80% of the effects (the Pareto principle). It helps prioritize efforts towards addressing the most impactful causes.

The choice of methodology depends on the context. For simple issues, 5 Whys might suffice. For complex, multi-faceted problems, a Fishbone Diagram or Fault Tree Analysis provides a more structured and comprehensive approach.

Q 4. How do you prioritize multiple technical issues simultaneously?

Prioritizing multiple technical issues involves a combination of factors: urgency, impact, and feasibility. I employ a matrix-based approach. Each issue is evaluated based on its urgency (how quickly it needs resolving) and its impact (how severely it affects the business or users). Issues are categorized into quadrants based on this assessment. This allows me to focus on high-urgency, high-impact issues first. I also consider feasibility – how easily or quickly can the issue be resolved? A simple, quick fix might be prioritized over a complex, time-consuming one even if the latter has a higher overall impact.

For example, a critical system outage affecting all users would be a high-urgency, high-impact issue, taking precedence over a low-impact performance degradation on a rarely used feature.
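
The matrix described above can be sketched in a few lines of Python. This is a minimal illustration, not a standard scheme: the 1 to 5 scales, the threshold of 3, the `Issue` fields, and the use of estimated effort as a tie-breaker are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    urgency: int         # 1 (low) to 5 (high); hypothetical scale
    impact: int          # 1 (low) to 5 (high); hypothetical scale
    effort_hours: float  # rough estimate of time to fix (feasibility)


def quadrant(issue: Issue, threshold: int = 3) -> int:
    """Return 1 for high-urgency/high-impact down to 4 for low/low."""
    high_urgency = issue.urgency >= threshold
    high_impact = issue.impact >= threshold
    if high_urgency and high_impact:
        return 1
    if high_impact:
        return 2
    if high_urgency:
        return 3
    return 4


def prioritize(issues):
    # Quadrant first, then combined urgency x impact, then quicker fixes first.
    return sorted(
        issues,
        key=lambda i: (quadrant(i), -(i.urgency * i.impact), i.effort_hours),
    )


issues = [
    Issue("Slow report on a rarely used page", urgency=1, impact=1, effort_hours=2),
    Issue("Full outage for all users", urgency=5, impact=5, effort_hours=4),
    Issue("Checkout errors for some users", urgency=4, impact=4, effort_hours=1),
]

for issue in prioritize(issues):
    print(quadrant(issue), issue.name)
```

In practice the scores come from judgment and agreed service levels; the code only makes the ordering explicit and repeatable.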

Q 5. Describe a time you identified a root cause that others missed.

In a previous role, we experienced intermittent failures in our payment processing system. The initial troubleshooting focused on database performance and network connectivity. While these aspects were reviewed, the problem persisted. I suspected a less obvious cause: the interaction between our payment gateway and a newly implemented security feature. Others had overlooked this interaction due to the feature’s seemingly unrelated nature. By meticulously tracing the system’s logs and examining the interactions during the failures, I identified a timing issue where the security check was inadvertently blocking legitimate transactions under specific load conditions. This was confirmed by recreating the issue in a test environment and the fix resolved the intermittent failures.

Q 6. How do you document your troubleshooting process?

Thorough documentation is essential. I maintain a detailed record of my troubleshooting process using a combination of methods. I use a ticketing system to track each issue, including the initial report, steps taken, findings, and the ultimate resolution. This also helps with reproducibility. For more complex issues, I supplement this with comprehensive internal documentation which might include: detailed logs, screenshots, diagrams illustrating system architecture or flowcharts showing the steps I took in my investigation. This ensures transparency, facilitates collaboration, and serves as a valuable knowledge base for future reference. It also allows for a review of my process and opportunities for continuous improvement.

Q 7. Explain the difference between reactive and proactive troubleshooting.

Reactive troubleshooting addresses problems *after* they occur. It’s a response to an immediate issue; for example, fixing a server crash or resolving a user complaint. Proactive troubleshooting, on the other hand, aims to prevent issues *before* they happen. This involves regularly monitoring systems, identifying potential vulnerabilities, and implementing preventative measures. Think of it like this: reactive troubleshooting is like putting out a fire; proactive troubleshooting is like installing a fire suppression system.

Proactive approaches include scheduled system backups, regular security audits, capacity planning, and implementing robust monitoring and alerting systems. These can significantly reduce downtime and improve system reliability.

Q 8. How do you handle situations where the root cause is unclear or difficult to identify?

When the root cause isn’t immediately obvious, I employ a systematic approach. Think of it like detective work – you need to gather evidence and eliminate possibilities. I begin by meticulously documenting all observable symptoms, focusing on inconsistencies and patterns. This might involve reviewing logs, checking system metrics, and interviewing users or other technical staff.

Next, I utilize a combination of techniques. 5 Whys is a great starting point – repeatedly asking ‘why’ to drill down to the underlying issue. I also employ hypothesis testing, formulating potential root causes and designing experiments or observations to validate or refute them. For instance, if I suspect a network issue, I’ll run ping tests and traceroutes. If the problem seems related to a specific software component, I might isolate it and test its functionality in a controlled environment. Sometimes, this requires escalating the issue to a senior engineer or utilizing specialized tools.

If the problem remains elusive after extensive investigation, I document all findings, propose interim solutions to mitigate the impact, and escalate for further expertise or consider bringing in specialized external support. The key is to remain methodical and persistent, always recording my steps to avoid repeating mistakes and to ensure transparency.

Q 9. How do you effectively communicate technical issues to non-technical stakeholders?

Communicating technical issues to non-technical stakeholders requires translating complex information into easily digestible language. I avoid jargon and technical terms whenever possible, using simple analogies and relatable examples. For instance, instead of saying ‘database deadlock,’ I might explain it as ‘two processes trying to use the same information at the same time, creating a traffic jam.’

I often use visuals like flowcharts, diagrams, or even simple drawings to illustrate the problem and its impact. I focus on the consequences of the issue – the business impact, rather than just the technical details. For example, instead of saying ‘the application server is down,’ I’d say ‘customers are unable to place orders, resulting in lost revenue.’

I also tailor my communication to the audience. A report to executive management will focus on high-level impacts and proposed solutions, whereas a communication to a team might involve more technical detail. Regular updates and proactive communication build trust and ensure everyone stays informed.

Q 10. Describe your experience with using diagnostic tools.

My experience with diagnostic tools is extensive and spans various operating systems and applications. I’m proficient in using tools like Wireshark for network analysis, identifying bottlenecks and security vulnerabilities. I’m skilled with log analysis tools like Splunk or ELK stack, allowing me to quickly sift through vast amounts of data to identify error patterns. I also have experience with system monitoring tools like Nagios or Prometheus, which help me proactively identify performance degradations before they become critical issues.

Furthermore, I’m comfortable using language-specific debugging tools, such as the debuggers available for Python or Java. These tools help me step through code, inspect variables, and identify the exact point of failure during runtime. The choice of tool always depends on the specific issue; my experience allows me to select the most appropriate tool for the task at hand. For example, if a server is exhibiting unusual CPU spikes, I would use a system monitoring tool, while an intermittent application error would call for a debugger or log analyzer.

Q 11. What metrics do you use to measure the effectiveness of your troubleshooting efforts?

Measuring the effectiveness of my troubleshooting efforts relies on both qualitative and quantitative metrics. Quantitatively, I track metrics like Mean Time To Resolution (MTTR) – the average time it takes to fix an issue, and Mean Time Between Failures (MTBF) – the average time between system failures. A decrease in MTTR signifies improvement in my efficiency. Improved MTBF indicates an increase in system stability and reliability.
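
As a rough sketch of how these two numbers can be computed, the following Python uses a hypothetical list of incidents, each recorded as a (failure start, service restored) pair. MTBF is taken here as the average uptime between one recovery and the next failure; some teams measure it from failure start to failure start instead, so treat the definition as an assumption.

```python
from datetime import datetime, timedelta

# Hypothetical incident records: (failure start, service restored)
incidents = [
    (datetime(2024, 1, 1, 10, 0), datetime(2024, 1, 1, 11, 0)),
    (datetime(2024, 1, 8, 9, 0), datetime(2024, 1, 8, 9, 30)),
    (datetime(2024, 1, 20, 14, 0), datetime(2024, 1, 20, 16, 0)),
]


def mttr(incidents):
    """Mean time to resolution: average of (restored - started)."""
    total = sum((end - start for start, end in incidents), timedelta())
    return total / len(incidents)


def mtbf(incidents):
    """Mean time between failures: average uptime between a recovery
    and the start of the next failure. Needs at least two incidents."""
    ordered = sorted(incidents)
    gaps = [
        next_start - prev_end
        for (_, prev_end), (next_start, _) in zip(ordered, ordered[1:])
    ]
    return sum(gaps, timedelta()) / len(gaps)


print("MTTR:", mttr(incidents))
print("MTBF:", mtbf(incidents))
```

Tracking both values per quarter, rather than as a single lifetime figure, makes it easier to see whether changes to process or tooling are having an effect.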

Qualitatively, I assess the thoroughness of the root cause analysis, ensuring the issue is truly resolved and not just masked. I also consider customer satisfaction, gauged through feedback and surveys. Did the solution effectively address the problem, and was the communication clear and helpful? These qualitative factors are crucial to ensuring long-term improvements and preventing future recurrences. Regular review and analysis of these metrics, alongside post-incident reviews with my team, are essential for continuous improvement.

Q 12. How do you determine the severity of a technical issue?

Determining the severity of a technical issue involves considering several factors. Impact is paramount – how many users are affected? What is the business impact? Is there financial loss, disruption to operations, or safety implications?

Urgency is equally important – how quickly does the issue need to be resolved? Is it critical to business operations, causing immediate financial loss or safety risk? Or is it a minor inconvenience with a longer acceptable resolution time? I use a severity matrix that weighs these factors. For example, a critical issue might be a complete system outage affecting all users, while a low-severity issue could be a minor visual bug affecting a small percentage of users. This matrix helps prioritize my work and allocate resources effectively.

Q 13. Explain your understanding of fault trees and their use in RCA.

A fault tree is a top-down, hierarchical diagram used in root cause analysis to visually represent the causes and contributing factors leading to a specific event or failure. The top node represents the undesired event (the failure), and the subsequent branches progressively break down the event into its root causes. Each branch uses Boolean logic (AND, OR) to illustrate the relationship between contributing factors.

For example, a fault tree for a server outage might have ‘Server Outage’ as the top node. This could be caused by (OR) either ‘Hardware Failure’ or ‘Software Error.’ ‘Hardware Failure’ might be further broken down into (OR) ‘Power Supply Failure’ or ‘Hard Drive Crash,’ since either one alone is enough to take the server down. An AND gate applies where there is redundancy: with dual power supplies, power is lost only if the primary supply fails AND the backup supply fails. Fault trees aid in identifying multiple potential root causes, often revealing surprising interactions or dependencies between seemingly unrelated factors. By systematically analyzing the tree, we can identify critical points for preventative maintenance or process improvement.
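
A fault tree of this kind can also be evaluated mechanically. The sketch below is a minimal illustration in Python; the `Event` class, the event names, and the redundant power supply arrangement are assumptions chosen for the example, not a standard library or notation.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    name: str
    gate: str = "BASIC"  # "AND", "OR", or "BASIC" for a leaf event
    children: list = field(default_factory=list)


def occurs(event, failed):
    """Return True if the event occurs, given the set of failed basic events."""
    if event.gate == "BASIC":
        return event.name in failed
    results = [occurs(child, failed) for child in event.children]
    # AND: every child must occur; OR: any single child is enough.
    return all(results) if event.gate == "AND" else any(results)


# Hypothetical tree: the outage happens on a software error, or when
# both redundant power supplies fail together.
tree = Event("Server Outage", "OR", [
    Event("Power Loss", "AND", [
        Event("Primary PSU Failure"),
        Event("Backup PSU Failure"),
    ]),
    Event("Software Error"),
])

print(occurs(tree, {"Primary PSU Failure"}))
print(occurs(tree, {"Primary PSU Failure", "Backup PSU Failure"}))
```

Running combinations of basic events through the tree shows which single failures are tolerated and which combinations are not, which is the information preventative maintenance planning needs.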

Q 14. How do you balance speed and accuracy in troubleshooting?

Balancing speed and accuracy in troubleshooting requires a strategic approach. While speed is crucial to minimize downtime and impact, rushing can lead to inaccurate diagnoses and ineffective solutions. I start by quickly gathering initial information to understand the problem’s scope and severity. This quick assessment helps prioritize critical issues and avoid wasting time on low-impact problems. Then, I transition into a more methodical investigation, utilizing diagnostic tools and techniques described earlier.

Applying the Pareto principle (80/20 rule) is helpful; 80% of the problems often stem from 20% of the causes. By focusing on identifying the most likely causes first, we can often resolve the problem faster. Simultaneously, I communicate updates regularly to stakeholders, setting expectations and managing their anxiety. This approach ensures a rapid response without sacrificing the thoroughness required for accurate root cause identification and a long-term solution. It’s a balance between fast initial response, methodical investigation, and clear communication.

Q 15. Describe your experience with escalation procedures.

Escalation procedures are crucial for handling complex or critical issues that exceed an individual’s or team’s capabilities. My experience involves a multi-layered approach, starting with internal attempts at resolution. If the problem persists, I follow a defined escalation path, typically involving progressively senior engineers or managers. This ensures appropriate expertise and resources are deployed. For instance, in a previous role, a persistent network outage wasn’t resolving with standard troubleshooting. I followed our escalation protocol, contacting the network administrator team after exhausting local solutions. Their advanced tools and knowledge quickly pinpointed a faulty router, resolving the issue within an hour. Effective communication during the escalation is key – clear, concise updates to those involved are vital for transparency and efficient problem resolution. This includes using a ticketing system to maintain a complete history of the issue, actions taken, and the current status.

Q 16. How do you prevent recurring issues after root cause analysis?

Preventing recurring issues after root cause analysis (RCA) is paramount. My approach involves a three-pronged strategy: implementing corrective actions to address the root cause directly, implementing preventative measures to stop similar issues from happening again, and enhancing monitoring to detect potential problems early. For example, if an RCA reveals inadequate error logging as the cause of a system crash, the corrective action would be to improve logging capabilities. Preventative measures might involve implementing automated alerts for critical error thresholds. Enhanced monitoring could include setting up real-time dashboards to visualize system health and identify potential anomalies before they escalate into outages. Finally, documenting all changes and lessons learned in a centralized knowledge base ensures that future troubleshooting efforts can leverage past experiences. This proactive approach minimizes downtime and improves overall system reliability.
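
An automated alert on an error threshold can be as simple as a sliding-window count. The following Python is a minimal sketch under stated assumptions: the `ErrorRateAlert` class, the window size, and the threshold are hypothetical, and a real deployment would normally rely on the alerting rules of its monitoring system rather than hand-written code.

```python
from collections import deque


class ErrorRateAlert:
    """Fire when the errors summed over the last `window` samples
    reach `threshold`. Both values are illustrative assumptions."""

    def __init__(self, window, threshold):
        self.samples = deque(maxlen=window)  # oldest sample drops off automatically
        self.threshold = threshold

    def record(self, error_count):
        """Add the error count for the latest interval; return True to alert."""
        self.samples.append(error_count)
        return sum(self.samples) >= self.threshold


alert = ErrorRateAlert(window=3, threshold=10)
for minute, errors in enumerate([2, 3, 6, 0]):
    if alert.record(errors):
        print(f"minute {minute}: error threshold reached")
```

Summing over a window instead of reacting to a single sample avoids paging someone for a one-off blip while still catching a sustained rise.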

Q 17. What is your preferred method for documenting solutions to prevent future issues?

My preferred method for documenting solutions is using a wiki-style knowledge base, accessible to all relevant team members. This allows for collaborative updates and ensures everyone has access to the latest information. The documentation should include a clear description of the problem, the steps taken to diagnose and resolve it, the root cause analysis, and preventative measures implemented. I also like to include screenshots, diagrams, and code snippets where relevant, making the documentation more user-friendly and comprehensive. We use a consistent template to ensure consistency and ease of retrieval. Consider this example: If a specific database query repeatedly caused performance bottlenecks, the documentation would detail the query, its performance impact, the optimization techniques used (e.g., indexing, query rewriting), and any preventative measures, like automated query analysis or performance monitoring thresholds.

Q 18. How do you use data analysis in your troubleshooting process?

Data analysis is integral to my troubleshooting process. I use various tools and techniques to analyze logs, metrics, and other data sources to identify patterns, anomalies, and potential root causes. For instance, analyzing server logs might reveal error spikes correlated with specific user actions or system events. Tools like Splunk or ELK stack are incredibly helpful for this. I also leverage performance monitoring data to pinpoint bottlenecks or resource constraints. In one instance, analyzing network traffic data revealed a significant increase in bandwidth consumption during specific hours, leading us to identify and resolve a poorly configured application that was inadvertently consuming excessive bandwidth. This data-driven approach allows for more accurate diagnosis and prevents reliance on guesswork, leading to faster and more effective problem resolution.
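
When a dedicated log platform isn't available, the same kind of spike detection can be approximated with a short script. This sketch assumes a hypothetical log format of `YYYY-MM-DD HH:MM:SS LEVEL message`; the regular expression and the sample lines are made up for illustration.

```python
import re
from collections import Counter

# Assumed log format: "YYYY-MM-DD HH:MM:SS LEVEL message"
LINE_RE = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}):\d{2}:\d{2} (\w+) ")


def errors_per_hour(lines):
    """Count ERROR lines per hour bucket ("YYYY-MM-DD HH")."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match and match.group(2) == "ERROR":
            counts[match.group(1)] += 1
    return counts


sample = [
    "2024-03-01 09:15:02 INFO request served",
    "2024-03-01 10:01:10 ERROR timeout calling payment gateway",
    "2024-03-01 10:01:45 ERROR timeout calling payment gateway",
    "2024-03-01 10:30:00 WARN slow query",
    "2024-03-01 11:05:12 ERROR connection reset",
]

for hour, count in errors_per_hour(sample).most_common():
    print(hour, count)
```

Lining the hourly counts up against deployment times or traffic peaks is often enough to suggest a first hypothesis worth testing.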

Q 19. Have you used Fishbone diagrams? Describe your experience.

Fishbone diagrams, also known as Ishikawa diagrams or cause-and-effect diagrams, are a powerful visual tool for brainstorming potential root causes of a problem. I’ve used them extensively in various RCA sessions. The diagram’s structure helps in systematically exploring various categories of potential causes, such as people, processes, machines, materials, environment, and methods. For example, if a project is consistently late, a Fishbone diagram would help us visually organize potential causes under each category, leading to a clearer understanding of the contributing factors. The collaborative nature of creating a Fishbone diagram ensures that multiple perspectives are considered, leading to a more comprehensive and accurate analysis. The visual representation also makes it easier to communicate the findings to others.

Q 20. Explain your experience with using Pareto analysis in RCA.

Pareto analysis, based on the 80/20 rule, is valuable in RCA for prioritizing issues. It helps identify the vital few causes contributing to the majority of problems. I’ve applied this by analyzing data to identify the top issues causing the most significant impact or downtime. For example, if we’re experiencing frequent application errors, a Pareto analysis might reveal that 80% of the errors stem from just 20% of the code modules. This allows us to focus our remediation efforts on those critical areas, maximizing impact, and helps avoid wasting resources on less impactful problems. Concentrating on the major causes usually resolves the bulk of the problem faster and reduces the chance of recurrence.
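
The selection of the "vital few" can be sketched as a cumulative share calculation. In this Python example the module names and error counts are invented, and the 80% cutoff is a convention rather than a fixed rule.

```python
from collections import Counter


def vital_few(error_counts, cutoff=0.8):
    """Return the smallest set of top causes whose cumulative share
    of all errors reaches the cutoff."""
    total = sum(error_counts.values())
    if total == 0:
        return []
    selected, running = [], 0
    for cause, count in Counter(error_counts).most_common():
        selected.append(cause)
        running += count
        if running / total >= cutoff:
            break
    return selected


# Hypothetical error counts per code module
errors_by_module = {"auth": 120, "checkout": 60, "search": 10, "profile": 6, "email": 4}

print(vital_few(errors_by_module))
```

Real data rarely splits exactly 80/20, so the output is best read as a ranked shortlist of where to look first.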

Q 21. How do you handle pressure and stress during a critical incident?

Handling pressure and stress during critical incidents requires a structured approach. I prioritize clear communication, delegating tasks effectively, and maintaining a calm demeanor to guide the team. Taking short breaks to regroup and clear my head can help prevent burnout. I also rely on established checklists and troubleshooting methodologies to prevent decision fatigue. In one instance, during a major system failure, I followed a step-by-step plan, ensuring all necessary data was collected and procedures were followed. This systematic approach helped keep things under control. Clear communication to stakeholders minimized anxiety, and by delegating tasks, I ensured that the team remained focused and efficient in resolving the issue. A post-incident review helps assess how effectively we managed the situation and identify areas for improvement.

Q 22. Describe a situation where you had to troubleshoot a problem under tight deadlines.

One time, our production database experienced a sudden outage, impacting all our customer-facing applications. This happened right before a major marketing campaign launch – a truly high-pressure situation. We had less than two hours to restore service, or we risked significant financial losses and reputational damage.

My immediate action was to assemble a team and systematically assess the situation. We utilized a combination of monitoring tools to identify the affected services and the extent of the problem. We quickly eliminated network issues as a cause. Focusing on the database server, we checked the logs and observed a sudden spike in I/O operations just before the crash, indicating a potential disk failure. Simultaneously, another team member explored database replication and recovery mechanisms. We implemented a failover to our secondary database replica and were able to restore limited service within 45 minutes. The full restoration, including a thorough data integrity check, took 1 hour and 15 minutes. The root cause, as it turned out, was a failing hard drive in the primary database server. This tight-deadline scenario highlighted the importance of proactive monitoring, disaster recovery planning, and having a well-rehearsed team capable of swift, efficient problem resolution.

Q 23. What are some common pitfalls to avoid during root cause analysis?

Root cause analysis (RCA) is a powerful technique, but it’s prone to pitfalls if not executed carefully. Common mistakes include:

- Prematurely jumping to conclusions: This often occurs when the initial symptoms are mistaken for the root cause. Always thoroughly investigate all potential contributing factors before concluding the RCA.

- Focusing on symptoms rather than causes: A slow application might be a symptom of a database bottleneck, network latency, or insufficient server resources. RCA needs to find the ‘why’ behind the symptom.

- Confirmation bias: Once a potential root cause is identified, there’s a tendency to seek evidence that supports it and ignore contradictory evidence. This bias hinders objective analysis.

- Ignoring human factors: Errors often originate from human actions, such as misconfiguration, lack of training, or process failures. These aspects should not be overlooked.

- Lack of a structured approach: RCA demands a systematic process. Using a defined methodology (e.g., the 5 Whys, fishbone diagram) helps ensure thoroughness.

Avoiding these pitfalls requires a disciplined approach, open communication, and a commitment to objective fact-finding.

Q 24. How do you verify that the root cause has been correctly identified?

Verifying a root cause is crucial and involves several steps. Simply fixing the immediate problem isn’t enough; you must ensure that fixing the root cause prevents recurrence. I usually employ these verification strategies:

- Implementation of corrective actions: Once the root cause is identified, corrective actions that target it directly (rather than its symptoms) are implemented.

- Monitoring and observation: After the corrective actions are in place, the system is closely monitored to ensure the problem doesn’t reoccur. This monitoring may span several days, weeks, or even months, depending on the nature of the problem.

- Data analysis: Relevant metrics and logs are analyzed to confirm whether the implemented solutions have successfully resolved the underlying issue and prevented recurrence.

- Validation by multiple stakeholders: Involve different team members to review the findings and conclusions of the RCA to ensure the solution is comprehensive and well-validated.

The goal is to demonstrate that the solution is not merely a workaround but a permanent fix that eliminates the root cause of the problem, achieving lasting resolution.

Q 25. How do you handle conflicting information or opinions during RCA?

Conflicting information is common in RCA. It’s critical to handle it constructively. My approach involves:

- Gathering all perspectives: Encourage everyone to share their observations and opinions, regardless of how seemingly insignificant they may be.

- Documenting everything: Maintain a detailed record of all information, including conflicting views, to facilitate objective analysis and avoid misinterpretations.

- Fact-finding through data: Instead of relying on opinions, seek evidence from logs, monitoring data, and other objective sources to resolve disagreements.

- Facilitated discussion: Lead a collaborative session to discuss the discrepancies. Frame the discussion around the objective of finding the truth, not proving anyone right or wrong.

- Escalation if necessary: If conflicts remain unresolved, seek guidance from senior engineers or management to provide an impartial perspective.

The key is to create a safe space for open dialogue where differences of opinion are valued, and the goal is collaborative problem-solving.

Q 26. Describe a time you failed to identify the root cause. What did you learn?

In one instance, our application experienced intermittent performance degradation. Initially, we focused on optimizing database queries and server resources, but the problem persisted. We missed a crucial detail: a poorly configured load balancer that was inconsistently distributing traffic across application servers. The inconsistent load caused some servers to become overloaded, leading to performance issues. We learned several valuable lessons:

- The importance of holistic investigation: We initially tunnel-visioned on the application code and database, neglecting other layers of the infrastructure.

- The value of thorough logging and monitoring: Better logging and monitoring of the load balancer would have alerted us to the root cause much earlier.

- The necessity of challenging assumptions: We prematurely assumed the problem was within the application itself and failed to question our initial hypotheses.

This failure led to a more robust monitoring and logging strategy, improved incident response procedures, and a stronger emphasis on cross-functional collaboration.

Q 27. How do you stay current with the latest troubleshooting techniques and technologies?

Staying current in troubleshooting techniques requires continuous learning. I actively use these methods:

- Online courses and certifications: Platforms like Coursera, edX, and Udemy offer valuable courses on various troubleshooting and RCA methodologies.

- Industry conferences and webinars: Attending conferences and webinars allows me to network with experts and learn about the latest tools and techniques.

- Professional journals and publications: Reading industry publications keeps me up-to-date on new research and best practices.

- Online communities and forums: Participating in online communities, such as Stack Overflow, provides access to a wealth of knowledge and practical experience shared by peers.

- Hands-on practice: I actively seek opportunities to apply new knowledge and skills in real-world projects, reinforcing my learning through experience.

Continuous learning is vital for maintaining expertise in this dynamic field.

Q 28. Describe your experience using a specific RCA tool (e.g., Six Sigma).

I have extensive experience using Six Sigma methodologies for RCA. Specifically, I’ve applied the DMAIC (Define, Measure, Analyze, Improve, Control) cycle in several projects. For example, during a project to improve the stability of a microservice architecture, we followed these steps:

- Define: We clearly defined the problem – frequent downtime of a specific microservice – and its impact on the overall system.

- Measure: We gathered data on the frequency, duration, and causes of downtime using monitoring tools and logs.

- Analyze: We utilized various analytical techniques, such as Pareto charts and fishbone diagrams, to identify the root causes of the downtime – specifically, inadequate error handling and resource contention.

- Improve: We implemented solutions such as improved error handling mechanisms, circuit breakers, and resource allocation strategies.

- Control: We established monitoring processes to track the effectiveness of the implemented solutions and ensure continued system stability.

The Six Sigma methodology provided a structured and data-driven approach, resulting in a significant reduction in microservice downtime and improved overall system reliability.
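
Of the improvements listed in the Improve step, the circuit breaker is the easiest to show in code. The following is a minimal Python sketch of the general pattern, not the implementation used in that project; the class name, the default thresholds, and the injectable `clock` parameter are assumptions made for illustration.

```python
import time


class CircuitBreaker:
    """Stop calling a failing dependency for a cool-down period."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # cool-down in seconds
        self.clock = clock              # injectable so tests can fake time
        self.failures = 0
        self.opened_at = None           # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise RuntimeError("circuit open: call skipped")
            # Cool-down elapsed: allow one trial call (half-open state).
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open the circuit
            raise
        self.failures = 0  # any success closes the circuit fully
        return result
```

A caller wraps each request to the fragile service in `breaker.call(...)`. While the circuit is open, requests fail fast instead of piling up behind a dependency that is already struggling.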

Key Topics to Learn for Troubleshooting Techniques and Root Cause Analysis Interview

- Defining the Problem: Mastering the art of clearly articulating the issue, gathering comprehensive information, and identifying the scope of the problem. This includes understanding the difference between symptoms and root causes.

- Troubleshooting Methodologies: Familiarize yourself with various systematic approaches like the 5 Whys, Fishbone diagrams (Ishikawa diagrams), and fault trees. Understand when to apply each method effectively.

- Data Analysis and Interpretation: Develop your skills in analyzing logs, metrics, and other data sources to identify patterns and anomalies that point to the root cause. Practice interpreting complex data sets efficiently.

- Hypothesis Generation and Testing: Learn how to formulate testable hypotheses based on available data and systematically test these hypotheses to eliminate possibilities and narrow down the root cause.

- Documentation and Communication: Practice clearly documenting your troubleshooting process, findings, and proposed solutions. Effective communication of technical information to both technical and non-technical audiences is crucial.

- Preventive Measures and Solutions: Develop your ability to not only identify the root cause but also to propose effective and sustainable solutions to prevent recurrence. Think about long-term preventative strategies.

- Tools and Technologies: Gain familiarity with relevant troubleshooting tools and technologies used in your industry. This may include specific software, monitoring systems, or debugging tools.

Next Steps

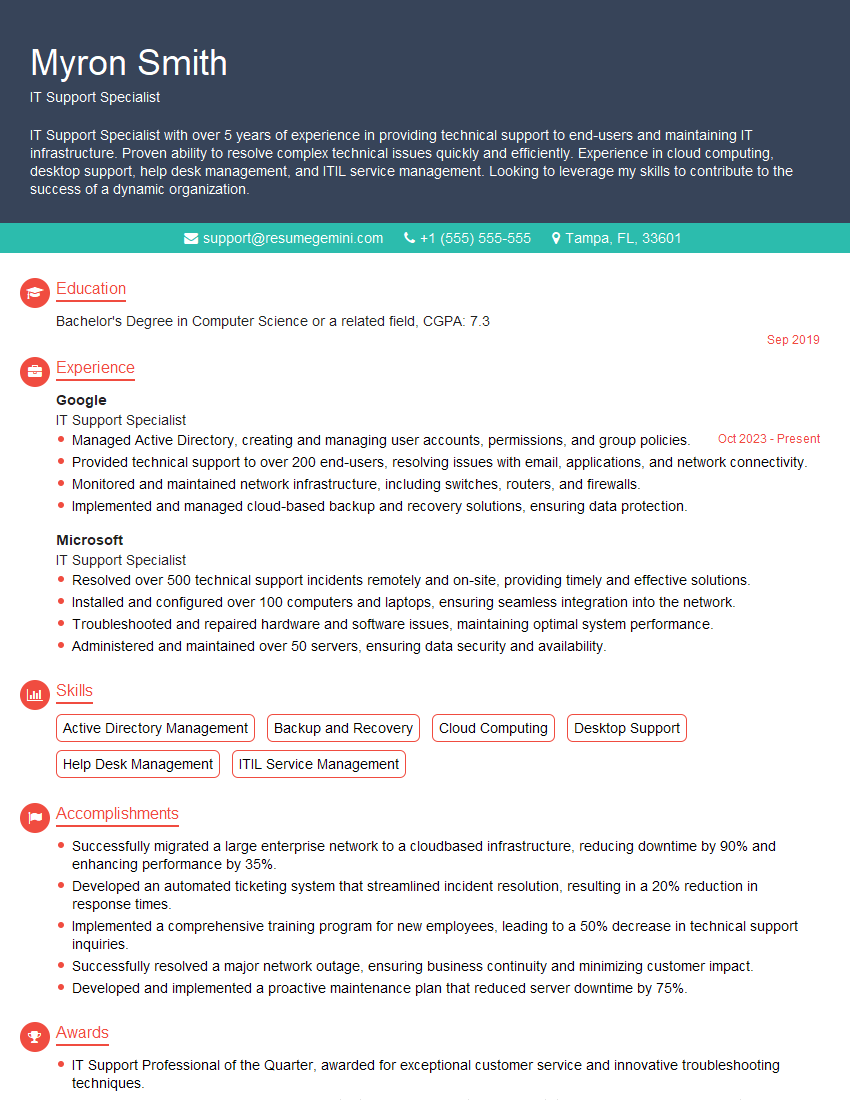

Mastering Troubleshooting Techniques and Root Cause Analysis is vital for career advancement in virtually any technical field. These skills demonstrate your ability to solve complex problems, think critically, and contribute effectively to a team. To maximize your job prospects, it’s crucial to create a resume that showcases these skills effectively to Applicant Tracking Systems (ATS). Building an ATS-friendly resume highlights your qualifications and increases your chances of landing an interview. We highly recommend using ResumeGemini to create a compelling and professional resume that effectively presents your expertise in Troubleshooting Techniques and Root Cause Analysis. ResumeGemini offers examples of resumes tailored to this specific skill set, helping you build a document that stands out from the competition.
