Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important Evaluation Research interview questions and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in Evaluation Research Interview

Q 1. Define evaluation research and its core purpose.

Evaluation research is a systematic process of gathering and analyzing information to determine the effectiveness, efficiency, and impact of a program, policy, or intervention. Its core purpose is to provide credible evidence to inform decision-making, improve program implementation, and ultimately achieve better outcomes. Think of it as a comprehensive checkup for a program, providing insights into what’s working, what’s not, and how to make it better.

For example, evaluating a new literacy program might involve assessing whether students’ reading scores improve, whether teachers find the program easy to use, and whether the program is cost-effective compared to existing methods.

Q 2. Explain the difference between formative and summative evaluation.

Formative and summative evaluations differ in their timing and purpose. Formative evaluation happens during the implementation of a program. It’s designed to improve the program while it’s still ongoing. Think of it as a ‘progress report’ that helps fine-tune the program along the way. Data collected in formative evaluations is often used to make adjustments and improvements to the program’s design or delivery.

Summative evaluation, on the other hand, typically takes place at the end of a program or of a major program phase. It focuses on assessing the overall impact and effectiveness of the program. It’s the ‘final grade’ that summarizes the program’s accomplishments and informs the design of future programs. Summative findings often determine whether the program should be continued, modified, or discontinued.

Imagine building a house: formative evaluation is like checking the foundation and walls during construction to ensure they are sound before moving on, while summative evaluation is the final inspection to verify the house is built according to plan and meets building codes.

Q 3. Describe the various types of evaluation designs (e.g., experimental, quasi-experimental, etc.).

Evaluation designs vary depending on the research question and available resources. Here are some key types:

- Experimental designs: These designs randomly assign participants to either a treatment (program) group or a control group. Because randomization makes the groups comparable on average, researchers can isolate the effect of the program by comparing the outcomes of the two groups. They offer the strongest evidence of cause-and-effect relationships. However, they are not always feasible, for example when withholding the program from a control group would be unethical or impractical.

- Quasi-experimental designs: These designs compare a program group with a comparison group but lack random assignment. Groups are often formed from existing units (e.g., classrooms or sites) or by self-selection. As a result, they may differ on characteristics that also affect outcomes, which limits the strength of causal conclusions. However, they are often more practical in real-world settings.

- Pre-experimental designs: These designs are the least rigorous, often lacking control groups or pre-tests. They’re useful for preliminary exploration or when resources are severely limited, but their findings should be interpreted cautiously.

- Descriptive designs: These designs focus on describing program characteristics and outcomes without making causal inferences. They are useful for understanding program implementation, gathering baseline data, or exploring stakeholder perspectives.

The choice of design depends on factors like the research question, available resources, ethical considerations, and the feasibility of random assignment.
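Random assignment is simple to implement. The sketch below shows simple random assignment in Python. The function name, seed, and participant IDs are hypothetical. Real trials often use stratified or block randomization instead, which keeps the groups balanced on key characteristics.

```python
import random


def randomly_assign(participant_ids, seed=2024):
    """Simple (unstratified) random assignment to treatment and control groups.

    A fixed seed makes the allocation reproducible, so it can be audited later.
    With an odd number of participants, the control group gets the extra person.
    """
    ids = list(participant_ids)   # copy so the caller's list is not reordered
    rng = random.Random(seed)     # dedicated generator; the global one is untouched
    rng.shuffle(ids)
    half = len(ids) // 2
    return {"treatment": ids[:half], "control": ids[half:]}


# Hypothetical participant IDs P001..P010
groups = randomly_assign([f"P{i:03d}" for i in range(1, 11)])
print(len(groups["treatment"]), len(groups["control"]))  # 5 5
```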

Q 4. What are the key components of a strong evaluation plan?

A strong evaluation plan is essential for a successful evaluation. Key components include:

- Clearly defined evaluation questions: What specifically do you want to learn? These questions should be answerable with data you can realistically collect, and they should be linked to the program’s goals and objectives.

- Logic model: A visual representation of the program’s theory of change, outlining the inputs, activities, outputs, outcomes, and overall impact.

- Evaluation design: Selection of appropriate methods based on the research question and resources.

- Data collection methods: Choosing appropriate methods (surveys, interviews, observations, document review) to collect data relevant to the evaluation questions.

- Data analysis plan: How will you analyze the data to answer the evaluation questions? This should specify statistical techniques for quantitative data and analysis approaches (e.g., thematic analysis) for qualitative data.

- Timeline and budget: A realistic schedule and budget allocation for all aspects of the evaluation.

- Dissemination plan: How will the findings be communicated to stakeholders? This includes reports, presentations, and other forms of communication.

Q 5. How do you select appropriate evaluation methods for a given program or project?

Selecting appropriate evaluation methods requires careful consideration of several factors:

- Evaluation questions: The methods should directly address the research questions.

- Program characteristics: The nature of the program, its target population, and context will influence the choice of methods.

- Resources: Time, budget, and personnel constraints will limit the feasibility of certain methods.

- Ethical considerations: Protecting participant privacy and ensuring informed consent are crucial.

- Feasibility: Can the chosen methods realistically be implemented given the time constraints and resources?

For example, a program aimed at improving employee satisfaction might use surveys to collect quantitative data on employee morale and qualitative methods like interviews to explore the reasons behind satisfaction levels.

Q 6. Explain the difference between quantitative and qualitative data in evaluation.

Quantitative and qualitative data provide different types of information in evaluation research. Quantitative data are numerical data that can be statistically analyzed. This type of data often focuses on measuring the magnitude or frequency of certain phenomena. Examples include test scores, responses to closed-ended survey items (e.g., ratings on a 5-point scale), and program participation rates.

Qualitative data are non-numerical data that provide rich descriptions of experiences, perspectives, and meanings. Examples include interview transcripts, field notes from observations, and documents. Qualitative data is excellent for exploring underlying reasons, understanding context, and gaining in-depth insights.

Often, a mixed-methods approach that combines both quantitative and qualitative data provides a more comprehensive understanding of a program’s effectiveness.

Q 7. Describe your experience with data collection techniques (e.g., surveys, interviews, focus groups).

I have extensive experience using various data collection techniques, including:

- Surveys: I’ve designed and administered both online and paper-based surveys, using validated scales and questionnaires to measure attitudes, behaviors, and knowledge. I’m proficient in using survey software like Qualtrics and SurveyMonkey.

- Interviews: I conduct both structured and semi-structured interviews, employing active listening techniques and probing questions to gather in-depth information from individuals. I’m experienced in coding and analyzing interview transcripts using qualitative data analysis software like NVivo.

- Focus groups: I’ve facilitated numerous focus groups, creating a safe and inclusive environment for participants to share their perspectives and experiences. I’m skilled in guiding discussions, managing group dynamics, and analyzing group discussions.

- Observations: I’ve conducted both structured and unstructured observations, using checklists and field notes to systematically record behaviors and events.

- Document review: I have experience analyzing various types of documents, including program reports, meeting minutes, and policy documents, to understand program implementation and context.

My experience spans diverse settings, including educational programs, community interventions, and healthcare initiatives. I adapt my data collection techniques to the specific needs of each project, ensuring rigorous and ethical data collection practices.

Q 8. How do you ensure the validity and reliability of evaluation data?

Ensuring the validity and reliability of evaluation data is paramount. Validity refers to whether the evaluation truly measures what it intends to measure, while reliability refers to the consistency and stability of the measurements. We achieve this through a multi-pronged approach:

- Triangulation: Employing multiple data sources (e.g., surveys, interviews, observations) to corroborate findings and reduce bias. For instance, if evaluating a new teaching method, we might use student test scores, teacher feedback, and student interviews to get a comprehensive picture.

- Robust Measurement Instruments: Using well-established, validated questionnaires or tests helps ensure the questions are clear and unbiased and that they capture the intended constructs. Before using a survey, we’d check its psychometric properties, such as reliability coefficients (e.g., Cronbach’s alpha) and evidence of validity.

- Pilot Testing: Conducting a small-scale test run to identify and address any flaws in the data collection process before full implementation. This helps to refine questionnaires and identify potential problems in the data analysis strategy.

- Inter-rater Reliability (for qualitative and observational data): When coding interviews or observations, multiple raters independently code the same material. Their agreement can then be assessed, which minimizes subjective bias. For two raters assigning categorical codes, we might use Cohen’s kappa to quantify agreement beyond what would be expected by chance.

- Careful Data Cleaning: Identifying and handling missing data, outliers, and inconsistencies before analysis. This includes choosing missing-data procedures suited to how and why the data are missing.

By combining these methods, we significantly strengthen the credibility and trustworthiness of our evaluation findings.
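Both reliability coefficients mentioned above can be computed directly. Below is a Python sketch that uses only the standard library. The coded interview excerpts are invented for illustration. In practice, an established statistics package would normally be used for reported results.

```python
from collections import Counter
from statistics import pvariance


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters who coded the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected if each rater applied codes independently at their own rates
    expected = sum(counts_a[code] * counts_b[code] for code in counts_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when expected agreement is 1")
    return (observed - expected) / (1 - expected)


def cronbachs_alpha(items):
    """Internal-consistency reliability of a multi-item scale.

    `items` holds one list per scale item, each with every respondent's score.
    Population variances are used; the ratio is identical with sample variances.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's scale total
    total_var = pvariance(totals)
    if total_var == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    item_var = sum(pvariance(item) for item in items)
    return k / (k - 1) * (1 - item_var / total_var)


# Hypothetical interview codes assigned by two raters to six excerpts
rater_a = ["barrier", "barrier", "facilitator", "facilitator", "barrier", "neutral"]
rater_b = ["barrier", "facilitator", "facilitator", "facilitator", "barrier", "neutral"]
print(round(cohens_kappa(rater_a, rater_b), 2))  # 0.74
```

Here the raters agree on 5 of 6 excerpts (83%). Kappa is lower, at 0.74, because some of that agreement would be expected by chance alone.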

Q 9. What statistical methods are you proficient in using for evaluation analysis?

My statistical proficiency spans a broad range of methods appropriate for various evaluation designs. I’m adept at both descriptive and inferential statistics.

- Descriptive Statistics: I routinely use frequencies, percentages, measures of central tendency (mean, median, mode), and measures of dispersion (standard deviation, range) to summarize the data and present key findings. For example, I might calculate the average test score improvement in a program’s intervention group.

- Inferential Statistics: I regularly use t-tests, ANOVA (analysis of variance), regression analysis (simple and multiple linear, logistic), and chi-square tests. These let me analyze relationships between variables and draw conclusions about population parameters from sample data. For example, a t-test can compare the mean test scores of an intervention group and a control group to determine whether the difference is statistically significant. Whether that difference can be attributed to the program depends on the evaluation design.

- Non-parametric methods: When data violate the normality assumption or are ordinal, I use non-parametric alternatives such as the Mann-Whitney U test or the Kruskal-Wallis test.

- Effect Size Calculations: I calculate and interpret effect sizes (e.g., Cohen’s d, eta-squared) to determine the magnitude of any observed effects, going beyond simple statistical significance. This helps determine the practical significance of the findings.

The choice of statistical methods always depends on the research question, the nature of the data, and the evaluation design.
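Several of these calculations can be sketched with Python’s standard library:

- Welch’s t statistic, a t-test variant that does not assume equal variances
- Cohen’s d with a pooled standard deviation
- the Mann-Whitney U statistic

The sketch stops short of p-values, which require the t or U distribution. SciPy’s `scipy.stats.ttest_ind(..., equal_var=False)` and `scipy.stats.mannwhitneyu` are the usual route for those. The reading scores are hypothetical.

```python
from math import sqrt
from statistics import mean, median, stdev, variance


def describe(scores):
    """Basic descriptive statistics for one group."""
    return {"n": len(scores), "mean": mean(scores), "median": median(scores), "sd": stdev(scores)}


def welch_t(treatment, control):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom (unequal variances)."""
    se1_sq = variance(treatment) / len(treatment)   # squared standard error, group 1
    se2_sq = variance(control) / len(control)       # squared standard error, group 2
    t = (mean(treatment) - mean(control)) / sqrt(se1_sq + se2_sq)
    df = (se1_sq + se2_sq) ** 2 / (
        se1_sq ** 2 / (len(treatment) - 1) + se2_sq ** 2 / (len(control) - 1)
    )
    return t, df


def cohens_d(treatment, control):
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    pooled_var = ((n1 - 1) * variance(treatment) + (n2 - 1) * variance(control)) / (n1 + n2 - 2)
    return (mean(treatment) - mean(control)) / sqrt(pooled_var)


def mann_whitney_u(treatment, control):
    """U statistic for the treatment group; tied values share their average rank."""
    combined = sorted(list(treatment) + list(control))
    ranks, i = {}, 0
    while i < len(combined):
        j = i
        while j < len(combined) and combined[j] == combined[i]:
            j += 1
        ranks[combined[i]] = (i + 1 + j) / 2   # mean of 1-based ranks i+1 .. j
        i = j
    rank_sum = sum(ranks[x] for x in treatment)
    n1 = len(treatment)
    return rank_sum - n1 * (n1 + 1) / 2


# Hypothetical reading scores
treatment = [78, 85, 90, 72, 88, 95]
control = [70, 75, 80, 68, 74, 79]
print(describe(treatment))
t, df = welch_t(treatment, control)
print(f"Welch t = {t:.2f} (df = {df:.1f}), Cohen's d = {cohens_d(treatment, control):.2f}, "
      f"U = {mann_whitney_u(treatment, control)}")
```

A large t or d describes the size and reliability of a difference. It says nothing about causation unless the design supports that inference.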

Q 10. Explain your experience with data analysis software (e.g., SPSS, R, STATA).

I have extensive experience with several data analysis software packages, including SPSS, R, and STATA. My proficiency includes data entry, cleaning, transformation, statistical analysis, and generating reports and visualizations.

- SPSS: I’m comfortable performing all types of statistical analysis within SPSS, including creating descriptive statistics, running regression models, and generating reports. I have used it extensively for survey data analysis and large-scale data management.

- R: I utilize R for its flexibility and extensive packages for data manipulation (dplyr, tidyr), visualization (ggplot2), and advanced statistical modeling. R’s open-source nature and vast community support make it invaluable for complex analyses.

- STATA: I use STATA for its strengths in longitudinal data analysis, particularly with complex survey designs and causal inference methodologies. For example, I have utilized STATA to analyze panel data in evaluating long-term program impacts.

My choice of software depends on the specific needs of the project. For example, for simple descriptive analyses and generating reports, SPSS might be sufficient, while for complex statistical modeling and reproducible research, R would be preferred. STATA is particularly useful for projects with complex data structures.

Q 11. How do you interpret and present evaluation findings to stakeholders?

Interpreting and presenting evaluation findings requires careful consideration of the audience. I strive to communicate complex information clearly and concisely, tailoring the presentation to the stakeholders’ level of understanding.

- Clear and Concise Language: Avoiding technical jargon or explaining it clearly. I use analogies and real-world examples to illustrate key findings.

- Visualizations: Employing charts, graphs, and tables to present data in an accessible format. Data visualizations make complex data much easier to grasp.

- Storytelling: Structuring the presentation as a narrative, highlighting key findings and their implications. This helps to engage the audience and make the results more memorable.

- Interactive Presentations: When appropriate, I use interactive tools to enhance engagement and allow stakeholders to explore the data themselves.

- Targeted Communication: I tailor the presentation to the audience. A presentation to a board of directors will differ significantly from a presentation to program staff.

For instance, when presenting to a funding agency, I focus on the impact and cost-effectiveness of the program, whereas when presenting to program staff, I focus on areas for improvement and potential next steps.

Q 12. Describe your experience in creating evaluation reports.

I have extensive experience creating evaluation reports, encompassing various formats and audiences. My reports typically include:

- Executive Summary: A concise overview of the evaluation purpose, methods, key findings, and conclusions.

- Introduction and Background: Contextualizing the evaluation within the broader program or initiative.

- Methodology: Detailing the data collection methods, sample size, and data analysis techniques used.

- Findings: Presenting the results clearly and concisely, using tables, charts, and narrative descriptions. I always include limitations of the study.

- Conclusions and Recommendations: Summarizing the key findings, drawing conclusions about program effectiveness, and offering specific recommendations for improvement.

- Appendices: Including supplementary materials such as questionnaires, interview protocols, and detailed statistical tables.

I am adept at using various software (Word, PowerPoint, specialized reporting tools) to produce professional and high-quality reports. My reports are designed to be accessible, informative, and actionable.

Q 13. How do you address challenges in data collection or analysis during an evaluation?

Challenges in data collection and analysis are inevitable. My approach involves proactive planning and flexible problem-solving:

- Addressing Missing Data: Employing appropriate statistical techniques (e.g., multiple imputation) to handle missing data, depending on the nature and extent of the missingness. The choice depends on two things: the missingness mechanism and how sensitive the results are to the imputation approach. The mechanism may be missing completely at random (MCAR), missing at random (MAR), or missing not at random (MNAR).

- Dealing with Outliers: Investigating outliers carefully to determine if they represent genuine data points or errors. I might choose to remove them, transform the data, or use robust statistical methods less sensitive to outliers.

- Adapting Methods: If unforeseen challenges arise during data collection, such as low response rates or unexpected issues in the field, I modify data collection strategies as necessary and transparently document any changes in the methodology section of the report.

- Seeking Expert Advice: For particularly complex challenges, I consult with colleagues or experts in relevant fields. This ensures I use the best available techniques for overcoming challenges.

- Transparency and Documentation: All changes made to the methodology and the rationale for addressing challenges are meticulously documented in the evaluation report. This builds trust and maintains credibility.

For example, if a low response rate compromises the representativeness of the sample, I would clearly state the limitations and possible biases in the report and discuss potential alternative approaches to address these limitations.
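Before choosing how to handle missing values or outliers, it helps to quantify them. The Python sketch below assumes records are stored as dictionaries, with `None` or an absent key marking a missing value; this layout is hypothetical. It reports missingness per field. It also flags values outside Tukey’s fences (1.5 × IQR beyond the quartiles, one common convention) for review rather than deleting them automatically. Neither function can tell whether data are MCAR, MAR, or MNAR; that judgment requires knowing how the data were collected.

```python
from statistics import quantiles


def missing_report(records, fields):
    """Count and proportion of missing (None or absent) values for each field."""
    n = len(records)
    report = {}
    for name in fields:
        missing = sum(1 for record in records if record.get(name) is None)
        report[name] = (missing, missing / n)
    return report


def flag_iqr_outliers(values, k=1.5):
    """Values outside [Q1 - k*IQR, Q3 + k*IQR], returned for manual investigation."""
    observed = [v for v in values if v is not None]   # ignore missing values
    q1, _, q3 = quantiles(observed, n=4)              # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in observed if v < low or v > high]


# Hypothetical survey records (None or an absent key means missing)
records = [
    {"score": 10, "age": None},
    {"score": None, "age": 30},
    {"score": 12, "age": 31},
    {"score": 14},
]
print(missing_report(records, ["score", "age"]))  # {'score': (1, 0.25), 'age': (2, 0.5)}
print(flag_iqr_outliers([10, 12, 12, 13, 12, 11, 14, 13, 15, 100]))  # [100]
```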

Q 14. How do you ensure the ethical considerations are addressed in your evaluation work?

Ethical considerations are central to my evaluation work. My approach ensures:

- Informed Consent: Participants are fully informed about the purpose of the evaluation, their rights, and how their data will be used. Consent is obtained before data collection. Confidentiality is always protected, and anonymity is provided wherever the data collection method allows it.

- Data Privacy and Confidentiality: Implementing procedures to protect the privacy and confidentiality of participants’ data. This often involves data anonymization and secure data storage.

- Transparency and Honesty: Openly communicating the evaluation findings and limitations, avoiding misrepresentation or manipulation of data.

- Beneficence and Non-maleficence: Prioritizing the wellbeing of participants. The evaluation process should not harm participants, either directly or indirectly.

- Justice and Fairness: Ensuring fair and equitable treatment of all participants. This means avoiding bias in data collection and interpretation.

- Adherence to relevant guidelines and regulations: Following professional ethical codes and relevant regulations on data privacy and research ethics (e.g., Institutional Review Board (IRB) requirements).

Throughout the evaluation process, I maintain a commitment to the ethical treatment of all participants and adhere strictly to all relevant ethical guidelines and regulations.
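One concrete way to carry out the anonymization step is to pseudonymize participant IDs with a keyed hash. The sketch below does this with Python’s standard `hmac` and `hashlib` modules; the ID format and key are hypothetical. This is pseudonymization, not full anonymization. Indirect identifiers such as age, job title, or location may still allow re-identification and need separate handling.

```python
import hashlib
import hmac


def pseudonymize(participant_id: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same ID and key always give the same pseudonym, so records can still be
    linked across data collection waves. Without the key, the pseudonyms cannot
    be regenerated by hashing guessed IDs.
    """
    mac = hmac.new(secret_key, participant_id.encode("utf-8"), hashlib.sha256)
    return mac.hexdigest()[:16]  # shortened for readability in datasets


# Hypothetical key; in practice, load it from a secure store kept apart from the data
key = b"replace-with-a-long-random-secret"
print(pseudonymize("P001", key))
```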

Q 15. What is the role of stakeholder engagement in the evaluation process?

Stakeholder engagement is crucial for successful program evaluation. It ensures the evaluation is relevant, credible, and ultimately useful. Think of it as building a bridge: you need to understand the needs and perspectives of everyone who will use that bridge – from the engineers who built it to the community who will cross it. Without understanding their perspectives, you risk building a bridge that doesn’t meet anyone’s needs.

Effective engagement involves identifying key stakeholders early on – program staff, beneficiaries, funders, policymakers, etc. Then, I employ various methods to gather their input throughout the evaluation process. This could include interviews, focus groups, surveys, and participation in evaluation design and data interpretation. For example, in a recent evaluation of a community health program, I actively involved community leaders in shaping the evaluation questions and interpreting the findings. This ensured the results were directly relevant to their needs and priorities and increased their buy-in to the recommendations.

The benefits of robust stakeholder engagement are manifold: increased data quality, higher levels of trust and cooperation, improved dissemination and utilization of evaluation findings, and ultimately, a greater likelihood of positive program change.

Q 16. How do you manage competing priorities and deadlines in evaluation projects?

Managing competing priorities and deadlines in evaluation projects demands strong organizational skills and proactive communication. It’s like juggling – you need to keep all the balls in the air, but you also need a strategy to prevent any from dropping. I utilize project management tools, such as Gantt charts, to visualize tasks, timelines, and dependencies. This provides a clear overview of the entire project and allows for efficient resource allocation. I also hold regular meetings with the evaluation team and stakeholders to monitor progress, address challenges, and make necessary adjustments to the plan.

Prioritization is key. I use techniques like MoSCoW analysis (Must have, Should have, Could have, Won’t have) to rank tasks by importance and feasibility within the given timeframe. This helps me decide what can realistically be achieved with the available resources and time. I also keep stakeholders informed about potential delays or changes to the project plan; this transparency maintains trust and helps manage expectations.

Q 17. Describe your experience with logic models and their use in evaluation.

Logic models are visual representations of a program’s theory of change. They illustrate the connections between program inputs, activities, outputs, outcomes, and ultimately, its impact. Think of it as a roadmap showing how a program is supposed to work. I use logic models extensively in evaluations to clarify the program’s intended effects and to identify the key factors to be measured.

In practice, I often start by working with program staff to develop a new logic model or refine an existing one. This collaborative process ensures everyone shares a common understanding of the program’s intended impact and the underlying assumptions. The model then guides the design of the evaluation. It defines the indicators used to measure progress at each stage of the program and provides a framework for interpreting the results. For instance, in an evaluation of an educational program, the logic model would trace the chain through each stage:

- inputs: funding, trainers, curriculum materials
- activities: teacher training and classroom instruction
- outputs: number of teachers trained, lessons delivered
- outcomes: student knowledge gains and improved academic performance
- impact: increased career opportunities
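A logic model can also be kept alongside the analysis code as a simple data structure. This makes it easy to check that every stage has at least one indicator. The sketch below is hypothetical: the class names, stage contents, and indicators are illustrative, not a prescribed format. The stages follow the standard chain of inputs, activities, outputs, outcomes, and impact for a literacy-focused teacher-training program.

```python
from dataclasses import dataclass, field


@dataclass
class Stage:
    name: str
    elements: list[str]
    indicators: list[str] = field(default_factory=list)


@dataclass
class LogicModel:
    program: str
    stages: list[Stage]

    def measurement_plan(self) -> dict[str, list[str]]:
        """Map each stage to the indicators the evaluation will track."""
        return {stage.name: stage.indicators for stage in self.stages}

    def unmeasured_stages(self) -> list[str]:
        """Stages without any indicator yet, i.e. gaps in the evaluation design."""
        return [stage.name for stage in self.stages if not stage.indicators]


model = LogicModel(
    program="Teacher training for literacy (hypothetical)",
    stages=[
        Stage("inputs", ["funding", "trainers", "curriculum materials"], ["budget spent vs. planned"]),
        Stage("activities", ["teacher training", "classroom instruction"], ["training sessions held"]),
        Stage("outputs", ["teachers trained", "lessons delivered"], ["number of teachers trained"]),
        Stage("outcomes", ["student knowledge gains", "improved academic performance"],
              ["change in reading test scores"]),
        Stage("impact", ["increased career opportunities"]),
    ],
)
print(model.unmeasured_stages())  # ['impact']
```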

Q 18. Explain your understanding of different evaluation frameworks (e.g., CIPP, Goal-Free).

Several evaluation frameworks exist, each with its strengths and weaknesses:

- CIPP model (Context, Input, Process, Product): Examines different aspects of a program at various stages, which makes it useful for providing comprehensive feedback throughout a program’s lifecycle.
- Goal-Free evaluation: Deliberately keeps the program’s stated goals from the evaluator, who instead assesses the program’s actual effects. It helps uncover unintended consequences and reduces the risk of looking only for the intended outcomes.
- Utilization-focused evaluation: Centers on how the intended users will actually use the findings in their decisions.
- Participatory evaluation: Prioritizes stakeholder involvement at every stage.

The choice of framework depends on the evaluation’s purpose, resources, and the context. For instance, CIPP is ideal for comprehensive evaluations of large-scale programs, while a Goal-Free approach might be more suitable for exploratory evaluations or when the program’s theory of change is unclear. I adapt and combine elements from different frameworks to create a tailored approach that best suits each project’s unique needs.

Q 19. How do you measure program impact and outcomes effectively?

Measuring program impact and outcomes requires a multifaceted approach, carefully considering both quantitative and qualitative data. It is crucial to define clear, measurable indicators aligned with the program’s objectives and the logic model. For instance, to evaluate a job training program’s impact on employment, you might measure the percentage of participants who find jobs, their average earnings, and the duration of their employment.

Quantitative methods, like statistical analysis of pre- and post-intervention data, are important for measuring change. However, a pre/post comparison alone cannot show that the program caused that change; establishing causal links also requires a credible comparison. Quantitative methods should be complemented by qualitative methods, such as interviews or focus groups, to understand the experiences and perspectives of program participants and stakeholders. This qualitative data adds context and depth to the quantitative findings, giving a more complete picture of program impact. For example, you might find that while overall employment rates increased, certain subgroups of participants faced greater challenges. Disaggregated quantitative data can reveal such a gap, but qualitative data is often needed to explain why it exists.

Furthermore, rigorous methods are needed to control for confounding variables and ensure the observed effects are indeed attributable to the program. Using control groups, appropriate statistical techniques, and clear outcome definitions are essential aspects of accurately measuring program impacts.
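One common way to implement this comparison-group logic is difference-in-differences, shown here as a Python sketch. It is only one of several techniques; matching and regression adjustment are others. It relies on the parallel-trends assumption: without the program, both groups would have changed by the same amount. The employment rates are hypothetical. A real analysis would usually estimate this in a regression model, which also yields standard errors.

```python
from statistics import mean


def diff_in_diff(program_pre, program_post, comparison_pre, comparison_post):
    """Program group's change minus the comparison group's change over the same period.

    Subtracting the comparison group's change removes trends that affect both
    groups (e.g., a regional rise in employment), assuming parallel trends.
    """
    program_change = mean(program_post) - mean(program_pre)
    comparison_change = mean(comparison_post) - mean(comparison_pre)
    return program_change - comparison_change


# Hypothetical employment rates at three program sites and three comparison sites
program_pre, program_post = [0.40, 0.42, 0.38], [0.58, 0.60, 0.56]
comparison_pre, comparison_post = [0.41, 0.39, 0.40], [0.47, 0.45, 0.46]

naive = mean(program_post) - mean(program_pre)
did = diff_in_diff(program_pre, program_post, comparison_pre, comparison_post)
print(f"naive pre/post change: {naive:.2f}, difference-in-differences: {did:.2f}")
# naive pre/post change: 0.18, difference-in-differences: 0.12
```

In this made-up example, the naive pre/post change overstates the program’s effect. Employment also rose by 0.06 at the comparison sites.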

Q 20. How do you handle conflicting stakeholder perspectives on program evaluation?

Conflicting stakeholder perspectives are inevitable in program evaluations. The key is to manage these conflicts constructively and transparently. I facilitate open dialogue and encourage stakeholders to articulate their perspectives and concerns. I create a safe and inclusive space for discussion, emphasizing the shared goal of improving the program.

I use techniques like structured deliberation to guide discussions and ensure all viewpoints are heard. This could involve using a structured framework to explore different perspectives, identifying common ground, and working towards consensus. Sometimes, not all conflicts can be resolved completely, but the process of structured discussion can lead to a shared understanding of the different perspectives and inform more nuanced conclusions and recommendations. The focus remains on utilizing the diverse perspectives to enrich the evaluation and strengthen the recommendations.

Q 21. Describe your experience with utilizing mixed methods in evaluation research.

Mixed methods approaches, combining quantitative and qualitative methods, are powerful tools in evaluation research. They offer a more holistic and nuanced understanding of program effectiveness than relying on either approach alone. For example, in evaluating a community development project, I might combine two kinds of data:

- quantitative data (e.g., surveys and administrative records) to assess changes in community indicators like crime rates or business growth
- qualitative data (e.g., interviews and focus groups) to explore the lived experiences of residents and the factors contributing to or hindering program success

The integration of quantitative and qualitative data can take various forms:

- Sequential designs: One type of data informs the other. For example, qualitative findings can shape a later survey, or survey results can be followed up with interviews that help explain them.
- Concurrent designs: Both types of data are collected at the same time.

The specific approach depends on the research questions and the resources available. The strength of mixed methods lies in their ability to provide a more comprehensive, in-depth understanding of the program’s effects, leading to more robust and contextually relevant conclusions.

Q 22. What are the limitations of different evaluation approaches?

Different evaluation approaches, such as quantitative, qualitative, and mixed methods, each have unique strengths and limitations:

- Quantitative approaches: Relying heavily on statistical analysis of numerical data, they can lack contextual richness and may miss nuanced perspectives. For instance, a quantitative evaluation of a literacy program that focuses solely on test scores might overlook improvements in critical thinking or confidence, which are crucial aspects of literacy development.

- Qualitative approaches: Emphasizing in-depth understanding through interviews, observations, and document analysis, they can be time-consuming, and their findings may not generalize to larger populations. Imagine trying to understand the impact of a new teaching method solely through interviews with a small group of teachers; the findings might not represent the experiences of all teachers.

- Mixed methods: Combining quantitative and qualitative data offers a more comprehensive picture, but these evaluations can be complex and resource-intensive, demanding expertise in both approaches.

The optimal approach always depends on the specific evaluation question, available resources, and desired level of detail.

Q 23. How do you ensure the sustainability of program impacts beyond the evaluation period?

Ensuring the sustainability of program impacts requires a multifaceted strategy that starts during program implementation. It rests on four elements:

- Building local capacity: The organization or community must be able to continue the program’s activities after the evaluation period. For example, we might train local staff to implement the program’s core components, ensuring they have the necessary skills and knowledge.
- Strong partnerships: Government agencies, community organizations, and other relevant actors can provide ongoing support and resources.
- Systemic change: Influencing policy decisions or integrating the program into existing institutional structures helps sustain it.
- Documentation: Recording best practices and lessons learned from the evaluation provides a blueprint for future implementation and adaptation.

In a recent evaluation of a rural development project, we worked closely with local leaders to establish a management committee that could oversee the project’s activities after our involvement ended, helping the project’s positive impacts last.

Q 24. How do you select appropriate indicators for measuring program success?

Selecting appropriate indicators for measuring program success requires a clear understanding of the program’s goals and objectives. We start by identifying the key outcomes the program aims to achieve. For instance, if the program is designed to improve student literacy, indicators might include test scores, reading comprehension levels, and changes in students’ attitudes towards reading. It is crucial to use a mix of outcome indicators, which measure changes in the target population, and process indicators, which monitor program implementation fidelity. Process indicators ensure that the program is being delivered as intended. For instance, for the literacy program, process indicators might track the number of training sessions conducted for teachers, the number of books distributed, and the level of teacher engagement. The selection of indicators should also consider data availability, feasibility of data collection, and the resources required. Ideally, indicators should be SMART (Specific, Measurable, Achievable, Relevant, and Time-bound).
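The Python sketch below makes the SMART criteria concrete for the literacy example. It records one outcome indicator and one process indicator, each with a baseline, a target, and a deadline. It reports progress as the share of the planned baseline-to-target change achieved so far. The class, field names, dates, and numbers are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Indicator:
    name: str
    kind: str          # "outcome" or "process"
    baseline: float
    target: float
    deadline: date     # the "time-bound" part of SMART
    actual: float

    def progress(self) -> float:
        """Share of the planned baseline-to-target change achieved so far."""
        if self.target == self.baseline:
            raise ValueError("target must differ from baseline")
        return (self.actual - self.baseline) / (self.target - self.baseline)


indicators = [
    Indicator("Students reading at grade level (share)", "outcome", 0.45, 0.60, date(2025, 6, 30), 0.54),
    Indicator("Teacher training sessions delivered", "process", 0, 24, date(2025, 3, 31), 18),
]
for ind in indicators:
    print(f"{ind.kind:8} {ind.name}: {ind.progress():.0%} of planned change (deadline {ind.deadline})")
```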

Q 25. Explain your experience with cost-benefit analysis in evaluation.

Cost-benefit analysis (CBA) is a crucial aspect of evaluation, helping to determine the economic efficiency of a program. In a recent project evaluating a job training program, we conducted a CBA by estimating the program’s costs (e.g., training materials, instructor salaries) and comparing them to the benefits (e.g., increased employment rates, higher wages, reduced welfare dependency). We used both quantitative and qualitative data. Quantitative data included wage increases and employment statistics. Qualitative data helped us understand the long-term impacts on participants’ lives (e.g., improved self-esteem, better quality of life). This holistic approach gave us a more comprehensive picture. The results informed policy decisions on resource allocation, showing that the program offered a significant return on investment. Challenges in CBA include accurately quantifying intangible benefits and assigning monetary values to non-market effects, such as improved health or environmental protection. We addressed this using various valuation techniques like contingent valuation, where we surveyed participants to estimate their willingness to pay for the program’s benefits.
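The core arithmetic of a CBA is to discount costs and benefits that occur in different years to present value, then compare them. The Python sketch below does this, assuming intangible benefits have already been monetized (e.g., through contingent valuation). The cash flows and the 3% and 7% discount rates are hypothetical. The appropriate rate depends on the funder’s or government’s guidance, which is why the sketch varies it as a simple sensitivity check.

```python
def present_value(flows, rate):
    """Discount yearly amounts (year 0 first) back to today's value."""
    return sum(amount / (1 + rate) ** year for year, amount in enumerate(flows))


def cost_benefit(costs, benefits, rate):
    """Net present value and benefit-cost ratio from yearly cost and benefit streams."""
    pv_costs = present_value(costs, rate)
    pv_benefits = present_value(benefits, rate)
    return {
        "pv_costs": pv_costs,
        "pv_benefits": pv_benefits,
        "net_present_value": pv_benefits - pv_costs,
        "benefit_cost_ratio": pv_benefits / pv_costs,
    }


# Hypothetical job training program: costs fall early, monetized benefits arrive later
costs = [100_000, 20_000, 0]        # years 0, 1, 2
benefits = [0, 60_000, 90_000]      # e.g., earnings gains, reduced welfare payments
for rate in (0.03, 0.07):           # sensitivity analysis over the discount rate
    result = cost_benefit(costs, benefits, rate)
    print(f"rate {rate:.0%}: NPV = {result['net_present_value']:,.0f}, "
          f"BCR = {result['benefit_cost_ratio']:.2f}")
```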

Q 26. Describe your experience with using technology for data collection and analysis in evaluation.

Technology has revolutionized data collection and analysis in evaluation. I have extensive experience using software tools for data management, analysis, and visualization. For instance, I’ve used REDCap for secure data collection, SPSS for statistical analysis, and R for advanced statistical modeling. In a recent evaluation of a public health intervention, we used mobile devices equipped with survey software to collect data from participants in real time. This streamlined data collection, reducing errors and increasing efficiency. Technology also facilitates data analysis: statistical software packages make it practical to analyze large datasets and to apply more sophisticated analytical approaches. Data visualization tools like Tableau and Power BI allow us to present complex findings clearly and effectively to both technical and non-technical audiences. The use of technology also raises ethical considerations, such as data security and privacy, which require strict adherence to ethical guidelines.
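
Two technical points in this answer, catching errors at the point of entry and protecting participant privacy, can be illustrated without any particular tool. The Python sketch below assumes hypothetical range rules and field names (`age`, `sessions_attended`), checks each incoming record against them, and replaces the participant ID with a keyed hash (HMAC-SHA256 from the standard library) so analysts can link records without seeing the raw identifier. It is an illustration only, not how REDCap or any specific survey app implements these features.

```python
import hashlib
import hmac

# Hypothetical range rules for a mobile survey form; real rules come from the evaluation's codebook.
RULES = {
    "age": (18, 99),
    "sessions_attended": (0, 20),
}


def validate(record):
    """Return a list of problems found in one survey record (an empty list means it passes)."""
    problems = []
    for field, (low, high) in RULES.items():
        value = record.get(field)
        if value is None:
            problems.append(f"{field}: missing")
        elif not low <= value <= high:
            problems.append(f"{field}: {value} outside {low}-{high}")
    return problems


def pseudonymize(participant_id, secret_key):
    """Replace a direct identifier with a keyed hash; the key must be stored apart from the data."""
    return hmac.new(secret_key, participant_id.encode("utf-8"), hashlib.sha256).hexdigest()


key = b"replace-with-a-key-stored-outside-the-dataset"   # hypothetical placeholder key
record = {"participant_id": "P-0042", "age": 17, "sessions_attended": 5}
print(validate(record))  # ['age: 17 outside 18-99']
safe_record = {**record, "participant_id": pseudonymize(record["participant_id"], key)}
```

Using a keyed hash rather than a plain hash means someone without the key cannot recreate the mapping by hashing a list of known IDs.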

Q 27. How do you communicate complex evaluation findings to non-technical audiences?

Communicating complex evaluation findings to non-technical audiences requires careful planning and clear communication strategies. I avoid technical jargon and instead use clear, concise language, with analogies and real-world examples to illustrate complex concepts. For instance, instead of saying “the regression model showed a statistically significant effect,” I might say “participants in the program improved more than similar people who did not take part, and this difference is unlikely to be due to chance alone.” Visual aids, such as charts, graphs, and infographics, are essential tools for simplifying information. Storytelling also plays a crucial role, using narratives to convey the human impact of the program. In a recent presentation, I used case studies of individual participants to illustrate the program’s impact, making the data more relatable and compelling. In addition to the report, we created a short video that summarized the key findings in an accessible way.
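
One way to keep plain-language summaries consistent across a report is to generate the sentence from the estimate and its confidence interval. The Python sketch below is hypothetical: it assumes an effect estimate with a 95% confidence interval from a design that compares participants with similar non-participants, and that higher values of the outcome are better. Its main point is that the wording about certainty comes from the interval, not from the size of the estimate.

```python
def plain_language_effect(outcome, estimate, ci_low, ci_high, unit):
    """Describe an estimated program effect and its 95% confidence interval in everyday words.

    Assumes higher values of the outcome are better and that the comparison group
    is similar non-participants; adjust the wording if either is not true.
    """
    direction = "higher" if estimate >= 0 else "lower"
    if ci_low > 0 or ci_high < 0:
        # The interval excludes zero, the plain-language counterpart of "statistically significant".
        certainty = "and this difference is unlikely to be due to chance alone"
    else:
        certainty = "but a difference this size could plausibly be due to chance"
    return (
        f"On average, participants' {outcome} was about {abs(estimate):g} {unit} {direction} "
        f"than that of similar people who did not take part "
        f"(a plausible range is {ci_low:g} to {ci_high:g} {unit}), {certainty}."
    )


print(plain_language_effect("reading score", 4.2, 1.1, 7.3, "points"))
```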

Q 28. What are some common challenges in conducting evaluation research, and how have you overcome them?

Common challenges in evaluation research include gaining access to data, managing stakeholder expectations, and ensuring the ethical conduct of the research. Gaining access to data can be challenging, particularly in sensitive contexts. I’ve overcome this by building strong relationships with stakeholders, explaining the purpose of the evaluation, and emphasizing the benefits of the research. Managing stakeholder expectations involves clear communication from the outset, setting realistic timelines and deliverables, and actively engaging stakeholders throughout the evaluation process. Ensuring ethical conduct involves obtaining informed consent from participants, protecting their privacy and confidentiality, and adhering to relevant ethical guidelines. In a study involving vulnerable populations, we worked closely with an ethics committee to ensure that our research methods were ethically sound and protected the rights of participants. We addressed potential biases through rigorous sampling strategies and careful data analysis techniques.
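
The answer mentions rigorous sampling strategies without naming one. Stratified random sampling is a common choice when subgroups (for example, program sites or demographic groups) must be represented; it is offered here as one illustration, not necessarily the strategy used in the study described. The Python sketch assumes a list of participant records and a function that returns each record’s stratum, and allocates the sample to strata in proportion to their size.

```python
import random
from collections import defaultdict


def stratified_sample(population, stratum_of, total_n, seed=None):
    """Draw a proportionally allocated stratified random sample.

    Each stratum gets round(total_n * stratum_size / population_size) units, so the
    total can differ slightly from total_n when shares do not divide evenly.
    """
    if not population:
        return []
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        n = round(total_n * len(members) / len(population))
        sample.extend(rng.sample(members, min(n, len(members))))
    return sample


# Hypothetical participant list: 70 urban and 30 rural participants.
participants = [{"id": i, "site": "urban" if i < 70 else "rural"} for i in range(100)]
sample = stratified_sample(participants, lambda p: p["site"], total_n=20, seed=1)
print(sum(p["site"] == "rural" for p in sample))  # 6
```

Sampling within each stratum guarantees the rural group its 30% share of the sample, whereas a simple random sample of 20 could under-represent it by chance.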

Key Topics to Learn for Evaluation Research Interview

- Evaluation Design: Understand different evaluation designs (e.g., experimental, quasi-experimental, qualitative) and their strengths and weaknesses. Consider how to select the most appropriate design for a given research question and context.

- Data Collection Methods: Become proficient in various data collection techniques, including surveys, interviews, focus groups, document analysis, and observational methods. Practice explaining the rationale behind choosing specific methods for a particular evaluation.

- Quantitative Data Analysis: Master basic statistical concepts and techniques relevant to evaluating program outcomes. Be prepared to discuss descriptive statistics, inferential statistics (e.g., t-tests, ANOVA), and regression analysis. Focus on interpreting results in the context of the evaluation question.

- Qualitative Data Analysis: Develop skills in qualitative data analysis methods, such as thematic analysis, grounded theory, and content analysis. Practice coding and interpreting qualitative data to identify key themes and patterns.

- Program Logic Models: Understand how to develop and utilize program logic models to clarify program theory, identify key inputs, activities, outputs, outcomes, and overall impact. Be able to critically evaluate existing logic models.

- Reporting and Dissemination: Practice effectively communicating evaluation findings to diverse audiences through clear and concise reports, presentations, and visualizations. Develop your ability to tailor your communication style to the audience’s needs and knowledge level.

- Ethical Considerations: Familiarize yourself with ethical issues related to evaluation research, including informed consent, confidentiality, and data security. Be prepared to discuss ethical dilemmas and how to address them.

- Utilizing Existing Data: Explore how secondary data analysis can be utilized in evaluation research, including administrative data and publicly available datasets. This demonstrates resourcefulness and cost-effectiveness.

- Stakeholder Engagement: Understand the importance of engaging stakeholders throughout the evaluation process, from planning to dissemination. Be prepared to discuss strategies for effective collaboration and communication with diverse stakeholders.
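
The evaluation design and quantitative analysis topics come together in quasi-experimental designs. As one widely used example (not named in the list above), a difference-in-differences estimate compares the change over time in a program group with the change in a comparison group. The Python sketch below uses group means only and hypothetical test scores; a real analysis would typically use regression to obtain standard errors and would examine whether the parallel-trends assumption is plausible.

```python
from statistics import mean


def difference_in_differences(treat_pre, treat_post, comp_pre, comp_post):
    """Program effect = change in the program group minus change in the comparison group.

    Subtracting the comparison group's change removes trends that affect both groups
    equally (the parallel-trends assumption), which a simple before-after comparison cannot do.
    """
    treated_change = mean(treat_post) - mean(treat_pre)
    comparison_change = mean(comp_post) - mean(comp_pre)
    return treated_change - comparison_change


# Hypothetical reading scores before and after a literacy program.
program_before, program_after = [60, 62, 64], [68, 70, 72]         # mean rises by 8
comparison_before, comparison_after = [61, 63, 65], [64, 66, 68]   # mean rises by 3

effect = difference_in_differences(program_before, program_after, comparison_before, comparison_after)
print(effect)  # 5
```

A before-after comparison of the program group alone would report a gain of 8 points; the comparison group shows that about 3 of those points would likely have occurred anyway.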

Next Steps

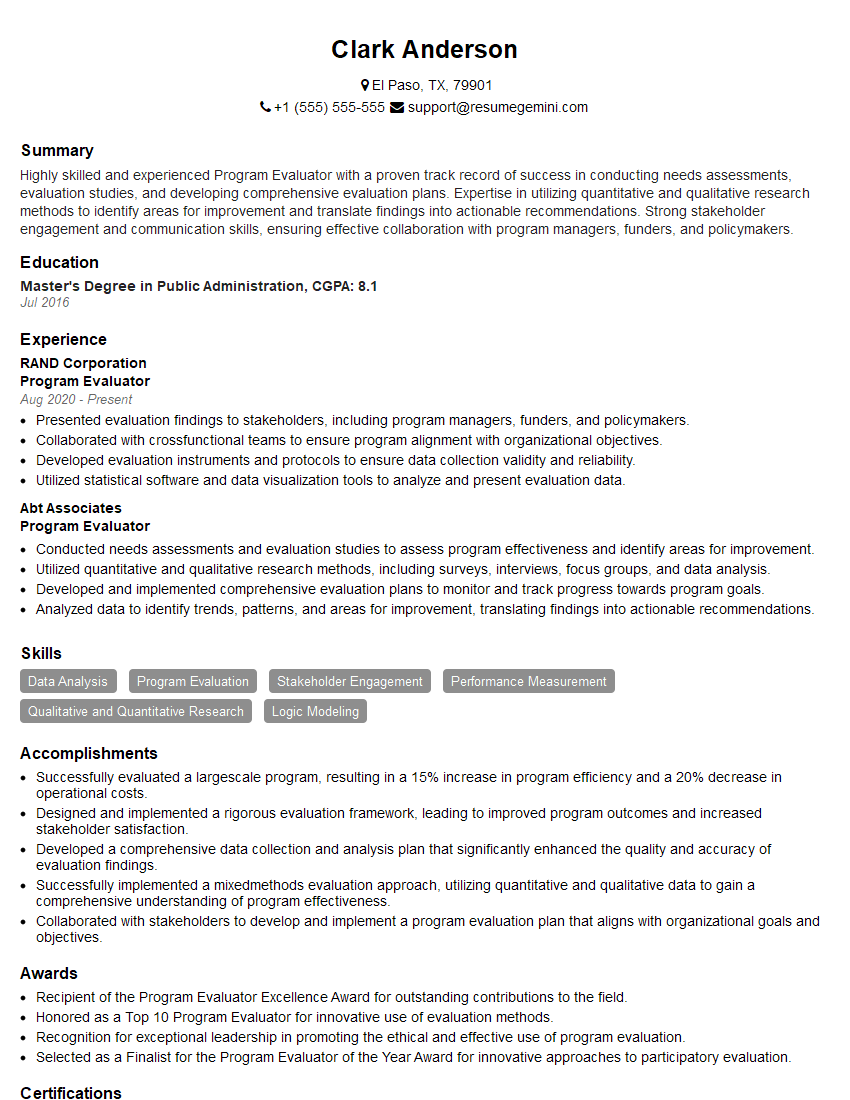

Mastering evaluation research significantly enhances your career prospects, opening doors to impactful roles in diverse sectors. A strong, ATS-friendly resume is crucial for maximizing your job search success. ResumeGemini is a trusted resource that can help you craft a compelling resume showcasing your evaluation research skills and experience. Examples of resumes tailored to Evaluation Research are provided to help guide you. Take the next step towards your dream career!
