Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Statistical Software Proficiency (e.g., SPSS, SAS) interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Statistical Software Proficiency (e.g., SPSS, SAS) Interview

Q 1. Explain the difference between a one-tailed and a two-tailed test.

Imagine you’re testing a new drug. A one-tailed test specifies the direction of the effect in advance (only improvement, or only worsening). A two-tailed test checks for any difference: improvement *or* worsening. For example, if you’re testing whether a new fertilizer increases crop yield and a decrease would not change your decision, you might use a one-tailed test with a positive alternative hypothesis. A two-tailed test asks whether there is *any* difference from a control group, positive or negative. The choice matters because it changes how the p-value is computed. At the same significance level, a two-tailed test splits the rejection region across both tails, so it needs a larger test statistic to reject the null hypothesis (no difference). In SAS, PROC TTEST takes a SIDES= option (2, U, or L). SPSS’s T Test output reports two-sided significance, and newer releases also list a one-sided p-value.

In short: One-tailed tests look for an effect in one direction; two-tailed tests look for an effect in either direction. The choice depends on the research question and prior knowledge.
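To make the difference concrete, here is a small Python sketch (standard library only, not SPSS or SAS code) that converts a hypothetical z statistic into upper one-tailed and two-tailed p-values using the normal distribution. The function name `p_values` and the value 1.8 are illustrative assumptions.

```python
import math

def p_values(z):
    """Return (upper one-tailed, two-tailed) p-values for a standard normal z statistic."""
    # P(Z > z): evidence for an effect in the hypothesized (positive) direction only
    one_tailed = 0.5 * math.erfc(z / math.sqrt(2))
    # P(|Z| > |z|): evidence for an effect in either direction
    two_tailed = math.erfc(abs(z) / math.sqrt(2))
    return one_tailed, two_tailed

one, two = p_values(1.8)
print(f"one-tailed p = {one:.4f}, two-tailed p = {two:.4f}")
```

For z = 1.8 this gives roughly 0.036 versus 0.072, so the same data would be significant at α = 0.05 under a one-tailed test but not under a two-tailed one. That is why the direction must be chosen before looking at the data.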

Q 2. Describe the assumptions of linear regression.

Linear regression models the relationship between a dependent variable and one or more independent variables. Several assumptions underpin its validity:

- Linearity: The relationship between the dependent and independent variables is linear. A scatter plot helps visualize this. Transformations might be needed if this assumption is violated.

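- Independence of errors: Residuals (differences between observed and predicted values) are independent of each other. This is often violated in time-series data, where consecutive errors tend to be correlated (autocorrelation). The Durbin-Watson statistic helps detect first-order autocorrelation. Remedies include adding lagged terms or using models designed for autocorrelated errors.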

- Homoscedasticity: The variance of the residuals is constant across all levels of the independent variable(s). This implies the spread of the data points is consistent around the regression line. Visual inspection using residual plots helps check this. If violated, transformations or weighted least squares might be considered.

- Normality of errors: Residuals are normally distributed. This assumption matters less with larger sample sizes because of the Central Limit Theorem. Histograms or Q-Q plots of the residuals help assess normality. If it is severely violated, consider transforming the dependent variable or using robust regression techniques.

- No multicollinearity: Independent variables are not highly correlated with each other. High multicollinearity inflates standard errors and makes it difficult to interpret the effect of individual predictors. Variance Inflation Factors (VIFs) help detect multicollinearity.

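Violating these assumptions can lead to inaccurate or misleading results, so check them with diagnostic plots and tests after fitting the model. In SPSS, the Linear Regression dialog’s Statistics and Plots buttons provide collinearity diagnostics, the Durbin-Watson statistic, and residual plots. In SAS, PROC REG offers options such as VIF and DW on the MODEL statement.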
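As a rough illustration of what these diagnostics compute, the Python sketch below fits a one-predictor least-squares line, extracts the residuals, and computes the Durbin-Watson statistic used to check independence of errors. The helper names and the toy time-ordered data are hypothetical, and this is not how SPSS or SAS implement the procedure internally.

```python
def fit_simple_ols(x, y):
    """Least-squares intercept and slope for a single predictor."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

def residuals(x, y, intercept, slope):
    """Observed minus predicted values."""
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]

def durbin_watson(res):
    """Near 2: no first-order autocorrelation; toward 0: positive; toward 4: negative."""
    num = sum((res[t] - res[t - 1]) ** 2 for t in range(1, len(res)))
    return num / sum(e ** 2 for e in res)

# Hypothetical toy data, assumed to be ordered in time
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1]
b0, b1 = fit_simple_ols(x, y)
res = residuals(x, y, b0, b1)
print(f"intercept={b0:.3f}, slope={b1:.3f}, DW={durbin_watson(res):.2f}")
```

The same residuals would feed the residual-versus-fitted plot (homoscedasticity) and the Q-Q plot (normality).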

Q 3. How do you handle missing data in SPSS/SAS?

Missing data is a common challenge. The best approach depends on the pattern and amount of missingness, as well as the nature of the data. In SPSS and SAS, several methods exist:

- Listwise deletion: Cases with any missing values are excluded from the analysis. This is simple, but it can discard a large share of the data when missingness is spread across many variables, and it can bias results if the data are not missing completely at random (MCAR).

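- Pairwise deletion: Each statistic uses all cases that have data on the variables it involves. For example, each correlation uses every case with both variables observed. This preserves more data, but different statistics rest on different subsets of cases, so a correlation matrix built this way can be internally inconsistent.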

- Mean/Median imputation: Missing values are replaced with the mean or median of the observed values for that variable. Simple but can underestimate the variance and distort relationships.

- Regression imputation: Missing values are predicted using a regression model based on other variables. More sophisticated than mean imputation but still introduces bias if the relationships are not accurately modelled.

- Multiple imputation: Creates multiple plausible datasets by imputing missing data multiple times using different imputation models. Analysis is done on each imputed dataset, and results are combined to provide a more robust estimate.

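- Maximum likelihood estimation: This approach (e.g., full information maximum likelihood) uses all available data during parameter estimation without imputing values. Like multiple imputation, it gives valid estimates when data are missing at random (MAR), and it is often preferred when the amount of missing data is considerable.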

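In SPSS, the MISSING VALUES command declares which codes count as missing, Analyze > Missing Value Analysis explores missingness patterns, and Analyze > Multiple Imputation creates imputed datasets. In SAS, PROC MI performs multiple imputation (including regression-based methods), and PROC MIANALYZE combines the results across the imputed datasets.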

The choice of method depends on the context. For instance, if missing data is MCAR and the percentage is low, listwise deletion might be acceptable. But for more complex scenarios, multiple imputation is often preferred for its robustness.
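The warning that mean imputation underestimates variance is easy to verify. In the Python sketch below, the variable `income` and its values are hypothetical. Filling two gaps with the observed mean adds cases without adding any spread, so the standard deviation shrinks.

```python
from statistics import mean, stdev

# Hypothetical variable with two missing values (None)
income = [42, 55, None, 61, 38, None, 70, 49]

observed = [v for v in income if v is not None]   # the 6 available values
fill = mean(observed)                              # observed mean, 52.5
imputed = [fill if v is None else v for v in income]

print(f"SD of observed values:    {stdev(observed):.2f}")
print(f"SD after mean imputation: {stdev(imputed):.2f}")
```

The sum of squared deviations stays the same while the divisor grows from 5 to 7, so the SD falls by a factor of √(5/7). Standard errors computed from the imputed data are therefore too small, which is why multiple imputation is usually preferred.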

Q 4. What are the different methods for outlier detection?

Outliers are data points that significantly deviate from the rest of the data. Several methods exist for their detection:

- Visual inspection: Scatter plots, box plots, and histograms can visually reveal outliers.

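- Z-scores: Data points whose absolute Z-score exceeds a threshold (e.g., |Z| > 3) are flagged as outliers. This method assumes approximate normality. Extreme values also inflate the mean and standard deviation they are measured against, so the method can miss outliers, especially in small samples.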

- Interquartile range (IQR): Outliers are defined as points below Q1 – 1.5*IQR or above Q3 + 1.5*IQR, where Q1 and Q3 are the first and third quartiles.

- Cook’s distance (regression): Measures the influence of each data point on the regression coefficients. High Cook’s distance suggests an influential outlier.

- Leverage (regression): Measures how far a data point’s independent variable values are from the means of the independent variables. High leverage points can unduly influence the regression model.

Outlier handling requires careful consideration. You might need to investigate why the outlier occurred – a data entry error or a genuinely unusual observation. Options include removing the outlier (if justified), transforming the data, or using robust methods less sensitive to outliers.
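The two rule-based methods can be compared directly. This Python sketch applies the |Z| > 3 rule and the 1.5 × IQR rule to a hypothetical sample with one obvious outlier. It uses `statistics.quantiles` (default "exclusive" method) for the quartiles, which may differ slightly from the quartile definitions SPSS or SAS use.

```python
import statistics

def zscore_outliers(data, threshold=3.0):
    """Values whose absolute Z-score (sample SD) exceeds the threshold."""
    mean = statistics.mean(data)
    sd = statistics.stdev(data)
    return [x for x in data if abs((x - mean) / sd) > threshold]

def iqr_outliers(data, k=1.5):
    """Values below Q1 - k*IQR or above Q3 + k*IQR."""
    q1, _, q3 = statistics.quantiles(data, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [x for x in data if x < low or x > high]

data = [10, 12, 11, 13, 12, 11, 14, 12, 13, 95]   # hypothetical measurements
print("Z-score rule:", zscore_outliers(data))      # []
print("IQR rule:    ", iqr_outliers(data))         # [95]
```

The Z-score rule misses 95 here because the outlier inflates the standard deviation. With only 10 points, no value can exceed |Z| = (n − 1)/√n ≈ 2.85. The IQR rule is based on quartiles and is not affected this way.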

Q 5. Explain the concept of p-value and its significance.

The p-value represents the probability of obtaining results as extreme as, or more extreme than, the observed results, assuming the null hypothesis is true. It’s a measure of evidence *against* the null hypothesis. A small p-value (below the chosen significance level, commonly 0.05) indicates strong evidence against the null hypothesis and leads to its rejection. This does *not* mean the alternative hypothesis is proven; it simply means there’s sufficient evidence to reject the null. The significance level (alpha) is a convention rather than a law; 0.05 is the most common choice.

For example, a p-value of 0.03 for a t-test means that, if there were truly no difference between the groups being compared, there would be only a 3% chance of obtaining a result at least as extreme as the one observed. At α = 0.05, we therefore reject the null hypothesis of no difference.

It’s crucial to interpret p-values within the context of the study design, sample size, and effect size. A small p-value with a small effect size might be statistically significant but not practically meaningful.
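The definition "as extreme as, or more extreme than, assuming the null hypothesis is true" can be computed directly with an exact permutation test. Under the null, group labels are interchangeable, so we count how many relabelings produce a mean difference at least as large as the observed one. This Python sketch uses hypothetical data and is a different technique from the t-test SPSS and SAS run, but it illustrates what a p-value measures.

```python
from itertools import combinations
from statistics import mean

def permutation_p_value(a, b):
    """Exact two-sided permutation p-value for a difference in group means."""
    pooled = a + b
    observed = abs(mean(a) - mean(b))
    extreme = total = 0
    # Enumerate every way to assign len(a) of the pooled values to group A
    for idx in combinations(range(len(pooled)), len(a)):
        chosen = set(idx)
        grp_a = [pooled[i] for i in idx]
        grp_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        if abs(mean(grp_a) - mean(grp_b)) >= observed - 1e-12:  # tolerance for float ties
            extreme += 1
        total += 1
    return extreme / total

treatment = [5, 6, 7]   # hypothetical scores
control = [1, 2, 3]
print(permutation_p_value(treatment, control))
```

Only 2 of the 20 possible splits are as extreme as the observed one, so p = 0.1. That is not significant at 0.05 despite the visibly large difference, which shows how sample size affects the p-value.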

Q 6. What are the different types of sampling methods?

Sampling methods determine how we select a subset (sample) from a larger population. Different methods have different implications for the representativeness of the sample and the generalizability of the results.

- Probability sampling: Every member of the population has a known, non-zero probability of being selected. Examples include:

  - Simple random sampling: Each member has an equal chance of being selected.

  - Stratified random sampling: The population is divided into strata (subgroups), and random samples are drawn from each stratum.

  - Cluster sampling: The population is divided into clusters, and a random sample of clusters is selected. In one-stage cluster sampling, all members of the selected clusters are included.

  - Systematic sampling: Every kth member of the population is selected after a random starting point.

- Non-probability sampling: The probability of selection is unknown. Examples include:

  - Convenience sampling: Selecting readily available individuals.

  - Quota sampling: Selecting participants to meet pre-defined quotas (e.g., a certain number of males and females).

  - Snowball sampling: Participants recruit other participants.

  - Purposive sampling: Selecting participants based on specific characteristics.

The choice of sampling method depends on the research question, resources, and the nature of the population. Probability sampling generally yields more generalizable results, but it’s often more time-consuming and expensive.
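The three most mechanical probability designs can be sketched in a few lines of Python. The population of 100 numbered units, the odd/even strata, and the equal allocation of 5 units per stratum are all hypothetical choices. Proportional allocation is also common in practice.

```python
import random

def simple_random_sample(population, n, rng):
    """Every subset of size n is equally likely."""
    return rng.sample(population, n)

def systematic_sample(population, n, rng):
    """Random start within the first interval, then every kth unit."""
    k = len(population) // n          # sampling interval
    start = rng.randrange(k)
    return population[start::k][:n]

def stratified_sample(population, stratum_of, n_per_stratum, rng):
    """Simple random sample of n_per_stratum units from each stratum."""
    strata = {}
    for unit in population:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for members in strata.values():
        sample.extend(rng.sample(members, n_per_stratum))
    return sample

rng = random.Random(42)                # fixed seed for reproducibility
population = list(range(100))
srs = simple_random_sample(population, 10, rng)
sys_s = systematic_sample(population, 10, rng)
strat = stratified_sample(population, lambda u: u % 2, 5, rng)  # strata: even / odd
print(srs, sys_s, strat, sep="\n")
```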

Q 7. How do you perform a t-test in SPSS/SAS?

A t-test compares the means of two groups. In SPSS and SAS, the procedure is fairly straightforward:

SPSS:

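- Go to Analyze > Compare Means > Independent-Samples T Test (for comparing the means of two independent groups) or Analyze > Compare Means > Paired-Samples T Test (for comparing the means of two related measurements).

- For independent samples, move the dependent variable into the Test Variable(s) box and the grouping variable into the Grouping Variable box, then click Define Groups to specify the two group codes. For paired samples, select the two related variables as a pair.

- Run the analysis. The output includes the t-statistic, degrees of freedom, p-value, and confidence interval for the mean difference. For independent samples, it also includes Levene’s test for equality of variances.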

SAS:

You would use PROC TTEST. For example, for independent samples:

```sas
proc ttest data=mydata;
  class group;
  var score;
run;
```

Here, mydata is your dataset, group is the grouping variable, and score is the dependent variable.

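The output provides information similar to SPSS’s output, including pooled (equal-variance) and Satterthwaite (unequal-variance) results and a test for equality of variances. Before interpreting the results, check the assumptions: approximately normal data within each group, equal variances for the pooled test, and independent observations. If they are badly violated, consider a non-parametric alternative: the Mann-Whitney U test for independent samples or the Wilcoxon signed-rank test for paired samples.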
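To show what the "pooled" and "unequal variances" rows of the output contain, here is a Python sketch of both statistics. SAS labels the second one Satterthwaite; SPSS labels it "Equal variances not assumed". The data are hypothetical, and p-values are omitted because they would require the t distribution, which Python’s standard library does not provide.

```python
import math
from statistics import mean, variance

def pooled_t(a, b):
    """Student's t assuming equal variances; returns (t, df)."""
    n1, n2 = len(a), len(b)
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    t = (mean(a) - mean(b)) / math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2

def welch_t(a, b):
    """Welch's t with Welch-Satterthwaite degrees of freedom; returns (t, df)."""
    n1, n2 = len(a), len(b)
    v1, v2 = variance(a) / n1, variance(b) / n2
    t = (mean(a) - mean(b)) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

group_a = [5, 6, 7]   # hypothetical scores
group_b = [1, 2, 3]
print(pooled_t(group_a, group_b), welch_t(group_a, group_b))
```

With equal sample variances, the two versions agree (t ≈ 4.90, df = 4). When the variances differ, Welch’s degrees of freedom drop, which makes the test more conservative.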

Q 8. How do you perform ANOVA in SPSS/SAS?

Analysis of Variance (ANOVA) is a statistical test used to compare the means of two or more groups. In SPSS and SAS, the process is similar, though the menu navigation differs slightly. Both software packages use a general linear model (GLM) approach to perform ANOVA.

In SPSS: You’d typically go to Analyze > General Linear Model > Univariate (for a single factor, Analyze > Compare Means > One-Way ANOVA also works). Specify your dependent variable (the continuous variable you’re measuring) and your independent variable (the categorical variable defining your groups) as a fixed factor. SPSS then produces an ANOVA table containing F-statistics, p-values, and other statistics for assessing differences between group means. If the overall ANOVA is significant, post-hoc tests such as Tukey’s HSD (via the Post Hoc button) show which specific groups differ.

In SAS: The PROC GLM procedure is commonly used. A typical code snippet might look like this:

```sas
proc glm data=mydata;
  class group;
  model dependent_variable = group;
  means group / tukey;  /* Tukey HSD post-hoc comparisons */
run;
quit;
```

Here, ‘group’ represents your categorical independent variable and ‘dependent_variable’ is your continuous dependent variable. SAS outputs an ANOVA table similar to SPSS’s, and the MEANS statement with the TUKEY option adds pairwise post-hoc comparisons.

Imagine a scenario where you’re testing the effectiveness of three different fertilizers on plant growth. Your dependent variable would be plant height, and your independent variable would be the fertilizer type. ANOVA would tell you if there’s a statistically significant difference in plant height among the three fertilizer groups.
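The ANOVA table’s F-statistic is the between-group mean square divided by the within-group mean square. The Python sketch below computes it for hypothetical plant heights under three fertilizers. It is meant to show the arithmetic, not to replace PROC GLM or SPSS output.

```python
from statistics import mean

def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups."""
    all_values = [v for g in groups for v in g]
    grand = mean(all_values)
    k, n = len(groups), len(all_values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within

# Hypothetical plant heights (cm) for fertilizers A, B and C
heights = [[20, 22, 24], [25, 27, 29], [30, 32, 34]]
print(one_way_anova(heights))   # (18.75, 2, 6)
```

With only two groups, F equals the square of the pooled t statistic, which is why a two-group ANOVA and an independent-samples t-test give the same p-value.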

Q 9. Explain the concept of correlation and its interpretation.

Correlation measures the strength and direction of a linear relationship between two continuous variables. A correlation coefficient, often represented by ‘r’, ranges from -1 to +1.

- +1: Perfect positive correlation – the points lie exactly on an upward-sloping straight line.

- 0: No linear correlation – there is no linear relationship, although a curved (nonlinear) relationship may still exist.

- -1: Perfect negative correlation – the points lie exactly on a downward-sloping straight line.

Interpreting the correlation coefficient involves considering both its magnitude and sign. A magnitude closer to +1 or -1 indicates a stronger relationship, while a magnitude closer to 0 indicates a weaker relationship. The sign indicates the direction of the relationship (positive or negative).

For example, a correlation coefficient of 0.8 between ice cream sales and temperature indicates a strong positive correlation: as temperature increases, ice cream sales tend to increase. A correlation coefficient of -0.7 between hours spent exercising and body weight might suggest a moderately strong negative correlation: as hours spent exercising increase, body weight tends to decrease.

Important Note: Correlation does not imply causation. Just because two variables are correlated doesn’t mean one causes the other. There could be a third, unmeasured variable influencing both.
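Pearson’s r is the covariance of the two variables scaled by both standard deviations. This Python sketch (hypothetical data) confirms the ±1 cases. It also shows that a perfect U-shaped relationship can still produce r = 0, because r only measures linear association.

```python
import math

def pearson_r(x, y):
    """Pearson correlation: covariance scaled by both standard deviations."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

x = [-3, -2, -1, 0, 1, 2, 3]
print(pearson_r(x, [2 * v + 1 for v in x]))  # exact upward line
print(pearson_r(x, [5 - v for v in x]))      # exact downward line
print(pearson_r(x, [v * v for v in x]))      # perfect U-shape, yet r is 0
```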

Q 10. How do you create a frequency table in SPSS/SAS?

Frequency tables summarize the distribution of a variable by showing the number (or percentage) of observations for each category or value. Creating them in SPSS and SAS is straightforward.

In SPSS: Navigate to Analyze > Descriptive Statistics > Frequencies. Select the variable(s) you want to summarize and choose the desired statistics (e.g., percentages, cumulative percentages).

In SAS: The PROC FREQ procedure is used. A simple example:

```sas
proc freq data=mydata;
  tables variable1 variable2;
run;
```

This code generates a frequency table for each of variable1 and variable2. For one-way tables, PROC FREQ shows percentages and cumulative frequencies by default; TABLES options such as NOCUM and NOPERCENT suppress them.

Imagine you’re analyzing survey data on customer satisfaction. A frequency table for the ‘satisfaction level’ variable (e.g., very satisfied, satisfied, neutral, dissatisfied, very dissatisfied) would show how many respondents fall into each category.
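The columns of a frequency table (count, percent, cumulative percent) are simple to reproduce. This Python sketch builds one for hypothetical satisfaction responses. It keeps the ordinal order of the levels and also lists levels with zero responses.

```python
from collections import Counter

def frequency_table(values, levels):
    """Rows of (level, count, percent, cumulative percent) in the given level order."""
    counts = Counter(values)
    total = len(values)
    rows, cumulative = [], 0.0
    for level in levels:
        n = counts.get(level, 0)
        pct = 100 * n / total
        cumulative += pct
        rows.append((level, n, round(pct, 1), round(cumulative, 1)))
    return rows

levels = ["very satisfied", "satisfied", "neutral", "dissatisfied", "very dissatisfied"]
responses = (["satisfied"] * 4 + ["neutral"] * 3
             + ["very satisfied"] * 2 + ["dissatisfied"])   # hypothetical survey data
for row in frequency_table(responses, levels):
    print(row)
```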

Q 11. Explain the difference between Type I and Type II errors.

Type I and Type II errors are potential mistakes in hypothesis testing. They relate to the decision of whether to reject or fail to reject the null hypothesis.

- Type I Error (False Positive): Rejecting the null hypothesis when it’s actually true. Think of it as a false alarm. The probability of making a Type I error is denoted by α (alpha), often set at 0.05 (5%).

- Type II Error (False Negative): Failing to reject the null hypothesis when it’s actually false. Think of it as missing a real effect. The probability of making a Type II error is denoted by β (beta).

Consider a medical test for a disease. A Type I error would be diagnosing someone with the disease when they don’t have it. A Type II error would be failing to diagnose someone who actually has the disease.

The balance between Type I and Type II errors is crucial. For a fixed sample size, lowering the probability of one type of error usually increases the probability of the other. The choice of α, the sample size, and the true effect size together determine these error probabilities.
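The trade-off can be quantified for a simple case. The Python sketch below assumes a one-sided z-test of H0: μ = 0 against a true mean of `effect`, with known σ. This is a textbook setting chosen for illustration, not a specific SPSS or SAS procedure. β is the probability that the test statistic stays below the critical value when H1 is true.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def type_ii_error(alpha, effect, sigma, n):
    """Beta for a one-sided z-test of H0: mu = 0 when the true mean is `effect` > 0."""
    z_crit = Z.inv_cdf(1 - alpha)             # rejection threshold under H0
    shift = effect * math.sqrt(n) / sigma     # mean of the test statistic under H1
    return Z.cdf(z_crit - shift)

for alpha in (0.05, 0.01):
    beta = type_ii_error(alpha, effect=0.5, sigma=1.0, n=25)
    print(f"alpha={alpha}: beta={beta:.3f}, power={1 - beta:.3f}")
```

Tightening α from 0.05 to 0.01 raises β from about 0.20 to about 0.43 in this hypothetical setup. Increasing n lowers β for either α.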

Q 12. How do you perform a chi-square test in SPSS/SAS?

The chi-square test is a statistical test used to analyze categorical data. It determines if there’s a significant association between two categorical variables. Both SPSS and SAS offer straightforward ways to perform this test.

In SPSS: Go to Analyze > Descriptive Statistics > Crosstabs. Select your two categorical variables, and under the Statistics button, check the Chi-square box. SPSS will output a chi-square statistic, degrees of freedom, and a p-value.

In SAS: Use PROC FREQ with the CHISQ option:

```sas
proc freq data=mydata;
  tables var1*var2 / chisq;
run;
```

This generates a chi-square test of independence for variables var1 and var2. The output includes the chi-square statistic, degrees of freedom, and p-value. For 2×2 tables, it also includes a continuity-adjusted chi-square and Fisher’s exact test.

For example, you might use a chi-square test to see if there’s an association between gender and preference for a particular brand of soft drink. The null hypothesis would be that there’s no association; the alternative hypothesis would be that there is an association.
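The Pearson chi-square statistic compares each observed cell count with the count expected under independence (row total × column total / grand total). This Python sketch uses a hypothetical 2×2 gender-by-brand table. For 1 degree of freedom, the p-value can be obtained from the normal distribution, because a chi-square variable with 1 df is the square of a standard normal.

```python
import math

def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(r) for r in table]
    col_totals = [sum(c) for c in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, df

# Hypothetical counts: rows = gender, columns = prefers brand A / brand B
counts = [[30, 20],
          [20, 30]]
chi2, df = chi_square_independence(counts)
p = math.erfc(math.sqrt(chi2 / 2))   # valid only for df = 1
print(f"chi2={chi2:.2f}, df={df}, p={p:.4f}")
```

This is the uncorrected Pearson statistic (4.0, p ≈ 0.046). The continuity-adjusted value reported for 2×2 tables is smaller.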

Q 13. What are the different types of data (nominal, ordinal, interval, ratio)?

Data can be categorized into four levels of measurement:

- Nominal: Categorical data with no inherent order. Examples include gender (male, female), eye color (blue, brown, green), and marital status (single, married, divorced).

- Ordinal: Categorical data with a meaningful order but no equal intervals between categories. Examples include education level (high school, bachelor’s, master’s), customer satisfaction (very satisfied, satisfied, neutral, dissatisfied, very dissatisfied), and rankings (first, second, third).

- Interval: Numerical data with equal intervals between values but no true zero point. Examples include temperature in Celsius or Fahrenheit (0°C doesn’t mean an absence of heat) and calendar years (year 0 doesn’t mean no time).

- Ratio: Numerical data with equal intervals and a true zero point. Examples include height, weight, age, income, and number of children.

Understanding the level of measurement is critical because it dictates the types of statistical analyses that are appropriate. For example, you can’t calculate a meaningful average for nominal data.

Q 14. How do you handle categorical variables in regression analysis?

Categorical variables can’t be entered into a regression model as raw category labels; they need to be converted into numerical indicator variables. The most common schemes are dummy (reference) coding and effect coding.

Dummy Coding: For a binary categorical variable (e.g., gender: male/female), create a dummy variable that takes the value 1 for one category (e.g., male) and 0 for the other (e.g., female). This allows the regression model to assess the difference in the outcome variable between the two groups.

For a categorical variable with k categories (e.g., education level: high school, bachelor’s, master’s), dummy coding uses k-1 indicator variables, each comparing one category with a reference category. For example, you might have a dummy variable for ‘bachelor’s’ (1 if bachelor’s degree, 0 otherwise) and another for ‘master’s’ (1 if master’s degree, 0 otherwise), with ‘high school’ as the reference category.

Effect Coding: This also uses k-1 variables, but the reference category is coded -1 on every one of them. Each coefficient then compares a category with the unweighted average of all the category means, rather than with the reference group.

Both SPSS and SAS can create these indicators for you, but not in every procedure. In SPSS, GLM Univariate handles factors automatically and Binary Logistic Regression has a Categorical button for defining contrasts, whereas Linear Regression expects you to create the dummy variables yourself (e.g., with RECODE or COMPUTE). In SAS, procedures such as PROC GLM and PROC LOGISTIC accept a CLASS statement (PROC LOGISTIC uses effect coding by default; PARAM=REF requests reference coding), while PROC REG requires precomputed dummy variables.

Example: Predicting income based on gender and education level. Gender is a binary variable (coded as 0 and 1), and education level is a categorical variable with multiple levels (high school, bachelor’s, master’s), which would need to be represented using dummy coding or other suitable methods before being included in your regression model.
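The difference between the two schemes is easiest to see side by side. This Python sketch encodes the three education levels with ‘high school’ as the reference. The function names are hypothetical helpers, not SPSS or SAS features.

```python
def dummy_code(values, levels, reference):
    """k-1 indicator columns; the reference category is all zeros."""
    others = [lvl for lvl in levels if lvl != reference]
    return others, [[1 if v == lvl else 0 for lvl in others] for v in values]

def effect_code(values, levels, reference):
    """k-1 columns; the reference category is coded -1 on every column."""
    others = [lvl for lvl in levels if lvl != reference]
    rows = []
    for v in values:
        if v == reference:
            rows.append([-1] * len(others))
        else:
            rows.append([1 if v == lvl else 0 for lvl in others])
    return others, rows

levels = ["high school", "bachelor's", "master's"]
people = ["high school", "bachelor's", "master's"]
print(dummy_code(people, levels, reference="high school"))
print(effect_code(people, levels, reference="high school"))
```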

Q 15. Explain the difference between correlation and causation.

Correlation and causation are often confused, but they represent distinct relationships between variables. Correlation simply indicates a statistical association between two or more variables – when one changes, the other tends to change as well. This change can be positive (both increase together) or negative (one increases as the other decreases). However, correlation does not imply causation. Just because two variables are correlated doesn’t mean one directly causes the change in the other.

Example: Ice cream sales and crime rates might be positively correlated. As ice cream sales increase, so might crime rates. However, this doesn’t mean eating ice cream causes crime. Both are likely influenced by a third variable, such as warmer weather. More people are outside in the summer, leading to increased ice cream consumption and potentially increased opportunities for crime.

Causation, on the other hand, implies a direct cause-and-effect relationship: one variable directly influences another. To establish causation, you need to demonstrate a temporal relationship (cause precedes effect) and an association, and you need to rule out other potential confounding variables. This often requires controlled experiments or sophisticated statistical analysis.
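The ice cream and crime example can be simulated. In the Python sketch below, both variables are generated from a hypothetical "temperature" variable plus independent noise, so neither causes the other. Their raw correlation is still substantial, and it largely disappears once temperature is controlled for with the standard partial correlation formula. The data are simulated, not real.

```python
import math
import random

def pearson_r(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def partial_r(r_xy, r_xz, r_yz):
    """Correlation of x and y after removing the linear effect of z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

rng = random.Random(1)  # fixed seed so the simulation is reproducible
temperature = [rng.gauss(0, 1) for _ in range(2000)]
ice_cream = [t + rng.gauss(0, 1) for t in temperature]  # depends on temperature only
crime = [t + rng.gauss(0, 1) for t in temperature]      # depends on temperature only

r_ic = pearson_r(ice_cream, crime)
r_partial = partial_r(r_ic, pearson_r(ice_cream, temperature), pearson_r(crime, temperature))
print(f"raw r = {r_ic:.2f}, r controlling for temperature = {r_partial:.2f}")
```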


Q 16. How do you perform logistic regression in SPSS/SAS?

Logistic regression is used to model the probability of a binary outcome (0 or 1, yes or no) based on one or more predictor variables. In SPSS and SAS, the process is similar but the syntax differs.

In SPSS:

- Go to Analyze > Regression > Binary Logistic.

- Move your dependent (binary outcome) variable into the ‘Dependent’ box and your predictor variables into the ‘Covariates’ box.

- You can choose various options like method (Enter, Forward, Backward, etc.), which affects how variables are entered into the model. Enter includes all variables simultaneously, while stepwise methods add or remove variables based on statistical significance.

- Click ‘OK’ to run the analysis. SPSS will output coefficients, odds ratios, and various goodness-of-fit statistics.

In SAS:

You’d use the PROC LOGISTIC procedure. A basic example looks like this:

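```sas
proc logistic data=mydata;
  model outcome(event='1') = predictor1 predictor2;
run;
```

Here, ‘mydata’ is your dataset, ‘outcome’ is your binary dependent variable, and ‘predictor1’ and ‘predictor2’ are your predictor variables. The EVENT='1' option makes SAS model the probability that outcome = 1; by default, PROC LOGISTIC models the lower-ordered response value. Numerous other options can be added to specify the selection method, link function, and other details of the analysis.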

Both SPSS and SAS will provide output that includes things like the estimated coefficients (used to calculate the probability), odds ratios (indicating how much the odds of the outcome change for a one-unit change in a predictor), and model fit statistics (like likelihood ratio chi-square and Hosmer-Lemeshow goodness-of-fit test).
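To see how a coefficient becomes a probability and an odds ratio, here is a Python sketch using hypothetical coefficients (b0 = -3.0, b1 = 0.8). These are made up for illustration and are not output from any real model. Each one-unit increase in the predictor multiplies the odds by exp(b1), whatever the starting value.

```python
import math

def predicted_probability(b0, b1, x):
    """Logistic model: P(outcome = 1) = 1 / (1 + exp(-(b0 + b1*x)))."""
    return 1 / (1 + math.exp(-(b0 + b1 * x)))

def odds(p):
    return p / (1 - p)

b0, b1 = -3.0, 0.8          # hypothetical fitted coefficients
odds_ratio = math.exp(b1)   # multiplicative change in odds per one-unit increase in x

for x in (2, 3, 4):
    p = predicted_probability(b0, b1, x)
    print(f"x={x}: p={p:.3f}, odds={odds(p):.3f}")
print(f"odds ratio = {odds_ratio:.3f}")
```

The probability changes by different amounts at different x values, but the odds ratio stays constant. That is why software reports odds ratios rather than changes in probability.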

Q 17. What are the different methods for data visualization in SPSS/SAS?

Both SPSS and SAS offer a wide array of data visualization tools. The specific methods are similar in concept but differ in execution.

Common methods include:

- Histograms: Show the distribution of a single continuous variable.

- Bar charts: Display the frequencies or proportions of categorical variables.

- Scatter plots: Illustrate the relationship between two continuous variables.

- Box plots: Summarize the distribution of a continuous variable, showing median, quartiles, and outliers.

- Line graphs: Track changes in a variable over time or another continuous variable.

- Pie charts: Show the proportion of different categories within a whole.

SPSS offers these through its menus (Graphs > Chart Builder or Legacy Dialogs). SAS uses procedures like PROC SGPLOT (for more modern, versatile graphics) and PROC GCHART (for more traditional chart types). Both offer extensive customization options for aesthetics and labels. For example, in SAS using PROC SGPLOT you can create a variety of plots, adding details like titles and axis labels in the code for greater control.

Q 18. How do you interpret a confusion matrix?

A confusion matrix is a table used to evaluate the performance of a classification model (like logistic regression). It summarizes the counts of true positive (TP), true negative (TN), false positive (FP), and false negative (FN) predictions.

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | TP | FN |
| Actual Negative | FP | TN |

TP: Correctly predicted positive cases.

TN: Correctly predicted negative cases.

FP: Incorrectly predicted positive cases (Type I error).

FN: Incorrectly predicted negative cases (Type II error).

From the confusion matrix, you can calculate several important metrics:

- Accuracy: (TP + TN) / Total = Overall correctness of the model.

- Precision: TP / (TP + FP) = Proportion of true positives among all predicted positives.

- Recall (Sensitivity): TP / (TP + FN) = Proportion of actual positives that the model correctly identifies.

- Specificity: TN / (TN + FP) = Proportion of actual negatives that the model correctly identifies.

- F1-score: 2 * (Precision * Recall) / (Precision + Recall) = Harmonic mean of precision and recall; useful when classes are imbalanced.

The interpretation depends on the context. A high accuracy might be desirable generally, but in some applications (e.g., medical diagnosis), high sensitivity (avoiding false negatives) might be prioritized over high specificity.
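The formulas above translate directly into code. This Python sketch computes them from hypothetical counts for an imbalanced problem (50 actual positives out of 1,000 cases). In that setting, accuracy looks excellent while precision is much weaker.

```python
def classification_metrics(tp, fn, fp, tn):
    """Standard metrics from the four cells of a binary confusion matrix."""
    total = tp + fn + fp + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }

m = classification_metrics(tp=40, fn=10, fp=20, tn=930)   # hypothetical counts
for name, value in m.items():
    print(f"{name:12s}{value:.3f}")
```

Accuracy is 0.97, yet one in three positive predictions is wrong (precision ≈ 0.67).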

Q 19. Explain the concept of ROC curve and AUC.

The Receiver Operating Characteristic (ROC) curve is a graphical representation of the performance of a binary classification model at various classification thresholds. It plots the true positive rate (sensitivity) against the false positive rate (1 – specificity) as the threshold varies.

The Area Under the Curve (AUC) is a single metric summarizing the ROC curve. It represents the probability that the model will rank a randomly chosen positive instance higher than a randomly chosen negative instance. AUC ranges from 0 to 1, with 1 representing a perfect classifier and 0.5 representing a classifier no better than random guessing.

Interpretation:

- AUC close to 1: Excellent performance; the model effectively distinguishes between positive and negative instances.

- AUC around 0.7-0.8: Good performance.

- AUC around 0.5: Poor performance; the model is essentially guessing randomly.

Because the ROC curve and AUC summarize performance across all thresholds and do not depend on class prevalence, they are more informative than accuracy alone for imbalanced datasets (where one class is much more prevalent than the other). With severe imbalance, however, a precision-recall curve often gives a clearer picture of performance on the rare class.
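The ranking interpretation of AUC can be computed directly by comparing every positive case’s score with every negative case’s score, counting ties as half. This Python sketch uses hypothetical model scores. It is equivalent to the Mann-Whitney U statistic divided by the number of pairs, not the trapezoidal curve integration that software typically reports, although both give the same value for an empirical ROC curve.

```python
def auc_by_ranking(scores_pos, scores_neg):
    """Probability that a random positive outscores a random negative (ties count 0.5)."""
    wins = 0.0
    for sp in scores_pos:
        for sn in scores_neg:
            if sp > sn:
                wins += 1
            elif sp == sn:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))

positives = [0.9, 0.8, 0.4]   # hypothetical predicted probabilities, actual positives
negatives = [0.7, 0.3, 0.2]   # hypothetical predicted probabilities, actual negatives
print(auc_by_ranking(positives, negatives))   # 8 of 9 pairs ranked correctly
```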

Q 20. How do you perform data transformation in SPSS/SAS?

Data transformation involves modifying the values of variables to improve the model’s assumptions or performance. Common transformations include:

- Log transformation: Applying a logarithm (base 10 or natural log) to reduce right skewness and stabilize variance. It is useful for variables with right-skewed distributions and requires strictly positive values.

- Square root transformation: Similar to log transformation, but less aggressive. Can be useful for count data.

- Reciprocal transformation: Taking the inverse of a variable. Useful for variables with heavily skewed distributions.

- Standardization (Z-score): Transforming data to have a mean of 0 and a standard deviation of 1. It is useful for putting variables measured on different scales onto a common scale, but it does not change the shape of the distribution.

In SPSS: Transformations can be performed with Transform > Compute Variable, which offers functions such as LN, LG10, and SQRT. Analyze > Descriptive Statistics > Descriptives can save standardized (Z-score) values as new variables.

In SAS: Transformations are typically done in a DATA step using functions such as LOG(), LOG10(), and SQRT(). For instance:

```sas
data transformed_data;
  set mydata;
  log_var = log10(original_var);
run;
```

This creates a new variable ‘log_var’ containing the base-10 logarithm of ‘original_var’.

The choice of transformation depends on the specific data and the goals of the analysis. It’s important to carefully consider the impact of transformations on the interpretation of the results.
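The sketch below, in Python, shows a log transformation removing right skew and then standardization rescaling the result without changing its shape. The data are hypothetical doubling values. Skewness is computed with the simple moment formula, which differs slightly from the bias-adjusted skewness SPSS and SAS report.

```python
import math
from statistics import mean, pstdev, stdev

def skewness(data):
    """Moment-based skewness (no small-sample bias correction)."""
    m, s = mean(data), pstdev(data)
    return sum(((x - m) / s) ** 3 for x in data) / len(data)

def log10_transform(data):
    """Base-10 log; only defined for strictly positive values."""
    if any(x <= 0 for x in data):
        raise ValueError("log transform needs strictly positive values")
    return [math.log10(x) for x in data]

def standardize(data):
    """Z-scores using the sample standard deviation."""
    m, s = mean(data), stdev(data)
    return [(x - m) / s for x in data]

raw = [1, 2, 4, 8, 16, 32, 64, 128]   # hypothetical right-skewed values
logged = log10_transform(raw)
z = standardize(logged)
print(f"skew raw={skewness(raw):.3f}, skew logged={skewness(logged):.3f}")
```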

Q 21. Explain the concept of multicollinearity.

Multicollinearity refers to a high correlation between two or more predictor variables in a regression model. When predictors are highly correlated, it becomes difficult to isolate the individual effects of each variable on the outcome. This can lead to unstable coefficient estimates (meaning small changes in the data can lead to large changes in the coefficients), inflated standard errors (making it harder to find statistically significant results), and difficulty in interpreting the model.

Consequences:

- Unreliable coefficient estimates

- Inflated standard errors

- Difficulty in interpreting the model

- Reduced model stability

Detecting multicollinearity:

- Correlation matrix: Examine the correlation coefficients between predictor variables. High correlations (typically above 0.8 or 0.9) suggest multicollinearity.

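- Variance Inflation Factor (VIF): VIF measures how much the variance of a coefficient estimate is inflated by multicollinearity. For predictor j, VIF = 1 / (1 − R²ⱼ), where R²ⱼ comes from regressing that predictor on all the others. A VIF above 5 or 10 often indicates a problem. SPSS reports VIFs under Collinearity diagnostics in Linear Regression, and SAS reports them with the VIF option in PROC REG.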

Addressing multicollinearity:

- Remove one or more of the correlated variables: This is the simplest approach, but it might lead to information loss.

- Combine correlated variables: Create a new variable that captures the common information.

- Principal Component Analysis (PCA): PCA reduces the dimensionality of the data by creating new uncorrelated variables (principal components) that capture most of the variance.

- Ridge regression or Lasso regression: These techniques can handle multicollinearity by shrinking the coefficients towards zero.

The best approach depends on the specific situation and the nature of the correlated variables.
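With exactly two predictors, R² from regressing one on the other is simply their squared correlation, so VIF = 1 / (1 − r²). The Python sketch below uses that special case with hypothetical data. With three or more predictors, you would need the full auxiliary regression instead.

```python
import math

def pearson_r(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def vif_two_predictors(x1, x2):
    """VIF for either predictor in a two-predictor model: 1 / (1 - r^2)."""
    r = pearson_r(x1, x2)
    return 1 / (1 - r ** 2)

# Hypothetical predictors
print(vif_two_predictors([1, -1, 1, -1], [1, 1, -1, -1]))               # uncorrelated -> 1.0
print(vif_two_predictors([1, 2, 3, 4, 5], [1.1, 1.9, 3.2, 3.9, 5.1]))   # nearly collinear
```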

Q 22. How do you build a decision tree in SPSS/SAS?

Building a decision tree in SPSS or SAS means using their tree-growing procedures. Think of it as creating a flowchart that predicts an outcome through a series of questions about the predictors. In SPSS, Analyze > Classify > Tree offers several growing methods: CHAID (Chi-squared Automatic Interaction Detection), Exhaustive CHAID, CRT (Classification and Regression Trees), and QUEST (Quick, Unbiased, Efficient Statistical Tree). SAS provides PROC HPSPLIT, which offers extensive control over the tree-building process.

SPSS Example (Conceptual): Let’s say you want to predict customer churn. You might start with a question like ‘Is the customer’s monthly bill above $50?’ If yes, you might follow with ‘Have they contacted customer support in the last month?’. Each branch represents a different subset of customers, and you continue building branches until you reach a point where further splitting doesn’t improve the prediction accuracy.

SAS Example (Conceptual): PROC HPSPLIT gives you control over parameters such as the minimum number of observations per node, the maximum depth of the tree, and the pruning method. This is particularly useful with complex datasets when you want to avoid overfitting, where the model is too tailored to the training data and doesn’t generalize well. You specify the dependent variable and independent variables in the procedure. The output shows the tree structure, the rules at each node, and measures of prediction accuracy. At each step, the algorithm chooses the split that makes the resulting subgroups as homogeneous as possible in the outcome.
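A single split search in the spirit of CART can be sketched in Python. The sketch tries every midpoint between adjacent values of one numeric predictor and keeps the threshold with the lowest weighted Gini impurity. The churn data are hypothetical, and SPSS’s and SAS’s actual algorithms add more criteria, stopping rules, and pruning.

```python
def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return 1 - sum((c / n) ** 2 for c in counts.values())

def best_threshold(x, y):
    """Return (threshold, weighted Gini) of the best binary split 'x <= threshold'."""
    best = (None, float("inf"))
    values = sorted(set(x))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [yi for xi, yi in zip(x, y) if xi <= t]
        right = [yi for xi, yi in zip(x, y) if xi > t]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
        if weighted < best[1]:
            best = (t, weighted)
    return best

monthly_bill = [20, 35, 45, 55, 70, 90]   # hypothetical customers
churned = [0, 0, 0, 1, 1, 1]
print(best_threshold(monthly_bill, churned))   # (50.0, 0.0)
```

A full tree repeats this search on each resulting subgroup, across all predictors, until a stopping rule is met.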

Q 23. What are the strengths and weaknesses of different statistical tests?

The choice of statistical test depends heavily on the type of data you have (e.g., continuous, categorical) and the research question you’re trying to answer. Each test has its own assumptions and limitations. Let’s compare a few common tests:

- t-test: Compares the means of two groups. It is fairly robust to moderate non-normality in larger samples. The standard (pooled) version assumes equal variances, while Welch’s version does not. Severe violations in small samples may call for a non-parametric alternative like the Mann-Whitney U test.

- ANOVA (Analysis of Variance): Compares the means of three or more groups. Like the t-test, it relies on normality and equal-variance assumptions. Severe violations may call for Welch’s ANOVA or a non-parametric test like the Kruskal-Wallis test.

- Chi-square test: Examines the relationship between two categorical variables. It doesn’t assume normality, but does assume sufficient sample size in each cell.

- Correlation: Pearson’s correlation measures the strength of the linear relationship between two continuous variables. Correlation doesn’t imply causation; it only indicates an association. Spearman’s rank correlation is a non-parametric alternative that captures monotonic relationships and is less sensitive to non-normal data and outliers.
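The chi-square statistic, and the expected cell counts that its sample-size assumption is really about, can be sketched in a few lines of Python. This is an illustrative sketch only: `chi_square_independence`, `p_value_1df`, and the 2x2 table are hypothetical; the p-value helper covers only the 1-degree-of-freedom case (where the upper-tail probability equals `erfc(sqrt(x / 2))`); and no continuity correction is applied, so results may differ from the corrected values that SPSS and SAS also report for 2x2 tables.

```python
import math

def chi_square_independence(table):
    """Pearson chi-square statistic for an r x c table of observed counts.

    Returns (statistic, degrees of freedom, expected counts) so the
    expected-count rule of thumb can be checked alongside the test.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    # Expected count under independence: row total * column total / grand total.
    expected = [[r * c / grand_total for c in col_totals] for r in row_totals]
    statistic = sum(
        (obs - exp) ** 2 / exp
        for obs_row, exp_row in zip(table, expected)
        for obs, exp in zip(obs_row, exp_row)
    )
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return statistic, dof, expected

def p_value_1df(statistic):
    """Upper-tail p-value for a chi-square statistic with exactly 1 degree of freedom."""
    return math.erfc(math.sqrt(statistic / 2))

# Hypothetical counts: rows = plan type, columns = churned yes / no.
observed = [[10, 20], [20, 10]]
stat, dof, expected = chi_square_independence(observed)
print(round(stat, 3), dof, p_value_1df(stat))
print("all expected counts >= 5:", all(e >= 5 for row in expected for e in row))
```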

Strengths and Weaknesses Summary: Parametric tests (like t-tests and ANOVA) have more statistical power when their assumptions are met. Non-parametric tests make fewer distributional assumptions and cope better with skewed data and outliers, although they are often less powerful when the parametric assumptions actually hold.

Real-world Example: In a clinical trial comparing a new drug to a placebo, a t-test could assess if there’s a significant difference in blood pressure between the two groups. If the blood pressure data is not normally distributed, the Mann-Whitney U test would be a more suitable choice.
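For the clinical-trial example, here is a minimal Python sketch (not SPSS or SAS output) of the two test statistics involved. It swaps in Welch’s version of the t statistic, which does not assume equal variances, alongside the Mann-Whitney U statistic, which compares the two groups pairwise rather than through their means. The function names and blood pressure readings are hypothetical, and p-values are left to the statistical software, which also handles tie corrections and exact small-sample distributions.

```python
import math
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    se2_a = variance(a) / len(a)  # squared standard error of mean(a)
    se2_b = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df

def mann_whitney_u(a, b):
    """U statistic for sample a: pairs where a's value exceeds b's, ties counting one half."""
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)

# Hypothetical systolic blood pressure readings (mmHg).
drug = [130, 128, 135, 140, 132]
placebo = [142, 138, 150, 145, 160]
print(welch_t(drug, placebo))
print(mann_whitney_u(drug, placebo), mann_whitney_u(placebo, drug))
```

The two U values always add up to the product of the group sizes (here 5 x 5 = 25), which is a handy sanity check.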

Q 24. How do you perform cluster analysis in SPSS/SAS?

Cluster analysis groups similar data points together. Think of sorting a pile of LEGOs into groups based on color, size, or shape. In SPSS, you typically use ‘Analyze > Classify > K-Means Cluster’ (Hierarchical Cluster and TwoStep Cluster are also available under Classify). SAS offers PROC FASTCLUS (k-means-style clustering suited to large datasets) and PROC CLUSTER (hierarchical clustering; more flexible, but slower on large datasets).

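SPSS K-Means Clustering: This iterative algorithm assigns data points to clusters so as to minimize the within-cluster variance. You specify the number of clusters (k) beforehand. The algorithm repeatedly assigns each point to the nearest cluster center and recomputes the centers until the assignments stop changing. Because the result depends on the initial centers and can settle on a local optimum, it is worth checking that the solution is stable. SPSS K-Means uses Euclidean distance, so standardize variables measured on very different scales first; if you need other distance measures, Hierarchical Cluster offers them.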
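The assign-then-recompute loop described above is essentially Lloyd’s k-means algorithm. Below is a minimal, illustrative Python sketch. It picks random initial centers (an assumption; SPSS and SAS use their own seed-selection rules), measures Euclidean distance with `math.dist` (Python 3.8+), and stops when no centroid moves. The function name `kmeans` and the sample points are hypothetical.

```python
import math
import random

def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's k-means on a list of equal-length tuples.

    Returns (centroids, clusters). If max_iter is reached before convergence,
    the clusters reflect the centroids from the previous iteration.
    """
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # random initial centers
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster with the nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: each centroid moves to the mean of its points
        # (an empty cluster keeps its old centroid).
        new_centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged: no centroid moved
            break
        centroids = new_centroids
    return centroids, clusters

# Hypothetical 2-D data with two obvious groups.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, clusters = kmeans(points, k=2)
print(centroids)
print(clusters)
```

The returned centroids are the per-cluster averages that you would interpret to profile each cluster.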

SAS PROC FASTCLUS: Designed for speed and efficiency with large datasets, it is a good choice when hierarchical clustering would be too slow. It provides a quick way to obtain an initial clustering solution, which can also serve as preliminary clusters for further analysis.

Interpreting Results: After running the analysis, you’ll get cluster centroids (the average values of variables for each cluster) and cluster assignments for each data point. You interpret these centroids to understand the characteristics of each cluster.

Real-world Example: Market research often uses cluster analysis to segment customers based on purchasing behavior and demographics, allowing for targeted marketing campaigns.

Q 25. Explain the concept of factor analysis.

Factor analysis is a statistical method used to reduce the dimensionality of a dataset by identifying underlying latent variables (factors) that explain the correlations among a set of observed variables. Imagine you have many questions on a survey; factor analysis can help you group these questions into fewer, more meaningful underlying themes or constructs.

Example: A survey measuring customer satisfaction might have questions about product quality, customer service, and price. Factor analysis might reveal two underlying factors: ‘Product Experience’ (combining questions on product quality and customer service) and ‘Value Perception’ (combining questions on price and value for money). These factors are unobserved variables but are inferred from the patterns in the responses to the observed questions.

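Process: Factor analysis typically starts from the correlation matrix of the observed variables, extracts factors (the eigenvalues of that matrix, examined with a scree plot or the ‘eigenvalue greater than 1’ rule, help decide how many to keep), and then rotates the factors to improve their interpretability.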
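To see how eigenvalues guide the number of factors, here is a minimal Python sketch that computes the eigenvalues of a small correlation matrix with the classical Jacobi rotation method and then applies the ‘eigenvalue greater than 1’ (Kaiser) rule of thumb. The function names and the 4-variable correlation matrix are hypothetical; SPSS and SAS perform this step, and the actual extraction and rotation, internally, and the Kaiser rule is only one heuristic alongside the scree plot.

```python
import math

def jacobi_eigenvalues(matrix, tol=1e-12, max_sweeps=100):
    """Eigenvalues of a symmetric matrix via cyclic Jacobi rotations, largest first."""
    a = [row[:] for row in matrix]  # work on a copy
    n = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:  # matrix is (numerically) diagonal
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero.
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta ** 2 + 1))
                c = 1 / math.sqrt(t ** 2 + 1)
                s = t * c
                for k in range(n):  # A <- A P (columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p] = c * akp - s * akq
                    a[k][q] = s * akp + c * akq
                for k in range(n):  # A <- P^T A (rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k] = c * apk - s * aqk
                    a[q][k] = s * apk + c * aqk
    return sorted((a[i][i] for i in range(n)), reverse=True)

def kaiser_factor_count(corr):
    """Number of eigenvalues above 1 (Kaiser's rule of thumb)."""
    return sum(1 for ev in jacobi_eigenvalues(corr) if ev > 1.0)

# Hypothetical correlations: items 1-2 move together, as do items 3-4.
R = [
    [1.0, 0.7, 0.0, 0.0],
    [0.7, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.5],
    [0.0, 0.0, 0.5, 1.0],
]
print(jacobi_eigenvalues(R))
print(kaiser_factor_count(R))
```

Two eigenvalues exceed 1 here, matching the two blocks of correlated items, which is the pattern behind the two-factor survey example above.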

SPSS and SAS: In SPSS, use ‘Analyze > Dimension Reduction > Factor’; in SAS, use PROC FACTOR. You’ll need to specify the variables, the extraction method (e.g., principal components or principal axis factoring), and the rotation method (e.g., varimax). The results typically include factor loadings (which show the relationship between variables and factors) and, on request, factor scores (each observation’s estimated value on the extracted factors).

Q 26. How do you handle large datasets in SPSS/SAS?

Handling large datasets in SPSS and SAS requires strategies to optimize processing speed and memory usage. Techniques include:

- Data sampling: Analyze a representative subset of the data to get quick preliminary results. This is particularly useful for exploratory analysis.

- Data partitioning: Divide the data into smaller chunks and process them separately. The results can then be combined.

- In-database processing: For very large datasets, it’s often more efficient to perform the analysis directly within the database management system (DBMS) rather than loading the entire dataset into SPSS or SAS.

- SAS’s PROC SQL and SPSS’s database import: Use SQL to filter, aggregate, and subset data before running the analysis (for example, with PROC SQL in SAS, or an SQL query in SPSS’s GET DATA /TYPE=ODBC command). This can reduce processing time.

- Using high-performance computing (HPC): For extremely large datasets, parallel processing on clusters or cloud computing resources can be employed.

- Memory management: Keep only the variables and rows you need (e.g., SAS’s KEEP=, DROP=, and WHERE= data set options), use compact data types, and close datasets you no longer need.
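The ‘process in chunks, then combine’ idea works for many summary statistics. Below is a minimal Python sketch, assuming each chunk fits in memory: it summarizes each chunk as (count, mean, sum of squared deviations) and merges the summaries with the standard pairwise formula used in parallel variance algorithms, so the overall mean and variance match a single pass over all the data. The function names and data are hypothetical.

```python
from functools import reduce
from statistics import variance

def summarize(chunk):
    """Per-chunk summary: (count, mean, sum of squared deviations from the mean)."""
    n = len(chunk)
    m = sum(chunk) / n
    ss = sum((x - m) ** 2 for x in chunk)
    return n, m, ss

def combine(s1, s2):
    """Merge two chunk summaries without revisiting the raw data."""
    n1, m1, ss1 = s1
    n2, m2, ss2 = s2
    n = n1 + n2
    delta = m2 - m1
    combined_mean = m1 + delta * n2 / n
    combined_ss = ss1 + ss2 + delta ** 2 * n1 * n2 / n
    return n, combined_mean, combined_ss

# Hypothetical data split into 10 chunks of 10 records each.
data = list(range(1, 101))
chunks = [data[i:i + 10] for i in range(0, len(data), 10)]
n, m, ss = reduce(combine, map(summarize, chunks))
print(n, m, ss / (n - 1))  # count, mean, sample variance from the merged summaries
print(variance(data))      # single-pass check on the full data
```

Because each chunk is reduced to three numbers, the chunks could equally be processed on different machines or read one at a time from disk.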

Example: With a dataset of 100 million records, running every analysis on the full dataset in SPSS can be slow and memory-intensive. Instead, you might draw a 1% sample for initial exploratory analysis and, in SAS, use PROC SQL to subset the data so that the final analysis runs on smaller, relevant chunks.
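Drawing a roughly 1% sample in a single pass can be sketched as Bernoulli sampling: keep each record independently with probability 0.01. In practice you would use the software’s own tools (for example, SPSS’s SAMPLE command or SAS’s PROC SURVEYSELECT); this Python generator is a hypothetical illustration that streams records, so only the selected records are kept in memory, and a fixed seed makes the sample reproducible.

```python
import random

def bernoulli_sample(records, rate=0.01, seed=42):
    """Yield each record independently with probability `rate`.

    Works on any iterable (e.g., rows streamed from a file or database cursor),
    so the full dataset never has to be loaded at once.
    """
    rng = random.Random(seed)  # fixed seed -> reproducible sample
    for record in records:
        if rng.random() < rate:
            yield record

sample = list(bernoulli_sample(range(100_000)))
print(len(sample))  # close to 1,000, but not exact: the sample size is itself random
```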

Q 27. What are some best practices for data cleaning in SPSS/SAS?

Data cleaning is crucial before any analysis. It ensures accuracy and reliability of results. Best practices include:

- Handling missing data: Use appropriate techniques such as imputation (replacing missing values with estimated values) or analysis methods that handle missing data robustly. Simply removing rows with missing data reduces the sample size and can introduce bias unless the data are missing completely at random.

- Identifying and correcting outliers: Outliers can disproportionately influence results. Investigate their cause; they may be errors or genuinely extreme values. Box plots, scatter plots, and z-scores help identify them. Correct genuine errors, and for real extreme values consider transformations or robust statistical methods.

- Checking data consistency and validity: Ensure that data is within expected ranges and conforms to defined data types. Identify and correct inconsistencies.

- Data transformation: Transforming variables (e.g., taking logarithms to reduce skew, or standardizing to put variables on a common scale) can help data meet model assumptions and make results easier to compare and interpret.

- Documentation: Keep a detailed record of all cleaning steps performed.

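Example: In a customer database, you might find age values of ‘999’ or negative values. Negative ages are clearly errors and should be investigated and corrected or set to missing; ‘999’ is often a placeholder code for missing data and should be declared as such (for example, with SPSS’s MISSING VALUES command) rather than analyzed as a real age. Validation rules at data entry can prevent these errors from entering the database in the first place.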
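Range checks and the box-plot (1.5 x IQR) rule can be sketched in Python as follows. The valid age range of 0 to 120, the missing-value code 999, and the function names are hypothetical choices for illustration; in SPSS or SAS you would express the same rules in their own syntax.

```python
from statistics import quantiles

def validate_ages(ages, valid_min=0, valid_max=120, missing_codes=(999,)):
    """Replace missing-value codes and impossible ages with None (i.e., missing)."""
    cleaned = []
    for age in ages:
        if age is None or age in missing_codes or not (valid_min <= age <= valid_max):
            cleaned.append(None)
        else:
            cleaned.append(age)
    return cleaned

def iqr_outliers(values, k=1.5):
    """Values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR], the box-plot rule."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

raw_ages = [25, 999, -3, 40]
print(validate_ages(raw_ages))

# Hypothetical valid ages with one suspicious value to investigate.
ages = [30, 32, 35, 36, 38, 40, 41, 95]
print(iqr_outliers(ages))
```

A flagged value such as 95 is a prompt to investigate, not an automatic deletion: it may be a genuine customer.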

Q 28. Describe your experience using macros or scripting in SPSS/SAS.

I have extensive experience using macros in SAS and syntax in SPSS to automate repetitive tasks and create custom functions. Macros/syntax allow me to streamline workflows, improve code efficiency, and enhance reproducibility.

SAS Macros Example: I’ve used SAS macros to create reusable modules for data cleaning, statistical analysis, and report generation. This means that instead of writing the same code repeatedly for different datasets, I can create a macro that takes the dataset name as an input and performs the necessary operations. This saves time and reduces the risk of errors. For instance, a macro could automatically check for missing values, impute them using a specific method, and generate summary statistics for each variable.
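The reusable module described above can be sketched in Python as an analogy (this is not SAS macro code): one parameterized function that counts missing values, imputes them, and summarizes each variable, so it can be rerun on any dataset with the same layout. The dictionary-of-lists layout, the function name, and the `impute` options are hypothetical. Simple mean or median imputation understates variability, so it appears here only for illustration.

```python
from statistics import mean, median

def clean_and_summarize(dataset, impute="mean"):
    """Impute missing values (None) per variable and return (cleaned data, summary).

    `dataset` maps each variable name to a list of numbers;
    `impute` is "mean" or "median".
    """
    fill_with = {"mean": mean, "median": median}[impute]
    cleaned, summary = {}, {}
    for name, values in dataset.items():
        observed = [v for v in values if v is not None]
        fill_value = fill_with(observed) if observed else None
        cleaned[name] = [fill_value if v is None else v for v in values]
        summary[name] = {
            "n_observed": len(observed),
            "n_missing": len(values) - len(observed),
            "mean": mean(observed) if observed else None,
        }
    return cleaned, summary

# The same call works for any dataset with this layout.
survey = {"age": [30, None, 50], "income": [None, 100, 300]}
cleaned, summary = clean_and_summarize(survey)
print(cleaned)
print(summary)
```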

SPSS Syntax Example: In SPSS, I frequently use syntax for tasks like creating custom variables, running complex statistical models, and generating customized output. This gives detailed control over the analysis process and supports reproducibility by documenting every step taken. For example, I can combine syntax with the SPSS macro facility (DEFINE-!ENDDEFINE) or Python integration to loop through a series of datasets, run the same analysis on each one, and save the results in separate files.

Benefits: Macros and syntax significantly improve efficiency and reliability, reducing manual effort and the risk of human error in data processing and analysis. They are especially valuable in complex projects involving large datasets and multiple analyses. Using version control software (like Git) further enhances reproducibility by tracking the code and its changes over time.

Key Topics to Learn for Statistical Software Proficiency (e.g., SPSS, SAS) Interview

Landing your dream job requires demonstrating a solid grasp of statistical software. Focus your preparation on these key areas:

- Data Import and Cleaning: Master techniques for importing data from various sources (CSV, Excel, databases), handling missing values, and identifying outliers. Practical application: Demonstrate your ability to clean and prepare real-world datasets for analysis.

- Descriptive Statistics: Calculate and interpret measures of central tendency, dispersion, and distribution. Understand how to effectively visualize these using histograms, box plots, and scatter plots. Practical application: Explain the insights you can gain from descriptive statistics and how to choose appropriate visualizations.

- Inferential Statistics: Grasp the concepts behind hypothesis testing, t-tests, ANOVA, and regression analysis. Understand the assumptions of these tests and their limitations. Practical application: Design and interpret statistical tests to draw meaningful conclusions from data.

- Data Manipulation and Transformation: Become proficient in data manipulation techniques, including filtering, sorting, merging, and recoding variables. Practical application: Demonstrate your ability to restructure data to suit specific analytical needs.

- Statistical Modeling: Develop a strong understanding of different statistical models (linear regression, logistic regression, etc.) and their applications. Practical application: Explain how to select and interpret the results of a suitable statistical model for a given problem.

- Output Interpretation and Reporting: Learn to effectively communicate your findings through clear and concise reports, focusing on interpreting statistical results in the context of the research question. Practical application: Present your analysis in a way that is easily understandable to a non-technical audience.

- Advanced Techniques (depending on the role): Explore topics such as factor analysis, cluster analysis, time series analysis, or other advanced techniques relevant to the specific job description.

Next Steps

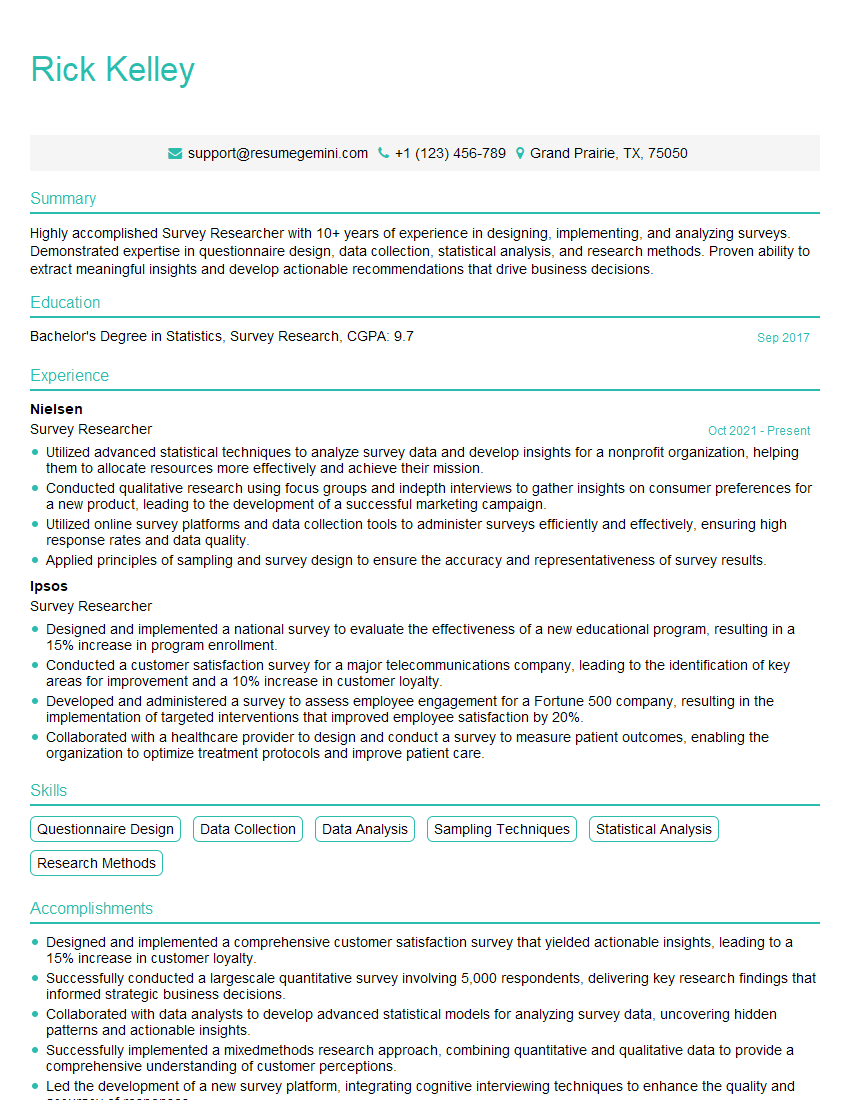

Mastering statistical software like SPSS and SAS is crucial for career advancement in data analysis, research, and many other fields. It opens doors to exciting opportunities and higher earning potential. To maximize your job prospects, create a compelling, ATS-friendly resume that showcases your skills effectively. ResumeGemini is a trusted resource that can help you build a professional resume tailored to your experience and target roles. We provide examples of resumes specifically designed for candidates with Statistical Software Proficiency (e.g., SPSS, SAS) to help you get started. Let’s make your next career move a success!
