Are you ready to stand out in your next interview? Understanding and preparing for Assessment Strategy Development interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Assessment Strategy Development Interview

Q 1. Explain the difference between formative and summative assessment.

Formative and summative assessments are two crucial types of evaluation used throughout the learning process. They differ primarily in their purpose and timing.

Formative assessment is ongoing and focuses on monitoring student learning during the learning process. Think of it as a ‘check-in’ to see how students are progressing and to identify areas needing improvement. It’s designed to inform instruction and guide learning, not to assign a final grade. Examples include quizzes, class discussions, exit tickets, and informal observations. The goal isn’t to evaluate a student’s final mastery, but to improve their understanding before the summative assessment.

Summative assessment, on the other hand, measures student learning at the end of an instructional unit, course, or program. It summarizes what a student has learned and is typically used to assign a grade or evaluate program effectiveness. Final exams, standardized tests, and major projects are all examples of summative assessments. These assessments are designed to gauge a student’s overall understanding and proficiency.

Analogy: Imagine building a house. Formative assessments are like checking the foundation and walls during construction – identifying and fixing any problems along the way. Summative assessment is the final inspection, determining if the house meets the building code and is ready for occupancy.

Q 2. Describe your experience with developing assessment blueprints.

Developing assessment blueprints is a cornerstone of my approach to assessment strategy. I’ve extensively used them in various contexts, from designing curriculum-aligned tests for K-12 students to creating competency-based evaluations for professional development programs. The process typically involves:

- Identifying learning objectives: Clearly defining what students should know and be able to do at the end of the learning experience. This involves identifying the specific knowledge, skills, and attitudes that are targeted.

- Determining assessment methods: Selecting appropriate methods based on the learning objectives (e.g., multiple-choice questions for factual recall, essays for critical thinking, practical demonstrations for skill assessment).

- Creating a table of specifications: This crucial step maps the learning objectives to the assessment items. It ensures appropriate coverage of the content and different cognitive levels (e.g., knowledge, comprehension, application, analysis, synthesis, evaluation). This table acts as a blueprint, guaranteeing alignment between what is taught and what is assessed.

- Developing assessment items: Crafting high-quality, clear, and unambiguous questions or tasks aligned with the learning objectives and the table of specifications. This stage involves careful consideration of item difficulty, distractor effectiveness (for multiple-choice), and rubric development (for performance-based tasks).

- Pilot testing and revision: Before final implementation, I always recommend pilot testing the assessment with a small group to identify any issues with clarity, difficulty, or fairness. This step allows for necessary revisions before widespread use.

For example, in a recent project designing an assessment for a medical training program, the blueprint ensured that both theoretical knowledge and practical skills were equally weighted and thoroughly assessed through a combination of written exams, simulations, and practical demonstrations.
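As a minimal sketch of how a table of specifications can be checked against drafted items, the snippet below counts items per blueprint cell and reports shortfalls. The objectives, cognitive levels, target counts, and the `blueprint_gaps` helper are all hypothetical and chosen only for illustration.

```python
from collections import Counter

# Hypothetical blueprint: target item counts per (objective, cognitive level).
BLUEPRINT = {
    ("Interpret lab results", "application"): 4,
    ("Interpret lab results", "analysis"): 2,
    ("Recall drug interactions", "knowledge"): 6,
}

# Hypothetical drafted items, each tagged with its objective and level.
ITEMS = (
    [{"objective": "Interpret lab results", "level": "application"}] * 4
    + [{"objective": "Interpret lab results", "level": "analysis"}] * 1
    + [{"objective": "Recall drug interactions", "level": "knowledge"}] * 6
)


def blueprint_gaps(blueprint, items):
    """Return {cell: shortfall} for blueprint cells with too few drafted items."""
    drafted = Counter((item["objective"], item["level"]) for item in items)
    return {
        cell: target - drafted[cell]
        for cell, target in blueprint.items()
        if drafted[cell] < target
    }


if __name__ == "__main__":
    for (objective, level), missing in blueprint_gaps(BLUEPRINT, ITEMS).items():
        print(f"{objective} / {level}: {missing} more item(s) needed")
```

Running a check like this before pilot testing makes coverage gaps visible while they are still cheap to fix.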

Q 3. What are the key considerations when selecting an assessment method?

Selecting an assessment method requires careful consideration of several key factors. The most important factors are the learning objectives, the target audience, the resources available, and the overall assessment goals.

- Alignment with learning objectives: The chosen method must accurately measure the intended learning outcomes. For example, an essay exam is better suited for assessing critical thinking than a multiple-choice test.

- Target audience: The assessment must be accessible and appropriate for the students’ abilities, background, and needs. Considerations for diverse learners and accessibility needs are crucial.

- Resources and constraints: Practical considerations include the time available for administration, scoring, and feedback; budgetary limitations; and available technology.

- Assessment goals: The purpose of the assessment – formative or summative, high-stakes or low-stakes – will influence the method selected. A high-stakes exam demands greater rigor and reliability than a quick formative check-in.

- Validity and reliability: The chosen method should yield results that are valid (measuring what it intends to measure) and reliable (consistent across occasions, forms, and raters, with minimal measurement error).

For instance, assessing practical skills in a carpentry class requires hands-on projects or demonstrations, rather than a written test. Conversely, measuring knowledge of historical events might best be served by multiple-choice or essay questions.

Q 4. How do you ensure the fairness and validity of an assessment?

Ensuring fairness and validity in assessment is paramount. It involves a multifaceted approach:

- Clear instructions and rubrics: Provide students with clear instructions, and for performance-based assessments, use well-defined rubrics that specify the criteria for evaluation. This transparency minimizes bias and ensures consistent grading.

- Item analysis: Analyze assessment items for potential bias, ensuring they are free from cultural or gender stereotypes. Analyze item difficulty and discrimination indices to identify items that need improvement.

- Multiple assessment methods: Using a variety of assessment methods (e.g., tests, projects, presentations) provides a more comprehensive picture of student learning and mitigates the limitations of any single method.

- Standard setting: For high-stakes assessments, utilize standard setting methods (e.g., Angoff method, Bookmark method) to establish defensible cut scores and ensure that pass/fail or proficiency decisions are applied consistently.

- Rater training: If using subjective assessment methods (e.g., essay scoring, performance assessments), provide raters with comprehensive training to minimize subjectivity and increase inter-rater reliability.

- Accessibility considerations: Ensure the assessment is accessible to all students, including those with disabilities, by providing appropriate accommodations.

For example, in a language proficiency test, I would use multiple assessment methods such as oral interviews, writing samples, and listening comprehension tests, thereby reducing reliance on a single method and providing a holistic evaluation.
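The difficulty and discrimination indices mentioned under item analysis can be sketched as follows. This assumes dichotomously scored items (1 = correct, 0 = incorrect) and uses the classical upper-lower discrimination index with 27% groups; the response matrix and function names are hypothetical.

```python
# Hypothetical scored responses: one row per examinee, one column per item.
RESPONSES = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 1],
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 0],
]


def item_difficulty(responses, item):
    """Proportion of examinees answering the item correctly (the p-value)."""
    return sum(row[item] for row in responses) / len(responses)


def item_discrimination(responses, item, fraction=0.27):
    """Upper-lower index: p(correct) in the top group minus the bottom group."""
    ranked = sorted(responses, key=sum, reverse=True)  # rank by total score
    n = max(1, round(len(ranked) * fraction))
    upper, lower = ranked[:n], ranked[-n:]
    p_upper = sum(row[item] for row in upper) / n
    p_lower = sum(row[item] for row in lower) / n
    return p_upper - p_lower


if __name__ == "__main__":
    for i in range(3):
        p = item_difficulty(RESPONSES, i)
        d = item_discrimination(RESPONSES, i)
        print(f"item {i}: difficulty={p:.2f}, discrimination={d:.2f}")
```

Items with a discrimination index near zero or negative are the usual candidates for review. With real data the groups would be far larger than in this toy matrix, and tied total scores would need a deliberate tie-breaking rule.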

Q 5. What are some common threats to assessment validity and reliability?

Several factors can threaten assessment validity and reliability. It’s crucial to be aware of these potential issues to mitigate their impact:

- Test anxiety and bias: Students’ emotional states can affect performance. Bias in test design can unfairly advantage or disadvantage certain groups.

- Poorly written items: Ambiguous questions, confusing instructions, and flawed item design can lead to inaccurate results.

- Insufficient item sampling: A small number of assessment items might not accurately reflect a student’s overall knowledge or skills, and short tests tend to produce less reliable scores.

- Inadequate scoring rubrics: Unclear or inconsistent scoring rubrics can lead to subjective and unreliable grading.

- External factors: Environmental distractions (noise, temperature) or testing conditions can affect student performance.

- Inconsistent administration: Variations in the way the assessment is administered can lead to differences in performance, affecting reliability.

- Cheating: Academic dishonesty undermines the validity and reliability of any assessment.

For example, poorly written multiple-choice questions with ambiguous options or misleading distractors can negatively impact validity, while inconsistent scoring of essays can threaten reliability.
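One way to quantify inconsistent essay scoring is an inter-rater agreement statistic. The sketch below computes Cohen’s kappa for two raters assigning categorical rubric levels; it is an illustrative choice of statistic, and the ratings shown are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters on categorical scores."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of essays.")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each rater's marginal proportions.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical rubric levels given by two raters to the same four essays.
    print(cohens_kappa([2, 2, 1, 1], [2, 1, 1, 1]))
```

A low kappa after rater training suggests the rubric descriptors or the training itself need another pass.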

Q 6. Explain your understanding of different assessment scoring methods.

Assessment scoring methods vary greatly depending on the type of assessment. Common methods include:

- Norm-referenced scoring: Compares student performance to the performance of a larger group (norm group), resulting in scores like percentiles or standardized scores. This approach focuses on relative standing within a group.

- Criterion-referenced scoring: Compares student performance against a predetermined standard or criterion, indicating whether a student has mastered specific learning objectives. This method focuses on absolute performance.

- Percentage scoring: Simple method of calculating the proportion of correctly answered items to the total number of items. This approach is easy to understand but may not reflect the complexity of learning objectives.

- Rubric scoring: Uses a scoring rubric to evaluate student work based on pre-defined criteria. This is particularly useful for complex tasks and performance-based assessments.

- Holistic scoring: Provides a single overall score based on a global impression of the student’s work. This method can be faster but might be less detailed than analytic scoring.

- Analytic scoring: Assigns separate scores to different aspects of the student’s work, providing more detailed feedback.

The choice of scoring method depends on the assessment’s purpose and the type of information needed. For instance, a summative exam might utilize norm-referenced scoring to compare student performance across a cohort, whereas formative feedback might employ criterion-referenced scoring or rubric scoring to highlight areas for improvement.
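The contrast between percentage, norm-referenced, and criterion-referenced scoring can be sketched with three small functions. The norm group and cut score are hypothetical, and the percentile rank uses the common convention of counting tied scores as half.

```python
# Hypothetical norm-group scores.
NORM_SCORES = [40, 55, 60, 70, 70, 85, 90, 95]


def percentage_score(correct, total):
    """Percentage scoring: share of items answered correctly."""
    return 100.0 * correct / total


def percentile_rank(score, norm_scores):
    """Norm-referenced: percent of the norm group below the score (ties count half)."""
    below = sum(s < score for s in norm_scores)
    equal = sum(s == score for s in norm_scores)
    return 100.0 * (below + 0.5 * equal) / len(norm_scores)


def has_mastered(score, cut_score):
    """Criterion-referenced: compare against a fixed standard, not other students."""
    return score >= cut_score


if __name__ == "__main__":
    print(percentage_score(35, 50))
    print(percentile_rank(70, NORM_SCORES))
    print(has_mastered(70, cut_score=75))
```

The same score of 70 sits at the middle of this norm group yet falls short of a cut score of 75, which is why the two interpretations should not be mixed in one report without labeling.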

Q 7. How do you incorporate technology into assessment strategies?

Technology offers powerful tools to enhance assessment strategies in numerous ways:

- Computer-based testing: Online platforms can deliver assessments efficiently to large groups, provide immediate feedback, and automate scoring for objective items. Platforms like Moodle, Canvas, or Blackboard allow for creating and administering various assessment formats, saving time and resources.

- Adaptive testing: Computer-adaptive tests adjust the difficulty of questions based on a student’s responses, providing more precise measurements of ability levels. This approach tailors the testing experience to each student’s proficiency.

- Simulation and virtual environments: Simulations allow students to practice real-world scenarios in a safe environment and provide opportunities for formative assessment during the learning process. For example, a medical student might practice a surgical procedure in a virtual environment.

- Automated essay scoring: Software can analyze essay responses, providing feedback on grammar, style, and content, though human judgment remains essential for comprehensive evaluation.

- Data analytics: Educational data mining techniques analyze assessment data to identify trends and patterns, informing instructional adjustments and improving the effectiveness of learning programs.

Example: Using a Learning Management System (LMS) to deliver online quizzes and track student progress, providing immediate feedback and identifying knowledge gaps.

Incorporating technology thoughtfully requires careful consideration of accessibility, equity, and the limitations of technology. It’s vital to ensure that technology enhances, rather than hinders, the learning process.

Q 8. Describe your experience with using item response theory (IRT).

Item Response Theory (IRT) is a family of statistical models used in assessment to analyze individual item performance and estimate examinee abilities. Unlike classical test theory, which works mainly with total scores, IRT models the relationship between an examinee’s response to an item and their underlying latent trait (the ability being measured). This allows for more precise measurements and comparisons across different tests and test forms.

In my experience, I’ve extensively used IRT in developing high-stakes assessments. For example, I led a project to develop a new teacher certification exam. We used IRT to calibrate items across different test forms, ensuring fairness and comparability. This involved analyzing item parameters (difficulty, discrimination, and guessing parameters) using software such as BILOG-MG (Winsteps is a common alternative when a Rasch model, which estimates item difficulty only, is appropriate). We identified items that were performing poorly and revised them based on IRT analyses. The result was a more efficient and psychometrically sound assessment.

Furthermore, I’ve utilized IRT for computer adaptive testing (CAT), which tailors the difficulty of items presented to the examinee based on their responses. This increased efficiency and precision of measurement, reducing the overall test length while maintaining high reliability.
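For readers less familiar with the three item parameters named above, the three-parameter logistic (3PL) model can be sketched in a few lines. The parameter values are hypothetical, and the optional scaling constant (often written D = 1.7) is omitted here for simplicity.

```python
import math


def p_correct_3pl(theta, a, b, c):
    """3PL probability that an examinee of ability theta answers correctly.

    a: discrimination, b: difficulty, c: pseudo-guessing (lower asymptote).
    """
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))


if __name__ == "__main__":
    # Hypothetical item: moderate discrimination, average difficulty,
    # and a 20% chance of guessing correctly.
    for theta in (-2.0, 0.0, 2.0):
        print(theta, round(p_correct_3pl(theta, a=1.2, b=0.0, c=0.2), 3))
```

Setting `c = 0` gives the two-parameter model, and additionally fixing `a = 1` gives a Rasch-type model in which items differ only in difficulty.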

Q 9. How do you handle challenges in assessment development?

Assessment development inevitably presents challenges. I approach these systematically. First, I clearly define the assessment goals and objectives, ensuring alignment with the intended learning outcomes. This clarifies the scope and reduces the likelihood of unexpected issues. Second, I prioritize thorough content validation, involving subject matter experts (SMEs) throughout the development process to ensure accuracy and relevance.

Challenges like item writing difficulties or unexpected technical issues are addressed iteratively through pilot testing and feedback analysis. This may involve refining items, updating test administration procedures, and even refining the overall assessment design. For instance, in a recent project, pilot testing revealed an unexpected bias in an item. By analyzing the results, we identified the source of the bias and revised the item, demonstrating a commitment to fairness and accuracy.

Ultimately, I embrace challenges as opportunities for improvement, ensuring that assessments are not just valid but also reliable and fair.

Q 10. How do you ensure assessment results are actionable and informative?

Actionable and informative assessment results require careful consideration of the intended audience and purpose. To achieve this, I focus on clear reporting and the use of meaningful metrics. For instance, instead of just providing a raw score, I might present percentile ranks, scaled scores, or proficiency levels, making the data easily interpretable for diverse audiences.

Furthermore, I ensure the results provide specific feedback that can inform instructional practices or individual learning plans. This might involve detailed performance reports at the item level, identifying areas of strength and weakness. For example, in a competency-based assessment, results would highlight specific skills mastered and those requiring further development, enabling targeted interventions.

Finally, I create reports that effectively communicate the data using clear visuals and concise summaries, making the information accessible to both technical and non-technical stakeholders.
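As a rough sketch of turning raw scores into more interpretable metrics, the snippet below applies a linear transformation to a reporting scale and then maps the result to a proficiency level. The scale (mean 500, SD 100), cut scores, and level labels are hypothetical; operational programs typically derive scales and cuts through formal scaling and standard-setting studies.

```python
from bisect import bisect_right

# Hypothetical cut scores on the reporting scale and the levels they separate.
CUTS = [400, 500, 600]
LEVELS = ["Below Basic", "Basic", "Proficient", "Advanced"]


def scaled_score(raw, raw_mean, raw_sd, scale_mean=500, scale_sd=100):
    """Linearly transform a raw score onto the reporting scale."""
    z = (raw - raw_mean) / raw_sd
    return round(scale_mean + scale_sd * z)


def proficiency_level(scaled):
    """Return the level whose score band contains the scaled score."""
    return LEVELS[bisect_right(CUTS, scaled)]


if __name__ == "__main__":
    score = scaled_score(raw=34, raw_mean=30, raw_sd=8)
    print(score, proficiency_level(score))
```

Reporting the level alongside the scaled score gives non-technical stakeholders a label they can act on while keeping the finer-grained number available.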

Q 11. Describe your experience with criterion-referenced and norm-referenced assessments.

Criterion-referenced assessments measure performance against a predetermined standard, while norm-referenced assessments compare performance against a group. I have extensive experience with both.

Criterion-referenced assessments are ideal when the goal is to measure mastery of specific skills or knowledge. For example, a driving test is criterion-referenced; you either meet the driving standards or you don’t. In my work, I’ve developed several criterion-referenced assessments for evaluating student proficiency in various subjects.

Norm-referenced assessments, on the other hand, rank individuals within a group. Standardized achievement tests are typical examples, allowing comparisons among students. I’ve been involved in the development and analysis of norm-referenced assessments, including the creation of norms and the interpretation of standardized scores.

The choice between these approaches depends entirely on the assessment’s purpose and the desired information.

Q 12. How do you evaluate the effectiveness of an assessment strategy?

Evaluating the effectiveness of an assessment strategy requires a multi-faceted approach. First, I assess the psychometric properties of the assessment, focusing on reliability (consistency of scores) and validity (accuracy in measuring the intended construct). Reliability can be assessed using techniques like Cronbach’s alpha, while validity involves examining content, criterion, and construct validity evidence.

Second, I consider the practicality and feasibility of the assessment, examining factors such as time constraints, cost-effectiveness, and ease of administration and scoring. Third, I analyze the impact of the assessment on student learning and instructional practice. This might involve studying the relationship between assessment scores and subsequent performance or gathering feedback from teachers and students on the assessment’s usefulness.

Finally, I examine whether the assessment achieved its intended purpose and led to improved outcomes. This holistic approach ensures a comprehensive evaluation of the assessment’s effectiveness.
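Cronbach’s alpha, mentioned above as a reliability estimate, can be computed directly from an examinee-by-item score matrix. This is a minimal sketch using only the standard library; the score matrix is hypothetical, and the function assumes complete data and non-zero variance in total scores.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a matrix with one row per examinee, one column per item."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("Alpha requires at least two items.")
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    total_variance = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


if __name__ == "__main__":
    # Hypothetical scores for four examinees on three rubric-scored items.
    scores = [
        [2, 3, 3],
        [4, 4, 5],
        [1, 2, 2],
        [3, 3, 4],
    ]
    print(round(cronbach_alpha(scores), 3))
```

Alpha reflects internal consistency only, so it complements rather than replaces the validity evidence described above.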

Q 13. What experience do you have with adaptive testing?

Adaptive testing dynamically adjusts the difficulty of items presented to the test-taker based on their responses. I have significant experience with adaptive testing, primarily using computer-based platforms. This technology is particularly useful for optimizing test length while maintaining measurement precision.

I’ve worked on projects where we implemented item banks tailored for adaptive testing, using IRT models to select items appropriate for each examinee’s estimated ability level. For example, in a language proficiency assessment, an adaptive test might begin with questions of moderate difficulty. If the test-taker answers correctly, the difficulty increases; if incorrectly, it decreases. This approach ensures that each examinee is challenged appropriately, leading to more efficient and accurate measurements.

The advantages include personalized assessment experiences, reduced testing time, and improved measurement precision. However, careful consideration must be given to item bank development, algorithm design, and the potential for algorithmic bias.
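The "harder after a correct answer, easier after an incorrect one" logic can be illustrated with a simple staircase procedure. This is a deliberately simplified sketch: operational adaptive tests usually select items by IRT information and estimate ability with maximum-likelihood or Bayesian methods. The item bank, step sizes, and function names are hypothetical.

```python
# Hypothetical item bank: item id -> difficulty on an arbitrary ability scale.
BANK = {"q1": -2.0, "q2": -1.0, "q3": 0.0, "q4": 1.0, "q5": 2.0, "q6": 1.5}


def next_item(bank, used, estimate):
    """Pick the unused item whose difficulty is closest to the current estimate."""
    candidates = [item for item in bank if item not in used]
    return min(candidates, key=lambda item: abs(bank[item] - estimate))


def run_adaptive_test(bank, answer_fn, n_items=4, start=0.0, step=1.0):
    """Administer n_items adaptively; return the final estimate and items used."""
    estimate, used = start, []
    for _ in range(n_items):
        item = next_item(bank, used, estimate)
        used.append(item)
        # Move up after a correct answer, down after an incorrect one.
        estimate += step if answer_fn(item) else -step
        step = max(step / 2, 0.125)  # smaller moves as evidence accumulates
    return estimate, used


if __name__ == "__main__":
    # Simulated examinee who answers correctly whenever difficulty <= 1.2.
    estimate, used = run_adaptive_test(BANK, lambda item: BANK[item] <= 1.2)
    print(estimate, used)
```

Even this toy version shows why item bank coverage matters: once items near the examinee’s level run out, the procedure is forced to administer poorly targeted items.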

Q 14. How do you communicate assessment results to stakeholders?

Communicating assessment results effectively requires tailoring the information to different stakeholders. For teachers, I provide detailed reports with item-level analysis, highlighting areas of strength and weakness to inform instructional decisions. For administrators, I present summaries of overall performance, identifying trends and patterns. For students, the communication is more personalized, focusing on individual strengths and areas for improvement.

I use a variety of methods to convey the results, including written reports, presentations, and data visualizations. For example, I might use graphs and charts to illustrate performance trends, making complex data more accessible. Furthermore, I ensure that the language used is clear and easily understood, avoiding technical jargon whenever possible. The goal is to ensure that every stakeholder can understand and utilize the assessment data to make informed decisions.

Transparency and open communication are paramount throughout the process, ensuring that all stakeholders understand the assessment’s purpose, methodology, and implications.

Q 15. Describe your experience with developing assessments for different learning styles.

Developing assessments for diverse learning styles is crucial for ensuring fair and accurate evaluation. It’s not a one-size-fits-all approach; instead, it requires understanding the different ways individuals learn and adapting assessment methods accordingly. For example, visual learners benefit from diagrams, charts, and videos, while auditory learners respond well to lectures, discussions, and audio recordings. Kinesthetic learners, on the other hand, need hands-on activities and practical applications.

- Visual Learners: I incorporate visual aids like infographics, flowcharts, and image-based questions into my assessments.

- Auditory Learners: I utilize oral presentations, interviews, and audio-based quizzes.

- Kinesthetic Learners: I design assessments that involve simulations, role-playing, or practical demonstrations.

- Reading/Writing Learners: Traditional written exams and essays remain effective for this group, but I also incorporate varied question types such as short answer and multiple choice to cater to different comprehension levels.

In practice, I often create assessments with multiple formats within a single evaluation to cater to a broad range of learning preferences. For instance, a module on project management might include a written report, a presentation, and a practical project simulation.

Q 16. What software or tools are you proficient in for assessment development?

Proficiency in assessment development software is essential. My expertise encompasses several tools, each with its strengths:

- Articulate Storyline 360: For creating engaging and interactive e-learning modules and assessments with branching scenarios and multimedia integration. I use it to design complex assessments with adaptive questioning, providing personalized feedback based on learner responses.

- Adobe Captivate: Similar to Articulate Storyline, but with a robust video editing capability. This is useful for creating video-based assessments or incorporating video demonstrations into assessments.

- ExamView: A powerful tool for creating and managing traditional paper-based and computer-based tests. Its test generation features and item banking capabilities improve efficiency and help keep test forms consistent.

- Google Forms/Microsoft Forms: These are excellent for quick surveys and simple assessments, useful for formative evaluations and gathering rapid feedback.

- Moodle/Canvas: I’m experienced in integrating assessments within Learning Management Systems (LMS). This allows for seamless delivery, grading, and tracking of student progress.

Beyond software, I’m also proficient in using spreadsheet software (Excel, Google Sheets) for data analysis and reporting, crucial for interpreting assessment results.

Q 17. How do you ensure the security and confidentiality of assessment data?

Security and confidentiality of assessment data are paramount. My approach involves a multi-layered strategy:

- Secure Storage: All assessment data is stored on password-protected servers with restricted access. I utilize cloud-based solutions with robust security features like encryption and two-factor authentication.

- Data Anonymization: When feasible, I anonymize data to protect individual identities. This is particularly important when analyzing results for research or improvement purposes.

- Access Control: I implement strict access control protocols, ensuring that only authorized personnel have access to sensitive assessment data.

- Compliance with Regulations: I ensure all my practices comply with relevant data privacy regulations, such as FERPA (Family Educational Rights and Privacy Act) or GDPR (General Data Protection Regulation), depending on the context.

- Regular Security Audits: I advocate for and participate in regular security audits to identify and address any potential vulnerabilities.

In cases where sensitive information must be retained, I utilize robust encryption methods to protect the data during storage and transmission.
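One common way to protect identities in analysis files is to replace student IDs with keyed hashes. The sketch below uses HMAC-SHA256 from the Python standard library; the function name and example key are hypothetical, and in practice the key would be stored separately from the data. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can re-link records.

```python
import hashlib
import hmac


def pseudonymize(student_id: str, secret_key: bytes) -> str:
    """Return a stable token for a student ID that cannot be reversed without the key."""
    digest = hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


if __name__ == "__main__":
    key = b"example-key-store-securely"  # placeholder; load from a secrets store
    print(pseudonymize("S-1024", key))
```

Because the same ID always maps to the same token under a given key, results can still be linked across assessments without exposing the original identifier.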

Q 18. How do you stay updated on the latest trends and best practices in assessment?

Staying current in the field of assessment requires ongoing professional development. My strategies include:

- Professional Organizations: Active membership in organizations like the American Educational Research Association (AERA) provides access to research, conferences, and networking opportunities.

- Conferences and Workshops: Attending relevant conferences and workshops allows me to learn about the latest assessment technologies and best practices from industry experts.

- Peer-Reviewed Journals: I regularly review peer-reviewed journals focused on educational measurement and assessment to stay informed on current research and trends.

- Online Resources: Utilizing reputable online resources and professional development platforms offers access to a wealth of information and training materials.

- Mentorship and Collaboration: I actively engage in mentorship and collaboration with colleagues to share knowledge and best practices.

Continuous learning is essential to remain adaptable and effective in this ever-evolving field.

Q 19. Describe your experience with developing assessments for diverse populations.

Developing assessments for diverse populations necessitates a deep understanding of cultural nuances, language barriers, and learning differences. My approach emphasizes inclusivity and accessibility:

- Universal Design for Learning (UDL): I apply the principles of UDL to create assessments that are flexible and adaptable to diverse learners. This means providing multiple means of representation, action, and engagement.

- Language Support: Where necessary, I provide assessments in multiple languages or offer translation services to ensure equitable access.

- Accessibility Considerations: I design assessments that are accessible to individuals with disabilities, adhering to accessibility guidelines like WCAG (Web Content Accessibility Guidelines).

- Cultural Sensitivity: I carefully review assessments to ensure they are culturally appropriate and avoid biases that might disadvantage certain groups. This includes considering the cultural context and avoiding stereotypes.

- Pilot Testing: Before large-scale implementation, I conduct pilot testing with representative samples of the diverse population to identify and address potential issues.

For example, when developing assessments for ESL (English as a Second Language) learners, I might incorporate visual aids, simplify language, or allow for more time to complete the assessment.

Q 20. How do you manage the budget and timeline for an assessment project?

Effective budget and timeline management are crucial for successful assessment projects. My approach involves:

- Detailed Project Plan: I begin with a comprehensive project plan that outlines tasks, timelines, and resource allocation.

- Budget Estimation: I develop a detailed budget that accounts for all anticipated costs, including software, personnel, materials, and potential unforeseen expenses.

- Regular Monitoring: I closely monitor the project’s progress against the timeline and budget, making adjustments as needed.

- Risk Management: I proactively identify potential risks and develop mitigation strategies to minimize disruptions.

- Clear Communication: I maintain clear and consistent communication with stakeholders to ensure alignment and address any concerns.

Utilizing project management tools like Gantt charts or agile methodologies can further enhance efficiency and transparency in managing budget and timelines. For instance, breaking down the project into smaller, manageable phases with defined deliverables allows for better control and progress tracking.

Q 21. Explain your experience with qualitative and quantitative data analysis in assessment.

Both qualitative and quantitative data analysis are essential for comprehensive assessment evaluation. Quantitative analysis provides numerical data for objective measurement, while qualitative analysis provides rich contextual understanding.

- Quantitative Analysis: This involves statistical analysis of numerical data, such as test scores, response times, and item difficulty indices. I utilize statistical software packages (SPSS, R) to analyze data, identifying trends, correlations, and patterns.

- Qualitative Analysis: This involves analyzing non-numerical data, such as open-ended responses, interviews, and observations. Techniques like thematic analysis and content analysis are used to identify recurring themes and patterns in the data.

- Mixed-Methods Approach: Often, I employ a mixed-methods approach, combining both quantitative and qualitative data to gain a more complete understanding of the assessment’s effectiveness. This integrated approach provides a richer interpretation of findings than either method alone.

For example, I might analyze quantitative data to determine the overall average score on a test and then use qualitative data from student interviews to understand reasons for high or low performance. This allows me to refine assessments based on both objective measures and insightful feedback.
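The mixed-methods example above can be sketched by splitting students at the mean score and tallying interview themes within each group. The scores and theme codes are hypothetical; the codes are assumed to have been assigned by a human analyst during thematic analysis.

```python
from collections import Counter
from statistics import mean

# Hypothetical test scores and analyst-assigned interview theme codes.
SCORES = {"s1": 42, "s2": 88, "s3": 51, "s4": 93}
INTERVIEW_CODES = {
    "s1": ["unclear instructions", "time pressure"],
    "s2": ["good preparation"],
    "s3": ["time pressure"],
    "s4": ["good preparation", "time pressure"],
}


def themes_by_group(scores, codes):
    """Return the mean score and theme counts for students below vs. at/above it."""
    cutoff = mean(scores.values())
    groups = {"below_mean": Counter(), "at_or_above_mean": Counter()}
    for student, score in scores.items():
        key = "at_or_above_mean" if score >= cutoff else "below_mean"
        groups[key].update(codes.get(student, []))
    return cutoff, groups


if __name__ == "__main__":
    cutoff, groups = themes_by_group(SCORES, INTERVIEW_CODES)
    print(f"mean score: {cutoff}")
    for name, counts in groups.items():
        print(name, counts.most_common())
```

A theme that appears mainly in the lower-scoring group, such as unclear instructions here, points to something in the assessment itself that may need revision.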

Q 22. How do you involve stakeholders in the assessment development process?

Stakeholder involvement is crucial for creating assessments that are relevant, valid, and useful. I employ a multi-faceted approach, starting with initial needs analysis workshops. These sessions bring together key stakeholders – instructors, learners, subject matter experts, administrators, and even potential employers – to define learning objectives, identify assessment needs, and establish criteria for success. This collaborative process ensures everyone’s voice is heard and that the assessment aligns with the overall program goals.

Following the needs analysis, I use a variety of methods to maintain ongoing communication and feedback. This includes regular progress updates, focus groups to test assessment drafts, and surveys to collect feedback on the final assessments. For example, during the development of a new certification exam, we conducted several focus groups with practicing professionals to ensure the assessment accurately reflected real-world skills and knowledge. This iterative process significantly improved the assessment’s validity and acceptance by the professional community.

Finally, I believe in transparent communication throughout the entire process. Regular reports and feedback sessions help stakeholders understand the rationale behind design choices and address any concerns. This open dialogue fosters trust and ensures the final assessment meets the needs of all involved parties.

Q 23. What is your experience with legal and ethical considerations in assessment?

Legal and ethical considerations are paramount in assessment development. I am acutely aware of issues concerning fairness, equity, accessibility, and the protection of learner data. For instance, I ensure all assessments adhere to the Americans with Disabilities Act (ADA) guidelines, providing reasonable accommodations for learners with disabilities. This might involve offering alternative formats like Braille or audio versions of tests, providing extended time, or adjusting testing environments.

Furthermore, I prioritize maintaining the confidentiality and security of learner data, adhering to all relevant privacy regulations such as FERPA (Family Educational Rights and Privacy Act) and GDPR (General Data Protection Regulation). This includes secure storage of data, anonymization where possible, and strict control over access to assessment results. I meticulously document all decisions and processes related to assessment development to ensure transparency and accountability.

I also carefully consider the potential for bias in assessment design and strive to create assessments that are free from cultural, gender, or socioeconomic bias. This includes carefully reviewing all questions and materials to ensure they are neutral and avoid using language or imagery that could disadvantage particular groups.

Q 24. Describe your experience with using different assessment formats (e.g., multiple-choice, essay, performance-based).

My experience encompasses a wide range of assessment formats, each suited to different learning objectives and assessment needs. Multiple-choice questions are efficient for assessing factual recall and knowledge, and I leverage these when appropriate. However, I’m aware of their limitations in assessing higher-order thinking skills such as critical analysis and problem-solving.

Essay questions are effective for assessing a student’s ability to synthesize information, construct arguments, and communicate their understanding. For example, in a history course, an essay question might prompt a student to analyze the causes of a particular historical event. Performance-based assessments are invaluable for evaluating practical skills. This could range from a coding project in computer science to a science experiment in biology, or a presentation in a communication course.

The choice of assessment format is always strategic. I select the most appropriate method(s) based on the specific learning objectives and the skills I aim to measure. Often, a combination of formats is used to obtain a comprehensive and nuanced understanding of learner achievement.

Q 25. How do you ensure that assessments align with learning objectives?

Alignment between assessments and learning objectives is fundamental to valid and reliable assessment. I begin by clearly defining the learning objectives using a taxonomy such as Bloom’s revised taxonomy, ensuring they are measurable, observable, and achievable. This creates a clear framework for designing assessment tasks.

Each assessment item is then meticulously crafted to directly address a specific learning objective. For example, if a learning objective states that students will be able to ‘analyze the impact of industrialization on society,’ assessment items will require students to perform this analysis. This might involve essay questions, case studies, or problem-solving scenarios that directly assess analytical skills.

I use a mapping table to explicitly link each assessment item to the learning objective it measures. This table serves as a reference point throughout development and review, making gaps and ambiguities easy to spot. This systematic approach helps ensure that the assessment reflects what was taught and what students were expected to learn.
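
As a rough illustration of how such a mapping table can be checked, here is a minimal Python sketch. The item IDs, objective IDs, and the `check_alignment` helper are all hypothetical names invented for this example. It lists objectives that no item covers and items that point at an objective that was never declared.

```python
from collections import defaultdict

# Hypothetical mapping table: item ID -> learning objective ID it targets.
ITEM_TO_OBJECTIVE = {
    "item_01": "LO1",
    "item_02": "LO1",
    "item_03": "LO2",
    "item_04": "LO4",  # LO4 is deliberately not a declared objective
}

# Hypothetical list of objectives declared for the unit.
OBJECTIVES = ["LO1", "LO2", "LO3"]


def check_alignment(item_to_objective, objectives):
    """Return (items per objective, objectives with no items, items with unknown objectives)."""
    coverage = defaultdict(list)
    for item, objective in item_to_objective.items():
        coverage[objective].append(item)
    # Objectives that no assessment item addresses.
    uncovered = [lo for lo in objectives if lo not in coverage]
    # Items mapped to an objective that is not in the declared list.
    orphaned = sorted(
        item for item, lo in item_to_objective.items() if lo not in objectives
    )
    return dict(coverage), uncovered, orphaned


coverage, uncovered, orphaned = check_alignment(ITEM_TO_OBJECTIVE, OBJECTIVES)
print("Uncovered objectives:", uncovered)
print("Items without a valid objective:", orphaned)
```

In practice the table usually lives in a spreadsheet or an item-banking tool. The point is only that both directions of the mapping get checked.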

Q 26. How do you address bias in assessment design and implementation?

Addressing bias is a critical aspect of assessment development and requires careful planning and execution. I use several strategies to mitigate bias. Firstly, I carefully examine the language used in assessment items, ensuring it is inclusive, culturally sensitive, and avoids gendered or culturally specific terms that could disadvantage certain groups.

Secondly, I ensure that assessment content is representative of the diverse student population. This involves incorporating examples and scenarios that reflect the experiences and backgrounds of all learners. Thirdly, I involve diverse reviewers in the assessment development process, to provide different perspectives and identify any potential biases I may have overlooked.

Finally, I use statistical analysis to identify potential bias after the assessment is administered. This involves examining item response data for differential item functioning (DIF): cases where students of comparable overall ability but from different groups have different chances of answering the same item correctly. If DIF is detected, the item is reviewed and then revised or removed to reduce bias.
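
The answer above does not name a specific DIF method. One widely used option is the Mantel-Haenszel procedure, sketched below on the assumption that candidates are matched on total score. The function names and the response counts are made up for illustration. A common odds ratio near 1 suggests little or no DIF, and the delta-scale value is the usual transformation of that ratio, -2.35 times its natural log.

```python
import math
from collections import defaultdict


def mantel_haenszel_odds_ratio(records):
    """Common odds ratio for one item.

    records: iterable of (group, total_score, correct), where group is
    "ref" or "focal" and correct is 1 or 0. Candidates are stratified
    by total_score so that groups are compared at similar ability.
    """
    # counts[score] = [ref_correct, ref_incorrect, focal_correct, focal_incorrect]
    counts = defaultdict(lambda: [0, 0, 0, 0])
    for group, score, correct in records:
        idx = (0 if group == "ref" else 2) + (0 if correct else 1)
        counts[score][idx] += 1

    num = 0.0
    den = 0.0
    for a, b, c, d in counts.values():
        n = a + b + c + d
        num += a * d / n
        den += b * c / n
    if den == 0:
        raise ValueError("Odds ratio is undefined for these counts")
    return num / den


def make_records(group, score, n_correct, n_incorrect):
    """Helper that expands counts into individual response records."""
    return [(group, score, 1)] * n_correct + [(group, score, 0)] * n_incorrect


# Made-up illustrative data for a single item, two score strata.
records = (
    make_records("ref", 1, 6, 4) + make_records("focal", 1, 3, 7)
    + make_records("ref", 2, 8, 2) + make_records("focal", 2, 5, 5)
)

alpha = mantel_haenszel_odds_ratio(records)
delta = -2.35 * math.log(alpha)  # negative values: item is harder for the focal group
print(f"MH odds ratio: {alpha:.2f}, delta scale: {delta:.2f}")
```

A statistical flag like this is a prompt for expert review of the item, not proof of bias on its own.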

Q 27. What is your experience with developing assessments for online delivery?

I have extensive experience developing assessments for online delivery, understanding the unique challenges and opportunities presented by this modality. Key considerations include accessibility, security, and the use of technology to enhance the assessment experience.

To ensure accessibility, I provide alternative text for images and captions or transcripts for videos, use readable font sizes and sufficient color contrast, and offer alternative formats of assessments for students with disabilities. Security is paramount, and I use techniques such as proctoring software, randomized question banks, and time limits to maintain the integrity of online assessments.
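
To make the randomized question bank idea concrete, here is a minimal Python sketch. The bank contents, the blueprint counts, and the `draw_form` function are hypothetical. Each student gets a different selection of items, but the selection is reproducible from the exam and student IDs, so a form can be regenerated for review or appeals.

```python
import random

# Hypothetical question bank grouped by topic.
QUESTION_BANK = {
    "topic_a": ["A1", "A2", "A3", "A4"],
    "topic_b": ["B1", "B2", "B3"],
}

# Hypothetical blueprint: how many items to draw per topic.
BLUEPRINT = {"topic_a": 2, "topic_b": 1}


def draw_form(bank, blueprint, exam_id, student_id):
    """Draw a per-student form that respects the blueprint counts."""
    # Seeding with exam and student IDs makes the draw reproducible.
    rng = random.Random(f"{exam_id}:{student_id}")
    form = []
    for topic, n_items in blueprint.items():
        form.extend(rng.sample(bank[topic], n_items))
    rng.shuffle(form)  # also randomize presentation order
    return form


print(draw_form(QUESTION_BANK, BLUEPRINT, "exam-2024", "student-001"))
print(draw_form(QUESTION_BANK, BLUEPRINT, "exam-2024", "student-002"))
```

Python's `random` module is not designed for security purposes, so a production system would need to consider how predictable the seeds are. Platforms such as Moodle and Canvas provide their own built-in randomization features.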

I also leverage technology to create engaging and interactive assessments. This might involve incorporating multimedia elements, simulations, or interactive exercises to make the assessment process more dynamic for learners. For instance, I’ve designed online assessments using platforms like Moodle and Canvas, incorporating features like adaptive testing and automated feedback so that students receive results immediately.

Q 28. Describe a time you had to revise an assessment strategy due to unexpected challenges.

During the development of a high-stakes licensing exam, we encountered an unexpected challenge. Initial pilot testing revealed low scores across all participant groups, indicating a problem with the assessment’s difficulty, not the candidates’ preparation. This suggested we needed to adjust the assessment strategy significantly.

Our initial analysis showed that the questions were overly complex and did not adequately reflect the actual tasks performed by professionals in the field. We conducted a thorough review of the assessment blueprint and content, comparing it with updated industry standards and feedback from subject matter experts. This led us to revise several questions, reduce the overall difficulty, and add more practice questions to the test bank.

We also adjusted the scoring system to better reflect the relative importance of different skills. Finally, we conducted further pilot testing with a new cohort to validate the revised assessment. The revised strategy produced more valid and reliable scores, giving us greater confidence that the exam measured the competencies required for licensure. This experience highlighted the importance of iterative design, ongoing feedback, and flexibility in responding to unforeseen challenges during assessment development.
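
The story above does not say which statistics were used on the pilot data. One standard starting point is the classical item difficulty index, the proportion of candidates who answer each item correctly. The sketch below uses made-up pilot responses, and the 0.3 cutoff for flagging an item as too hard is an illustrative assumption, not a fixed rule.

```python
def item_difficulty(responses):
    """Proportion correct per item.

    responses: list of per-candidate lists of 0/1 item scores,
    all of the same length.
    """
    n_candidates = len(responses)
    n_items = len(responses[0])
    return [
        sum(candidate[i] for candidate in responses) / n_candidates
        for i in range(n_items)
    ]


def flag_hard_items(p_values, threshold=0.3):
    """Indices of items whose proportion correct falls below the threshold."""
    return [i for i, p in enumerate(p_values) if p < threshold]


# Made-up pilot data: 4 candidates, 3 items.
pilot_responses = [
    [1, 0, 0],
    [1, 0, 1],
    [0, 0, 1],
    [1, 1, 0],
]

p_values = item_difficulty(pilot_responses)
hard_items = flag_hard_items(p_values)
print("Proportion correct per item:", p_values)
print("Items flagged as too hard:", hard_items)
```

Flagged items would then go to subject matter experts to decide whether the difficulty reflects real job demands or a poorly written question.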

Key Topics to Learn for Assessment Strategy Development Interview

- Needs Analysis & Goal Setting: Defining clear assessment objectives, identifying target audiences, and aligning assessments with organizational goals. Consider practical application in diverse settings, from employee performance reviews to large-scale competency modeling.

- Assessment Method Selection: Understanding the strengths and weaknesses of various assessment methods (e.g., questionnaires, interviews, simulations, performance tests) and choosing the most appropriate methods for specific needs. Explore case studies demonstrating effective method selection based on context and resources.

- Test Development & Validation: Designing valid and reliable assessments, ensuring fairness and minimizing bias. Understand the principles of psychometrics and the importance of data-driven decision-making in refining assessments.

- Data Analysis & Interpretation: Analyzing assessment data to draw meaningful conclusions, identifying trends, and making data-informed recommendations. Practice interpreting various types of assessment data and communicating findings effectively.

- Assessment Implementation & Delivery: Managing the logistics of assessment implementation, including scheduling, communication, and administration. Consider challenges related to scalability, accessibility, and ethical considerations.

- Evaluation & Improvement: Developing strategies for evaluating the effectiveness of assessment programs and making continuous improvements based on data and feedback. Explore methods for measuring the ROI of assessment initiatives.

Next Steps

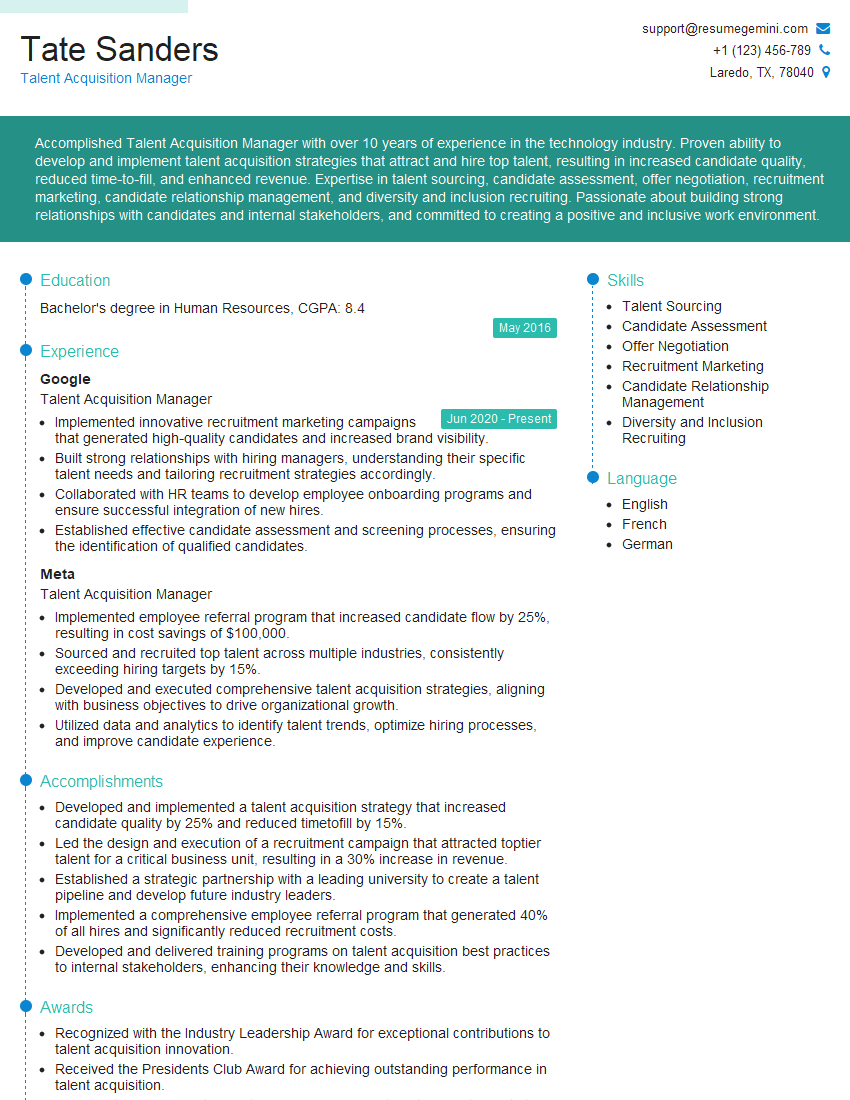

Mastering Assessment Strategy Development is crucial for career advancement in HR, talent management, and organizational development. A strong understanding of these principles opens doors to leadership roles and higher earning potential. To significantly enhance your job prospects, creating a well-structured, ATS-friendly resume is paramount. ResumeGemini is a trusted resource offering tools and guidance to build a professional resume that showcases your skills and experience effectively. Examples of resumes tailored to Assessment Strategy Development are available to help you craft a compelling application that stands out.
