Preparation is the key to success in any interview. In this post, we’ll explore crucial Cognitive Ability Assessment interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Cognitive Ability Assessment Interview

Q 1. Explain the difference between fluid and crystallized intelligence.

Fluid intelligence and crystallized intelligence represent two distinct, yet related, aspects of cognitive ability. Think of it like this: fluid intelligence is your brain’s raw processing power, your ability to think flexibly and solve novel problems. Crystallized intelligence, on the other hand, is your accumulated knowledge and skills gained through experience and learning.

Fluid intelligence is the ability to reason abstractly and solve problems independently of prior knowledge. It’s your capacity to adapt to new situations and learn quickly. For example, solving a complex logic puzzle you’ve never seen before relies heavily on fluid intelligence. It typically peaks in early adulthood and then gradually declines with age.

Crystallized intelligence is the storehouse of information and skills you’ve acquired throughout your life. It reflects your vocabulary, general knowledge, and expertise in specific areas. For instance, answering questions about historical events or explaining complex scientific concepts taps into your crystallized intelligence. Unlike fluid intelligence, crystallized intelligence tends to keep increasing through much of adulthood as we accumulate more knowledge and experience, usually leveling off only late in life.

In essence, fluid intelligence is about how well you think, while crystallized intelligence is about what you know.

Q 2. Describe three common types of cognitive ability tests.

Many cognitive ability tests exist, each measuring different aspects of cognition. Three common types include:

- Raven’s Progressive Matrices: This test focuses primarily on fluid intelligence, requiring participants to identify patterns and complete visual analogies. It’s relatively culture-fair, meaning it’s less influenced by specific knowledge or language skills. A typical item asks the test-taker to pick the missing piece of an abstract pattern, with the patterns becoming more complex as the test progresses.

- Wechsler Adult Intelligence Scale (WAIS) or Wechsler Intelligence Scale for Children (WISC): These are comprehensive intelligence tests that assess a broad range of cognitive abilities, including verbal comprehension, perceptual reasoning, working memory, and processing speed. They provide a full-scale IQ score along with scores for individual subtests, offering a more detailed picture of cognitive strengths and weaknesses. For example, one subtest might involve recalling a series of digits in reverse order (working memory).

- Wonderlic Personnel Test: This is a widely used brief cognitive ability test often employed in employment settings. It assesses various cognitive skills, like verbal comprehension, numerical reasoning, and problem-solving, within a short timeframe. The test is known for its brevity and efficiency in screening candidates for roles requiring problem-solving and quick thinking. Typical questions involve solving simple math problems or completing analogies.

Q 3. What are the advantages and disadvantages of using norm-referenced scoring?

Norm-referenced scoring compares an individual’s performance to the performance of a large, representative group (the norm group) who have previously taken the same test. This allows us to determine how an individual’s score ranks relative to others. Let’s examine the advantages and disadvantages.

Advantages:

- Provides a standardized score: Allows for easy comparison across different individuals and groups, regardless of when or where the test was administered.

- Facilitates ranking and selection: Useful in competitive situations, such as college admissions or job recruitment, where selecting top performers is crucial.

- Identifies relative strengths and weaknesses: Provides insight into an individual’s performance compared to the norm group, highlighting areas of excellence or deficiency.

Disadvantages:

- Dependent on the norm group: The validity of the score is only as good as the representativeness of the norm group. A poorly chosen norm group can lead to biased interpretations.

- Can be misleading for absolute performance: Focuses on relative standing rather than absolute achievement. A high percentile rank can still correspond to weak absolute performance if the norm group as a whole scored low.

- Can exacerbate inequalities: If the norm group is not inclusive, the test may unfairly disadvantage individuals from underrepresented groups.
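
To make the dependence on the norm group concrete, here is a minimal sketch that ranks the same raw score against two different norm groups. The scores are invented for illustration, and `percentile_rank` is a hypothetical helper, not part of any published test.

```python
def percentile_rank(score, norm_scores):
    """Percentage of the norm group scoring strictly below `score`."""
    below = sum(1 for s in norm_scores if s < score)
    return 100.0 * below / len(norm_scores)


# Invented raw scores for two hypothetical norm groups.
general_population = [12, 15, 18, 20, 21, 23, 24, 26, 28, 30]
graduate_applicants = [22, 24, 26, 27, 28, 29, 30, 31, 33, 35]

candidate_score = 27
rank_general = percentile_rank(candidate_score, general_population)
rank_graduate = percentile_rank(candidate_score, graduate_applicants)

# The same raw score lands at very different percentiles.
print(rank_general, rank_graduate)
```

With this made-up data the same raw score of 27 sits near the top of one group and in the lower third of the other, which is why the choice of norm group should always be stated alongside a percentile.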

Q 4. How do you interpret a candidate’s score on a cognitive ability test?

Interpreting a candidate’s score on a cognitive ability test requires a nuanced approach that goes beyond simply looking at the raw score. Consider these steps:

- Understand the test’s standardization: Examine the test manual to understand the norm group and scoring system used. This includes percentiles, standard scores (e.g., z-scores, T-scores), and any other relevant statistical information.

- Consider the context: What job or position is the test being used for? The required cognitive abilities will differ greatly between a data scientist role and a customer service position. The interpretation should align with the specific job requirements.

- Compare the score to the norm: Determine where the candidate’s score falls within the distribution of scores from the norm group. A percentile rank of 90, for example, indicates that the candidate performed better than 90% of the norm group.

- Consider other factors: A cognitive ability test shouldn’t be the only criterion used for selection. Consider the candidate’s experience, skills, personality, and other relevant factors. A low cognitive ability score might not preclude a candidate if other strengths compensate for it.

- Recognize test limitations: Cognitive ability tests only assess a limited range of cognitive abilities. They don’t measure motivation, work ethic, or interpersonal skills.

Example: If a candidate scores in the 75th percentile on a verbal reasoning test for a marketing position, this suggests they possess above-average verbal reasoning skills compared to others. However, this score alone isn’t sufficient for hiring; you must integrate it with their experience and interview performance to make a comprehensive evaluation.
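
The score conversions mentioned above can be sketched in a few lines. This is only an illustration: the norm mean and standard deviation are assumed values, the function name `standard_scores` is made up, and the percentile step assumes scores in the norm group are roughly normally distributed.

```python
from statistics import NormalDist


def standard_scores(raw, norm_mean, norm_sd):
    """Convert a raw score to a z-score, a T-score, and a normal-curve percentile."""
    z = (raw - norm_mean) / norm_sd          # distance from the norm mean in SD units
    t = 50 + 10 * z                          # T-scores are scaled to mean 50, SD 10
    percentile = 100 * NormalDist().cdf(z)   # valid only if scores are roughly normal
    return z, t, percentile


# Assumed norm-group parameters, chosen for illustration only.
z, t, pct = standard_scores(raw=32, norm_mean=25, norm_sd=7)
print(f"z = {z:.2f}, T = {t:.1f}, percentile = {pct:.0f}")
```

In practice the test manual's own conversion tables take precedence over a normal-curve approximation like this one.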

Q 5. Explain the concept of test validity and reliability in the context of cognitive ability assessment.

Test validity and reliability are crucial psychometric properties that determine the quality and trustworthiness of a cognitive ability test.

Validity refers to how well the test measures what it is intended to measure. A valid cognitive ability test accurately assesses the specific cognitive skills it claims to assess, and these skills are relevant to the real-world situations they are used for. Several types of validity exist, including content validity (does it cover the relevant content domain?), criterion validity (do scores correlate with, or predict, performance on a relevant criterion?), and construct validity (does it measure the theoretical construct it’s designed to measure?).

Reliability refers to the consistency and stability of the test’s measurements. A reliable test produces similar scores when administered repeatedly to the same individuals under similar conditions. High reliability means the test scores are not due to random error and are more likely to reflect the true cognitive ability of the individual. There are different ways to assess reliability, including test-retest reliability (consistency over time), internal consistency (consistency between items within the test), and inter-rater reliability (agreement between raters if the test requires subjective judgment).

Imagine a test designed to measure problem-solving ability. High validity means that people who score high on the test genuinely possess strong problem-solving skills in real-world scenarios. High reliability means that if the same person takes the test twice, they will obtain very similar scores both times, independent of temporary factors.
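
Internal consistency is commonly summarized with Cronbach’s alpha. The sketch below computes it from a small, invented matrix of item responses (one row per test-taker, 1 for correct, 0 for incorrect); the function name and data are assumptions for illustration.

```python
from statistics import pvariance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of rows (test-takers) by columns (items)."""
    k = len(item_scores[0])                                   # number of items
    item_columns = list(zip(*item_scores))                    # transpose to per-item columns
    item_variance_sum = sum(pvariance(col) for col in item_columns)
    total_variance = pvariance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Invented responses from six test-takers on a four-item test.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
]

alpha = cronbach_alpha(responses)
print(round(alpha, 3))
```

Test-retest reliability, by contrast, is usually reported as the correlation between scores from two administrations of the same test.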

Q 6. What are some ethical considerations when administering cognitive ability tests?

Ethical considerations are paramount when administering cognitive ability tests. Several key concerns include:

- Informed consent: Candidates must understand the purpose of the test, how the results will be used, and their right to refuse to participate.

- Confidentiality: Test results should be kept confidential and only accessed by authorized personnel. Data protection regulations need to be followed diligently.

- Fairness and bias: Tests should be free from bias and ensure equal opportunity for all candidates, regardless of their background or demographics. Care must be taken to avoid tests that disproportionately disadvantage certain groups.

- Test security: Measures must be in place to prevent test leakage and unauthorized access to test materials. This maintains the integrity of the test.

- Appropriate use: Tests should only be used for purposes they were designed for. Misusing a test, or using it to make high-stakes decisions without considering other relevant information, is unethical.

- Feedback to candidates: Providing meaningful feedback to candidates, not just their score, is crucial. Understanding what aspects of the test were difficult or easy can be helpful to them.

Q 7. How can you ensure fair and unbiased assessment practices?

Ensuring fair and unbiased assessment practices necessitates a multi-faceted approach:

- Using validated and reliable tests: Select tests that have demonstrated strong psychometric properties and are free from known biases.

- Developing diverse norm groups: Norm groups should be representative of the population for which the test is intended, minimizing potential biases based on gender, ethnicity, or socioeconomic status.

- Providing accommodations for individuals with disabilities: Reasonable accommodations, such as extended time or alternative formats, should be provided to ensure fair assessment for individuals with disabilities.

- Training assessors: Assessors should receive adequate training on administering, scoring, and interpreting the tests ethically and accurately.

- Using multiple assessment methods: Relying solely on cognitive ability tests is inappropriate. Combine results with other assessment methods, such as interviews, work samples, or situational judgment tests, for a more holistic evaluation.

- Regularly reviewing and updating assessment methods: Assessment practices should be reviewed and updated regularly so they remain fair and effective in light of evolving research and social contexts.

Transparency and communication are also key. Candidates should be clearly informed about the assessment process and the criteria for selection. By employing these measures, organizations can foster fairness, equity, and trust in their assessment practices.

Q 8. Discuss the impact of cultural biases on cognitive ability test results.

Cultural biases in cognitive ability tests are a significant concern. These biases arise when test questions or formats inadvertently favor individuals from certain cultural backgrounds over others. This isn’t about inherent ability, but rather about familiarity with specific cultural contexts, language nuances, and testing styles. For example, a question relying on knowledge of a specific sport popular in one culture might disadvantage individuals from cultures where that sport is unknown. This can lead to inaccurate assessments, underestimating the potential of individuals from underrepresented groups.

Addressing this requires careful test development and validation. This involves diverse teams creating questions that are culturally fair and avoiding culturally loaded content. Equally important is ensuring that the testing environment is comfortable and familiar to all test-takers, minimizing any additional stress that might arise from an unfamiliar setting.

Furthermore, using multiple assessment methods, and not relying solely on a single cognitive ability test, can help mitigate the effects of cultural bias. Combining cognitive assessments with behavioral interviews, work samples, or simulations provides a more holistic picture of a candidate’s abilities.

Q 9. How do you address concerns about test anxiety during assessment?

Test anxiety significantly impacts performance on cognitive ability assessments. High levels of anxiety can interfere with concentration, memory recall, and problem-solving abilities, leading to scores that don’t accurately reflect a person’s true cognitive capabilities.

To address this, we need a multi-pronged approach. Firstly, creating a relaxed and supportive testing environment is crucial. This includes clear instructions, a comfortable setting, and assuring the candidate that the test is simply one piece of information used in the selection process.

Secondly, providing test-takers with opportunities to practice similar questions beforehand can greatly reduce anxiety. This familiarization helps them understand the format and build confidence. Thirdly, incorporating calming techniques like deep breathing exercises or mindfulness practices before the test can be beneficial for some candidates.

Finally, understanding and acknowledging that anxiety is a normal human response can be reassuring for the test-taker, helping them to feel more comfortable and focused during the assessment.

Q 10. Describe your experience with different types of cognitive ability test questions (e.g., verbal, numerical, spatial reasoning).

My experience spans a wide range of cognitive ability test questions, encompassing verbal, numerical, and spatial reasoning tasks. Verbal reasoning typically involves assessing comprehension, vocabulary, and logical reasoning through passages and questions. I’ve worked with tests that involve analogies, sentence completion, and reading comprehension exercises.

Numerical reasoning questions assess mathematical skills and problem-solving capabilities. This could include tasks involving number series, data interpretation from tables and charts, and arithmetic calculations. I have experience with tests ranging from simple calculations to complex statistical reasoning problems.

Spatial reasoning tests evaluate the ability to visualize and manipulate objects in space. This often involves tasks like mental rotation of shapes, identifying patterns in visual arrays, and understanding spatial relationships. I’ve administered tests using both paper-and-pencil and computer-based formats, utilizing different types of shapes and patterns.

Q 11. How do you identify and mitigate potential response biases?

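Response biases, such as social desirability bias (responding in a way that is seen as socially acceptable) or acquiescence bias (agreeing with statements regardless of content), mainly distort self-report measures, such as the personality questionnaires often administered alongside cognitive ability tests. On the ability tests themselves, the more typical distortions are random guessing and careless or rushed responding. Identifying either kind requires careful analysis of the responses.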

One strategy is to include a mix of positively and negatively worded items to counteract acquiescence bias. For social desirability bias, using forced-choice questions or incorporating lie scales (questions designed to detect dishonest responding) can be helpful. Examining response patterns and inconsistencies within an individual’s responses is also crucial in detecting potential biases.

Mitigating these biases involves using validated test instruments, careful question design, and appropriate scoring methods that can account for or at least partially correct such responses. Statistical analysis of the results can help flag suspicious response patterns.
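
One simple way to flag suspicious response patterns is to look for long runs of identical answers, which can indicate careless or disengaged responding. The sketch below is an assumption-laden illustration: the function names and the threshold of 6 are hypothetical, and a flag should prompt a closer look rather than an automatic decision.

```python
from itertools import groupby


def longest_identical_run(answers):
    """Length of the longest run of consecutive identical answers."""
    return max((len(list(group)) for _, group in groupby(answers)), default=0)


def flag_suspicious(answers, run_threshold=6):
    """Flag a response sheet whose longest run reaches the (assumed) threshold."""
    return longest_identical_run(answers) >= run_threshold


# Invented multiple-choice answer strings, one character per item.
engaged = "ABDCABCDBA"
careless = "ABCCCCCCCD"

print(longest_identical_run(engaged), flag_suspicious(engaged))
print(longest_identical_run(careless), flag_suspicious(careless))
```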

Q 12. Explain the importance of integrating cognitive ability assessments with other selection methods.

Cognitive ability tests shouldn’t stand alone in the selection process. They provide valuable insights into general cognitive capabilities, but integrating them with other methods paints a more comprehensive and nuanced picture of a candidate’s suitability for a role. Relying solely on a cognitive ability test can lead to incomplete assessments, potentially missing strong candidates or overlooking critical skills.

Combining cognitive ability tests with structured interviews, behavioral assessments, work sample tests, and situational judgment tests provides a richer understanding of candidate characteristics. For example, a cognitive ability test may reveal high cognitive potential, but an interview can uncover communication skills or teamwork abilities. A work sample test allows for a direct assessment of practical skills. This holistic approach offers a more robust and valid prediction of job performance and reduces the chance of making biased hiring decisions.

Q 13. How do you interpret the results of a cognitive ability test in relation to job performance?

Interpreting cognitive ability test results in relation to job performance requires a nuanced understanding. A high score doesn’t automatically guarantee success, nor does a low score always signify failure. The relationship is often correlational, not deterministic. Furthermore, the strength of the correlation can vary significantly depending on the job itself.

For jobs requiring complex problem-solving, high levels of abstract reasoning, and quick learning, cognitive ability tests often show a stronger correlation with performance. However, for jobs that are more routine or heavily reliant on specific learned skills, the correlation might be weaker. Context matters! Therefore, one needs to examine the job requirements carefully and consider the test’s validity in predicting success for that specific role.

It’s crucial to consider other factors in conjunction with test results, such as experience, education, personality traits, and motivations. A comprehensive approach that considers multiple data points yields a far more accurate prediction of job performance.

Q 14. What are some alternative assessment methods to cognitive ability tests?

While cognitive ability tests are valuable, alternative assessment methods can offer complementary insights. These alternatives can often better capture aspects of a candidate’s profile that are not easily measured through traditional cognitive tests.

Some alternatives include:

- Situational Judgment Tests (SJTs): Assess how candidates would handle specific work-related scenarios.

- Work Sample Tests: Require candidates to perform tasks similar to those in the target job.

- Behavioral Interviews: Explore past behaviors to predict future performance.

- Personality Assessments: Measure personality traits relevant to job success.

- Assessment Centers: Use multiple methods to evaluate candidates in a simulated work environment.

The choice of alternative method(s) depends on the specific job requirements and the information needed to make informed hiring decisions. A combination of methods is often the most effective approach.

Q 15. How do you ensure the security and confidentiality of assessment data?

Ensuring the security and confidentiality of assessment data is paramount. We employ a multi-layered approach, starting with robust physical security measures for servers and data storage. Access to the data is strictly controlled through role-based access control, meaning only authorized personnel with a legitimate need can access specific data sets. Data encryption, both in transit and at rest, is crucial to protect against unauthorized access. We also adhere to strict data anonymization protocols, removing or masking any personally identifiable information whenever possible. Regular security audits and penetration testing help identify and address vulnerabilities proactively. Furthermore, we are compliant with all relevant data privacy regulations, such as GDPR and CCPA, ensuring transparency and accountability in our data handling practices. Think of it like Fort Knox for sensitive information – multiple layers of protection ensuring the safety of the data.
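
As one small illustration of masking personally identifiable information, the sketch below replaces a candidate identifier with a keyed hash before a score record is shared for analysis. It uses Python’s standard `hmac` and `hashlib` modules; the key, field names, and record are hypothetical, and this is pseudonymization (re-linkable by whoever holds the key), not full anonymization.

```python
import hashlib
import hmac


def pseudonymize(candidate_id: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 hash that stands in for the real candidate ID."""
    return hmac.new(secret_key, candidate_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key; a real key would be randomly generated and kept in a secrets store.
SECRET_KEY = b"replace-with-a-randomly-generated-key"

# Invented record for illustration.
record = {"candidate_id": "jane.doe@example.com", "score": 31}

safe_record = {
    "candidate_ref": pseudonymize(record["candidate_id"], SECRET_KEY),
    "score": record["score"],
}
print(safe_record)
```

Because the hash is keyed, the same candidate always maps to the same reference, so records can still be joined for analysis without exposing the original identifier.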

Q 16. Discuss the legal and regulatory implications of cognitive ability testing.

The legal and regulatory landscape surrounding cognitive ability testing is complex and varies by jurisdiction. Key considerations include the Americans with Disabilities Act (ADA) in the US, which requires reasonable accommodations for individuals with disabilities. We must ensure our tests are fair and unbiased, avoiding discriminatory practices. Data protection laws, like GDPR in Europe and CCPA in California, mandate stringent data handling procedures, including informed consent and data security. Furthermore, issues of test validity and reliability must be addressed to ensure the tests measure what they intend to and produce consistent results. Using tests that lack validity or reliability can lead to legal challenges if used for high-stakes decisions such as hiring. We must also comply with all relevant copyright and intellectual property laws when using commercially available assessments. Failing to consider these legal and ethical considerations can result in costly lawsuits and reputational damage.

Q 17. How do you communicate assessment results to candidates effectively?

Communicating assessment results effectively requires sensitivity and clarity. We avoid overly technical jargon and instead use plain language that is easily understandable. Results are presented in a balanced way, highlighting both strengths and areas for potential improvement, avoiding a purely judgmental approach. We provide context for the scores, explaining what they mean in relation to the overall distribution of scores. For example, we might say “Your score on the verbal reasoning section was in the 75th percentile, indicating strong performance relative to other candidates.” We also emphasize the limitations of the test, acknowledging that it’s just one piece of information in the overall selection process and doesn’t capture the entirety of a candidate’s abilities. Providing personalized feedback, tailored to the individual’s strengths and areas for development, makes the results more meaningful and actionable. A follow-up conversation to answer any questions they may have is also crucial.

Q 18. Describe a situation where you had to troubleshoot a problem with a cognitive ability test.

During a large-scale recruitment drive, we encountered an unexpected technical glitch: the test platform became unresponsive for a significant number of candidates during the peak hours. The problem stemmed from a server overload due to the high volume of simultaneous users. Our immediate response involved deploying a secondary server to distribute the load, and we provided candidates with extended access to the test to compensate for the lost time. In parallel, we investigated the root cause – insufficient server capacity – and implemented long-term solutions including scaling our infrastructure and implementing a more robust queuing system. Thorough post-incident analysis allowed us to refine our systems and avoid similar issues in the future. This experience highlighted the importance of robust technical infrastructure and proactive monitoring in high-stakes assessments. We learned a valuable lesson about the need for redundancy and scalability in handling large-scale testing.

Q 19. What are some common statistical measures used to analyze cognitive ability test data?

Several statistical measures are vital for analyzing cognitive ability test data. These include measures of central tendency (mean, median, mode) to summarize the distribution of scores. Measures of dispersion (standard deviation, variance) show how spread out the scores are. Correlation coefficients (Pearson’s r) measure the strength and direction of the relationship between two variables, for example, the correlation between test scores and job performance. Reliability coefficients (Cronbach’s alpha) assess the internal consistency of the test, indicating how well the items measure the same construct. Validity coefficients demonstrate the extent to which the test accurately predicts job performance or other relevant criteria. Normative data, providing comparisons of an individual’s score to a larger group, are also crucial for interpreting the results meaningfully. Understanding these measures is crucial for drawing accurate and meaningful inferences from the test data.
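
A minimal sketch of a few of these measures, using only the standard library. The test scores and supervisor ratings are invented, and `pearson_r` is a small helper written out here rather than taken from any particular package.

```python
from math import sqrt
from statistics import mean, median, stdev


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    covariation = sum((a - mx) * (b - my) for a, b in zip(x, y))
    spread = sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))
    return covariation / spread


# Invented data: test scores and later supervisor ratings for eight hires.
test_scores = [18, 22, 25, 27, 30, 33, 35, 38]
performance = [2.9, 3.1, 3.0, 3.6, 3.8, 3.7, 4.2, 4.5]

score_mean = mean(test_scores)        # central tendency
score_median = median(test_scores)
score_sd = stdev(test_scores)         # dispersion (sample standard deviation)
validity_r = pearson_r(test_scores, performance)  # criterion-related validity coefficient

print(score_mean, score_median, round(score_sd, 2), round(validity_r, 2))
```

With a sample this small the correlation is very unstable; real validation studies rely on much larger samples.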

Q 20. Explain the concept of differential item functioning (DIF).

Differential Item Functioning (DIF) refers to the phenomenon where an item on a test functions differently for different groups, even when those groups have the same underlying ability. For instance, an item might be easier for men than for women, even when the men and women being compared have the same overall cognitive ability level. DIF analysis is essential for ensuring fair and unbiased assessments. It helps identify items that might be inadvertently disadvantaging certain demographic groups. Common methods for detecting DIF include logistic regression and Mantel-Haenszel procedures. If DIF is detected, the problematic items are usually revised or removed so that test-takers of equal ability have the same chance of answering correctly, whatever their group. Ignoring DIF can lead to inaccurate and discriminatory test results, violating principles of fairness and equity.
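
The core of the Mantel-Haenszel procedure can be sketched as a common odds ratio computed across ability-matched strata (for example, bands of total score). The counts below are invented, the function name is an assumption, and a full analysis would also include a significance test, which is omitted here.

```python
def mantel_haenszel_odds_ratio(strata):
    """Common odds ratio for one item across ability-matched strata.

    Each stratum is a tuple of counts:
    (reference_correct, reference_incorrect, focal_correct, focal_incorrect).
    A value near 1.0 suggests no DIF; values far from 1.0 suggest the item
    behaves differently for the two groups at the same ability level.
    """
    numerator = 0.0
    denominator = 0.0
    for ref_right, ref_wrong, focal_right, focal_wrong in strata:
        n = ref_right + ref_wrong + focal_right + focal_wrong
        numerator += ref_right * focal_wrong / n
        denominator += ref_wrong * focal_right / n
    return numerator / denominator


# Invented counts for two score bands.
item_without_dif = [(20, 20, 10, 10), (30, 10, 15, 5)]
item_with_dif = [(30, 10, 20, 20), (35, 5, 28, 12)]

or_without = mantel_haenszel_odds_ratio(item_without_dif)
or_with = mantel_haenszel_odds_ratio(item_with_dif)
print(or_without, or_with)
```

In the second item the odds of a correct answer are about three times higher for the reference group within each matched band, which is the kind of result that would send an item back for review.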

Q 21. How do you determine the appropriate cut-off scores for a cognitive ability test?

Determining appropriate cut-off scores for a cognitive ability test is a crucial step, requiring careful consideration of several factors. A purely statistical approach, such as selecting a score at a certain percentile (e.g., the 70th percentile), can be useful but needs further justification. We must also consider the job requirements and the relationship between test scores and job performance. A criterion-referenced approach involves setting a cut-off score based on the minimum level of ability necessary for successful job performance. This often involves analyzing data from incumbents who are already performing well in the job. We might use regression analysis or other statistical methods to model the relationship between test scores and performance metrics such as productivity or supervisor ratings. Furthermore, legal and ethical considerations, ensuring fairness and avoiding discrimination, play a vital role in determining the cut-off scores. A transparent and well-justified process is critical, and the chosen cut-off should be reviewed and updated periodically to maintain its validity.
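
One way to connect a cut-off to job performance is to fit a simple regression of performance on test score and solve for the score at which predicted performance reaches the minimum acceptable level. The sketch below uses invented incumbent data; the helper names and the minimum rating of 3.3 are assumptions for illustration.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y predicted from x."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    intercept = my - slope * mx
    return slope, intercept


def cutoff_for(min_performance, slope, intercept):
    """Test score at which predicted performance equals the required minimum."""
    return (min_performance - intercept) / slope


# Invented data: incumbents' test scores and supervisor ratings (1 to 5 scale).
scores = [10, 20, 30, 40, 50]
ratings = [2.1, 2.4, 3.0, 3.6, 3.9]

slope, intercept = fit_line(scores, ratings)
cutoff = cutoff_for(3.3, slope, intercept)   # 3.3 is an assumed minimum acceptable rating
print(round(slope, 3), round(intercept, 2), round(cutoff, 2))
```

A cut-off derived this way is only a starting point; it still has to be checked against fairness considerations and revisited as new performance data arrive.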

Q 22. Discuss the role of technology in administering and scoring cognitive ability tests.

Technology has revolutionized cognitive ability testing, impacting both administration and scoring. Previously, tests were often paper-based, requiring manual scoring, which was time-consuming and prone to error. Now, computer-based testing (CBT) is the norm. This offers numerous advantages.

- Automated Administration: CBT platforms automatically present test items, track response times, and manage test progress, eliminating manual handling. This ensures standardization and reduces administrative burden.

- Objective Scoring: Automated scoring applies the same scoring rules to every response, which removes scorer subjectivity and clerical errors and significantly reduces the time required to get results. Scoring software can also analyze responses, identify patterns, and provide detailed performance reports.

- Adaptive Testing: Technology enables adaptive testing, where the difficulty of subsequent items adjusts based on the test-taker’s performance. This optimizes test length and precision, providing a more accurate assessment in less time.

- Item Banking and Test Generation: Large item banks can be easily managed and utilized to create diverse test forms, minimizing the risk of test leakage and improving test security.

- Data Analysis and Reporting: Powerful software facilitates detailed data analysis, allowing for the identification of trends, strengths and weaknesses, and comparison of results across different groups.

For example, imagine comparing the manual grading of 100 paper-based aptitude tests versus a computerized system instantly providing scores and detailed analytics for the same number of tests. The difference in efficiency is dramatic.
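
The adaptive testing idea from the list above can be illustrated with a deliberately simplified sketch: each new item is the unused one closest in difficulty to the current ability estimate, and the estimate moves up or down by a shrinking step after each answer. Operational adaptive tests typically use item response theory for both steps; the item bank, the step rule, and the simulated test-taker here are all assumptions.

```python
def next_item(ability, difficulties, used):
    """Index of the unused item whose difficulty is closest to the current estimate."""
    candidates = [i for i in range(len(difficulties)) if i not in used]
    return min(candidates, key=lambda i: abs(difficulties[i] - ability))


def run_adaptive_test(difficulties, answer_fn, n_items=5):
    """Administer n_items adaptively; answer_fn(difficulty) returns True if correct."""
    ability, step, used = 0.0, 1.0, []
    for _ in range(n_items):
        item = next_item(ability, difficulties, used)
        used.append(item)
        correct = answer_fn(difficulties[item])
        ability += step if correct else -step   # move toward harder or easier items
        step /= 2                               # smaller adjustments as evidence accumulates
    return ability, used


# Hypothetical item bank, with difficulties on an arbitrary scale.
item_bank = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0]

# Simulated test-taker who answers correctly whenever difficulty is at most 1.2.
ability, administered = run_adaptive_test(item_bank, lambda d: d <= 1.2)
print(ability, administered)
```

After only five items the estimate has settled close to the simulated test-taker’s threshold, which illustrates why adaptive tests can be shorter than fixed forms.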

Q 23. How do you evaluate the effectiveness of a cognitive ability test in a specific context?

Evaluating the effectiveness of a cognitive ability test requires a multifaceted approach, focusing on its validity, reliability, and fairness within a specific context. We need to consider the test’s purpose and the characteristics of the target population.

- Validity: Does the test actually measure what it claims to measure (e.g., does it accurately predict job performance)? This is assessed through various methods like criterion-related validity (correlating test scores with job performance) and content validity (ensuring the test covers all relevant cognitive abilities).

- Reliability: Does the test provide consistent results over time and across its items? This can be assessed through test-retest reliability and internal consistency measures; inter-rater agreement matters only when scoring involves subjective judgment.

- Fairness: Does the test produce unbiased results across different demographic groups? Bias analysis is crucial to ensure equitable outcomes. Unfairness can stem from cultural differences, language barriers, or differential item functioning (DIF).

- Practicality: Is the test cost-effective, easy to administer and score, and appropriate for the available resources?

In a real-world scenario, let’s say a company uses a cognitive ability test to screen candidates for a software engineering position. We would evaluate its validity by checking how well test scores correlate with actual performance metrics, like coding efficiency and problem-solving skills. Reliability would be assessed through test-retest reliability and internal consistency. Finally, fairness analysis would be conducted to eliminate any bias against certain demographic groups. If the test lacks validity or fairness, it needs to be revised or replaced.

Q 24. What are some best practices for developing a cognitive ability test?

Developing a high-quality cognitive ability test is a rigorous process requiring expertise in psychometrics and a deep understanding of cognitive abilities. Here are some best practices:

- Clear Definition of Objectives: Define precisely what cognitive abilities need to be assessed and the specific purpose of the test (e.g., selection, placement, diagnosis).

- Item Development: Create test items that are clear, unambiguous, and relevant to the target population. Employ diverse item types (e.g., verbal reasoning, numerical reasoning, spatial reasoning) to comprehensively assess cognitive abilities.

- Pilot Testing and Refinement: Thorough pilot testing with a representative sample is critical. This allows for identifying problematic items, assessing item difficulty, and refining the test’s overall structure.

- Psychometric Analysis: Conduct rigorous psychometric analyses to evaluate the test’s reliability, validity, and fairness. This includes calculating reliability coefficients, conducting factor analysis, and assessing differential item functioning.

- Norming: Develop norms based on a large, representative sample to allow for meaningful interpretation of scores.

- Accessibility Considerations: Design the test to be accessible to candidates with disabilities, offering appropriate accommodations as needed.

- Legal and Ethical Considerations: Ensure the test complies with all relevant legal and ethical guidelines, including those related to fairness, privacy, and informed consent.
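To make the norming step in the list above concrete, here is a minimal sketch of how a raw score might be interpreted against a norm group. The function name and the tiny norm sample are hypothetical, and the mid-rank percentile definition used here is only one of several conventions.

```python
from statistics import mean, stdev


def norm_score(raw, norm_sample):
    """Return (z_score, percentile_rank) of a raw score against a norm sample."""
    z = (raw - mean(norm_sample)) / stdev(norm_sample)
    below = sum(1 for s in norm_sample if s < raw)
    equal = sum(1 for s in norm_sample if s == raw)
    # Mid-rank convention: scores tied with `raw` count as half below.
    percentile = 100 * (below + 0.5 * equal) / len(norm_sample)
    return z, percentile


# Hypothetical norm group; a real one would be large and representative.
norm_group = [10, 20, 30, 40, 50]
z, pct = norm_score(30, norm_group)
print(f"z = {z:.2f}, percentile rank = {pct:.0f}")
```

A raw score means little by itself; expressing it relative to the norm group is what allows scores to be compared across candidates.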

Consider a test designed to select candidates for a role requiring strong problem-solving skills. The development process would involve designing problem-solving tasks of varying complexity, piloting them with a sample representative of the target population, and then running rigorous statistical analyses to confirm that the test measures what it is intended to measure and avoids bias.
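As a rough illustration of the pilot-analysis stage, the sketch below computes two classical item statistics from pilot responses: item difficulty (the proportion of candidates answering correctly) and a simple upper-lower discrimination index. The function names, the pilot data, and the flagging thresholds are all hypothetical; splitting candidates into halves is a simplification, and real analyses often use other group sizes or item-total correlations instead.

```python
def item_statistics(responses):
    """responses: one row per pilot candidate, 1 = correct, 0 = incorrect.

    Returns one (difficulty, discrimination) tuple per item.
    Needs at least two candidates.
    """
    n = len(responses)
    k = len(responses[0])
    ranked = sorted(responses, key=sum, reverse=True)  # best total score first
    half = n // 2
    upper, lower = ranked[:half], ranked[-half:]
    stats = []
    for i in range(k):
        difficulty = sum(row[i] for row in responses) / n
        discrimination = (sum(r[i] for r in upper) - sum(r[i] for r in lower)) / half
        stats.append((difficulty, discrimination))
    return stats


def flag_items(stats, min_p=0.2, max_p=0.9, min_d=0.2):
    """Indices of items that look too hard, too easy, or weakly discriminating.

    The default thresholds are illustrative assumptions, not fixed standards.
    """
    return [i for i, (p, d) in enumerate(stats)
            if p < min_p or p > max_p or d < min_d]


# Hypothetical pilot data: four candidates, three items.
pilot = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [1, 0, 0]]
stats = item_statistics(pilot)
print(stats)
print("items to review:", flag_items(stats))
```

Here the first item is answered correctly by everyone, so it cannot separate stronger from weaker candidates and is flagged for review.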

Q 25. Describe your experience with using cognitive ability tests in specific industries.

My experience spans various industries, including technology, finance, and healthcare. In the technology sector, I’ve used cognitive ability tests to assess candidates for software engineering, data science, and project management roles. These tests typically focus on logical reasoning, problem-solving, and spatial abilities. The results are crucial in identifying candidates who possess the cognitive skills needed to thrive in fast-paced and complex work environments.

In the finance industry, I’ve worked on assessing candidates for roles such as financial analysts and investment bankers. Tests often cover numerical reasoning, abstract reasoning, and attention to detail, all of which are crucial to performance in these roles. The focus is on identifying candidates with strong analytical skills and the ability to process numerical information quickly and accurately.

Within healthcare, cognitive ability tests are used, though more cautiously and often in combination with other assessment tools, to screen candidates for roles demanding critical thinking, decision-making, and attention to detail. The emphasis is on ensuring candidates have the cognitive capabilities to handle the complexities and pressures associated with patient care and medical decision-making. The context is critical: direct application to patient care demands a more holistic and cautious approach than, say, a screening process for an administrative role.

Q 26. How do you stay updated on the latest research and best practices in cognitive ability assessment?

Staying current in the field of cognitive ability assessment requires continuous learning. I actively engage in several strategies:

- Professional Journals and Publications: I regularly read journals like the Journal of Applied Psychology, Personnel Psychology, and Intelligence to stay abreast of the latest research findings and methodologies.

- Conferences and Workshops: Attending conferences and workshops hosted by organizations such as the Society for Industrial and Organizational Psychology (SIOP) provides opportunities to learn about cutting-edge research and best practices from leading experts in the field.

- Online Resources and Databases: I utilize online databases like PsycINFO and Web of Science to search for relevant articles and research studies.

- Professional Networks: Engaging with colleagues and professionals in my network through online forums and professional groups facilitates discussion and sharing of knowledge and experiences.

- Continuing Education: I participate in continuing education programs to enhance my expertise in psychometrics, assessment design, and related areas.

For example, I recently attended a workshop on the application of machine learning techniques in cognitive ability test development, gaining valuable insights into new approaches to test construction and analysis.

Q 27. What is your experience with different software platforms for administering cognitive ability tests?

My experience encompasses a range of software platforms for administering cognitive ability tests, both proprietary and open-source. I’m familiar with several categories of platform:

- Test platforms with built-in item banks: These provide pre-built tests and scoring functionality, simplifying the administration process. They often offer reporting capabilities and data analytics tools.

- Customizable platforms: These offer greater flexibility in designing and deploying tests, allowing for tailored assessments specific to organizational needs. They typically require greater technical expertise in managing and interpreting data.

- Open-source tools: While demanding more technical skill in setup and data analysis, these platforms offer cost-effective solutions for specific testing requirements.

The choice of platform depends largely on factors like budget, technical expertise, and the specific needs of the assessment. For instance, a large organization with a dedicated psychometrics team might choose a customizable platform to create highly tailored assessments. A smaller organization might opt for a user-friendly, pre-built platform to minimize setup time and costs.

Q 28. How do you ensure the accessibility of cognitive ability tests for candidates with disabilities?

Ensuring accessibility for candidates with disabilities is paramount. This necessitates a multifaceted approach incorporating various strategies:

- Universal Design Principles: Developing tests adhering to universal design principles ensures that the test is usable by people with a wide range of abilities and disabilities from the outset. This may include using clear and simple language, providing sufficient time to complete the test, and avoiding unnecessary visual or auditory distractions.

- Assistive Technologies: Providing access to appropriate assistive technologies, such as screen readers, text-to-speech software, or alternative input devices, is crucial for candidates with visual or motor impairments.

- Alternative Formats: Offering the test in alternative formats, such as Braille or large print, caters to specific visual needs.

- Accommodations: Providing reasonable accommodations, such as extended time, breaks, or alternative testing environments, ensures fairness for candidates with disabilities. The accommodation should be determined on a case-by-case basis, following guidance from accessibility experts.

- Careful Review of Test Items: Conducting thorough reviews of test items to identify and remove potential barriers for candidates with disabilities is essential to keeping the test fair.

For example, if a candidate has a visual impairment, providing the test in Braille format, or using screen-reader compatible software, might be an appropriate accommodation. Similarly, extra time could be allocated for candidates with processing speed challenges.

Key Topics to Learn for Cognitive Ability Assessment Interview

Succeeding in a cognitive ability assessment interview requires a strategic approach. Understanding the underlying principles and practicing your problem-solving skills are crucial. Here’s a breakdown of key areas to focus on:

- Verbal Reasoning: Understanding complex written material, identifying main ideas, drawing inferences, and analyzing arguments. Practical Application: Analyze case studies, synthesize information from multiple sources, and articulate your conclusions clearly and concisely.

- Numerical Reasoning: Interpreting data presented in tables, charts, and graphs; performing calculations and applying mathematical concepts to solve problems. Practical Application: Analyze financial statements, interpret statistical data, and make informed decisions based on quantitative information.

- Abstract Reasoning: Identifying patterns, relationships, and logical sequences in abstract figures and diagrams. Practical Application: Solving complex problems with limited information, identifying trends, and thinking creatively to find solutions.

- Logical Reasoning: Evaluating arguments, identifying fallacies, and drawing valid conclusions based on given premises. Practical Application: Making sound judgments, solving complex puzzles, and constructing well-reasoned arguments to support your decisions.

- Spatial Reasoning: Visualizing and manipulating objects in three-dimensional space; understanding relationships between shapes and forms. Practical Application: Solving spatial problems related to design, architecture, or engineering; improving problem-solving in visually-based scenarios.

Next Steps

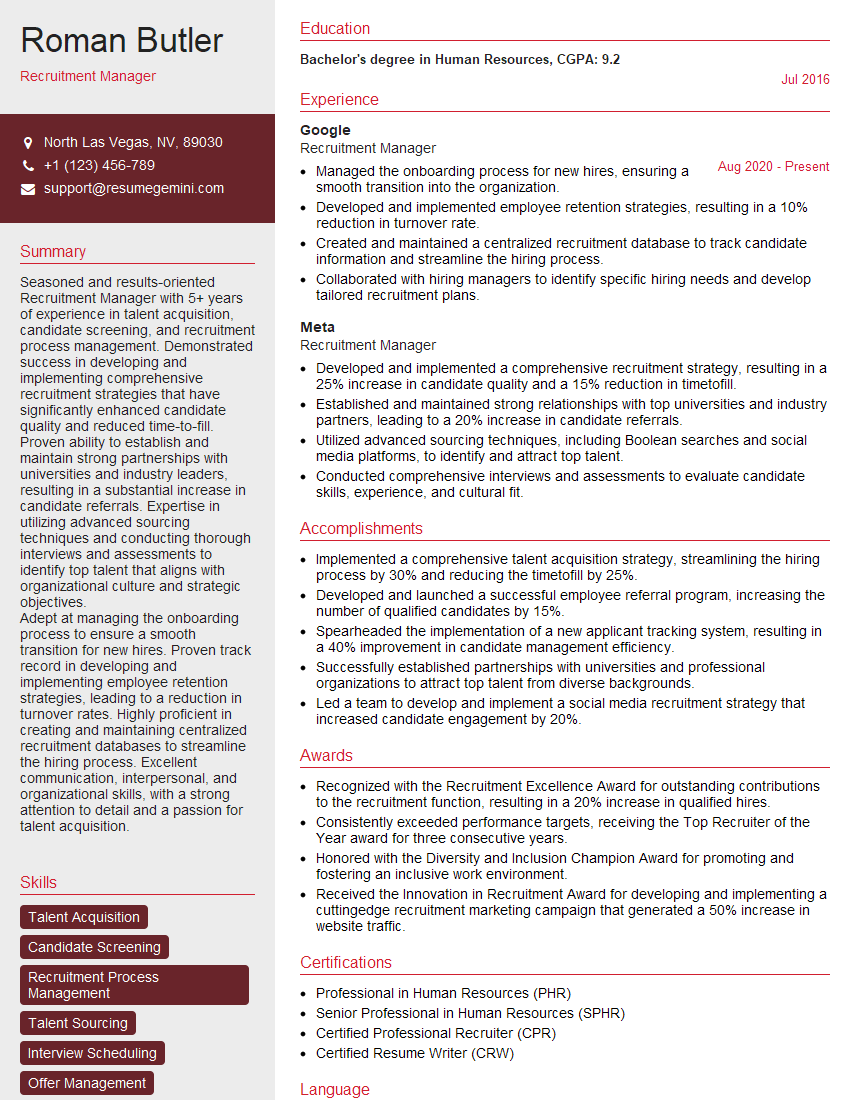

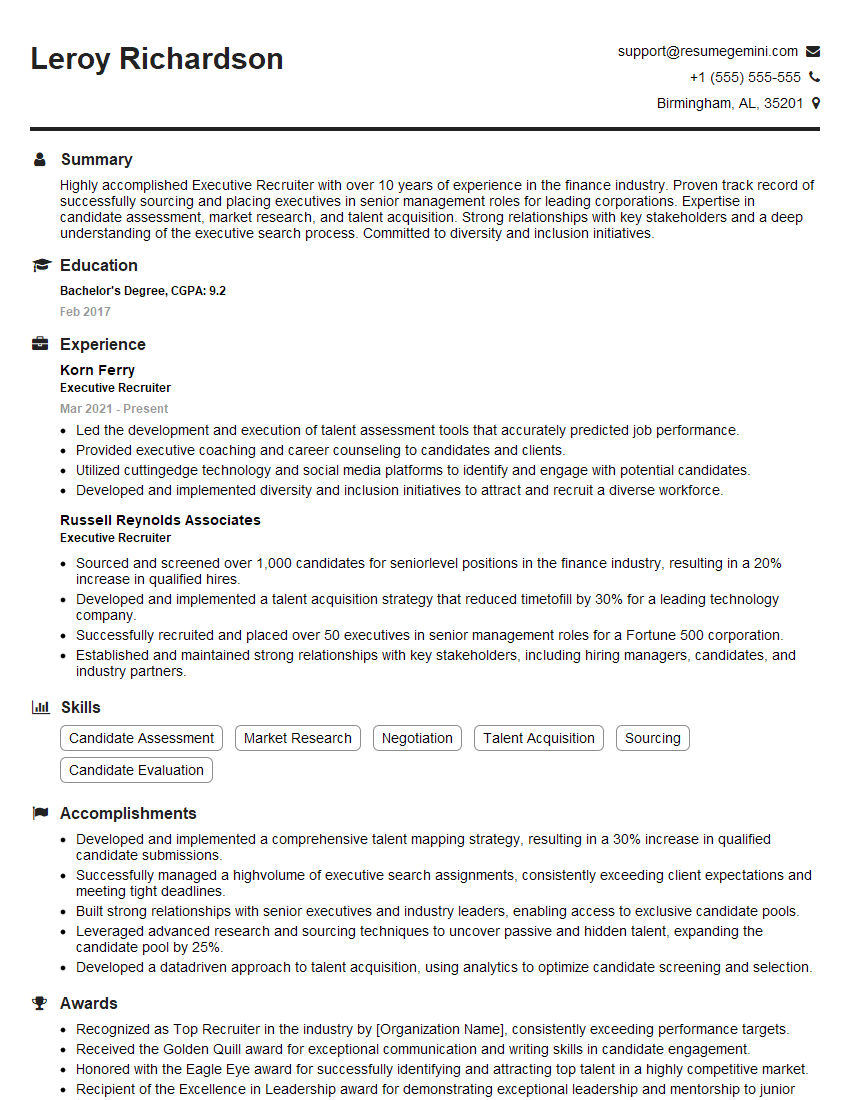

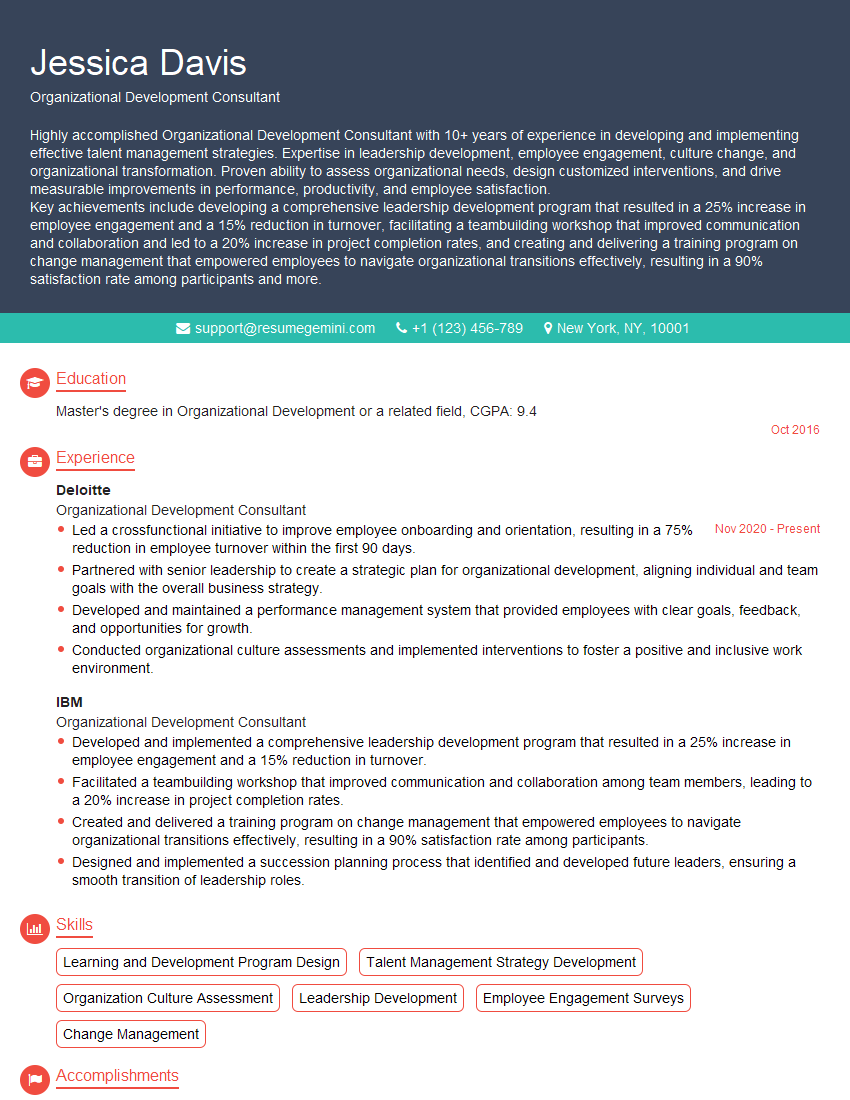

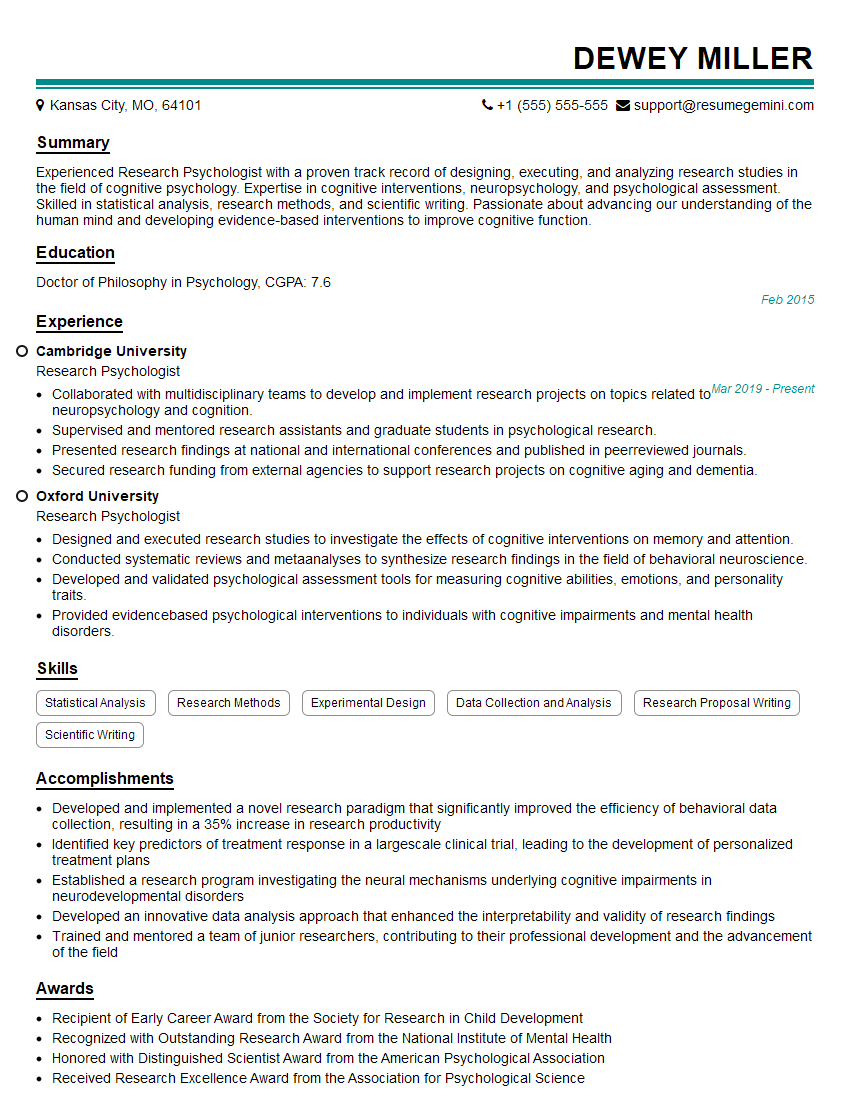

Mastering cognitive ability assessments is vital for career advancement, unlocking opportunities in competitive fields. A strong performance demonstrates crucial problem-solving and analytical skills highly valued by employers. To maximize your chances, focus on creating an ATS-friendly resume that highlights your relevant abilities. ResumeGemini is a trusted resource to help you build a professional and impactful resume that showcases your strengths effectively. Examples of resumes tailored to Cognitive Ability Assessment are provided to guide you. Take advantage of these resources to present yourself as the ideal candidate.
