Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Monitoring and Evaluation of Treatment Progress interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Monitoring and Evaluation of Treatment Progress Interview

Q 1. Describe your experience with different data collection methods in the context of treatment progress monitoring.

My experience encompasses a wide range of data collection methods, tailored to the specific treatment and population. For instance, in a clinical setting, I’ve extensively used structured clinical interviews, employing standardized questionnaires to gather consistent data on patient symptoms, functional abilities, and treatment adherence. These are crucial for quantitative analysis. I’ve also utilized patient self-report measures, such as validated scales assessing depression or anxiety levels, which provide valuable insights into the patient’s subjective experience. Qualitative data is equally important. I’ve conducted focus groups and individual interviews to explore the patient’s lived experience of treatment, gaining richer understanding of their perspectives and challenges. Finally, physiological measures like blood pressure or heart rate variability are used where clinically relevant, adding an objective dimension. The choice of method always depends on the research question, the nature of the treatment, and the resources available.

- Structured Interviews: Provide quantifiable data that is easy to compare across participants.

- Self-Report Measures: Captures patient perspective, but prone to bias.

- Qualitative Interviews: In-depth understanding of lived experiences; time-consuming to analyze.

- Physiological Measures: Objective data, requires specialized equipment and expertise.

Q 2. How do you ensure the accuracy and reliability of data collected for treatment progress evaluation?

Accuracy and reliability are paramount. We start by ensuring the validity and reliability of our chosen measurement instruments. This involves reviewing existing literature on the chosen tools and, when necessary, conducting pilot testing to refine the instruments. To minimize errors, we provide comprehensive training to data collectors, emphasizing standardized procedures and consistent application of protocols. We employ double data entry, in which two people enter the same records independently and the two versions are compared, with any discrepancies resolved against the original data collection sheets. Regular quality checks are incorporated throughout the process – supervisors review collected data for completeness and consistency. Finally, we use statistical methods to assess data quality, like identifying outliers and assessing inter-rater reliability when multiple assessors are involved.

Think of it like building a house: if the foundation (data collection methods) isn’t strong, the entire structure (analysis and conclusions) will be weak. Rigorous quality control procedures at every step ensure the solidity of our findings.
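
To make the inter-rater reliability check concrete, here is a minimal Python sketch of Cohen's kappa, a common agreement statistic for two assessors rating the same cases. The `cohens_kappa` helper and the severity ratings are hypothetical illustrations, not code or data from a real project.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categorical labels to the same cases."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of cases where both raters chose the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement expected from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label for every case
    return (observed - expected) / (1 - expected)

# Hypothetical severity ratings for ten patients from two assessors.
assessor_1 = ["mild", "mild", "moderate", "severe", "mild",
              "moderate", "moderate", "severe", "mild", "moderate"]
assessor_2 = ["mild", "moderate", "moderate", "severe", "mild",
              "moderate", "mild", "severe", "mild", "moderate"]
kappa = cohens_kappa(assessor_1, assessor_2)
print(f"Cohen's kappa: {kappa:.4f}")
```

Kappa corrects raw agreement for the agreement expected by chance, which is why it is usually preferred over a simple percentage when multiple assessors are involved.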

Q 3. Explain your understanding of various statistical methods used in analyzing treatment outcomes.

My understanding of statistical methods is extensive, encompassing both descriptive and inferential statistics. Descriptive statistics, such as means, standard deviations, and frequencies, provide summaries of the data. These give us a picture of the treatment’s overall impact. Inferential statistics, however, help us draw conclusions about the population based on the sample data. We frequently use t-tests to compare the means of two groups (e.g., treatment vs. control), ANOVA for comparing means across multiple groups, and regression analysis to explore the relationships between variables (e.g., treatment intensity and outcome). We might use survival analysis to examine time-to-event outcomes, such as relapse or remission. The choice of method depends on the research question, data type, and assumptions that can be made about the data.

For example, if we were evaluating a new medication for depression, we’d likely use a t-test to compare the mean depression scores of patients receiving the medication versus those in a placebo group. The results would tell us whether the difference in mean scores is statistically significant, that is, unlikely to be due to chance alone.
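
A minimal sketch of that comparison, using only the Python standard library, might look like the following. It computes the Welch (unequal-variance) form of the t statistic and its approximate degrees of freedom; the scores are invented for illustration, and the `welch_t` helper is a hypothetical name. Obtaining a p-value would additionally require the t distribution from a statistical package, which this sketch leaves out.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(group_a, group_b):
    """Return (t statistic, approximate degrees of freedom) for Welch's t-test."""
    na, nb = len(group_a), len(group_b)
    # Squared standard error of each group mean (sample variance / n).
    va, vb = variance(group_a) / na, variance(group_b) / nb
    t = (mean(group_a) - mean(group_b)) / sqrt(va + vb)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return t, df

# Hypothetical end-of-treatment depression scores (higher = more severe).
medication = [10, 12, 9, 11, 8]
placebo = [14, 15, 13, 16, 12]
t_stat, dof = welch_t(medication, placebo)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```

A negative t here means the medication group has the lower mean score. With real data, the sample size and the test's assumptions would need checking before drawing any conclusion.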

Q 4. What are the key performance indicators (KPIs) you would use to track treatment progress?

The KPIs used depend on the specific treatment goals and context, but common examples include:

- Treatment Adherence: Percentage of patients completing the prescribed treatment regimen. This tells us about patient engagement.

- Symptom Reduction: Measured by validated scales, showing the extent of improvement in symptoms.

- Functional Improvement: Assessing improvements in daily living activities, reflecting the treatment’s impact on real-world functioning.

- Quality of Life: Measuring subjective well-being, providing a holistic perspective on treatment effectiveness.

- Cost-Effectiveness: Assessing the cost per unit of improvement, considering resource utilization.

- Relapse Rates: Tracking the proportion of patients experiencing a recurrence of symptoms.

These KPIs, used together, provide a comprehensive picture of treatment progress, allowing for targeted interventions and program adjustments.
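
As a rough illustration, several of these KPIs can be computed directly from patient-level records. The sketch below assumes a hypothetical `PatientRecord` structure and an 80% attendance cut-off for counting a patient as adherent; both are assumptions chosen for the example, not a standard.

```python
from dataclasses import dataclass

@dataclass
class PatientRecord:
    sessions_attended: int
    sessions_prescribed: int
    baseline_score: float   # symptom scale at intake (higher = worse)
    followup_score: float   # same scale at follow-up
    relapsed: bool

def summarize_kpis(records, adherence_threshold=0.8):
    """Compute adherence rate, mean symptom reduction, and relapse rate."""
    n = len(records)
    adherent = sum(
        r.sessions_attended / r.sessions_prescribed >= adherence_threshold
        for r in records
    )
    mean_reduction = sum(r.baseline_score - r.followup_score for r in records) / n
    relapses = sum(r.relapsed for r in records)
    return {
        "adherence_rate": adherent / n,
        "mean_symptom_reduction": mean_reduction,
        "relapse_rate": relapses / n,
    }

# Hypothetical records for four patients.
records = [
    PatientRecord(10, 12, 20, 12, False),
    PatientRecord(6, 12, 18, 15, True),
    PatientRecord(12, 12, 22, 10, False),
    PatientRecord(11, 12, 16, 11, False),
]
kpis = summarize_kpis(records)
print(kpis)
```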

Q 5. How do you identify and address biases in data collection and analysis during treatment evaluation?

Identifying and addressing bias is crucial for ensuring the integrity of our evaluations. We address potential biases through careful study design. For example, randomization in clinical trials helps to reduce selection bias. In data collection, we employ standardized protocols to minimize interviewer bias and utilize blinding when possible to avoid assessment bias. During analysis, we use appropriate statistical techniques to account for confounding variables. For example, regression analysis can control for the effects of extraneous factors. We always document our methods thoroughly to promote transparency and allow for critical review. Recognizing and mitigating bias is an ongoing process, requiring vigilance and careful consideration throughout the entire M&E cycle.

Consider a study on a new therapy. If participants in the treatment group were more motivated than those in the control group, this could lead to a biased outcome, inflating the apparent effectiveness of the therapy. Careful selection methods and statistical controls are necessary to account for such variations.
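
One way to keep group assignment out of anyone's hands is permuted block randomization, sketched below in Python. This is an illustrative example under simple assumptions (two arms, equal allocation, a hypothetical `block_randomize` helper), not a description of any specific trial's procedure.

```python
import random

def block_randomize(participant_ids, block_size=4, seed=None):
    """Assign participants to 'treatment' or 'control' in balanced permuted blocks."""
    if block_size % 2:
        raise ValueError("block_size must be even for two equal arms")
    rng = random.Random(seed)
    assignments = {}
    for start in range(0, len(participant_ids), block_size):
        block = participant_ids[start:start + block_size]
        # Each full block holds equal numbers of both arms in random order.
        arms = ["treatment", "control"] * (block_size // 2)
        rng.shuffle(arms)
        for pid, arm in zip(block, arms):
            assignments[pid] = arm
    return assignments

# Hypothetical participant identifiers.
ids = [f"P{i:02d}" for i in range(1, 9)]
allocation = block_randomize(ids, block_size=4, seed=42)
print(allocation)
```

Because every full block is balanced, the two arms stay the same size as enrollment proceeds, while the order within each block remains unpredictable.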

Q 6. Describe your experience with developing and implementing M&E plans for treatment programs.

I have extensive experience in developing and implementing M&E plans. This involves a collaborative process, starting with defining clear program goals and objectives. We then identify the relevant KPIs and determine appropriate data collection methods. A detailed data collection plan is developed, including timelines, responsible parties, and quality control procedures. Data analysis plans are outlined beforehand, specifying the statistical methods to be used. Finally, a communication plan is established to ensure timely dissemination of results to stakeholders. Regular monitoring and evaluation meetings are conducted to track progress, identify challenges, and make necessary adjustments. This iterative approach ensures the M&E plan remains relevant and adaptable to the evolving needs of the program.

For example, in developing an M&E plan for a community-based mental health program, I would work with stakeholders to define clear goals – such as reducing stigma, improving access to care, and enhancing mental well-being. The plan would then outline how these goals will be measured through quantitative and qualitative data collection, ensuring that data gathered can directly inform program improvements.

Q 7. How do you communicate complex M&E data to stakeholders with varying levels of technical expertise?

Communicating complex M&E data effectively to diverse stakeholders requires a multifaceted approach. We employ a combination of methods, tailoring the communication strategy to the audience’s technical expertise. For stakeholders with limited technical backgrounds, we use clear, concise summaries, supplemented with visual aids such as graphs and charts. We avoid jargon and explain technical terms clearly. For more technically proficient stakeholders, we provide detailed reports containing statistical analyses and methodological information. We also hold regular meetings and workshops to discuss findings and answer questions. In addition, we produce infographics and presentations that convey key findings in an accessible and engaging way. The ultimate goal is to make the information readily understandable, promoting informed decision-making and program improvement.

Imagine presenting to a group of community leaders versus a group of epidemiologists. You’d use vastly different language and levels of detail in each scenario to convey the same results effectively.

Q 8. How do you use M&E data to inform program improvements and adjustments?

Monitoring and Evaluation (M&E) data is the lifeblood of any successful treatment program. We use this data not just to track progress, but to actively improve the program itself. Think of it like navigating with a map – the M&E data provides crucial information about where we are, where we’re going, and whether we need to adjust our course.

Specifically, we analyze trends in key indicators (e.g., patient outcomes, adherence rates, resource utilization). If we see a consistent drop in a particular outcome measure, for instance, we investigate the root cause. This could involve reviewing the treatment protocols, assessing staff training, or exploring potential barriers faced by patients. Based on our findings, we implement targeted changes – perhaps refining a specific technique, improving patient communication strategies, or allocating more resources to a struggling area. This iterative process of data analysis, interpretation, and program adjustment is essential for ensuring program effectiveness and sustainability.

For example, in a substance abuse treatment program, if we observe a high relapse rate among a specific demographic group, we might analyze their unique challenges and tailor interventions to better address their needs. This could include providing culturally sensitive support or addressing specific social determinants of health.
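
The subgroup check described above can be sketched in a few lines of Python. The age bands, the 10-percentage-point margin, and the helper names are all hypothetical choices for illustration. In practice, small subgroups would also need a check on whether the difference is larger than chance variation.

```python
from collections import defaultdict

def relapse_rate_by_group(records):
    """records: iterable of (group_label, relapsed) pairs -> {group: relapse rate}."""
    totals = defaultdict(int)
    relapses = defaultdict(int)
    for group, relapsed in records:
        totals[group] += 1
        relapses[group] += int(relapsed)
    return {g: relapses[g] / totals[g] for g in totals}

def flag_groups(rates, overall_rate, margin=0.10):
    """Return groups whose rate exceeds the overall rate by more than `margin`."""
    return sorted(g for g, r in rates.items() if r - overall_rate > margin)

# Hypothetical follow-up data: (age band, relapsed within six months).
data = [
    ("18-25", True), ("18-25", True), ("18-25", True), ("18-25", False),
    ("26-40", True), ("26-40", False), ("26-40", False), ("26-40", False),
    ("41+", True), ("41+", False), ("41+", False), ("41+", False),
]
rates = relapse_rate_by_group(data)
overall = sum(relapsed for _, relapsed in data) / len(data)
flagged = flag_groups(rates, overall)
print(rates, flagged)
```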

Q 9. What software and tools are you proficient in for data analysis and reporting in the context of treatment progress?

My proficiency in data analysis and reporting spans several software and tools. I’m highly skilled in using statistical packages like R and SPSS for advanced data analysis, including regression modeling and survival analysis, which are crucial for understanding treatment efficacy and predicting outcomes. I’m also proficient in data visualization tools like Tableau and Power BI to create clear and compelling reports that effectively communicate our findings to stakeholders. These tools help to transform raw data into actionable insights.

Furthermore, I’m experienced in using database management systems such as SQL Server and MySQL to manage and query large datasets efficiently. For collaborative data management and sharing, I utilize platforms like REDCap, which offer secure data storage and robust data management functionalities.

Q 10. Describe a situation where you had to troubleshoot data quality issues. What was your approach?

In a recent project evaluating a mental health intervention, we encountered inconsistencies in the diagnosis codes recorded by different clinicians. This compromised the reliability of our analysis on treatment effectiveness across different diagnoses. My approach to troubleshooting involved a multi-step process:

- Data Validation: I first conducted a thorough review of the data, identifying specific instances of discrepancies and inconsistencies.

- Root Cause Analysis: I then met with the clinicians involved to understand the source of the discrepancies. This revealed a lack of standardized training on the diagnostic coding system.

- Data Cleaning and Correction: Where possible, we corrected the inconsistencies using existing information. For cases where clarification was needed, we followed up with patients’ medical records.

- Preventive Measures: Finally, we implemented a comprehensive training program for clinicians on standardized diagnostic coding, ensuring future data collection would adhere to the established guidelines. We also implemented stricter data entry protocols and regular quality checks.

This systematic approach ensured improved data quality and the reliability of our subsequent analysis.
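
The data validation step can be partly automated. The sketch below normalizes each recorded code and checks it against an agreed list, setting aside anything that needs manual review. The allowed codes, record IDs, and `validate_codes` helper are hypothetical placeholders for whatever coding system a program actually uses.

```python
def validate_codes(entries, allowed_codes):
    """Split (record_id, raw_code) entries into cleaned records and ones needing review."""
    cleaned, needs_review = {}, {}
    for record_id, raw_code in entries:
        # Normalize harmless formatting differences before checking.
        code = raw_code.strip().upper()
        if code in allowed_codes:
            cleaned[record_id] = code
        else:
            # Keep the raw value so a reviewer sees exactly what was entered.
            needs_review[record_id] = raw_code
    return cleaned, needs_review

# Hypothetical allowed list and clinician entries.
allowed = {"F32.1", "F41.1"}
entries = [
    ("r1", " f32.1 "),
    ("r2", "F41.1"),
    ("r3", "depression"),
    ("r4", "F32,1"),
]
cleaned, needs_review = validate_codes(entries, allowed)
print(cleaned, needs_review)
```

Only formatting differences are corrected automatically. Ambiguous entries are routed to a person rather than guessed, which mirrors the follow-up against medical records described above.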

Q 11. How do you handle missing data in your analysis of treatment progress?

Missing data is an inevitable challenge in any M&E process. Ignoring it can lead to biased conclusions. My approach to handling missing data involves a combination of strategies, always keeping in mind the nature of the missing data and the potential impact on our analysis.

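Firstly, I investigate the pattern of missingness. Is the data Missing Completely at Random (MCAR), Missing at Random (MAR), or Missing Not at Random (MNAR)? Each calls for a different approach. Under MCAR, complete case analysis gives unbiased estimates, so it can be suitable when little data is missing, although it still costs statistical power. Under MAR, methods that draw on the observed data, such as multiple imputation, are appropriate. Under MNAR, the observed data alone cannot fully correct the bias, so I add sensitivity analyses to test how robust the conclusions are.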

Common methods I use include imputation techniques (e.g., multiple imputation using chained equations in R or SPSS), which replace missing values with plausible estimates based on the observed data. I might also employ weighting methods to adjust for the missing data. The choice of method depends critically on the context and the nature of the data. It’s essential to document all methods used and justify their selection transparently.
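
Before choosing a method, a quick descriptive look at the missingness pattern is useful. The following Python sketch reports the share of missing values per field and compares missing rates across an observed variable. The field names and data are hypothetical. A large difference between groups is evidence against MCAR, though it cannot distinguish MAR from MNAR.

```python
def missingness_report(rows, fields):
    """Share of rows with a missing (None) value for each field."""
    n = len(rows)
    return {f: sum(row.get(f) is None for row in rows) / n for f in fields}

def missing_rate_by_group(rows, field, group_field):
    """Missing rate of `field` within each level of an observed grouping variable."""
    counts = {}
    for row in rows:
        total, missing = counts.get(row[group_field], (0, 0))
        counts[row[group_field]] = (total + 1, missing + (row.get(field) is None))
    return {g: m / t for g, (t, m) in counts.items()}

# Hypothetical dataset: follow-up scores are missing only at site B.
rows = [
    {"site": "A", "baseline": 20, "followup": 12},
    {"site": "A", "baseline": 18, "followup": 15},
    {"site": "A", "baseline": 22, "followup": 10},
    {"site": "A", "baseline": 16, "followup": 11},
    {"site": "B", "baseline": 19, "followup": None},
    {"site": "B", "baseline": 21, "followup": 14},
    {"site": "B", "baseline": 17, "followup": None},
    {"site": "B", "baseline": 23, "followup": 13},
]
report = missingness_report(rows, ["baseline", "followup"])
by_site = missing_rate_by_group(rows, "followup", "site")
print(report, by_site)
```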

Q 12. Explain your understanding of different types of treatment program evaluations (e.g., formative, summative).

Treatment program evaluations fall into different categories based on their purpose and timing. Formative evaluations are conducted during the program’s implementation phase. Their aim is to gather feedback and make necessary adjustments while the program is running. Think of it as a ‘course correction’ during a journey. They involve methods like focus groups, interviews, and observations to identify strengths and weaknesses, and guide improvement efforts.

Summative evaluations, on the other hand, assess the overall effectiveness of a program after its completion. This type of evaluation often involves quantitative methods like comparing outcomes between intervention and control groups, using statistical tests to assess the impact of the treatment. It’s like reviewing the overall success of the journey at the destination.

Other types include process evaluations, which focus on how a program is implemented, and impact evaluations, which assess the long-term effects of a program. Understanding these distinctions is crucial in choosing the appropriate evaluation design and methods for a specific program.

Q 13. How do you ensure ethical considerations are addressed during data collection and analysis in treatment progress evaluation?

Ethical considerations are paramount in M&E. We ensure that all data collection and analysis activities adhere to the highest ethical standards, prioritizing participant privacy and confidentiality. This involves obtaining informed consent from all participants, ensuring anonymity or confidentiality of data, and protecting sensitive information according to relevant regulations (e.g., HIPAA).

Data security is also a critical ethical concern. We use secure data storage and access control mechanisms to prevent unauthorized access and data breaches. All data analysis reports are anonymized to protect participant identity. Furthermore, we adhere to strict data governance policies to maintain the integrity and trustworthiness of the data throughout the entire M&E process.

We also ensure transparency in our methods and reporting. Our findings are presented objectively, without bias, and potential limitations of the analysis are clearly articulated. Ethical review board approvals are obtained whenever necessary, ensuring all our activities are conducted responsibly and ethically.

Q 14. Describe your experience with qualitative data collection and analysis methods in the context of treatment progress.

Qualitative data collection methods, such as interviews and focus groups, play a vital role in enriching our understanding of treatment progress, providing valuable insights into patient experiences and perspectives that quantitative data alone cannot capture. For example, in a study evaluating a new therapy for anxiety, we conducted semi-structured interviews with patients to explore their lived experiences, the challenges they faced, and their perceived benefits from the treatment. These qualitative data offer rich descriptions of the context and process of treatment, adding depth and nuance to the quantitative findings.

Analysis of qualitative data involves thematic analysis, where we systematically identify recurring patterns and themes from the interview transcripts or field notes. This might involve using software like NVivo to assist in coding and organizing the data. We meticulously document our analysis process, ensuring transparency and rigor. These qualitative findings are crucial for understanding the ‘why’ behind the quantitative results. For example, while quantitative data might show improvement in anxiety scores, qualitative data could reveal factors contributing to successful engagement with the therapy or barriers faced by certain subgroups.
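
Once transcripts have been coded, a simple tally shows how widely each theme is shared. The Python sketch below counts the number of participants who raised each theme, assuming the codes have already been assigned by an analyst. The theme labels and participant IDs are hypothetical, and the tally supports interpretive analysis rather than replacing it.

```python
from collections import Counter

def theme_prevalence(coded_transcripts):
    """coded_transcripts: {participant_id: [theme codes]} -> participants per theme."""
    counts = Counter()
    for codes in coded_transcripts.values():
        # Count each theme once per participant, however often it was coded.
        counts.update(set(codes))
    return counts

# Hypothetical coding output from three interviews.
coded = {
    "p1": ["stigma", "support", "stigma"],
    "p2": ["support"],
    "p3": ["side_effects", "support"],
}
prevalence = theme_prevalence(coded)
print(prevalence.most_common())
```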

Q 15. What are the limitations of relying solely on quantitative data in evaluating treatment progress?

Relying solely on quantitative data, like blood pressure readings or weight measurements, in evaluating treatment progress offers a limited perspective. While numbers provide valuable objective information, they don’t capture the complete picture of a patient’s experience and response to treatment.

- Missing the Qualitative Context: Quantitative data lacks the rich detail of a patient’s subjective experience. For instance, a patient’s blood pressure might be within the normal range, but they might still report feeling fatigued or experiencing pain. This qualitative information is crucial for understanding the treatment’s overall effectiveness.

- Ignoring Individual Variations: Quantitative data may mask individual differences in response to treatment. Two patients might have the same quantitative outcomes, yet one might have achieved them through significant effort, while the other did so effortlessly. This nuance is lost without qualitative data.

- Limited Understanding of Barriers and Facilitators: Quantitative data alone doesn’t explain why a patient is progressing (or not progressing) as expected. It doesn’t reveal factors like adherence to medication, socioeconomic challenges, or side effects that influence treatment outcomes.

In essence, quantitative data tells us what is happening, while qualitative data helps us understand why it’s happening, leading to a more comprehensive and nuanced evaluation.

Q 16. How do you integrate quantitative and qualitative data to provide a holistic view of treatment progress?

Integrating quantitative and qualitative data creates a powerful synergy for a holistic understanding of treatment progress. It’s like having a bird’s-eye view (quantitative) and a ground-level view (qualitative) simultaneously.

- Triangulation: We use quantitative data to measure objective outcomes, such as disease markers or functional capacity. Qualitative data, gathered through interviews, focus groups, or observations, provides contextual information, validating, enriching, or challenging the quantitative findings. For example, if quantitative data shows a decrease in blood glucose levels, qualitative data from patient interviews might reveal challenges with adhering to the dietary regimen.

- Mixed Methods Approach: We often employ mixed-methods designs, combining quantitative and qualitative data collection and analysis techniques within a single study or evaluation. This could involve analyzing survey data (quantitative) alongside thematic analysis of patient narratives (qualitative).

- Data Interpretation: The interpretation of data becomes richer. Quantitative data informs the ‘what’ while qualitative data explores the ‘why,’ offering a deeper understanding of underlying factors. For instance, if a program aimed to reduce hospital readmissions shows a reduced rate (quantitative), qualitative interviews with patients can explore whether this is due to improved health literacy, increased access to support services, or some other factor.

This integrated approach leads to more accurate, insightful, and actionable conclusions regarding treatment progress, ultimately facilitating more effective interventions and improved patient care.

Q 17. How do you prioritize competing demands and deadlines when managing multiple M&E projects?

Prioritizing competing demands and deadlines in multiple M&E projects requires a structured approach. Think of it as conducting an orchestra – each instrument (project) needs attention, but the conductor (you) ensures harmony.

- Project Prioritization Matrix: I utilize a matrix that weighs projects based on factors like urgency, impact, and resource requirements. This helps visualize which projects deserve immediate attention and which can be slightly deferred.

- Time Blocking and Scheduling: I allocate specific time blocks for each project, sticking to the schedule as much as possible. This prevents one project from consuming all the time.

- Delegation and Collaboration: Where possible, I delegate tasks to skilled team members, fostering collaboration and efficient workload distribution.

- Regular Monitoring and Adjustment: I track progress regularly, allowing for flexibility to adjust the schedule if unforeseen challenges arise. This might involve renegotiating deadlines or reallocating resources.

Clear communication with stakeholders is paramount. Managing expectations and providing regular updates keeps everyone informed and prevents misunderstandings.
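
A prioritization matrix of this kind can be as simple as a weighted score. In the Python sketch below, the criteria, weights, ratings, and project names are all hypothetical. "Ease" stands in for low resource requirements, so a higher score always means higher priority.

```python
def rank_projects(projects, weights):
    """Rank projects by weighted score; each criterion is rated 1 (low) to 5 (high)."""
    def score(ratings):
        return sum(weights[criterion] * ratings[criterion] for criterion in weights)
    return sorted(projects, key=lambda name: score(projects[name]), reverse=True)

# Hypothetical weights (summing to 1) and project ratings.
weights = {"urgency": 0.5, "impact": 0.4, "ease": 0.1}
projects = {
    "clinic audit": {"urgency": 5, "impact": 3, "ease": 4},
    "annual report": {"urgency": 3, "impact": 5, "ease": 2},
    "pilot survey": {"urgency": 2, "impact": 2, "ease": 5},
}
ranking = rank_projects(projects, weights)
print(ranking)
```

The weights encode a judgment call, so they are worth agreeing with stakeholders up front rather than treating the ranking as objective.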

Q 18. Describe a time you had to adapt your M&E approach due to unexpected challenges.

During a study evaluating a new diabetes management program, we encountered unexpectedly high attrition rates. Initially, our M&E plan focused on quantitative data collection at predetermined intervals. However, the high dropout rate threatened the validity of our findings.

We adapted by incorporating qualitative methods. We conducted semi-structured interviews with participants who dropped out, exploring the reasons for their withdrawal. This revealed key barriers to program participation, such as transportation difficulties and conflicting work schedules.

Based on this feedback, we revised the program to offer flexible scheduling and transportation assistance. This significantly improved retention rates in subsequent cohorts, demonstrating the importance of adapting our M&E approach to unforeseen challenges and using qualitative data to inform program improvements.

Q 19. How do you ensure data security and confidentiality in handling sensitive patient data?

Data security and confidentiality are paramount when handling sensitive patient data. We adhere strictly to ethical guidelines and regulatory requirements.

- Data Anonymization and De-identification: We remove or replace any identifying information from datasets whenever possible. This minimizes the risk of re-identification.

- Secure Storage and Access Control: We use encrypted databases and restricted access protocols. Only authorized personnel with a legitimate need for access can view patient data. This often involves password protection, multi-factor authentication, and role-based access control.

- Data Encryption During Transmission: We ensure data is encrypted during transmission using secure protocols like HTTPS to protect it from unauthorized interception.

- Compliance with Regulations: We strictly adhere to relevant regulations such as HIPAA (in the US) or GDPR (in Europe), ensuring compliance with data privacy standards.

- Regular Security Audits and Training: We conduct regular security audits to identify and address any vulnerabilities. All personnel involved in data handling receive training on data privacy and security best practices.

We treat data security as an ongoing process requiring continuous vigilance and improvement.
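
As one illustration of the de-identification step, the sketch below drops direct identifiers and replaces the patient ID with a keyed hash (HMAC-SHA256) from the Python standard library. The field names, key, and helper functions are hypothetical. This is pseudonymization rather than full anonymization, and on its own it should not be assumed to satisfy HIPAA or GDPR requirements.

```python
import hashlib
import hmac

def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Replace a patient identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()

def deidentify(record: dict, secret_key: bytes,
               direct_identifiers=("name", "phone")) -> dict:
    """Drop direct identifiers and pseudonymize the patient ID."""
    out = {k: v for k, v in record.items() if k not in direct_identifiers}
    out["patient_id"] = pseudonymize(record["patient_id"], secret_key)
    return out

# Hypothetical record; a real key would come from a secrets store, not source code.
key = b"demo-key-not-for-production"
record = {"patient_id": "MRN-0001", "name": "Jane Doe",
          "phone": "555-0100", "symptom_score": 14}
safe_record = deidentify(record, key)
print(safe_record)
```

Using a keyed hash instead of a plain hash means that someone without the key cannot rebuild the mapping by hashing a list of known identifiers, while the same patient still maps to the same pseudonym across datasets.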

Q 20. What are some common challenges faced in monitoring and evaluating treatment progress, and how do you overcome them?

Several challenges commonly arise in monitoring and evaluating treatment progress.

- Data Collection Challenges: Inconsistent data collection methods, missing data, and difficulty in accessing data from multiple sources can hinder effective evaluation. Solution: We establish standardized data collection protocols, utilize data management tools, and build strong relationships with data providers to ensure data quality and accessibility.

- Incomplete Data: This can significantly limit the ability to draw accurate conclusions. Solution: We develop strategies to minimize missing data, such as reminders, follow-up calls, and imputation techniques (where appropriate).

- Bias: Selection bias, observer bias, and recall bias can all affect the accuracy of data. Solution: We use rigorous sampling methods, apply blinding where feasible, and employ multiple data collection methods to mitigate bias.

- Resource Constraints: Limited funding, personnel, and time can hamper effective M&E. Solution: We prioritize tasks, optimize data collection methods, and seek collaborations to maximize resources.

Overcoming these challenges requires careful planning, a robust M&E framework, and a flexible approach to adapt to unforeseen circumstances.

Q 21. How do you define success in monitoring and evaluating treatment progress?

Success in monitoring and evaluating treatment progress isn’t solely defined by achieving pre-set quantitative targets. It’s a multifaceted concept.

- Improved Patient Outcomes: The primary measure of success is demonstrable improvement in patient health, well-being, and quality of life. This may involve reductions in disease symptoms, improved functional capacity, or enhanced patient satisfaction.

- Effective Program Implementation: A successful M&E system also demonstrates that the treatment program is being implemented effectively and efficiently. This includes assessing the reach, coverage, and quality of services delivered.

- Data-Driven Program Improvement: Success lies in the ability to use M&E data to inform continuous quality improvement and program adjustments. This involves identifying areas for strengthening the program, addressing implementation challenges, and optimizing resource allocation.

- Knowledge Generation: A successful M&E system contributes to the broader body of knowledge by generating evidence that informs clinical practice and policy decisions.

Therefore, success is a combination of achieving tangible improvements in patient care, demonstrating program effectiveness, and utilizing data for continuous enhancement and knowledge generation.

Q 22. Describe your experience with presenting M&E findings to a range of stakeholders.

Presenting M&E findings effectively requires tailoring the message to the audience. My experience involves communicating complex data to diverse stakeholders, from community health workers to high-level government officials and funding agencies. I’ve found success by using a combination of approaches. For example, when presenting to community health workers, I use simple visuals like charts and graphs with clear, concise explanations focusing on the impact on their communities. For senior officials, I provide a more detailed analysis incorporating quantitative and qualitative data, highlighting key trends and policy implications. I always start with a clear executive summary, then delve into the specifics based on the audience’s needs and understanding. I also make sure to actively solicit feedback and answer questions thoroughly to ensure understanding and buy-in.

For instance, in a recent project evaluating a maternal health intervention, I presented findings to community leaders using infographics depicting the reduction in maternal mortality rates in their specific areas. This helped them understand the program’s success and build confidence. Conversely, to the funding agency, I provided a detailed report that included statistical significance tests, cost-effectiveness analysis, and recommendations for future programming, along with the community-level summary data to contextualize the project’s success. Ultimately, my goal is always to ensure the information is both accessible and compelling to all stakeholders.

Q 23. How do you ensure the sustainability of your M&E systems?

Ensuring the sustainability of M&E systems is crucial for long-term impact. My approach focuses on building capacity, fostering ownership, and integrating M&E into the organization’s routine operations. This involves several key strategies:

- Capacity Building: Training local staff on data collection, analysis, and reporting techniques is essential. I believe in a hands-on approach, mentoring staff and providing ongoing technical assistance. This ensures that the system isn’t reliant on external expertise.

- Ownership and Integration: M&E should not be an add-on; it needs to be integrated into the program’s planning and implementation. Involving stakeholders from the outset and ensuring they have a sense of ownership over the data ensures sustained effort. This might involve establishing a local M&E committee comprised of program staff and community members.

- Simple and Efficient Systems: Overly complex M&E systems are hard to maintain. I focus on designing systems that are efficient, user-friendly, and utilize readily available technology. This might involve leveraging mobile data collection tools or open-source software.

- Resource Allocation: Adequate budget and human resources must be allocated to M&E activities. This includes setting aside funds for equipment, software, and staff training on an ongoing basis.

- Documentation and Standard Operating Procedures: Creating detailed manuals and SOPs ensures the system can be easily replicated and maintained over time, even with staff turnover.

For example, in a previous role, we successfully transitioned M&E responsibilities to the local government by implementing a comprehensive training program and establishing a sustainable data management system using locally available resources. This ensured the program’s monitoring continued effectively even after our departure.

Q 24. Explain your understanding of the importance of regular data quality checks and audits.

Regular data quality checks and audits are paramount for the credibility and usefulness of M&E findings. Poor data quality can lead to flawed conclusions and ineffective interventions. My approach involves a multi-faceted strategy:

- Data Cleaning and Validation: This involves checking for inconsistencies, errors, and outliers in the collected data. I utilize both manual checks and automated data validation tools to identify and address these issues.

- Regular Audits: Periodic audits of the entire data collection process, including data entry, analysis, and reporting, are essential. This helps identify systematic errors and areas for improvement.

- Source Data Verification: Regular spot checks of the source data against the entered data help ensure accuracy and identify discrepancies early. This can be as simple as comparing a subset of paper-based forms to the entered digital records.

- Use of Data Quality Indicators: Tracking key data quality indicators, such as completeness, consistency, and timeliness of data collection, helps to monitor trends and identify potential problems. This allows for early intervention and prevents minor problems from escalating.

Imagine a scenario where incorrect data on patient attendance in a health program leads to an underestimate of the program’s impact. Regular data quality checks would flag the discrepancy early, supporting an accurate evaluation and potentially preventing resource misallocation. I use a range of quality control measures to keep M&E data reliable and accurate, so that decisions rest on sound evidence.
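The checks described in this answer can be sketched in a few lines of Python. This is a minimal illustration, not a production tool: the record layout, the field names (`patient_id`, `visit_date`, `entered_on`, `attended`), and the 7-day timeliness threshold are all assumptions made for the example.

```python
from datetime import date

# Hypothetical attendance records; field names are illustrative assumptions.
records = [
    {"patient_id": "P001", "visit_date": date(2024, 3, 4), "entered_on": date(2024, 3, 5), "attended": True},
    {"patient_id": "P002", "visit_date": date(2024, 3, 4), "entered_on": date(2024, 3, 20), "attended": None},
    {"patient_id": "P003", "visit_date": date(2024, 3, 6), "entered_on": date(2024, 3, 5), "attended": False},
    {"patient_id": "P004", "visit_date": date(2024, 3, 7), "entered_on": date(2024, 3, 8), "attended": True},
]

REQUIRED_FIELDS = ("patient_id", "visit_date", "entered_on", "attended")
MAX_ENTRY_DELAY_DAYS = 7  # assumed timeliness threshold


def is_complete(record):
    """A record is complete when every required field has a value."""
    return all(record.get(field) is not None for field in REQUIRED_FIELDS)


def is_consistent(record):
    """Data cannot be entered before the visit it describes."""
    visit, entered = record.get("visit_date"), record.get("entered_on")
    if visit is None or entered is None:
        return False
    return entered >= visit


def is_timely(record):
    """Entry is timely if made within the allowed delay after the visit."""
    visit, entered = record.get("visit_date"), record.get("entered_on")
    if visit is None or entered is None:
        return False
    return 0 <= (entered - visit).days <= MAX_ENTRY_DELAY_DAYS


def quality_indicators(rows):
    """Return the share of rows passing each check, as fractions of 1."""
    total = len(rows)
    return {
        "completeness": sum(is_complete(r) for r in rows) / total,
        "consistency": sum(is_consistent(r) for r in rows) / total,
        "timeliness": sum(is_timely(r) for r in rows) / total,
    }


def verify_against_source(entered, source, key="patient_id", field="attended"):
    """Spot check: list keys whose entered value differs from the source form."""
    source_by_key = {row[key]: row[field] for row in source}
    return [
        row[key]
        for row in entered
        if row[key] in source_by_key and row[field] != source_by_key[row[key]]
    ]


# A sampled subset of (hypothetical) paper forms to compare against.
paper_forms = [
    {"patient_id": "P001", "attended": True},
    {"patient_id": "P004", "attended": False},
]

print(quality_indicators(records))
print(verify_against_source(records, paper_forms))
```

Tracking these fractions over successive reporting periods is one simple way to spot a declining trend before it affects the evaluation itself.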

Q 25. How do you stay current with the latest developments and best practices in Monitoring and Evaluation?

Staying abreast of the latest developments in M&E is critical for maintaining professional competence. I actively engage in several strategies:

- Professional Development: I regularly attend conferences, workshops, and training courses focused on M&E methodologies and best practices. This ensures I am up-to-date with emerging technologies and approaches.

- Professional Networks: I actively participate in professional networks and online communities related to M&E. This allows me to share knowledge, learn from others’ experiences, and stay informed about the latest trends.

- Literature Reviews: I regularly read academic journals and research publications on M&E. This keeps me abreast of new research findings and methodological advancements.

- Online Courses and Resources: I utilize online courses and resources, such as those offered by organizations like WHO and UNICEF, to enhance my knowledge and skills.

For instance, I recently completed a course on using machine learning techniques in data analysis for M&E, significantly enhancing my ability to analyze large and complex datasets. Continuous learning is essential in this dynamic field, ensuring that I can apply the most effective and current methodologies in my work.

Q 26. What are your salary expectations for this role?

My salary expectations are commensurate with my experience and the requirements of this role. I am open to discussing this further and aligning my expectations with the offered compensation package.

Q 27. Do you have any questions for me?

I am eager to learn more about the specific challenges and opportunities associated with this M&E role within your organization. Could you elaborate on the team structure and the technologies currently used for data management? I am also curious to know about the long-term goals and strategic direction for M&E within your organization.

Key Topics to Learn for Monitoring and Evaluation of Treatment Progress Interview

- Data Collection Methods: Understanding various methods like chart reviews, patient interviews, and surveys; their strengths, weaknesses, and appropriate applications in treatment progress monitoring.

- Indicator Selection & Development: Defining meaningful indicators to track treatment effectiveness, aligning them with program goals, and ensuring data quality and reliability.

- Qualitative Data Analysis: Interpreting patient narratives and feedback to gain a deeper understanding of treatment experiences and identify areas for improvement beyond quantitative metrics.

- Quantitative Data Analysis: Utilizing statistical methods to analyze numerical data, identify trends, and assess the impact of interventions on treatment outcomes. This includes understanding descriptive and inferential statistics.

- Reporting & Communication: Effectively presenting findings to diverse audiences (clinicians, administrators, stakeholders) using clear visualizations and concise language.

- Ethical Considerations: Maintaining patient confidentiality, ensuring data security, and adhering to ethical guidelines throughout the monitoring and evaluation process.

- Program Improvement Strategies: Using M&E data to inform program adjustments, identify areas needing improvement, and enhance treatment effectiveness.

- Performance Measurement Frameworks: Familiarity with different frameworks (e.g., logic models, results frameworks) used to structure M&E activities.

- Technological Tools for M&E: Proficiency in using software and platforms for data management, analysis, and reporting.

- Problem-Solving & Critical Thinking: Analyzing data discrepancies, identifying potential biases, and developing solutions based on evidence-based findings.
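As a small illustration of the descriptive and inferential statistics mentioned under Quantitative Data Analysis, the sketch below compares baseline and follow-up scores for the same patients. The scores are invented for the example, and the paired t statistic is just one possible choice of test; interpreting it still requires a t distribution table or a statistics package.

```python
import math
import statistics

# Hypothetical symptom scores (lower is better) for the same six patients.
baseline = [18, 22, 15, 20, 17, 21]
follow_up = [14, 19, 15, 16, 13, 18]


def paired_summary(before, after):
    """Summarize within-patient change and compute a paired t statistic."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")

    # Descriptive step: per-patient change and its average.
    diffs = [a - b for b, a in zip(before, after)]
    mean_change = statistics.mean(diffs)
    sd_change = statistics.stdev(diffs)  # sample standard deviation
    if sd_change == 0:
        raise ValueError("all changes are identical; t statistic is undefined")

    # Inferential step: t = mean difference / standard error of the mean.
    t_stat = mean_change / (sd_change / math.sqrt(len(diffs)))
    return {
        "mean_change": mean_change,
        "sd_change": sd_change,
        "t_statistic": t_stat,
        "degrees_of_freedom": len(diffs) - 1,
    }


result = paired_summary(baseline, follow_up)
print(result)
```

A negative mean change here indicates lower (better) scores at follow-up; whether the change is statistically significant depends on comparing the t statistic against the critical value for the given degrees of freedom.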

Next Steps

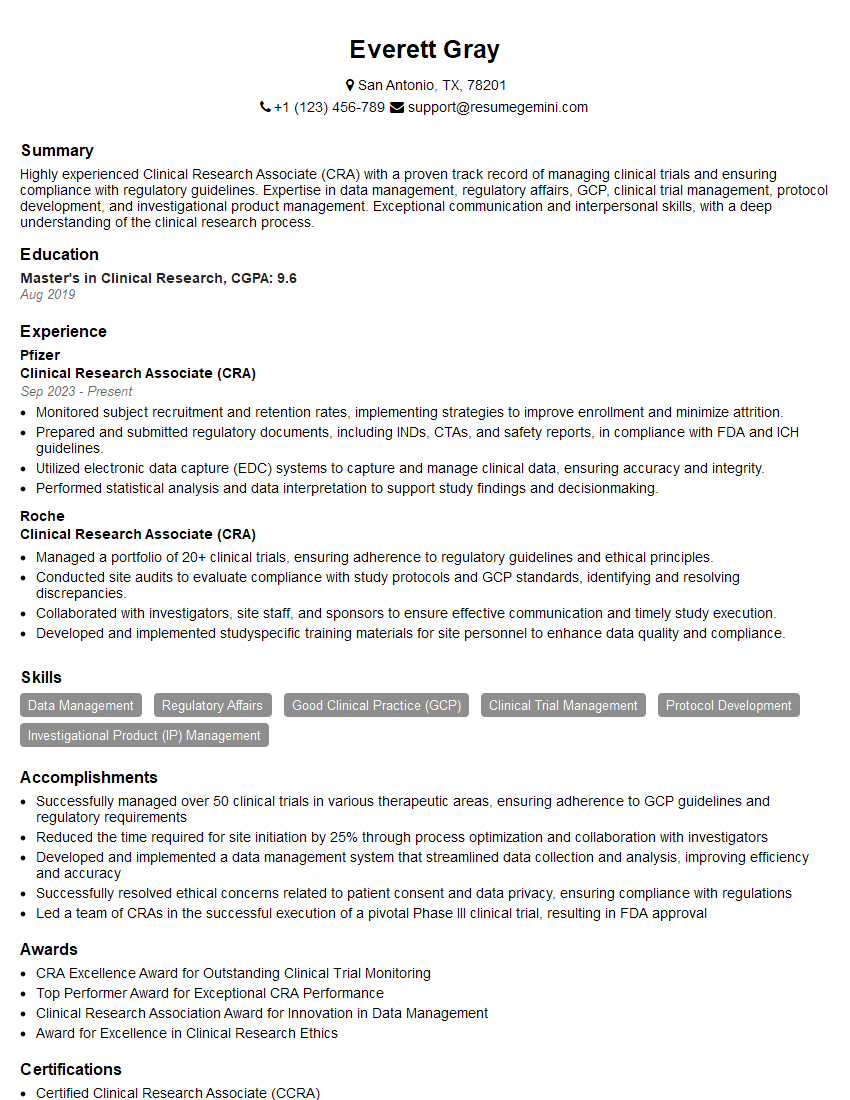

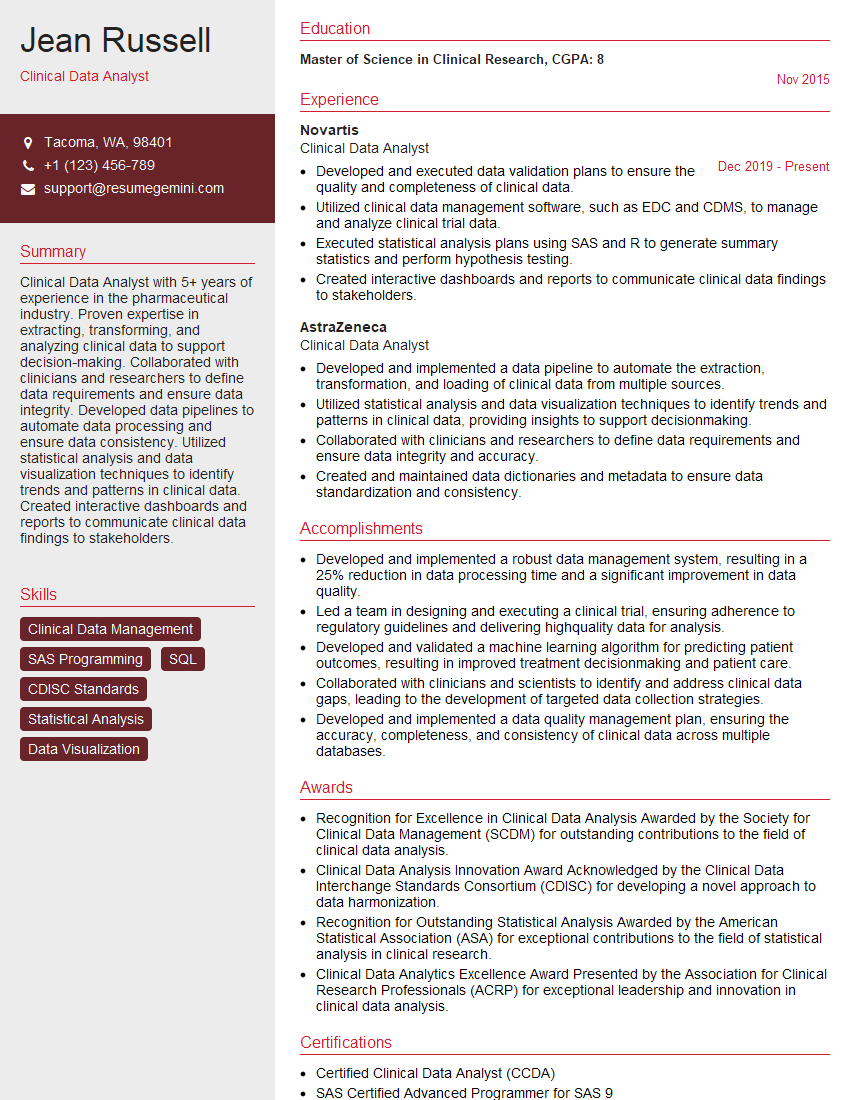

Mastering Monitoring and Evaluation of Treatment Progress is crucial for career advancement in healthcare and related fields. It demonstrates a commitment to evidence-based practice and continuous improvement, opening doors to leadership roles and impactful contributions. To maximize your job prospects, craft an ATS-friendly resume. ResumeGemini is a trusted resource for building a professional resume that highlights your skills and experience effectively. Examples of resumes tailored to Monitoring and Evaluation of Treatment Progress are available, giving you a head start in showcasing your qualifications to potential employers.
