Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Electroencephalography (EEG) Analysis interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Electroencephalography (EEG) Analysis Interview

Q 1. Explain the different types of EEG waves and their associated brain states.

Electroencephalography (EEG) detects electrical activity in the brain using electrodes placed on the scalp. Different brain states are characterized by distinct EEG wave patterns, varying in frequency and amplitude. These waves are broadly classified into:

- Delta waves (0.5-4 Hz): These slow waves are associated with deep sleep, and also seen in infants and in certain pathological states like brain damage. Think of them as the brain’s ‘rest mode’ – very deep and slow.

- Theta waves (4-8 Hz): Often seen during drowsiness, meditation, and in some stages of sleep. They’re associated with creative thinking and emotional processing – a slightly more active, yet still relaxed state.

- Alpha waves (8-13 Hz): Present when awake but relaxed, with eyes closed. They’re often reduced or disappear during mental activity and are considered a marker of a calm and focused state. Imagine the alpha waves as the brain’s ‘calm focus’ mode.

- Beta waves (13-30 Hz): Dominant during wakefulness, alertness, and active cognitive engagement. They’re high-frequency waves reflecting busy thinking and active problem-solving. This is like the brain in its ‘active working’ mode.

- Gamma waves (30-100 Hz): Associated with higher cognitive functions like attention, perception, and consciousness. These are the fastest waves, representing complex cognitive processes and binding different parts of the brain together – ‘supercharged cognitive processing’.

The relative proportions and presence of these waves help clinicians diagnose neurological conditions like epilepsy, sleep disorders, and brain injuries.
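As a quick illustration of the band boundaries listed above, here is a minimal sketch that maps a frequency to its band name. The dictionary `EEG_BANDS` and the helper `band_of` are hypothetical names introduced for this example, and treating the upper edge of each band as exclusive is an assumption, since conventions for the exact cut-offs vary.

```python
# Band edges in Hz, taken from the list above; the upper edge is treated as exclusive.
EEG_BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 100.0),
}


def band_of(freq_hz):
    """Return the name of the band containing freq_hz, or None if outside all bands."""
    for name, (low, high) in EEG_BANDS.items():
        if low <= freq_hz < high:
            return name
    return None


print(band_of(10.0))  # alpha
print(band_of(2.0))   # delta
```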

Q 2. Describe the process of EEG electrode placement according to the 10-20 system.

The 10-20 system is a standardized method for placing EEG electrodes on the scalp. It ensures consistent electrode placement across individuals, improving the reproducibility and comparability of EEG recordings. The name refers to the spacing: adjacent electrodes sit at 10% or 20% of the total distance between anatomical landmarks, namely the nasion (bridge of the nose), inion (prominent point at the back of the head), and pre-auricular points (points in front of the ears).

The scalp is divided into regions named after the underlying cortical lobes (frontal, temporal, parietal, occipital), plus a central region. Electrodes are named according to their location (e.g., Fp1, F3, C4, Pz, O2). The letter indicates the region, and the number indicates the hemisphere (odd numbers for the left, even for the right).

For example, Fp1 is on the frontal pole (Fp) of the left hemisphere (1), and O2 is over the occipital lobe (O) on the right hemisphere (2). A lowercase z in place of a number (as in Fz, Cz, Pz) indicates the midline. Precise placement is crucial for accurate interpretation. The system also includes a reference electrode (often earlobes or mastoids) and a ground electrode (usually a forehead location).

Clinicians follow carefully defined guidelines to ensure consistent electrode placement. Minor variations may exist between different EEG systems or clinics but the underlying principles remain consistent.
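The naming convention can be made concrete with a small sketch that decodes a label into its region and side. The function `describe_electrode` and the `REGIONS` table are hypothetical helpers for illustration; they cover only the basic letter prefixes mentioned above, not earlobe references or the extended 10-10 labels.

```python
import re

# Letter prefixes of the basic 10-20 system mapped to scalp regions.
REGIONS = {
    "Fp": "frontal pole",
    "F": "frontal",
    "C": "central",
    "T": "temporal",
    "P": "parietal",
    "O": "occipital",
}


def describe_electrode(label):
    """Split a 10-20 label such as 'Fp1' or 'Pz' into (region, side)."""
    match = re.fullmatch(r"(Fp|F|C|T|P|O)(z|\d+)", label)
    if match is None:
        raise ValueError(f"not a basic 10-20 label: {label!r}")
    prefix, suffix = match.groups()
    if suffix == "z":
        side = "midline"
    elif int(suffix) % 2 == 1:
        side = "left"   # odd numbers are on the left hemisphere
    else:
        side = "right"  # even numbers are on the right hemisphere
    return REGIONS[prefix], side


print(describe_electrode("Fp1"))  # ('frontal pole', 'left')
print(describe_electrode("O2"))   # ('occipital', 'right')
```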

Q 3. What are common artifacts encountered in EEG recordings, and how are they mitigated?

EEG recordings are susceptible to various artifacts, which are unwanted signals that can obscure the underlying brain activity. Common artifacts include:

- Electrode artifact: Poor electrode contact, movement, or sweat can introduce noise. Good electrode placement and impedance checks can minimize this.

- Muscle artifact (EMG): Facial and jaw muscle activity produces electrical signals that contaminate EEG. Relaxation techniques and minimizing head movement can be helpful. Sometimes filtering techniques are used to remove some of this artifact.

- Eye movement artifact (EOG): Eye blinks and movements generate substantial electrical activity. This can be mitigated by EOG recordings (which monitor eye movements directly), and sophisticated software can often subtract the eye movement activity from the EEG recording.

- Cardiac artifact (ECG): The heartbeat generates electrical signals that can appear in the EEG. Because the source is physiological, grounding and shielding do little to reduce it; recording a simultaneous ECG channel makes the artifact easy to identify, and software techniques such as ICA can help remove it.

- Line noise: Electrical interference from power lines (50 or 60 Hz) appears as rhythmic oscillations in the EEG. Shielding and digital filtering are effective countermeasures.

Artifact mitigation strategies often involve a combination of careful recording techniques, appropriate filtering methods (both hardware and software), and advanced signal processing techniques. It’s important to note that some artifacts can be difficult to remove completely without affecting the genuine EEG data, which is why careful technique is paramount.
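As one example of software filtering, line noise can be attenuated with a narrow notch filter. The sketch below uses SciPy's `iirnotch` and `filtfilt` on a synthetic signal; the sampling rate, amplitudes, and quality factor `Q` are assumptions chosen for illustration, not recommended settings.

```python
import numpy as np
from scipy import signal

fs = 250.0          # sampling rate in Hz (assumed)
line_freq = 50.0    # use 60.0 where the mains frequency is 60 Hz
t = np.arange(0, 4.0, 1.0 / fs)

# Synthetic recording: 10 Hz "alpha" rhythm plus strong 50 Hz interference (volts).
alpha = 10e-6 * np.sin(2 * np.pi * 10.0 * t)
noisy = alpha + 20e-6 * np.sin(2 * np.pi * line_freq * t)

# Second-order IIR notch; Q sets how narrow the rejected band is.
b, a = signal.iirnotch(w0=line_freq, Q=30.0, fs=fs)
# filtfilt runs the filter forward and backward, so no phase shift is introduced.
cleaned = signal.filtfilt(b, a, noisy)


def rms(x):
    return float(np.sqrt(np.mean(x ** 2)))


# Compare the residual error before and after filtering, ignoring 1 s at each edge.
core = slice(int(fs), -int(fs))
rms_before = rms(noisy[core] - alpha[core])
rms_after = rms(cleaned[core] - alpha[core])
print(rms_before, rms_after)
```

A narrow notch leaves the 10 Hz rhythm essentially untouched, which is the reason for preferring it over a broad low-pass filter when only line noise is the problem.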

Q 4. Explain the difference between amplitude and frequency in EEG signals.

In EEG signals, amplitude and frequency are fundamental properties that reflect different aspects of brain activity.

- Amplitude: Refers to the height of the EEG wave, measured in microvolts (µV). A larger amplitude indicates a stronger electrical activity in the underlying neural population. Think of it as the ‘strength’ of the brain’s signal.

- Frequency: Refers to the number of cycles per second, measured in Hertz (Hz). Higher frequencies indicate faster neuronal activity, while lower frequencies signify slower neuronal activity. This is the ‘speed’ of the brain’s signal.

For instance, delta waves have low frequency (0.5-4 Hz) and relatively high amplitude, while beta waves have high frequency (13-30 Hz) and lower amplitude. Analyzing both amplitude and frequency is crucial for characterizing brain states and identifying abnormalities.
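To make the two properties concrete, the following sketch measures both on a synthetic oscillation. The signal parameters (10 Hz, 25 µV, 256 Hz sampling) are assumptions chosen so the numbers come out cleanly; real EEG is far less regular.

```python
import numpy as np

fs = 256.0                       # sampling rate in Hz (assumed)
t = np.arange(0, 2.0, 1.0 / fs)  # 2 s of data gives 0.5 Hz frequency resolution
# Synthetic alpha-like oscillation: 10 Hz, 25 µV peak amplitude.
x_uv = 25.0 * np.sin(2 * np.pi * 10.0 * t)

# Amplitude: half of the peak-to-peak range, in microvolts.
amplitude_uv = (x_uv.max() - x_uv.min()) / 2.0

# Frequency: location of the largest peak in the magnitude spectrum.
spectrum = np.abs(np.fft.rfft(x_uv))
freqs = np.fft.rfftfreq(x_uv.size, d=1.0 / fs)
dominant_hz = freqs[np.argmax(spectrum)]

print(amplitude_uv, dominant_hz)
```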

Q 5. How is source localization performed in EEG analysis?

Source localization in EEG aims to determine the location of the brain activity that generated the measured scalp potentials. It’s an inverse problem, meaning that many different brain sources could potentially produce the same scalp EEG. This makes it a challenging task.

Several methods are used for source localization, including:

- Dipole modeling: This method assumes that the brain activity is generated by a small number of dipoles (representing localized current sources). The location and orientation of these dipoles are estimated by fitting the model to the scalp EEG data.

- Distributed source modeling: This approach assumes that brain activity is distributed across a large number of sources, and estimates the activity at each location using sophisticated mathematical techniques (e.g., minimum norm estimation, beamforming).

These techniques require sophisticated software and considerable computational power. The accuracy of source localization is affected by several factors, including the number of electrodes, the spatial resolution of the EEG, and the presence of artifacts. While it is a powerful tool, it’s important to acknowledge inherent limitations and potential uncertainties in the results.
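The distributed approach can be sketched as a regularized linear inverse. The example below is a toy minimum norm estimate with a random matrix standing in for the lead field; in practice the lead field comes from a head model, and the sizes and regularization value here are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
n_sensors, n_sources = 64, 200

# Hypothetical lead field: column j is the scalp pattern produced by source j.
# A real one comes from a head model; random values are used only for illustration.
leadfield = rng.standard_normal((n_sensors, n_sources))

# Simulate one active source and the scalp potentials it produces.
true_sources = np.zeros(n_sources)
true_sources[123] = 1.0
scalp = leadfield @ true_sources

# Tikhonov-regularized minimum norm estimate:
#   s_hat = L^T (L L^T + lambda * I)^-1 x
gram = leadfield @ leadfield.T
lam = 1e-3 * np.trace(gram) / n_sensors
estimate = leadfield.T @ np.linalg.solve(gram + lam * np.eye(n_sensors), scalp)

strongest = int(np.argmax(np.abs(estimate)))
print(strongest)
```

Even in this idealized setting the estimate is smeared across many sources rather than concentrated on one, which reflects the non-uniqueness of the inverse problem described above.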

Q 6. Describe different types of evoked potentials and their clinical applications.

Evoked potentials (EPs) are electrical responses in the brain elicited by specific stimuli, such as visual, auditory, or somatosensory inputs. These potentials are time-locked to the stimulus, allowing for analysis of the brain’s response to the specific input.

- Visual evoked potentials (VEPs): Elicited by visual stimuli (e.g., flashes of light, checkerboard patterns). Used to assess visual pathways and diagnose conditions like multiple sclerosis or optic neuritis.

- Auditory evoked potentials (AEPs): Elicited by auditory stimuli (e.g., clicks, tones). Used to evaluate auditory pathways and detect hearing loss or brainstem disorders.

- Somatosensory evoked potentials (SEPs): Elicited by electrical stimulation of peripheral nerves. Used to evaluate sensory pathways and diagnose neurological conditions affecting the spinal cord or brain.

The clinical application of EPs varies based on the specific type and the suspected pathology. For example, delayed latencies in AEPs can indicate problems in the brainstem, while abnormalities in SEPs may point to spinal cord lesions.

Q 7. Explain the concept of event-related potentials (ERPs).

Event-related potentials (ERPs) are voltage fluctuations in the EEG that are time-locked to the presentation of a specific stimulus or event. They represent the brain’s electrophysiological response to that event. Unlike evoked potentials, ERPs often include a range of components, including both early sensory responses and later cognitive responses.

ERPs are extracted from EEG data by averaging numerous trials of EEG recordings time-locked to the events of interest. This procedure reduces noise and enhances the signal-to-noise ratio, revealing the event-related brain activity. The averaged waveform shows different peaks and troughs, representing distinct neural processes that have been triggered by the event.

Common ERP components include the P300 (related to decision-making and attention) and the N400 (related to semantic processing). ERPs provide insights into cognitive processes like attention, memory, language processing, and decision-making, and are valuable tools in cognitive neuroscience and clinical neuropsychology.
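The averaging procedure described above can be sketched with simulated trials. The waveform shape, noise level, and trial count are assumptions for illustration; the point is that noise in the average shrinks roughly with the square root of the number of trials.

```python
import numpy as np

rng = np.random.default_rng(42)
fs = 250                                   # sampling rate in Hz (assumed)
times = np.arange(-0.2, 0.8, 1.0 / fs)     # epoch from -200 ms to 800 ms
n_trials = 200

# Hypothetical "true" response: a positive deflection peaking near 300 ms (µV).
erp_true = 5.0 * np.exp(-((times - 0.3) ** 2) / (2 * 0.05 ** 2))

# Each trial is the same response buried in much larger background activity.
trials = erp_true + 20.0 * rng.standard_normal((n_trials, times.size))

# Averaging across trials keeps the time-locked part and shrinks the noise
# by roughly 1 / sqrt(n_trials).
erp_avg = trials.mean(axis=0)

single_err = float(np.std(trials[0] - erp_true))
avg_err = float(np.std(erp_avg - erp_true))
print(single_err, avg_err)
```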

Q 8. What is independent component analysis (ICA), and what is its role in artifact rejection?

Independent Component Analysis (ICA) is a powerful signal processing technique used to separate a multivariate signal into additive subcomponents. In EEG, this means decomposing the scalp EEG signal into a set of independent sources. Imagine a cocktail party: you hear a mix of overlapping voices (the EEG signal). ICA acts like a sophisticated sound engineer, separating each individual voice (the independent components) so you can hear them clearly.

Its role in artifact rejection is crucial. Many artifacts in EEG, such as eye blinks, muscle movements, and line noise, appear as distinct sources mixed with the brain’s electrical activity. ICA identifies these artifacts as separate components, allowing us to remove or attenuate them without significantly affecting the underlying brain activity. This results in a cleaner EEG signal better suited for analysis. For example, a component showing a characteristic pattern of an eye blink can be easily identified and removed, leaving behind a signal dominated by neuronal activity.

Practically, ICA is implemented using algorithms that search for statistically independent components. This usually involves minimizing the higher-order statistical dependencies between the components. The resulting components are then visually inspected and those identified as artifacts are rejected.
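Once components have been estimated, rejecting one amounts to zeroing it and projecting back to channel space. The sketch below shows only that back-projection step; the `mixing` matrix and `sources` are random stand-ins for what an ICA algorithm would return, not an ICA implementation.

```python
import numpy as np

rng = np.random.default_rng(1)
n_channels, n_samples = 4, 1000

# Stand-ins for what an ICA would return (assumed, not estimated here):
# `sources` holds component time courses, `mixing` maps components to channels.
sources = rng.standard_normal((n_channels, n_samples))
sources[0] *= 10.0                 # pretend component 0 is a large eye-blink source
mixing = rng.standard_normal((n_channels, n_channels))

eeg = mixing @ sources             # recorded data: channels x samples

# Unmix, zero the artifact component, and project back to channel space.
unmixing = np.linalg.inv(mixing)
components = unmixing @ eeg
components[0] = 0.0
cleaned = mixing @ components

# The cleaned data should equal the mixture of the remaining components only.
expected = mixing[:, 1:] @ sources[1:]
print(np.allclose(cleaned, expected))
```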

Q 9. How do you interpret EEG data to diagnose epilepsy?

Diagnosing epilepsy using EEG involves identifying characteristic patterns of abnormal brain activity called epileptiform discharges. These can include spikes (sharp, transient voltage deflections), sharp waves (similar to spikes but slightly less sharp), and spike-and-wave complexes (a spike followed by a slower wave). The location, frequency, and morphology of these discharges provide crucial information about the type and location of the epileptic focus within the brain.

Interpretation requires a thorough understanding of EEG morphology, frequency bands, and spatial distribution. We look for patterns that are consistent with different epilepsy syndromes. For example, absence seizures often present with characteristic 3 Hz spike-and-wave discharges generalized across the scalp. Focal seizures, originating from a specific brain area, may show localized epileptiform discharges. The process also involves considering the patient’s clinical history and neurological examination findings.

It’s important to note that a single EEG recording might not always capture an epileptic event. Prolonged monitoring, such as video-EEG, may be necessary for definitive diagnosis, particularly in patients with infrequent seizures. The interpretation is always done by a neurologist or epileptologist experienced in reading and understanding EEG data.

Q 10. Explain the use of EEG in sleep studies.

EEG plays a vital role in sleep studies, helping to classify different sleep stages and identify sleep disorders. During sleep, brain activity changes significantly, showing characteristic patterns for each stage (N1, N2, N3, REM). The EEG recording captures these changes in brainwave frequency and amplitude.

For example, stage N1 sleep is characterized by theta waves (4-7 Hz), while stage N2 shows sleep spindles (12-14 Hz) and K-complexes (sharp negative waves). Stage N3 (slow-wave sleep) is dominated by delta waves (0.5-4 Hz), reflecting deep sleep. REM (rapid eye movement) sleep is characterized by a desynchronized EEG pattern similar to wakefulness but with rapid eye movements detected by electrooculography (EOG).

EEG in sleep studies helps diagnose disorders like insomnia, sleep apnea, narcolepsy, and parasomnias. For instance, frequent awakenings during the night might indicate insomnia, while excessive daytime sleepiness can be associated with narcolepsy. Analyzing the timing and frequency of sleep stages helps assess sleep architecture and identify irregularities that may indicate underlying sleep disorders. The combined analysis of EEG, EOG, and EMG (electromyography) provides a comprehensive picture of sleep stages and related events.

Q 11. Describe the process of performing a quantitative EEG (qEEG) analysis.

Quantitative EEG (qEEG) analysis goes beyond visual inspection of the raw EEG signal. It involves using mathematical techniques to extract quantitative measures that reflect different aspects of brain activity. This can include things like spectral analysis (power spectral density), coherence, and brain connectivity.

The process typically involves:

- Data acquisition: Recording a high-quality EEG signal using appropriate electrodes and equipment.

- Preprocessing: This crucial step involves cleaning the data, removing artifacts (like eye blinks and muscle movements), and referencing the EEG signal.

- Feature extraction: This involves calculating various quantitative measures from the cleaned EEG data such as absolute and relative power in different frequency bands (delta, theta, alpha, beta, gamma), coherence between different brain regions, and other connectivity measures.

- Statistical analysis: Statistical tests are then used to compare qEEG measures across different groups of individuals (e.g., patients vs. controls) or to identify changes over time within an individual. Statistical analysis helps determine whether observed differences are meaningful or simply due to random variation.

- Interpretation: Finally, the results are interpreted in the context of the clinical presentation and other neuropsychological data. qEEG results alone are not usually sufficient for a definitive diagnosis; they are used in conjunction with other clinical information.

For example, increased theta power in the frontal lobes might be associated with attention-deficit hyperactivity disorder (ADHD), while decreased alpha power might be seen in anxiety disorders. However, these are general trends and not definitive diagnostic markers.
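The feature extraction step can be sketched as absolute and relative band power from a Welch power spectral density. The signal below is synthetic, and the window length and band edges are assumptions; real pipelines would run this on cleaned, multi-channel data.

```python
import numpy as np
from scipy import signal

fs = 250.0                                   # sampling rate in Hz (assumed)
rng = np.random.default_rng(7)
t = np.arange(0, 30.0, 1.0 / fs)
# Synthetic single-channel EEG in µV: dominant 10 Hz rhythm plus broadband noise.
eeg = 20.0 * np.sin(2 * np.pi * 10.0 * t) + 5.0 * rng.standard_normal(t.size)

# Welch PSD with 2 s windows (0.5 Hz frequency resolution).
freqs, psd = signal.welch(eeg, fs=fs, nperseg=int(2 * fs))

bands = {"delta": (0.5, 4), "theta": (4, 8), "alpha": (8, 13),
         "beta": (13, 30), "gamma": (30, 100)}


def band_power(low, high):
    """Absolute power: sum the PSD over [low, high) times the bin width."""
    mask = (freqs >= low) & (freqs < high)
    return float(np.sum(psd[mask]) * (freqs[1] - freqs[0]))


absolute = {name: band_power(lo, hi) for name, (lo, hi) in bands.items()}
total = sum(absolute.values())
relative = {name: power / total for name, power in absolute.items()}
print(relative)
```

Relative power is each band's share of the total across the listed bands, so the values sum to 1 and can be compared across recordings with different overall amplitude.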

Q 12. What are the limitations of EEG as a neuroimaging technique?

While EEG is a valuable neuroimaging technique, it has limitations. Its main drawback is its poor spatial resolution. The EEG signal is a summation of electrical activity from many different cortical sources, making it difficult to pinpoint the exact location of the source of activity. Think of it like listening to a choir: you hear the combined sound but can’t easily distinguish each individual voice.

Other limitations include:

- Susceptibility to artifacts: EEG is sensitive to various artifacts, requiring careful artifact rejection techniques.

- Limited ability to image deep brain structures: EEG primarily reflects cortical activity; signals from deep structures are strongly attenuated before they reach the scalp.

- Sensitivity to head movements: Head movements during recording can introduce artifacts and affect the quality of the signal.

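- Computational cost of some analyses: EEG itself has excellent temporal resolution in the millisecond range, but some advanced analysis techniques are computationally demanding and slow to run. This is a processing limitation rather than a limit on the temporal resolution of the recording.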

Despite these limitations, EEG remains a widely used technique due to its high temporal resolution, non-invasiveness, and relative cost-effectiveness compared to other neuroimaging modalities.

Q 13. How does EEG differ from other neuroimaging modalities such as MEG or fMRI?

EEG, MEG (magnetoencephalography), and fMRI (functional magnetic resonance imaging) all measure brain activity but use different principles and offer complementary information.

- EEG measures electrical activity using electrodes placed on the scalp. It has excellent temporal resolution (milliseconds) but relatively poor spatial resolution.

- MEG measures magnetic fields produced by electrical activity in the brain. It offers better spatial resolution than EEG but is more expensive and less widely available. It also has excellent temporal resolution.

- fMRI measures changes in blood flow related to neuronal activity. It has good spatial resolution but poor temporal resolution (seconds).

In essence, EEG excels in capturing the timing of brain activity, MEG provides a balance between spatial and temporal resolution, and fMRI excels at localizing activity but lacks the speed to capture rapidly changing brain dynamics. Each modality is best suited for specific research questions or clinical applications. For example, EEG is ideal for studying rapid brain processes like evoked potentials, MEG can be used to map cortical sources of activity, and fMRI is often employed to study brain regions involved in cognitive tasks. Researchers often combine these techniques to overcome individual limitations and gain a more comprehensive understanding of brain function.

Q 14. Explain the concept of coherence and its use in EEG analysis.

Coherence in EEG analysis quantifies the linear statistical relationship between two EEG signals recorded from different brain regions. At a given frequency, it measures how consistent the phase difference and amplitude ratio between the two signals are, on a scale from 0 to 1. High coherence indicates that the signals are strongly linearly related at that frequency, suggesting functional connectivity between the brain areas, although volume conduction from a shared source can also inflate it. Imagine two instruments playing in an orchestra: high coherence would mean they’re playing in sync, indicating coordination.

Coherence is often calculated across different frequency bands (delta, theta, alpha, beta, gamma) to determine the extent of functional connectivity within specific frequency ranges. For example, high alpha coherence between the frontal and parietal lobes might be associated with attentional processes. Changes in coherence patterns across different frequency bands can be indicative of various neurological conditions or cognitive states.

Practically, coherence analysis helps to investigate functional networks in the brain and how they change in different states (e.g., rest, task performance, disease). However, it’s crucial to note that coherence primarily reflects linear relationships, and non-linear relationships may exist that are not captured by this measure. Advancements in connectivity analysis are exploring more complex measures beyond simple coherence to capture these non-linear interactions.
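A minimal sketch of a coherence estimate, using SciPy's `coherence` (magnitude-squared coherence via Welch's method) on two synthetic channels. The channel names, shared 10 Hz rhythm, and noise levels are assumptions for illustration.

```python
import numpy as np
from scipy import signal

fs = 250.0                                   # sampling rate in Hz (assumed)
rng = np.random.default_rng(3)
t = np.arange(0, 60.0, 1.0 / fs)

# Two hypothetical channels share a 10 Hz rhythm but have independent noise.
shared = np.sin(2 * np.pi * 10.0 * t)
ch_frontal = shared + rng.standard_normal(t.size)
ch_parietal = 0.8 * shared + rng.standard_normal(t.size)

# Magnitude-squared coherence, estimated from 2 s segments.
freqs, coh = signal.coherence(ch_frontal, ch_parietal, fs=fs, nperseg=int(2 * fs))

coh_10hz = float(coh[np.argmin(np.abs(freqs - 10.0))])
coh_30hz = float(coh[np.argmin(np.abs(freqs - 30.0))])
print(coh_10hz, coh_30hz)
```

Coherence is high only at the shared frequency; at 30 Hz, where the channels contain nothing but independent noise, it stays close to zero.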

Q 15. Describe different methods for EEG signal filtering.

EEG signal filtering is crucial for removing unwanted artifacts and noise, allowing us to focus on the brain’s electrical activity. We employ various methods, categorized broadly as:

- Linear Filtering: This is the most common approach, using techniques like:

  - FIR (Finite Impulse Response) filters: These are non-recursive filters, meaning the output depends only on the current and past input samples. They are stable and easy to design, often used for removing powerline noise (e.g., 50 Hz or 60 Hz) or high-frequency noise. I frequently use them in my preprocessing pipelines.

  - IIR (Infinite Impulse Response) filters: These are recursive filters, where the output depends on both current and past input and output samples. They are more computationally efficient than FIR filters but can be unstable if not designed carefully. Butterworth, Chebyshev, and Bessel filters are common examples. I would choose an IIR filter for its efficiency if dealing with a large dataset where computational time is crucial.

- Nonlinear Filtering: These methods are useful for dealing with impulsive noise or non-stationary artifacts. Examples include:

  - Median filtering: Replaces each sample with the median of its neighboring samples, effective in removing spike noise.

  - Wavelet denoising: Decomposes the signal into wavelet coefficients at different scales (roughly, frequency bands) and shrinks or removes the coefficients attributed to noise. This is especially useful for preserving important signal features while removing noise.

The choice of filter depends heavily on the type of noise present and the desired characteristics of the filtered signal. For instance, in a study involving sleep EEG, I would utilize a combination of a notch filter to remove powerline interference, a high-pass filter to eliminate slow drifts, and potentially a wavelet denoising step to address muscle artifacts.
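As one concrete instance of the IIR option, here is a sketch of a zero-phase Butterworth band-pass built with SciPy. The 1-40 Hz pass band, filter order, and synthetic signal are assumptions for illustration; appropriate cut-offs depend on the study.

```python
import numpy as np
from scipy import signal

fs = 250.0                                   # sampling rate in Hz (assumed)
t = np.arange(0, 10.0, 1.0 / fs)
# Synthetic signal: 10 Hz rhythm, a slow 0.1 Hz drift, and 90 Hz noise.
rhythm = np.sin(2 * np.pi * 10.0 * t)
raw = rhythm + 5.0 * np.sin(2 * np.pi * 0.1 * t) + 0.5 * np.sin(2 * np.pi * 90.0 * t)

# 4th-order Butterworth band-pass (1-40 Hz), expressed as second-order sections
# for numerical stability.
sos = signal.butter(4, [1.0, 40.0], btype="bandpass", fs=fs, output="sos")
# Forward-backward filtering cancels the phase distortion of the IIR filter.
filtered = signal.sosfiltfilt(sos, raw)

# Residual error against the clean rhythm, ignoring 2 s at each edge.
core = slice(int(2 * fs), -int(2 * fs))
rms_error = float(np.sqrt(np.mean((filtered[core] - rhythm[core]) ** 2)))
print(rms_error)
```

Second-order sections and forward-backward filtering are used here because they address the two usual concerns with IIR filters: numerical instability and nonlinear phase.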

Q 16. What software packages are you familiar with for EEG data analysis?

I have extensive experience with several leading EEG data analysis software packages. My proficiency includes:

- EEGLAB: A widely used MATLAB-based toolbox offering a comprehensive suite of tools for preprocessing, analysis, and visualization. I’ve used EEGLAB extensively for tasks ranging from independent component analysis (ICA) to time-frequency analysis. It is my go-to for advanced research applications.

- BrainVision Analyzer: A powerful and user-friendly software package known for its robust artifact rejection capabilities and intuitive interface. I frequently leverage BrainVision for its efficient handling of large datasets and its excellent visualization tools, particularly during clinical studies.

- Neurophysiological Biomarker Toolbox (NBT): I’m familiar with NBT for its capability in processing and analyzing EEG data using advanced signal processing techniques and machine learning algorithms. It’s particularly helpful for exploring complex relationships within EEG data.

- Python libraries (MNE-Python, SciPy): I also have significant programming experience in Python, utilizing libraries like MNE-Python and SciPy for advanced analysis and customized data processing pipelines. The flexibility of Python allows for tailor-made solutions to specific research needs.

My choice of software depends on the specific project demands and the type of analysis required. For example, if speed and ease of use are paramount, I might choose BrainVision Analyzer. For complex analysis involving custom algorithms, I would likely use Python with its extensive libraries.

Q 17. Describe your experience with EEG data preprocessing techniques.

EEG data preprocessing is a critical step, ensuring data quality and minimizing artifacts. My experience encompasses various techniques, including:

- Artifact Rejection: Identifying and removing or correcting artifacts like eye blinks, eye movements, muscle activity, and line noise. This often involves visual inspection, Independent Component Analysis (ICA), and automated artifact detection algorithms. For example, I’ve successfully applied ICA to separate ocular artifacts from brain activity using EEGLAB.

- Filtering: Applying various filters, as described previously, to remove unwanted frequency components.

- Rereferencing: Changing the reference electrode to improve signal quality and reduce artifacts. Common techniques include average re-referencing and Laplacian re-referencing. Choosing the correct reference is crucial for accurate interpretation of the data.

- Line Noise Correction: Removing power line interference (50/60 Hz) using notch filters. It is often the first step of my preprocessing pipeline.

- Segmentation: Dividing the continuous EEG data into epochs based on experimental events or time windows, preparing the data for further analysis.

I’ve successfully applied these techniques in numerous projects, consistently improving data quality and enabling reliable insights from my analysis. A particularly challenging project involved cleaning noisy EEG data from infants, requiring a careful combination of artifact rejection and filtering techniques to preserve the delicate brain signals.
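Two of the steps above, average re-referencing and segmentation, can be sketched in plain NumPy. The data are random, and the event positions and epoch window are hypothetical values chosen for illustration; toolboxes such as EEGLAB or MNE-Python provide their own functions for these steps.

```python
import numpy as np

rng = np.random.default_rng(5)
fs = 250                                   # sampling rate in Hz (assumed)
n_channels, n_samples = 8, 10 * fs
data = rng.standard_normal((n_channels, n_samples))   # channels x samples

# Average re-referencing: subtract the mean across channels at every sample.
rereferenced = data - data.mean(axis=0, keepdims=True)

# Segmentation: cut fixed windows around hypothetical event onsets (sample indices).
event_samples = np.array([500, 1000, 1500, 2000])
pre, post = fs // 5, 4 * fs // 5           # 200 ms before to 800 ms after each event
epochs = np.stack([rereferenced[:, s - pre:s + post] for s in event_samples])

# Baseline correction: remove each epoch's mean over the pre-event interval.
epochs = epochs - epochs[:, :, :pre].mean(axis=2, keepdims=True)
print(epochs.shape)  # (4, 8, 250)
```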

Q 18. How do you handle missing data in EEG recordings?

Missing data in EEG recordings is a common issue. Handling it requires careful consideration to avoid introducing bias. My approach is multifaceted:

- Visual Inspection: Initially, I visually inspect the data to identify the nature and extent of the missing data. This helps determine the best approach.

- Interpolation: If the missing data is sparse and randomly distributed, I often use interpolation techniques, such as linear, spline, or cubic interpolation, to estimate the missing values. The choice depends on the characteristics of the data and the potential for distorting the signal. I’m cautious about overusing interpolation, as it can introduce artifacts.

- Advanced Imputation: For more complex missing data patterns, more sophisticated imputation techniques, like k-nearest neighbors or Expectation-Maximization (EM) algorithms, can be employed. These methods take into account the relationships between different channels and time points.

- Exclusion: If a large portion of data is missing or the missingness pattern suggests systematic issues, I might decide to exclude the affected segments or channels entirely from the analysis, ensuring transparency in my methods.

The decision on how to handle missing data is crucial. I always document my chosen methodology and consider the potential impact of missing data on my results, reporting these considerations in any publication.
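The interpolate-or-exclude decision can be sketched as a gap-length rule: short gaps are filled linearly and long ones are left missing. The helper `interpolate_gaps` and its `max_gap` parameter are hypothetical names for this example, and linear interpolation is only one of the options mentioned above.

```python
import numpy as np


def interpolate_gaps(x, max_gap):
    """Linearly fill NaN runs of at most max_gap samples; longer runs stay NaN."""
    x = np.asarray(x, dtype=float)
    filled = x.copy()
    missing = np.isnan(x)
    if missing.all():
        return filled
    idx = np.arange(x.size)
    # np.interp estimates every missing sample from its valid neighbours.
    filled[missing] = np.interp(idx[missing], idx[~missing], x[~missing])
    # Undo the fill for gaps that are too long to trust.
    start = None
    for i in range(x.size + 1):
        if i < x.size and missing[i]:
            if start is None:
                start = i
        elif start is not None:
            if i - start > max_gap:
                filled[start:i] = np.nan
            start = None
    return filled


signal_uv = [1.0, np.nan, 3.0, np.nan, np.nan, np.nan, 7.0]
result = interpolate_gaps(signal_uv, max_gap=2)
print(result)
```

With `max_gap=2`, the single missing sample is filled with 2.0, while the three-sample gap stays NaN so that it can be excluded and reported.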

Q 19. Explain the concept of time-frequency analysis in EEG.

Time-frequency analysis allows us to explore how the power of different frequency bands in the EEG signal changes over time. Instead of looking at the signal just in the time domain or frequency domain separately, we view the dynamics of frequency components across time. This is crucial because brain activity isn’t static; different brain rhythms (e.g., alpha, beta, theta, delta) fluctuate constantly.

Common time-frequency methods include:

- Short-Time Fourier Transform (STFT): This method divides the EEG signal into short, overlapping time windows and applies the Fourier Transform to each window. This provides a time-frequency representation showing how frequencies change over time. It’s a relatively simple method but has limitations in resolution (compromise between time and frequency precision).

- Wavelet Transform: Rather than a fixed window, this uses short windows at high frequencies and longer windows at low frequencies, which often suits non-stationary signals like EEG better than the STFT. Different wavelet functions are suited to capturing different types of transient events in the signal.

- Hilbert-Huang Transform (HHT): This method decomposes the EEG signal into Intrinsic Mode Functions (IMFs), which represent the underlying oscillations. It’s particularly effective for analyzing complex, nonlinear signals but is computationally more demanding.

Time-frequency analysis is invaluable for understanding event-related dynamics in the brain, such as changes in brain oscillations during cognitive tasks or in response to stimuli. For instance, I might use it to investigate changes in alpha power during a visual attention task, revealing how brain activity shifts with cognitive demands.
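The STFT approach can be sketched with SciPy's `spectrogram` on a synthetic signal whose frequency changes halfway through. The window length and overlap are assumptions for illustration, and they control the time-frequency trade-off mentioned above.

```python
import numpy as np
from scipy import signal

fs = 250.0                                   # sampling rate in Hz (assumed)
t = np.arange(0, 10.0, 1.0 / fs)
# Synthetic non-stationary signal: 10 Hz for the first 5 s, then 20 Hz.
x = np.where(t < 5.0, np.sin(2 * np.pi * 10.0 * t), np.sin(2 * np.pi * 20.0 * t))

# STFT-based spectrogram: 1 s windows with 50% overlap (1 Hz frequency resolution).
# Longer windows sharpen frequency resolution but blur timing, and vice versa.
freqs, seg_times, power = signal.spectrogram(x, fs=fs, nperseg=250, noverlap=125)

# Dominant frequency in each time window.
dominant = freqs[np.argmax(power, axis=0)]
early = dominant[seg_times < 4.0]
late = dominant[seg_times > 6.0]
print(early, late)
```

A plain Fourier transform of the whole signal would show both 10 Hz and 20 Hz peaks but not when each occurred; the windowed version recovers the timing.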

Q 20. Describe your experience with analyzing EEG data from different populations (e.g., children, adults).

My experience extends to analyzing EEG data from diverse populations, acknowledging the importance of adapting methodologies to developmental and individual differences.

- Children: Analyzing EEG data from children requires careful consideration of developmental changes in brain activity and the higher susceptibility to artifacts (movement). I utilize specialized techniques for artifact reduction and employ age-appropriate experimental designs. For example, I’ve used shorter recording sessions and incorporated engaging stimuli to obtain high-quality data from young children.

- Adults: Adults present a different set of challenges, potentially including individual variability in brain activity and the presence of clinical conditions affecting brain function. Careful attention to clinical history and individual characteristics is essential for appropriate data interpretation.

- Specific Populations: I have experience adapting EEG analysis techniques for specific populations with neurological or psychiatric disorders, including patients with epilepsy, ADHD, or Alzheimer’s disease. This includes adapting my analysis methods to consider known clinical features of these conditions and accounting for their influence on the EEG signal.

In each instance, a thorough understanding of the specific population’s characteristics is crucial for designing appropriate analysis strategies and interpreting results accurately. I prioritize ethical considerations and data privacy throughout my work.

Q 21. How would you approach troubleshooting a problem with an EEG recording?

Troubleshooting EEG recordings requires a systematic approach. My strategy involves:

- Visual Inspection: I begin by carefully inspecting the raw EEG data visually for obvious problems such as excessive noise, flatlines, or implausibly large amplitudes. This often reveals the source of the problem.

- Check Electrode Impedance: High impedance values can severely affect signal quality. I review the electrode impedance measurements to ensure they fall within acceptable limits.

- Examine Electrode Placement: Incorrect electrode placement can lead to artifacts and inaccurate measurements. I review the electrode placement protocol to ensure it aligns with the standardized 10-20 system (or other established system) and identify any potential errors.

- Assess Artifact Sources: If the problem is related to artifacts, I investigate potential sources like eye blinks, muscle movements, or environmental noise. This may involve reviewing the recording setup and the participant’s behavior during the recording.

- Examine the Recording System: I check the integrity of the recording equipment, including cables and amplifiers, to rule out hardware problems. Calibration and maintenance records are reviewed.

- Data Preprocessing Review: If the problem is apparent only after preprocessing, I examine the preprocessing steps to identify any errors in the filtering, artifact rejection, or other transformations.

Through systematic investigation, I have resolved numerous issues, ranging from simple electrode impedance problems to complex issues related to equipment malfunction. The key is a thorough and methodical investigation.
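The first check in the list, looking for flatlines and implausibly large amplitudes, is easy to automate as a screening step before visual review. The function `flag_bad_channels` and both thresholds are hypothetical and would need tuning for a given amplifier and population.

```python
import numpy as np


def flag_bad_channels(data_uv, flat_uv=0.5, max_uv=500.0):
    """Return {channel_index: reason} for channels that look flat or implausibly large.

    data_uv is channels x samples in microvolts; both thresholds are illustrative.
    """
    flagged = {}
    for ch, x in enumerate(data_uv):
        if np.ptp(x) < flat_uv:
            flagged[ch] = "flatline"
        elif np.max(np.abs(x)) > max_uv:
            flagged[ch] = "amplitude too large"
    return flagged


rng = np.random.default_rng(11)
data = 20.0 * rng.standard_normal((4, 1000))   # plausible background activity
data[1] = 0.0                                   # disconnected electrode
data[3, 400] = 2000.0                           # large transient, e.g. an electrode pop
flags = flag_bad_channels(data)
print(flags)  # {1: 'flatline', 3: 'amplitude too large'}
```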

Q 22. Describe a challenging EEG case you worked on and how you solved it.

One particularly challenging case involved a patient presenting with atypical seizures. The EEG showed intermittent, irregular slow waves that were not consistent with any typical seizure pattern. The initial interpretation was inconclusive, leaving the clinical team uncertain about diagnosis and treatment. To resolve this, we took a multi-pronged approach. First, we carefully reviewed the patient’s medical history, focusing on potential triggers or underlying conditions that could affect brain activity. Second, we applied independent component analysis (ICA) to separate artifacts (such as eye blinks and muscle movements) from genuine brain activity. This revealed subtle epileptiform discharges that the artifacts had masked, pointing towards focal epilepsy. Finally, we performed long-term EEG monitoring to capture these subtle events more definitively. This combined approach provided a clearer picture, leading to a more precise diagnosis and a tailored treatment plan. The key takeaway was the importance of combining multiple tools with a holistic view rather than relying solely on an initial interpretation of ambiguous EEG data.

Q 23. What are the ethical considerations related to collecting and analyzing EEG data?

Ethical considerations in EEG are paramount. Patient confidentiality is key; all data must be anonymized and securely stored, complying with regulations like HIPAA (in the US) or GDPR (in Europe). Informed consent is absolutely crucial – patients must understand the purpose of the EEG, the data’s use, and potential risks before participation. Data integrity is another important aspect – ensuring the accuracy and authenticity of the EEG recordings through proper equipment calibration and recording procedures. There are also ethical implications concerning data ownership and potential biases in algorithms used for analysis; ensuring algorithms aren’t perpetuating existing societal biases is a critical concern. Finally, the use of EEG data for research requires careful ethical review and approval by institutional review boards (IRBs) to safeguard participant rights and welfare.
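One practical piece of this is replacing patient identifiers with study codes before data leaves the clinical system. The sketch below uses keyed hashing from the Python standard library; the function name `pseudonymize`, the `SUBJ-` prefix, the example identifiers, and the key are all hypothetical. Note that this is pseudonymization, which is weaker than full anonymization: anyone holding the key can regenerate the mapping, so the key must be protected like the identifiers themselves.

```python
import hashlib
import hmac


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Map a patient identifier to a stable study code using HMAC-SHA256."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256)
    return "SUBJ-" + digest.hexdigest()[:12]


# Placeholder key for illustration; a real key would come from a secrets store.
key = b"replace-with-a-key-from-a-secrets-store"

code_a = pseudonymize("MRN-0012345", key)  # hypothetical record number
code_b = pseudonymize("MRN-0012345", key)  # same patient, same code
code_c = pseudonymize("MRN-0099999", key)  # different patient, different code
```

Because the same identifier always maps to the same code, recordings from one patient can still be linked across sessions without exposing the original identifier.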

Q 24. Explain the difference between monopolar and bipolar EEG montages.

The difference lies in how EEG electrodes are referenced. In a monopolar (also called referential) montage, each electrode’s voltage is measured relative to a single reference electrode, often placed on the earlobe or mastoid process. Each channel therefore shows the voltage difference between one electrode and the common reference point. Think of it like measuring the height of each mountain peak relative to sea level. In contrast, a bipolar montage measures the voltage difference between adjacent electrodes. This highlights local activity and is useful in identifying voltage gradients across the scalp. Imagine measuring the difference in elevation between two neighboring mountain peaks. The choice of montage depends on the clinical question. Monopolar montages show the amplitude and distribution of activity across the scalp, provided the reference itself is quiet, which makes them well suited to assessing widespread activity. Bipolar montages help localize focal activity through phase reversals between neighboring channels and cancel signals common to both electrodes, at the cost of attenuating widespread activity.
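Because every referential channel shares the same reference, a bipolar channel can be derived afterwards by subtraction: (A − ref) − (B − ref) = A − B. The sketch below illustrates this on made-up numbers; the function name `bipolar_chain` and the sample values are assumptions, while the electrode names follow the 10-20 system.

```python
def bipolar_chain(referential, chain):
    """Derive bipolar channels from referential ones along an electrode chain.

    referential: dict of electrode -> list of samples, all against one reference.
    chain: ordered electrode names; each adjacent pair becomes one derivation.
    """
    derived = {}
    for first, second in zip(chain, chain[1:]):
        derived[f"{first}-{second}"] = [
            a - b for a, b in zip(referential[first], referential[second])
        ]
    return derived


# Two samples per electrode (microvolts, invented for illustration).
# Sample 0: the same 10 uV everywhere, e.g. activity picked up by the reference.
# Sample 1: a potential that peaks at C3.
referential = {
    "Fp1": [10.0, 12.0],
    "F3": [10.0, 20.0],
    "C3": [10.0, 40.0],
    "P3": [10.0, 20.0],
}
bipolar = bipolar_chain(referential, ["Fp1", "F3", "C3", "P3"])
# bipolar == {"Fp1-F3": [0.0, -8.0], "F3-C3": [0.0, -20.0], "C3-P3": [0.0, 20.0]}
```

In the first sample the shared signal cancels to zero in every derivation. In the second, the sign flips between F3-C3 and C3-P3, which is how a bipolar chain indicates that the potential is largest at the electrode the two derivations share (C3).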

Q 25. How would you explain complex EEG findings to a non-technical audience?

Explaining complex EEG findings to a non-technical audience requires clear, simple language and analogies. I’d avoid jargon and focus on the overall picture. For example, instead of saying “increased theta activity in the temporal lobes,” I might explain, “We saw some unusual brainwave patterns in the areas responsible for memory and emotion, suggesting potential underlying issues.” Visual aids, like simplified brain diagrams showing the relevant areas, can be incredibly helpful. I’d also stress the limitations of EEG interpretation: it is just one piece of the puzzle in diagnosing neurological conditions and needs to be considered alongside other clinical information.

Q 26. Describe your experience working with EEG databases and repositories.

My experience with EEG databases includes working with both public and private repositories. I’ve used databases like the EEG-BCI database for research purposes, leveraging their standardized datasets to train and validate machine-learning models for seizure detection. I am also familiar with creating and managing our in-house EEG database for clinical applications and research. This involved data cleaning, standardization, and implementing robust data management protocols to ensure data integrity and accessibility. I’m proficient in utilizing database management systems (DBMS) to query and analyze large EEG datasets, and in programming languages (such as Python) to process and manipulate the data for analysis.
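As a small illustration of querying recording metadata, the sketch below uses Python's built-in `sqlite3` module with an in-memory database. The table name, columns, and rows are invented for the example and do not describe any particular repository's schema; signal data itself would normally live in files that such a table points to.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical metadata table: one row per recording session.
conn.execute(
    """
    CREATE TABLE recordings (
        recording_id INTEGER PRIMARY KEY,
        subject_code TEXT NOT NULL,
        sampling_rate_hz REAL NOT NULL,
        duration_s REAL NOT NULL,
        has_seizure INTEGER NOT NULL
    )
    """
)
conn.executemany(
    "INSERT INTO recordings (subject_code, sampling_rate_hz, duration_s, has_seizure) "
    "VALUES (?, ?, ?, ?)",
    [
        ("SUBJ-01", 256.0, 3600.0, 1),
        ("SUBJ-01", 256.0, 1800.0, 0),
        ("SUBJ-02", 512.0, 3600.0, 0),
    ],
)

# Number of recordings and total recorded hours per subject.
rows = conn.execute(
    """
    SELECT subject_code, COUNT(*), SUM(duration_s) / 3600.0
    FROM recordings
    GROUP BY subject_code
    ORDER BY subject_code
    """
).fetchall()
conn.close()
```

A summary like this is a quick way to spot unbalanced datasets (for example, subjects with very little recorded time) before training or validating a model.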

Q 27. What are your future goals in the field of EEG analysis?

My future goals involve pushing the boundaries of EEG analysis through advanced machine learning techniques. I’m particularly interested in developing more accurate and robust algorithms for automated seizure detection and prediction, improving the quality of life for patients with epilepsy. I also aim to contribute to the development of brain-computer interfaces (BCIs) using advanced EEG signal processing methods. Ultimately, I want to enhance the clinical utility of EEG and improve patient care by leveraging the power of data science and cutting-edge technologies.

Q 28. Are you familiar with any advanced EEG analysis techniques, such as machine learning applications in EEG?

Yes, I’m very familiar with advanced EEG analysis techniques, including machine learning applications. I have experience utilizing various machine learning algorithms, including convolutional neural networks (CNNs) and recurrent neural networks (RNNs), for tasks such as seizure detection, sleep stage classification, and emotion recognition from EEG data. I understand the importance of proper data preprocessing, feature extraction, and model selection in achieving optimal performance. For example, I've used CNNs to analyze EEG spectrograms, effectively capturing both temporal and spectral information crucial for accurate classification. The field is rapidly evolving, and I’m eager to stay abreast of the latest developments in this exciting area.
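To make the spectrogram idea concrete, here is a minimal sketch of a short-time Fourier transform using NumPy on a synthetic signal. The function name `spectrogram`, the window and step lengths, and the test signal are assumptions for illustration; in practice a library routine and real, preprocessed EEG would be used, and the resulting 2-D array (frequency by time) is what a CNN would take as input.

```python
import numpy as np


def spectrogram(signal, fs, window_s=1.0, step_s=0.5):
    """Return (freqs, times, power) from a Hann-windowed short-time FFT."""
    n_win = int(window_s * fs)
    n_step = int(step_s * fs)
    window = np.hanning(n_win)
    starts = np.arange(0, len(signal) - n_win + 1, n_step)
    # One row per overlapping frame, tapered to reduce spectral leakage.
    frames = np.stack([signal[s:s + n_win] * window for s in starts])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    freqs = np.fft.rfftfreq(n_win, d=1.0 / fs)
    times = (starts + n_win / 2) / fs  # centre of each frame in seconds
    return freqs, times, power.T       # power has shape (n_freqs, n_frames)


fs = 128
t = np.arange(0, 4, 1 / fs)
# Synthetic trace: 10 Hz for the first two seconds, 20 Hz afterwards.
x = np.where(t < 2, np.sin(2 * np.pi * 10 * t), np.sin(2 * np.pi * 20 * t))
freqs, times, power = spectrogram(x, fs)

peak_start = freqs[np.argmax(power[:, 0])]   # dominant frequency, first frame
peak_end = freqs[np.argmax(power[:, -1])]    # dominant frequency, last frame
```

The dominant frequency shifts from the first frame to the last, which is the kind of time-varying spectral structure a single whole-recording spectrum would blur together.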

Key Topics to Learn for Electroencephalography (EEG) Analysis Interview

- EEG Signal Acquisition and Preprocessing: Understanding artifact rejection techniques (e.g., ICA, filtering), impedance checks, and data quality control.

- Event-Related Potentials (ERPs): Familiarize yourself with common ERP components (e.g., P300, N400) and their interpretation in cognitive neuroscience research and clinical applications.

- Frequency Analysis and Spectral Features: Mastering techniques like power spectral density (PSD) estimation, understanding frequency bands (delta, theta, alpha, beta, gamma), and their clinical significance.

- Time-Frequency Analysis: Explore techniques like wavelet transforms and their application in analyzing non-stationary EEG signals.

- Connectivity Analysis: Gain understanding of methods like coherence, Granger causality, and functional connectivity to explore brain network dynamics.

- Clinical Applications of EEG Analysis: Become familiar with EEG’s role in diagnosing epilepsy, sleep disorders, and other neurological conditions.

- Source Localization Techniques: Understand the principles behind techniques like dipole modeling and beamforming for localizing neural activity.

- Machine Learning in EEG Analysis: Explore the application of algorithms for classification, prediction, and feature extraction in EEG data analysis.

- Ethical Considerations and Data Privacy: Understand the ethical implications of handling sensitive patient data in EEG research and clinical settings.

- Interpreting EEG Reports and Findings: Practice analyzing EEG data and formulating concise and accurate reports.
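For the frequency analysis topic in the list above, it helps to be able to sketch a band-power computation from memory. The example below is one simple way to do it with NumPy, using a single Hann-windowed periodogram rather than an averaged estimate such as Welch's method. The band edges are the ones given at the start of this guide; the function name `band_powers` and the synthetic signal are assumptions for illustration.

```python
import numpy as np

# Band edges in Hz, as listed in Q 1 of this guide.
BANDS_HZ = {
    "delta": (0.5, 4),
    "theta": (4, 8),
    "alpha": (8, 13),
    "beta": (13, 30),
    "gamma": (30, 100),
}


def band_powers(signal, fs):
    """Sum periodogram power within each band (lower edge inclusive)."""
    windowed = signal * np.hanning(len(signal))
    power = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    return {
        name: float(power[(freqs >= lo) & (freqs < hi)].sum())
        for name, (lo, hi) in BANDS_HZ.items()
    }


fs = 256
t = np.arange(0, 4, 1 / fs)
# Synthetic signal: strong 10 Hz (alpha) plus weaker 20 Hz (beta) component.
x = 2.0 * np.sin(2 * np.pi * 10 * t) + 0.5 * np.sin(2 * np.pi * 20 * t)

powers = band_powers(x, fs)
dominant = max(powers, key=powers.get)
```

On real recordings the values are usually reported as relative power (each band divided by the total) so that they can be compared across channels and subjects.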

Next Steps

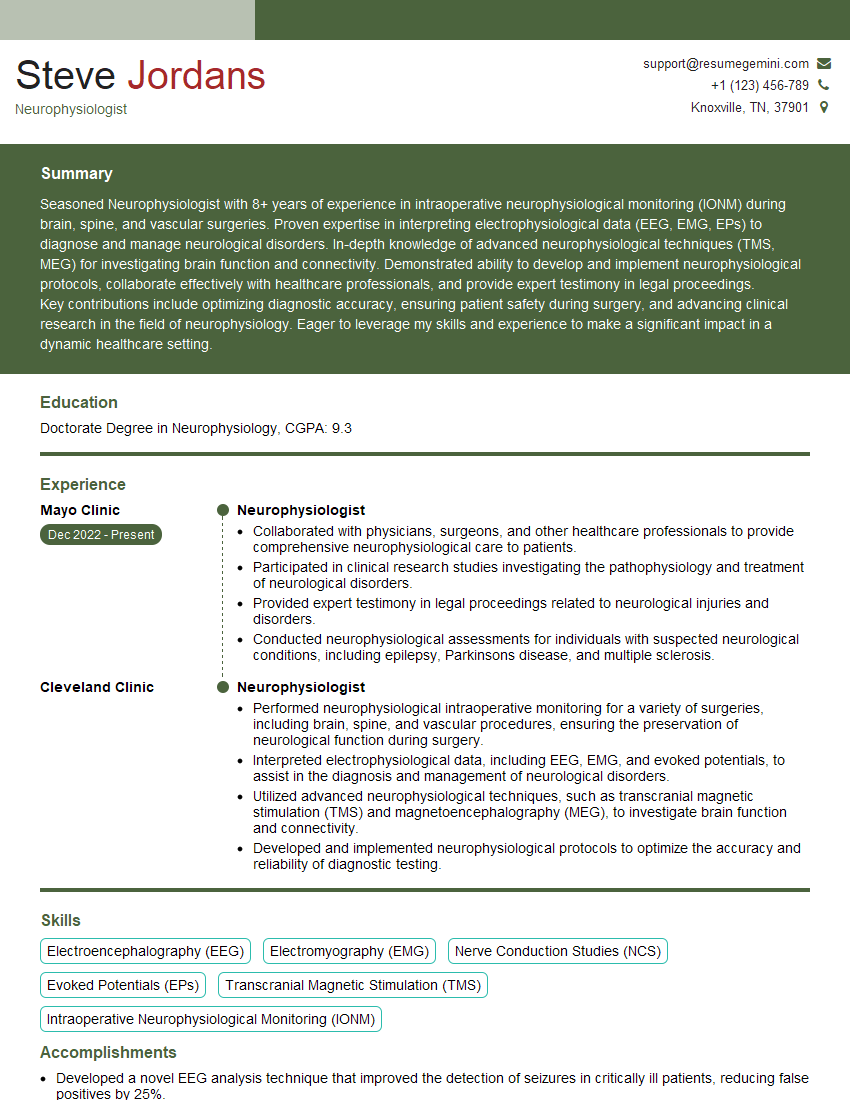

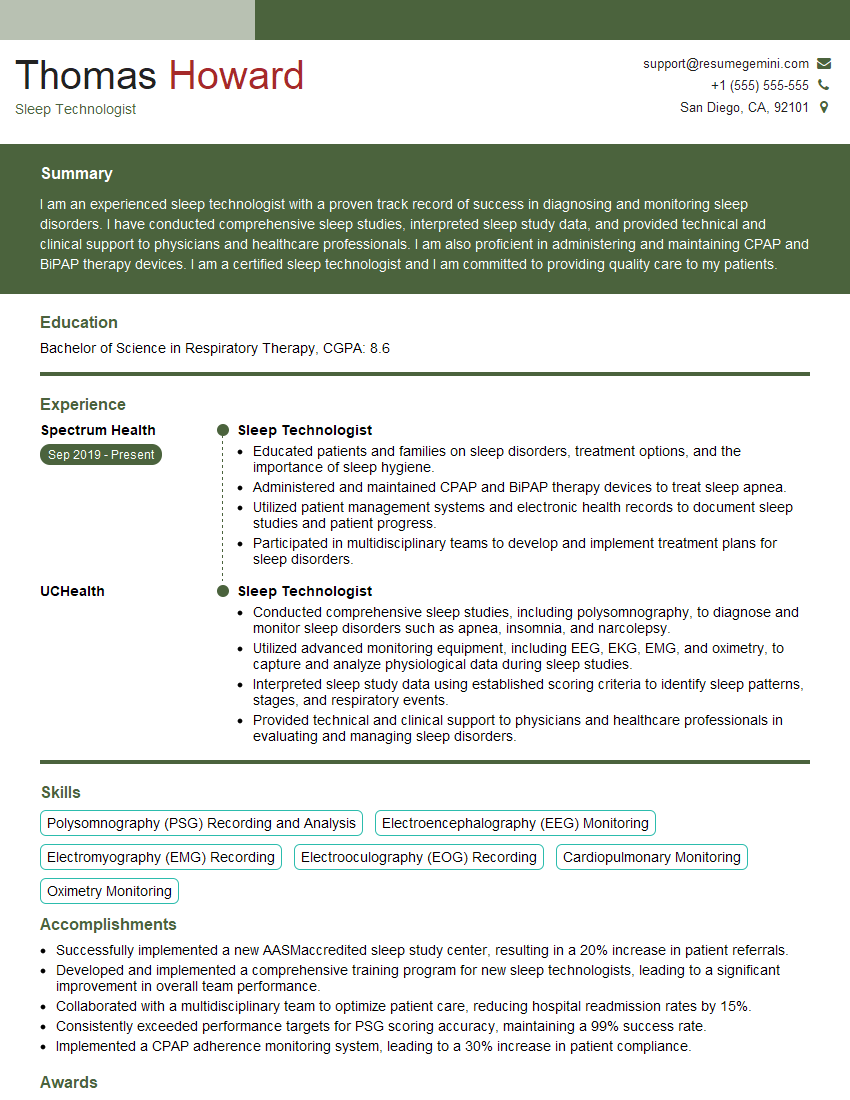

Mastering Electroencephalography (EEG) Analysis opens doors to exciting career opportunities in research, clinical settings, and the burgeoning field of neurotechnology. A strong understanding of these concepts will significantly enhance your interview performance and overall career prospects. To maximize your chances, focus on crafting a compelling and ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource to help you build a professional resume that stands out. They provide examples of resumes tailored to Electroencephalography (EEG) Analysis to help you get started.
