Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important Quality Assurance and Control Processes interview questions and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in Quality Assurance and Control Processes Interview

Q 1. Explain the difference between QA and QC.

While often used interchangeably, Quality Assurance (QA) and Quality Control (QC) have distinct roles in ensuring product quality. Think of QA as the preventative measure, focusing on establishing processes and procedures to prevent defects from occurring in the first place. QC, on the other hand, is the reactive measure, focused on identifying and correcting defects after they’ve been introduced.

QA encompasses a broader scope, including planning, defining standards, risk assessment, process improvement, and auditing. It aims to build quality into the entire software development lifecycle (SDLC). For example, a QA team might define coding standards, review design documents, and conduct training sessions for developers.

QC involves the actual testing and inspection of the product or process to detect defects. This includes activities such as unit testing, integration testing, system testing, and user acceptance testing (UAT). An example of QC would be running automated tests to verify functionality or manually testing user flows to identify usability issues.

Q 2. Describe your experience with various testing methodologies (e.g., Agile, Waterfall).

I have extensive experience with both Agile and Waterfall methodologies. In Waterfall, testing typically occurs in a dedicated phase after development is completed, often resulting in late detection of defects and higher remediation costs. My role involved meticulous test planning, designing comprehensive test cases, executing them rigorously, and documenting results thoroughly. I successfully managed testing for a large-scale ERP system implementation using a waterfall approach, ensuring the system met all functional and non-functional requirements before going live.

In Agile, testing is integrated throughout the entire SDLC, with frequent feedback loops and iterative development. This allows for early defect detection and quicker adaptation to changing requirements. In one project, using Scrum, I worked closely with developers and product owners to deliver incremental functionality, performing continuous testing (unit, integration, and system tests) during each sprint. This enabled us to deliver high-quality software with faster release cycles and improved user satisfaction.

I’m also familiar with other methodologies such as the V-model, an extension of Waterfall that pairs each development phase with a corresponding testing phase, giving testing a more structured role from the start. I adapt my testing approach to fit the chosen methodology and project context.

Q 3. How do you define and measure the success of a QA process?

The success of a QA process is defined by its ability to deliver high-quality software that meets user expectations and business requirements while adhering to budget and timeline constraints. This isn’t just about finding bugs; it’s about minimizing defects, improving software quality, and ultimately, reducing the cost of fixing those defects later.

Key metrics used to measure success include:

- Defect Density: The number of defects found per unit of code or functionality. A lower defect density indicates better quality.

- Defect Leakage: The share of defects that escape into production instead of being caught before release. A low leakage rate shows effective testing.

- Test Coverage: The percentage of code or requirements tested. High test coverage provides greater confidence in software quality.

- Test Execution Time: The time taken to execute the tests. Optimizing this time improves efficiency.

- Customer Satisfaction: Ultimately, the most important metric is whether the end-users are satisfied with the software’s quality and functionality.

By tracking and analyzing these metrics, we can identify areas for improvement in the QA process and ensure consistent delivery of high-quality software.
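To make the first three metrics concrete, here is a minimal Python sketch. The function names and the sample numbers are illustrative assumptions, and teams define these metrics in slightly different ways (for example, leakage is sometimes measured per release rather than against all known defects).

```python
def defect_density(defects_found: int, size_kloc: float) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if size_kloc <= 0:
        raise ValueError("size_kloc must be positive")
    return defects_found / size_kloc


def defect_leakage(found_in_production: int, found_before_release: int) -> float:
    """Percentage of all known defects that were first found in production."""
    total = found_in_production + found_before_release
    if total == 0:
        return 0.0
    return 100.0 * found_in_production / total


def requirement_coverage(requirements_tested: int, requirements_total: int) -> float:
    """Percentage of requirements that have at least one executed test."""
    if requirements_total <= 0:
        raise ValueError("requirements_total must be positive")
    return 100.0 * requirements_tested / requirements_total


# Hypothetical release figures, used only for illustration.
print(defect_density(30, 12.0))       # 30 defects in 12 KLOC
print(defect_leakage(5, 95))          # 5 of 100 known defects escaped
print(requirement_coverage(45, 50))   # 45 of 50 requirements tested
```

Tracking the same calculations release over release matters more than any single value, since the trend is what shows whether the process is improving.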

Q 4. What are some common quality control tools and techniques you’ve used?

I’ve utilized a wide array of quality control tools and techniques, both manual and automated. These include:

- Test Management Tools: Jira, TestRail, Azure DevOps for planning, executing, and tracking tests.

- Defect Tracking Systems: Jira, Bugzilla, Mantis for managing and reporting defects.

- Automated Testing Frameworks: Selenium, Appium, and Cypress for automated UI testing; JUnit and TestNG for unit testing.

- Static Code Analysis Tools: SonarQube, FindBugs for identifying potential coding defects early in the development cycle.

- Performance Testing Tools: JMeter, LoadRunner for assessing software performance under various loads.

- Code Reviews: A crucial technique for improving code quality and identifying potential issues early on.

- Checklists and Test Plans: Systematic approaches to ensure all aspects of testing are covered.

The choice of tools and techniques depends heavily on the project’s requirements, technology stack, and budget. I always aim to leverage the most effective tools to optimize the QA process.

Q 5. Describe your experience with test case design and execution.

Test case design and execution are core aspects of my QA experience. I utilize various techniques to design effective test cases, including equivalence partitioning, boundary value analysis, decision table testing, and state transition testing, ensuring comprehensive coverage of requirements.

For example, when testing a login feature, I would use equivalence partitioning to create test cases for valid usernames/passwords, invalid usernames/passwords, and empty fields. Boundary value analysis would help identify issues at the boundaries of input values (e.g., maximum password length).
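As a rough illustration of boundary value analysis, the sketch below generates the values just below, on, and just above each edge of an allowed range and runs them against a length check. The 8 to 64 character limits and both function names are assumptions made for the example, not rules from any particular system.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Return values just outside, on, and just inside each boundary of an inclusive range."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    candidates = [minimum - 1, minimum, minimum + 1,
                  maximum - 1, maximum, maximum + 1]
    # A set removes duplicates when the range is very narrow.
    return sorted(set(candidates))


def is_valid_password_length(password: str, minimum: int = 8, maximum: int = 64) -> bool:
    """Hypothetical rule under test: length must fall within the inclusive range."""
    return minimum <= len(password) <= maximum


# Each generated length becomes one test input.
for length in boundary_values(8, 64):
    print(length, is_valid_password_length("x" * length))
```

The two values outside the range (7 and 65) fall into the invalid equivalence partitions, while the four inside represent the valid partition at its edges.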

During execution, I carefully follow the test cases, record results accurately, and report any discrepancies or defects encountered. I’m proficient in both manual and automated test execution, adapting my approach based on project needs and prioritizing automation for repetitive tasks to improve efficiency and reduce human error.

I also employ techniques such as exploratory testing to uncover unexpected issues and improve test case design for future iterations. My focus is always on designing test cases that are clear, concise, reproducible, and cover all relevant scenarios.

Q 6. How do you handle conflicting priorities between speed and quality?

Balancing speed and quality is a common challenge in software development. My approach is to prioritize quality while staying keenly aware of deadlines. Rather than treating this as a zero-sum game, I view speed and quality as mutually reinforcing goals. Rushing without proper testing can introduce significant problems later, costing even more time and resources in the long run.

Here’s how I approach this:

- Prioritization: I work closely with stakeholders to prioritize features based on risk and impact. High-risk features receive more thorough testing.

- Risk-Based Testing: Focus testing efforts on areas with the highest probability of defects or the most significant consequences if defects occur.

- Automation: Automate repetitive tests to reduce execution time without compromising quality.

- Test Optimization: Continuously improve testing processes to maximize efficiency and reduce test cycle time. This might involve using more efficient test techniques or refining test cases.

- Communication: Transparency with stakeholders regarding potential trade-offs between speed and quality is crucial. Open communication allows for informed decisions based on risk tolerance.

Ultimately, a well-planned and executed QA process contributes to both speed and quality, preventing rework and ensuring a smooth release.

Q 7. Explain your experience with bug tracking and reporting systems.

I have extensive experience using various bug tracking and reporting systems. My proficiency includes using systems like Jira, Bugzilla, and Azure DevOps to track, manage, and report bugs throughout the software development lifecycle.

My approach to bug reporting is meticulous and standardized. I ensure that each bug report contains all necessary information, including:

- Clear and concise title: Summarizes the bug.

- Detailed description: Explains the issue in detail, including steps to reproduce.

- Severity level: Indicates the impact of the bug (critical, major, minor, trivial).

- Priority level: Indicates the urgency of fixing the bug (high, medium, low).

- Screenshots or screen recordings: Provides visual evidence of the bug.

- Expected vs. actual results: Clearly outlines the difference between the expected behavior and the actual behavior.

- Environment information: Specifies the operating system, browser, and other relevant system details.

I regularly monitor the bug tracking system, providing updates on bug status, collaborating with developers to resolve issues, and verifying bug fixes. Effective bug tracking ensures consistent communication, facilitates collaboration, and improves the quality of the final product.

Q 8. How do you prioritize defects based on severity and risk?

Prioritizing defects involves a systematic approach considering both severity and risk. Severity refers to the impact of the defect on the software’s functionality, while risk considers the likelihood of the defect causing problems and the potential consequences. We typically use a matrix to categorize defects.

For example, a critical defect (high severity, high risk) – like a system crash – would be prioritized over a minor cosmetic issue (low severity, low risk) – such as a slightly misaligned button. Even a low-severity defect might be prioritized if it’s in a critical part of the system (high risk), for instance, a minor calculation error in the billing section.

- Severity Levels: Critical, Major, Minor, Trivial

- Risk Levels: High, Medium, Low

A defect with high severity and high risk is addressed immediately. Those with low severity and low risk might be deferred to a later release, depending on project deadlines and resource availability. This process ensures that critical issues impacting users are addressed first, while less impactful ones are managed efficiently.
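One simple way to express such a matrix in code is to give each level a numeric weight and multiply them. The weights below are an assumption chosen for illustration; a real team would agree on its own scale and may add factors such as customer impact.

```python
# Illustrative weights; higher means more urgent.
SEVERITY_SCORE = {"critical": 4, "major": 3, "minor": 2, "trivial": 1}
RISK_SCORE = {"high": 3, "medium": 2, "low": 1}


def priority_score(severity: str, risk: str) -> int:
    """Combine severity and risk into a single sortable score."""
    return SEVERITY_SCORE[severity] * RISK_SCORE[risk]


def triage(defects: list[dict]) -> list[dict]:
    """Return defects ordered from most to least urgent."""
    return sorted(
        defects,
        key=lambda d: priority_score(d["severity"], d["risk"]),
        reverse=True,
    )


defects = [
    {"title": "Misaligned button", "severity": "minor", "risk": "low"},
    {"title": "Billing calculation error", "severity": "minor", "risk": "high"},
    {"title": "System crash on checkout", "severity": "critical", "risk": "high"},
]

for defect in triage(defects):
    print(priority_score(defect["severity"], defect["risk"]), defect["title"])
```

With these weights the billing error outranks the cosmetic issue even though both are minor in severity, which mirrors the billing example above.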

Q 9. Describe your experience with different types of software testing (e.g., unit, integration, system, acceptance).

My experience spans the entire software testing lifecycle, encompassing various testing types. I’ve worked extensively on unit testing, verifying individual components’ functionality with frameworks such as JUnit or pytest. Integration testing ensures seamless interaction between different modules, often using integration testing frameworks. System testing validates the entire system as a whole against requirements, including functional and non-functional aspects. Finally, I have significant experience with User Acceptance Testing (UAT), where end-users validate that the system aligns with their needs.

For instance, in a recent e-commerce project, I performed unit tests on individual modules such as the shopping cart, payment gateway integration, and product catalog. I then progressed to integration testing, verifying the seamless flow of adding items to the cart, proceeding to checkout, and finally completing the order. System testing involved end-to-end testing, encompassing all functionalities from user login to order delivery simulation. UAT involved real users testing the system’s usability and functionality in a realistic setting.
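A unit test at the shopping cart level might look like the sketch below, written with Python's built-in unittest module. The ShoppingCart class is a hypothetical stand-in invented for this example (prices are kept as integer cents to avoid floating-point rounding); it is not code from the project described.

```python
import unittest


class ShoppingCart:
    """Hypothetical cart used only for illustration; prices are integer cents."""

    def __init__(self):
        self._items = {}  # name -> (unit_price_cents, quantity)

    def add_item(self, name, unit_price_cents, quantity=1):
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        if unit_price_cents < 0:
            raise ValueError("price cannot be negative")
        _, existing_quantity = self._items.get(name, (unit_price_cents, 0))
        self._items[name] = (unit_price_cents, existing_quantity + quantity)

    def total_cents(self):
        return sum(price * qty for price, qty in self._items.values())


class ShoppingCartTest(unittest.TestCase):
    def setUp(self):
        # Each test starts with a fresh, empty cart.
        self.cart = ShoppingCart()

    def test_empty_cart_totals_zero(self):
        self.assertEqual(self.cart.total_cents(), 0)

    def test_total_sums_price_times_quantity(self):
        self.cart.add_item("book", 1250, quantity=2)
        self.cart.add_item("pen", 199)
        self.assertEqual(self.cart.total_cents(), 2699)

    def test_adding_same_item_accumulates_quantity(self):
        self.cart.add_item("book", 1250)
        self.cart.add_item("book", 1250)
        self.assertEqual(self.cart.total_cents(), 2500)

    def test_rejects_non_positive_quantity(self):
        with self.assertRaises(ValueError):
            self.cart.add_item("book", 1250, quantity=0)
```

Saved as a module, the tests can be run with `python -m unittest`. Integration tests would then exercise the cart together with checkout and payment rather than in isolation.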

Q 10. What is your experience with automated testing frameworks?

I’m proficient in several automated testing frameworks, including Selenium for UI testing, RestAssured for API testing, and Cypress for end-to-end testing. My experience includes designing, developing, and maintaining automated test suites, leveraging these frameworks to enhance efficiency and reduce manual testing efforts. I also have experience with TestNG and JUnit for unit and integration testing.

For example, I used Selenium to automate regression testing of a web application, significantly reducing the time required for each testing cycle. This involved creating test scripts that simulated user interactions, verified expected outputs, and reported results automatically. I’ve also utilized CI/CD pipelines (like Jenkins or GitLab CI) to integrate automated tests into the development process, enabling continuous testing and early defect detection.

Q 11. Explain your experience with performance testing and tools.

Performance testing is crucial to ensure application scalability and responsiveness. I have experience conducting load testing, stress testing, and endurance testing using tools like JMeter and LoadRunner. I understand how to design realistic test scenarios, analyze performance metrics (response times, throughput, resource utilization), and identify performance bottlenecks.

In a project involving a high-traffic website, I used JMeter to simulate a large number of concurrent users accessing the website simultaneously. By analyzing the performance metrics, I identified a bottleneck in the database query and suggested optimizations to improve response times and ensure the website could handle peak loads efficiently. I then used the data to support recommendations for server upgrades and database optimization.
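Tools like JMeter report these figures directly, but it helps to know how they are derived. The sketch below computes nearest-rank percentiles and throughput from a list of response times. The sample values are made up for illustration, and nearest-rank is only one of several common percentile definitions.

```python
import math


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range 1..100")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def throughput(request_count: int, duration_seconds: float) -> float:
    """Completed requests per second over the measurement window."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return request_count / duration_seconds


# Hypothetical response times in milliseconds from a short load test.
response_times_ms = [120, 95, 110, 480, 105, 130, 98, 102, 115, 900]

print("p50:", percentile(response_times_ms, 50))
print("p90:", percentile(response_times_ms, 90))
print("p95:", percentile(response_times_ms, 95))
print("requests/sec:", throughput(len(response_times_ms), 2.0))
```

In this sample the median looks healthy while the upper percentiles are several times slower, which is the kind of gap that often points to a bottleneck such as a slow database query.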

Q 12. How do you ensure effective communication with developers and stakeholders?

Effective communication is paramount in QA. I utilize various methods to ensure seamless interaction with developers and stakeholders. This includes regular meetings, detailed bug reports with clear steps to reproduce, and proactive communication about testing progress and potential risks. I emphasize clear, concise language, avoiding technical jargon when communicating with non-technical stakeholders. Tools like Jira or Azure DevOps are essential for tracking defects and progress transparently.

For instance, when reporting a bug, I provide a concise summary, detailed steps to reproduce the issue, screenshots or screen recordings where necessary, and expected versus actual results. This ensures developers have all the information required to quickly understand and resolve the problem. I also proactively communicate potential risks or delays to stakeholders, ensuring transparency and collaborative problem-solving.

Q 13. Describe your experience with risk assessment and mitigation in a QA context.

Risk assessment is a critical part of the QA process. I identify potential risks throughout the software development lifecycle using methods like Failure Mode and Effects Analysis (FMEA) and risk matrices. This involves assessing the probability and impact of potential failures, and subsequently defining mitigation strategies. This could involve adding extra testing, adjusting schedules, or allocating additional resources.

For example, in a recent project, we identified a high risk associated with the integration of a third-party payment gateway. We mitigated this risk by conducting thorough integration testing, performing security audits, and establishing a fallback mechanism in case of payment gateway failure. Regular monitoring and communication were also implemented to proactively manage this risk.
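In FMEA, risks are commonly ranked by a Risk Priority Number (RPN), the product of severity, occurrence, and detection ratings, each typically scored from 1 to 10. The sketch below shows that calculation; the failure modes and their ratings are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    description: str
    severity: int    # 1 (negligible) to 10 (catastrophic)
    occurrence: int  # 1 (rare) to 10 (almost certain)
    detection: int   # 1 (almost always caught) to 10 (almost never caught)

    def __post_init__(self):
        for name in ("severity", "occurrence", "detection"):
            value = getattr(self, name)
            if not 1 <= value <= 10:
                raise ValueError(f"{name} must be between 1 and 10")

    @property
    def rpn(self) -> int:
        """Risk Priority Number: severity x occurrence x detection."""
        return self.severity * self.occurrence * self.detection


# Hypothetical failure modes for a payment gateway integration.
failure_modes = [
    FailureMode("Slow confirmation email", severity=3, occurrence=5, detection=2),
    FailureMode("Payment gateway timeout", severity=9, occurrence=4, detection=5),
    FailureMode("Currency rounding mismatch", severity=6, occurrence=3, detection=7),
]

for mode in sorted(failure_modes, key=lambda m: m.rpn, reverse=True):
    print(mode.rpn, mode.description)
```

The highest-scoring items are the ones that justify extra testing or a fallback mechanism first.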

Q 14. How do you stay updated with the latest QA trends and technologies?

Staying updated in the dynamic QA field requires a multi-faceted approach. I actively participate in online communities, attend webinars and conferences, and follow industry influencers and publications. I also engage in continuous learning through online courses (such as Coursera or Udemy) and certifications to deepen my expertise in new tools and methodologies. Hands-on experience with new technologies, experimenting with different approaches, is also vital.

For example, I recently completed a course on AI-powered testing to learn about the application of machine learning in test automation and defect prediction. I also actively participate in online forums and groups related to software testing to exchange knowledge and insights with other professionals in the field.

Q 15. Describe your experience with software development life cycle (SDLC) models.

Throughout my career, I’ve worked extensively with various Software Development Life Cycle (SDLC) models. Understanding the nuances of each model is crucial for tailoring QA processes for optimal effectiveness. For instance, the Waterfall model, with its linear, sequential approach, necessitates thorough upfront planning and detailed documentation, impacting how I design test cases and prioritize testing activities.

In contrast, Agile methodologies like Scrum and Kanban prioritize iterative development and frequent feedback loops. This necessitates a more adaptable and flexible QA approach, with continuous testing integrated throughout the sprint cycles.

I’ve personally utilized both Waterfall and Agile models, adapting my QA strategies accordingly. In Agile, for example, I’ve employed techniques like test-driven development (TDD) and continuous integration/continuous delivery (CI/CD) pipelines to ensure rapid feedback and early defect detection. My experience also includes working with DevOps methodologies, where QA is deeply integrated into the development and deployment pipeline, enabling faster release cycles and increased efficiency.

- Waterfall: Detailed test planning upfront, rigorous testing phases, less flexibility.

- Agile (Scrum/Kanban): Iterative testing, continuous integration, close collaboration with developers, adaptability.

- DevOps: Automation, continuous monitoring, fast feedback loops, increased collaboration across teams.

Q 16. What is your approach to root cause analysis of software defects?

My approach to root cause analysis (RCA) of software defects is systematic and data-driven. I don’t just fix the immediate symptom; I dig deeper to understand the underlying cause to prevent recurrence. I typically follow a structured methodology, such as the 5 Whys technique or the Fishbone diagram (Ishikawa diagram).

For example, let’s say a login functionality fails intermittently. Instead of simply fixing the code causing the immediate error, I’d systematically ask “why” five times:

- Why did the login fail? Because the database connection timed out.

- Why did the database connection time out? Because the database server was overloaded.

- Why was the database server overloaded? Because of a spike in concurrent user login attempts.

- Why was there a spike in concurrent logins? Because of a recent marketing campaign.

- Why wasn’t the database capacity planned for the marketing campaign? Insufficient capacity planning.

This process reveals the root cause – inadequate capacity planning – allowing us to address the issue permanently, rather than just patching the immediate symptoms. I also utilize tools like defect tracking systems to analyze defect trends, identify recurring issues, and pinpoint areas needing improvement in the development process.

Q 17. How do you measure the effectiveness of your QA process?

Measuring QA effectiveness is critical. I use a multi-faceted approach, combining quantitative and qualitative metrics. Quantitative metrics include:

- Defect Density: The number of defects per lines of code (LOC) or per function point. A lower defect density indicates higher quality.

- Defect Severity: Categorizing defects by impact (critical, major, minor). Tracking severity distribution helps prioritize fixes.

- Test Coverage: Percentage of code or requirements covered by test cases. Higher coverage suggests more comprehensive testing.

- Test Execution Time: Evaluating efficiency of test processes and identifying bottlenecks.

Qualitative metrics include:

- Customer Satisfaction: Gathering feedback from end-users on software stability and usability.

- Team Feedback: Regular reviews and feedback sessions to identify areas for process improvements.

- Compliance with Standards: Measuring adherence to coding standards, security protocols, and regulatory guidelines.

By analyzing these metrics over time, I can track progress, identify trends, and make data-driven decisions to optimize QA processes. For instance, a high defect density in a particular module might indicate a need for more rigorous code reviews or additional testing in that area.

Q 18. Describe your experience with static code analysis tools.

I have extensive experience with various static code analysis tools, including SonarQube, Coverity, and FindBugs (since succeeded by SpotBugs). These tools play a vital role in identifying potential defects early in the SDLC. Static analysis doesn’t require executing the code; it examines the source code directly for flaws such as coding style violations, security vulnerabilities, and potential bugs.

For example, SonarQube helps identify code smells, potential bugs, and security vulnerabilities. It also provides metrics on code complexity, code coverage, and code duplication. This helps us to improve the overall quality of the codebase. I use the findings from these tools to collaborate with developers to address issues before they become significant problems. Integrating static analysis tools into the CI/CD pipeline ensures continuous code quality checks, reducing the likelihood of introducing defects into the production environment. It also helps enforce coding standards and best practices, leading to more maintainable and robust code.

Q 19. How do you manage and mitigate the risk of scope creep in QA projects?

Scope creep, the uncontrolled expansion of project requirements, is a significant risk in QA projects. To mitigate this, I emphasize proactive measures throughout the project lifecycle.

- Clearly Defined Scope: Working with stakeholders to create a detailed, unambiguous project scope document at the outset is paramount. This document should include clearly defined deliverables, acceptance criteria, and timelines.

- Change Management Process: Establishing a formal change control process ensures any additional requirements are evaluated, prioritized, and documented. This includes assessing the impact on timelines, budget, and testing effort.

- Regular Communication: Frequent communication with stakeholders, including regular progress updates and meetings, enables early identification and discussion of potential scope creep.

- Agile Practices: Agile methodologies, with their iterative approach and frequent feedback loops, surface new requests early and let the team re-prioritize the backlog each iteration, which makes scope changes easier to control than in Waterfall.

- Test Planning and Prioritization: Comprehensive test planning that incorporates risk assessment and prioritization ensures resources are focused on the most critical aspects of the project, helping to manage unforeseen requests.

By adhering to these practices, I help ensure the QA project remains focused, manageable, and delivers high-quality results within defined constraints.

Q 20. What is your experience with test data management?

Effective test data management is crucial for reliable and efficient software testing. My experience encompasses various strategies for creating, managing, and maintaining test data. This involves considerations around data volume, data variety, data quality, and data security.

I’ve worked with techniques such as:

- Test Data Generation Tools: Using tools to automatically generate realistic test data, ensuring sufficient coverage of various scenarios.

- Data Subsetting: Selecting a representative subset of production data for testing to reduce data volume and improve efficiency, while ensuring that the subset still reflects data characteristics from production.

- Data Masking/Anonymization: Protecting sensitive data during testing by masking or anonymizing personally identifiable information (PII).

- Test Data Management (TDM) Tools: Utilizing specialized TDM tools to manage the complete test data lifecycle, from creation and provisioning to refreshing and archiving.

- Data Virtualization: Using virtualized data sources to access production data without compromising the security or availability of production systems.

The choice of strategy depends on factors like the application’s data sensitivity, the scale of the test environment, and available resources.
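As one example of the masking technique listed above, the sketch below replaces an email address with a stable pseudonym and redacts the name field. The salt value, field names, and output format are assumptions for illustration. Salted hashing of this kind is pseudonymization, not full anonymization, so it should not be treated as sufficient on its own for regulated data.

```python
import hashlib


def mask_email(email: str, salt: str = "test-env-salt") -> str:
    """Replace an email with a deterministic pseudonym on a reserved example domain."""
    local, _, domain = email.partition("@")
    if not local or not domain:
        raise ValueError("not a valid email address")
    # The same input always yields the same pseudonym, so joins across tables still work.
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()[:12]
    return f"user_{digest}@example.com"


def mask_record(record: dict) -> dict:
    """Return a copy of the record with PII fields masked; other fields are untouched."""
    masked = dict(record)
    if "email" in masked:
        masked["email"] = mask_email(masked["email"])
    if "name" in masked:
        masked["name"] = "REDACTED"
    return masked


# Hypothetical production-like row.
row = {"id": 42, "name": "Alice Smith", "email": "Alice.Smith@corp.test", "plan": "pro"}
print(mask_record(row))
```

Deterministic masking keeps relationships between tables intact, which matters when the same customer appears in orders, invoices, and support tickets.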

Q 21. How do you ensure the quality of third-party integrations?

Ensuring the quality of third-party integrations is critical as these often represent significant points of failure in a system. My approach is multifaceted and involves collaboration with the third-party vendor and rigorous testing.

- Clearly Defined APIs and Contracts: Working with vendors to ensure well-defined APIs and service level agreements (SLAs) that outline expected performance and functionality.

- Integration Testing: Conducting thorough integration testing to validate the interaction between the application and the third-party system. This includes testing various scenarios, including successful and failed interactions.

- Performance Testing: Evaluating the performance of the integrated system under various load conditions to ensure acceptable response times and stability.

- Security Testing: Assessing the security of the integration points, paying close attention to potential vulnerabilities and ensuring data is exchanged securely.

- Vendor Collaboration: Maintaining open communication and collaboration with vendors throughout the integration process to address issues quickly and efficiently.

- Monitoring and Alerting: Setting up monitoring and alerting systems to detect and respond to integration issues after the system is live.

By using a comprehensive approach, we can build robust, high-quality applications that integrate effectively with third-party systems.

Q 22. Describe your experience working with offshore or outsourced QA teams.

My experience with offshore QA teams spans several years and various projects, encompassing both direct management and collaborative efforts. I’ve found that effective communication and clear documentation are paramount. For instance, on a recent project involving an outsourced team in India, we established daily stand-up meetings using video conferencing to maintain transparency and address issues promptly. We also implemented a centralized bug tracking system (Jira) with detailed reporting and workflow processes. This ensured consistent tracking of defects, regardless of geographical location. To bridge any cultural or time-zone differences, we established clear communication protocols, including preferred communication methods, response times, and documentation standards. This structured approach allowed for seamless collaboration, successful project delivery, and a high level of quality assurance. Successfully navigating cultural nuances and potential communication barriers is crucial for optimal teamwork and project outcomes.

Beyond communication, establishing robust quality control mechanisms is vital. This includes clear test plans, detailed acceptance criteria, and regular performance reviews of the offshore team. Utilizing shared, cloud-based test environments allows for real-time collaboration and monitoring of testing progress. Regular audits and quality checks are also imperative to maintain consistently high standards, even with a geographically dispersed team.

Q 23. How do you handle pressure and tight deadlines in a QA role?

Handling pressure and tight deadlines in QA requires a structured and proactive approach. My strategy involves prioritizing tasks based on risk and impact, using techniques like MoSCoW analysis (Must have, Should have, Could have, Won’t have). This helps to focus efforts on the most critical aspects of the software. For example, if a deadline is approaching and testing is incomplete, I will first prioritize testing high-risk areas that could cause significant problems in production. Once the critical areas are covered, I then move to lower-priority tests, aiming for maximum test coverage within the available time frame.

I also rely on effective communication with the development and project management teams. Transparent reporting on progress and any potential roadblocks enables collaborative problem-solving and helps manage expectations. Furthermore, automating repetitive testing tasks with tools like Selenium or Appium saves considerable time and resources, leaving more time for critical manual testing. Ultimately, a calm and methodical approach, combined with strong communication and efficient resource allocation, is key to successfully navigating pressure and delivering high-quality results even under tight deadlines.

Q 24. Explain your experience with different types of testing environments.

My experience encompasses a wide range of testing environments, including:

- Development/Staging Environments: These mimic the production environment but are used for testing and development purposes. I’ve extensively used these to identify and resolve bugs before deployment.

- Test Environments: Dedicated environments specifically designed for testing purposes, often isolated from development and production. These usually include emulators, simulators, and virtual environments to test on different platforms.

- Production Environments: While less frequent, I have participated in controlled testing in live production environments utilizing techniques like A/B testing and canary deployments, carefully monitoring the impact of changes on live users.

- Cloud-based Environments: I have experience hosting test environments on cloud platforms such as AWS and Azure, leveraging their scalability and flexibility for testing across different geographical locations and hardware configurations.

Understanding the nuances of each environment and its limitations is crucial for effective testing. For instance, a staging environment may not perfectly replicate production, leading to potential discrepancies. Therefore, rigorous testing in multiple environments is essential to minimize the risk of unforeseen issues in production. Each environment requires a slightly different testing approach and strategy. The choice of environment depends heavily on the stage of the software development lifecycle and the specific risks you are trying to mitigate.

Q 25. How do you handle disagreements with developers regarding software defects?

Disagreements with developers about software defects are a common occurrence in QA. My approach focuses on collaborative problem-solving and data-driven discussions. I start by clearly and concisely documenting the defect, including steps to reproduce, expected behavior, and actual behavior. This detailed report ensures we’re all on the same page and eliminates ambiguity. I use screenshots, videos, and log files to support my findings. If there is a disagreement about the severity or validity of a defect, I try to demonstrate its impact from the end-user perspective. For example, I might show how a seemingly minor visual bug could negatively impact user experience and conversion rates. I would present data from user testing, surveys or analytics to support my perspective.

If the disagreement persists, I escalate it to a senior QA engineer or project manager to facilitate a neutral discussion and help find a resolution. The goal is not to win an argument, but to find a shared understanding and a solution that benefits the software and the project. Open communication, a respect for differing opinions, and a focus on data and objective evidence are key to resolving these conflicts constructively.

Q 26. What are some common metrics you use to track QA performance?

Tracking QA performance is crucial for continuous improvement. Several key metrics I regularly use include:

- Defect Density: The number of defects found relative to code size, typically per thousand lines of code (KLOC), or per module. This helps assess the overall quality of the software.

- Defect Severity: Categorizing defects based on their impact (e.g., critical, major, minor). This helps direct testing and fixing effort toward high-impact issues first.

- Test Coverage: The percentage of the codebase (or of the requirements) exercised by tests. It highlights untested areas, although high coverage alone does not guarantee thorough testing.

- Test Execution Time: Time taken to complete a test cycle. Tracking this helps identify areas for automation or optimization.

- Defect Leakage: The number of defects that escape testing and reach production, often expressed as a share of all defects found. A high leakage rate indicates weaknesses in the QA process.

- Test Case Pass/Fail Rate: The proportion of executed test cases that pass versus fail. It highlights areas needing more attention.

Regularly monitoring these metrics provides insight into the efficiency and effectiveness of the QA process and allows trends, problem areas, and opportunities for improvement to be identified early. Combined with regular reporting and analysis, these metrics enable continuous improvement in quality and efficiency.
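To make a few of these metrics concrete, here is a minimal Python sketch that computes defect density, defect leakage, and pass rate from raw counts. The `CycleStats` class, its field names, and the exact formulas are assumptions chosen for illustration; teams define these metrics in slightly different ways (leakage in particular), so treat this as one possible convention rather than a standard.

```python
from dataclasses import dataclass


@dataclass
class CycleStats:
    """Raw counts for one test cycle (hypothetical field names)."""
    defects_found_in_testing: int
    defects_found_in_production: int
    lines_of_code: int
    tests_passed: int
    tests_failed: int


def defect_density_per_kloc(stats: CycleStats) -> float:
    """Defects found in testing per 1,000 lines of code."""
    return stats.defects_found_in_testing / (stats.lines_of_code / 1000)


def defect_leakage_pct(stats: CycleStats) -> float:
    """Share of all known defects that were only found in production.

    Assumption: leakage = production defects / (testing + production defects).
    """
    total = stats.defects_found_in_testing + stats.defects_found_in_production
    return 100 * stats.defects_found_in_production / total if total else 0.0


def pass_rate_pct(stats: CycleStats) -> float:
    """Percentage of executed test cases that passed."""
    executed = stats.tests_passed + stats.tests_failed
    return 100 * stats.tests_passed / executed if executed else 0.0


# Example with made-up numbers for a single release.
release = CycleStats(
    defects_found_in_testing=45,
    defects_found_in_production=5,
    lines_of_code=25_000,
    tests_passed=380,
    tests_failed=20,
)
print(defect_density_per_kloc(release))
print(defect_leakage_pct(release))
print(pass_rate_pct(release))
```

In an interview, walking through a small calculation like this shows that you know how each metric is derived, not just what it is called.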

Q 27. Describe your experience with creating and maintaining QA documentation.

Creating and maintaining comprehensive QA documentation is essential for successful software projects. My experience involves generating various documents, including:

- Test Plans: These outline the scope, objectives, and approach for testing a particular software release. They describe the test environment, the testing tools, the team involved, and the timeline for testing.

- Test Cases: Detailed step-by-step instructions for executing specific tests. Each test case includes preconditions, steps, expected results, and postconditions.

- Test Scripts: Automated scripts for executing repetitive tests. These are typically written in languages such as Python or Java and use libraries such as Selenium or Appium to drive the application under test.

- Bug Reports: Detailed reports documenting defects found during testing. These include steps to reproduce the defect, expected and actual behavior, screenshots, and, where possible, suggested solutions.

- Test Summary Reports: High-level summaries of testing activities, including overall test coverage, number of defects found, and testing progress.

I utilize a version control system (e.g., Git) to manage QA documentation, ensuring that all documents are properly versioned and easily accessible. Consistent use of templates and a standardized format for documentation ensures maintainability and consistency throughout the project lifecycle. Well-maintained QA documentation is invaluable for traceability, collaboration, and ongoing improvement of the QA process. It serves as a historical record of testing activities, ensuring accountability and improving the overall quality of the software.
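As a rough illustration of how a documented test case maps onto an automated test script, the sketch below uses Python's built-in `unittest` module. The `ShoppingCart` class is a hypothetical system under test invented for this example, and the mapping of preconditions to `setUp` and postconditions to `tearDown` is one common convention, not the only way to structure a script.

```python
import unittest


class ShoppingCart:
    """Hypothetical system under test, defined here only for illustration."""

    def __init__(self):
        self.items = {}

    def add(self, sku, price, quantity=1):
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        _, current_qty = self.items.get(sku, (price, 0))
        self.items[sku] = (price, current_qty + quantity)

    def total(self):
        return sum(price * qty for price, qty in self.items.values())


class TestCartTotal(unittest.TestCase):
    def setUp(self):
        # Precondition: an empty cart exists before each test.
        self.cart = ShoppingCart()

    def tearDown(self):
        # Postcondition: drop the cart so tests stay independent.
        self.cart = None

    def test_total_sums_price_times_quantity(self):
        # Steps: add two products.
        self.cart.add("SKU-1", 10, quantity=2)
        self.cart.add("SKU-2", 5)
        # Expected result: 10 * 2 + 5 * 1.
        self.assertEqual(self.cart.total(), 25)

    def test_rejects_non_positive_quantity(self):
        # Expected result: invalid input is refused with an error.
        with self.assertRaises(ValueError):
            self.cart.add("SKU-1", 10, quantity=0)


def run_suite():
    """Run the tests programmatically and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestCartTotal)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Keeping scripts like this in the same Git repository as the written test cases makes it easier to trace each automated check back to the case it implements.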

Key Topics to Learn for Quality Assurance and Control Processes Interview

- Understanding Quality Management Systems (QMS): Explore frameworks like ISO 9001 and their practical implementation in various industries. Consider the roles and responsibilities within a QMS structure.

- Testing Methodologies: Become proficient in different testing approaches like black-box, white-box, integration, and system testing. Understand their strengths and weaknesses, and when to apply each.

- Defect Tracking and Management: Learn how to effectively track, prioritize, and resolve defects using bug tracking systems. Practice documenting defects clearly and concisely.

- Risk Management in QA/QC: Understand the importance of proactive risk identification and mitigation strategies within a quality control process. Explore techniques for assessing and managing risks effectively.

- Statistical Process Control (SPC): Familiarize yourself with the application of statistical methods to monitor and control processes. Understand concepts like control charts and process capability analysis.

- Automation in QA/QC: Explore the role of automation tools and techniques in enhancing efficiency and effectiveness of testing processes. Research popular automation frameworks and tools.

- Quality Metrics and Reporting: Learn how to define, measure, and report key quality metrics to stakeholders. Practice creating insightful reports that communicate progress and areas for improvement.

- Continuous Improvement Methodologies: Understand and be prepared to discuss methodologies like Lean, Six Sigma, and Kaizen, and their application to improve QA/QC processes.

- Regulatory Compliance (if applicable): Depending on the industry, familiarize yourself with relevant regulations and standards that impact QA/QC processes.

- Problem-solving and Root Cause Analysis: Develop your ability to effectively analyze problems, identify root causes, and implement corrective actions to prevent recurrence.

Next Steps

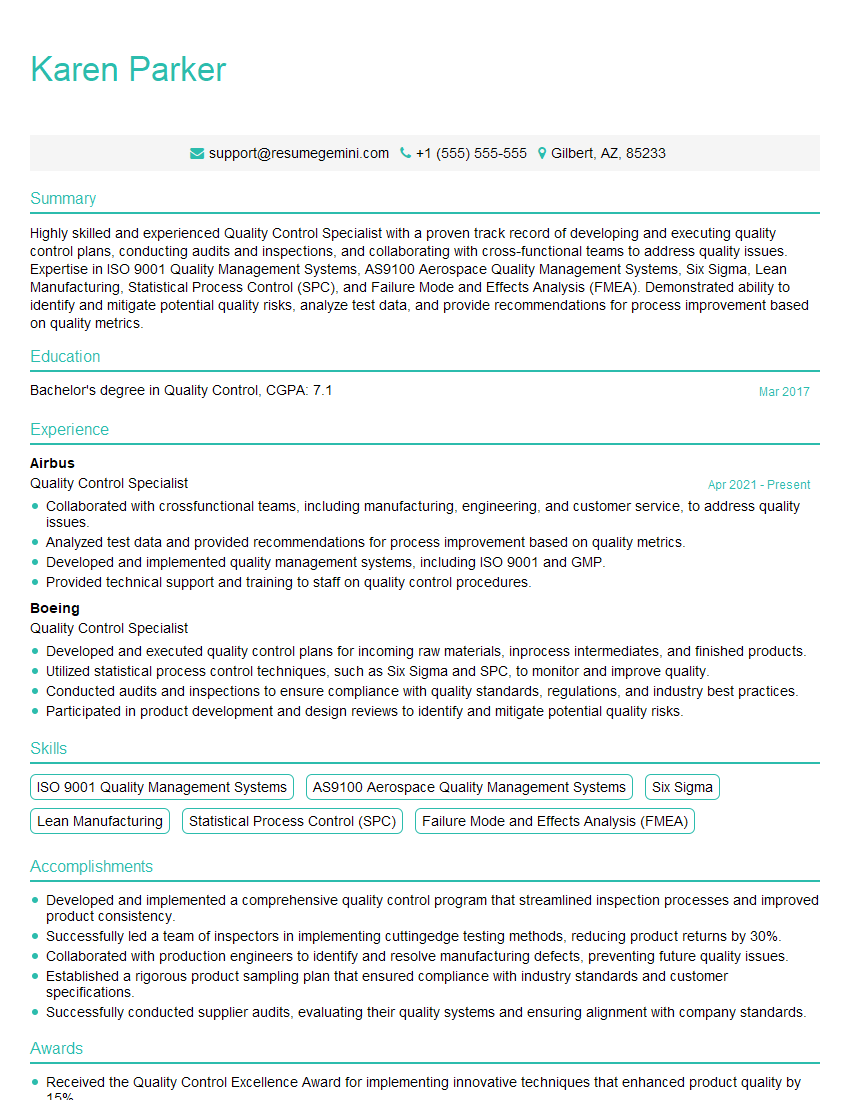

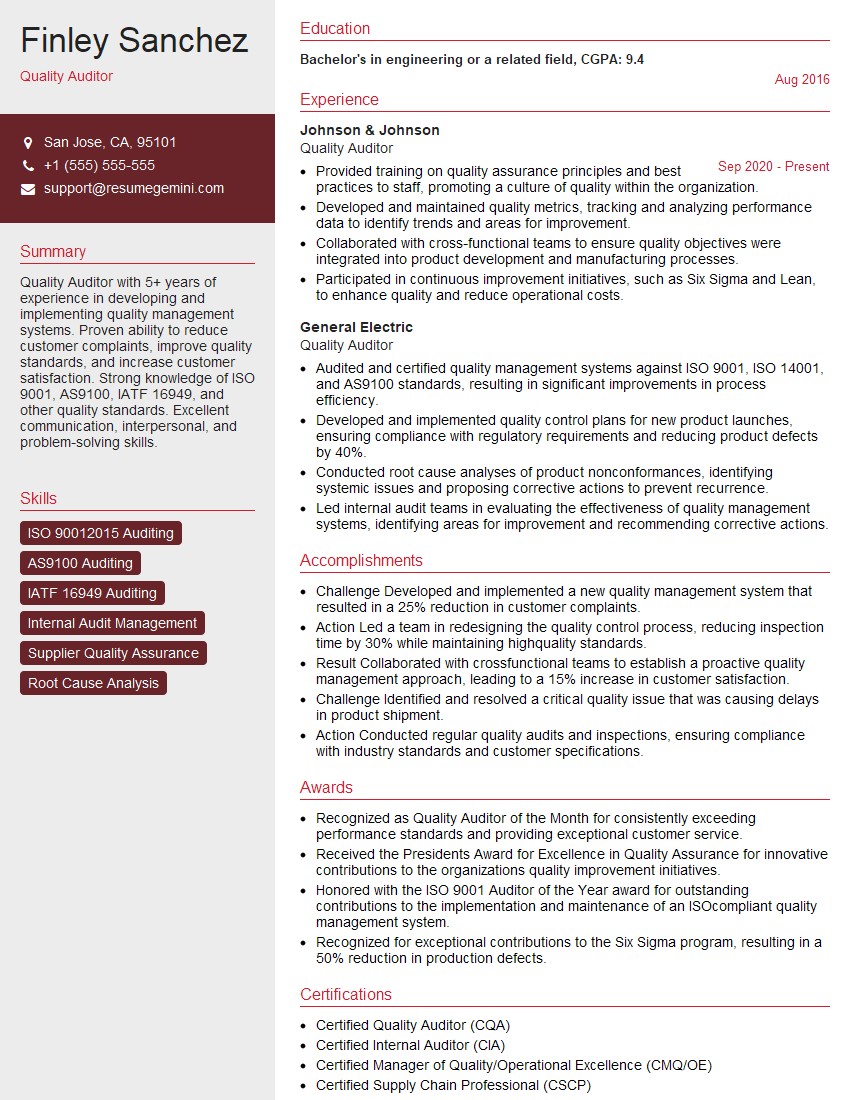

Mastering Quality Assurance and Control Processes is crucial for career advancement in today’s competitive landscape. A strong understanding of these principles demonstrates your commitment to quality and problem-solving, opening doors to exciting opportunities and higher earning potential. To maximize your job prospects, creating an ATS-friendly resume is paramount. ResumeGemini is a trusted resource to help you build a professional and effective resume that highlights your skills and experience. Examples of resumes tailored specifically to Quality Assurance and Control Processes are available to help guide you.
