Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Advanced Process Monitoring and Control interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Advanced Process Monitoring and Control Interview

Q 1. Explain the difference between open-loop and closed-loop control systems.

The core difference between open-loop and closed-loop control systems lies in whether they measure the output and respond to process disturbances. An open-loop system operates based solely on pre-programmed instructions or a predetermined input, without measuring the actual output. Think of a toaster: you set the time, and it runs for that duration regardless of whether the bread is actually toasted. It doesn’t measure the degree of toasting. (Open-loop control is sometimes confused with feedforward control. Feedforward also acts without measuring the output, but it measures a disturbance and compensates for it, so the two terms are not synonyms.)

Conversely, a closed-loop system, or feedback control system, constantly measures the output and compares it to the desired setpoint. Any deviation triggers a corrective action to minimize the error. Imagine a cruise control system in a car: it monitors the car’s speed and adjusts the throttle to maintain the set speed, compensating for inclines or headwinds. The feedback loop ensures the system stays on track.

In essence, open-loop systems are simpler and cheaper but less accurate and robust to disturbances, while closed-loop systems are more complex but offer superior precision and adaptability.
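To make the distinction concrete, here is a minimal Python sketch under stated assumptions: a hypothetical first-order process (discrete model y[k+1] = 0.9·y[k] + 0.1·(u[k] + d[k]), steady-state gain 1) is hit by an unmeasured input disturbance. The open-loop controller holds the input computed from the nominal model; the closed-loop controller is a simple PI loop. The model, gains, and disturbance size are illustrative choices, not values from any real plant.

```python
def simulate(closed_loop: bool, steps: int = 300, dist_start: int = 100,
             dist_size: float = -0.3) -> float:
    """Return the final setpoint error for a hypothetical first-order process."""
    a, b = 0.9, 0.1                      # assumed model: y[k+1] = a*y[k] + b*(u[k] + d[k])
    setpoint = 1.0
    u_bias = setpoint * (1 - a) / b      # nominal input that holds y at the setpoint
    kc, ki = 2.0, 0.2                    # illustrative PI gains
    y, integral = setpoint, 0.0          # start at steady state
    for k in range(steps):
        d = dist_size if k >= dist_start else 0.0   # unmeasured step disturbance
        error = setpoint - y
        if closed_loop:
            integral += error
            u = u_bias + kc * error + ki * integral  # feedback correction
        else:
            u = u_bias                   # open loop: preset input, output never checked
        y = a * y + b * (u + d)
    return setpoint - y


print(f"open-loop final error:   {simulate(False):.3f}")
print(f"closed-loop final error: {simulate(True):.3f}")
```

The open-loop error settles at about 0.3 (the full disturbance), because nothing ever checks the output; the PI loop measures the error and drives it back to approximately zero.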

Q 2. Describe different types of controllers (PID, MPC, etc.) and their applications.

Several types of controllers exist, each with its strengths and weaknesses.

- Proportional-Integral-Derivative (PID) controllers are the workhorses of process control. They use three terms to calculate the control action: proportional (responds to the current error), integral (compensates for accumulated error), and derivative (predicts future error based on the rate of change). PID controllers are versatile and relatively easy to tune, making them suitable for a vast array of applications, from temperature regulation in ovens to flow control in pipelines.

- Model Predictive Control (MPC) is an advanced control strategy that uses a mathematical model of the process to predict future outputs and optimize control actions over a time horizon. This allows for handling multiple inputs and outputs (multivariable control) and constraints (e.g., upper and lower limits on manipulated variables). MPC finds applications in complex processes like chemical reactors and refinery operations where optimizing multiple variables simultaneously is crucial.

- Other controllers include: Fuzzy Logic Controllers, which use fuzzy sets to handle imprecise or uncertain information; Neural Network Controllers, which adapt their control strategy through machine learning; and Adaptive Controllers that automatically adjust their parameters in response to changing process dynamics.

The choice of controller depends on the process complexity, desired performance, and available resources. Simple processes often benefit from PID controllers, while complex processes frequently require the advanced capabilities of MPC.
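The PID description above can be sketched as a discrete-time controller. This is a minimal Python illustration, not any vendor’s implementation: it uses the positional form, computes the derivative on the measurement (to avoid a derivative “kick” when the setpoint changes), and stops integrating while the output is saturated as a simple anti-windup scheme. The gains, output limits, and first-order test process are hypothetical.

```python
import math


class PID:
    """Positional-form discrete PID controller (illustrative sketch)."""

    def __init__(self, kp, ki, kd, dt, out_min=-math.inf, out_max=math.inf):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0      # running value of ki * integral(error)
        self.prev_meas = None    # previous measurement, for the derivative term

    def update(self, setpoint, measurement):
        error = setpoint - measurement
        p_term = self.kp * error                                 # present error
        candidate = self.integral + self.ki * error * self.dt    # accumulated error
        d_term = 0.0
        if self.prev_meas is not None:
            # Derivative on measurement: reacts to the process, not to setpoint jumps.
            d_term = -self.kd * (measurement - self.prev_meas) / self.dt
        self.prev_meas = measurement
        output = p_term + candidate + d_term
        if self.out_min <= output <= self.out_max:
            self.integral = candidate   # integrate only when not saturated (anti-windup)
            return output
        return min(max(output, self.out_min), self.out_max)


# Usage on a hypothetical first-order process y[k+1] = 0.9*y[k] + 0.1*u[k].
pid = PID(kp=2.0, ki=0.2, kd=0.5, dt=1.0, out_min=0.0, out_max=10.0)
y = 0.0
for _ in range(300):
    u = pid.update(setpoint=1.0, measurement=y)
    y = 0.9 * y + 0.1 * u
```

Real controllers add features such as derivative filtering, bumpless transfer, and velocity-form algorithms; this sketch only shows how the three terms combine.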

Q 3. What are the advantages and disadvantages of Model Predictive Control (MPC)?

Model Predictive Control (MPC) offers significant advantages, but also comes with some drawbacks:

Advantages:

- Handles constraints: MPC explicitly considers process constraints (limits on variables), preventing unsafe or inefficient operation.

- Multivariable control: It can effectively manage multiple inputs and outputs simultaneously, optimizing overall process performance.

- Predictive capabilities: It predicts future behavior, allowing for proactive control actions.

- Improved performance: MPC often achieves better performance compared to traditional PID controllers, especially in complex processes.

Disadvantages:

- Model complexity: Developing an accurate process model is crucial but can be challenging and time-consuming.

- Computational burden: MPC requires significant computational resources, which might be a limitation for some applications.

- Tuning complexity: Tuning MPC parameters can be more complex than tuning PID controllers.

- Sensitivity to model mismatch: If the model doesn’t accurately represent the real process, MPC performance can degrade.

In summary, MPC is a powerful tool for advanced process control, but its implementation requires careful consideration of its computational and modeling demands.
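A full MPC implementation solves a constrained optimization (typically a quadratic program) at every sample. The sketch below is deliberately simplified to show the ingredients discussed above: a model used for prediction, a prediction horizon, explicit input constraints, and a receding-horizon loop that applies only the first move. Assumptions: a hypothetical first-order model y[k+1] = 0.9·y[k] + 0.1·u[k] that matches the plant exactly, a single input move held constant across the horizon (move blocking), and a brute-force grid search over the allowed input range in place of a QP solver.

```python
import math


def predict(y0, u, a, b, horizon):
    """Predict outputs over the horizon for a constant input u (assumed model)."""
    ys, y = [], y0
    for _ in range(horizon):
        y = a * y + b * u
        ys.append(y)
    return ys


def mpc_move(y0, u_prev, setpoint, a=0.9, b=0.1, horizon=10,
             u_min=0.0, u_max=6.0, move_weight=0.1, step=0.05):
    """Pick the constrained input that minimizes the predicted cost (grid search)."""
    best_u, best_cost = u_min, math.inf
    n = int(round((u_max - u_min) / step))
    for i in range(n + 1):
        u = min(u_min + i * step, u_max)        # every candidate respects the limits
        ys = predict(y0, u, a, b, horizon)
        cost = sum((yk - setpoint) ** 2 for yk in ys)   # predicted tracking error
        cost += move_weight * (u - u_prev) ** 2          # penalize large input moves
        if cost < best_cost:
            best_u, best_cost = u, cost
    return best_u


# Receding horizon: re-optimize every sample, apply only the chosen move.
y, u = 0.0, 0.0
applied = []
for _ in range(200):
    u = mpc_move(y, u, setpoint=5.0)
    applied.append(u)
    y = 0.9 * y + 0.1 * u    # "plant" assumed identical to the model here
```

Because every candidate input lies in [u_min, u_max], the constraint is never violated, even while the controller sits at u_max early in the run. Industrial MPC packages use proper QP solvers, multiple future moves, output constraints, and disturbance estimation to cope with model mismatch.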

Q 4. How do you tune a PID controller?

PID controller tuning is a crucial step to ensure optimal performance. Several methods exist, but the most common are:

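- Ziegler-Nichols method: This is a simple, empirical method based on the process’s ultimate gain and ultimate period. With integral and derivative action disabled, the proportional gain is raised until the loop oscillates with constant amplitude; that gain is the ultimate gain (Ku), and the oscillation period is the ultimate period (Pu). The PID gains are then calculated from these two values. The classic rules target a quarter-amplitude decay response, which is fairly aggressive, so it’s a quick starting point that often requires further fine-tuning.

- Cohen-Coon method: Another empirical method that uses the open-loop process reaction curve (the response to a step test) to estimate the PID gains. It is better suited than Ziegler-Nichols to processes with significant dead time relative to their time constant, but it also targets quarter-amplitude decay and often needs to be detuned for a more robust response.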

- Auto-tuning: Modern controllers often feature auto-tuning capabilities. They automatically adjust PID gains by analyzing the system’s response to test signals.

- Trial and error: This iterative process involves systematically adjusting the gains while observing the system’s response. It can be time-consuming but allows for fine-grained optimization.

Regardless of the method used, the tuning process is iterative, and you need to consider factors like the process dynamics (how fast the process responds), desired performance (speed of response, overshoot, settling time), and stability (avoiding oscillations).
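The classic closed-loop Ziegler-Nichols table can be written as a small helper. This sketch assumes the ideal (standard) PID form, u = Kc·(e + (1/Ti)·∫e dt + Td·de/dt); for a parallel-form controller, convert with Ki = Kc/Ti and Kd = Kc·Td. Treat the results as starting values for the fine-tuning described above, not final settings.

```python
import math


def ziegler_nichols(ku: float, pu: float) -> dict:
    """Classic closed-loop Ziegler-Nichols settings.

    ku: ultimate gain (P-only gain that gives sustained oscillation)
    pu: ultimate period of that oscillation (same time units as Ti and Td)
    Returns (Kc, Ti, Td) per controller type, for the ideal PID form.
    """
    return {
        "P":   (0.5 * ku, math.inf, 0.0),      # no integral action: Ti -> infinity
        "PI":  (0.45 * ku, pu / 1.2, 0.0),
        "PID": (0.6 * ku, pu / 2.0, pu / 8.0),
    }


# Hypothetical test result: sustained oscillation at Ku = 4 with Pu = 10 s.
settings = ziegler_nichols(ku=4.0, pu=10.0)
kc, ti, td = settings["PID"]   # about 2.4, 5.0 s, 1.25 s
```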

Q 5. Explain the concept of process gain and its importance in control system design.

Process gain represents the steady-state change in the output of a process for a unit change in the input. It’s a crucial parameter in control system design because it quantifies the sensitivity of the output to changes in the input. For example, if increasing an oven’s heater power by 1 kW raises the steady-state oven temperature by 2°C, the process gain is 2°C/kW.

Knowing the process gain is essential for:

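- Controller design: The gain influences the controller’s tuning parameters. Because the loop gain is roughly the product of the controller gain and the process gain, a process with a high gain usually calls for a smaller controller gain (gentler action), while a process with a low gain needs a larger controller gain (more aggressive action).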

- Stability analysis: The gain affects the stability of the closed-loop system. Excessive loop gain (controller gain times process gain) can lead to oscillations or instability.

- Performance prediction: It helps predict the system’s response to changes in the setpoint or disturbances.

In practice, the process gain can be determined experimentally by introducing a small change in the input and measuring the resulting output change. It’s also possible to estimate the gain from a process model.
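Estimating the gain from a step test, as described above, reduces to a ratio of averaged steady-state changes. This sketch assumes a self-regulating process that has settled both before and after the step, and that the samples passed in come from those settled periods; the heater figures are hypothetical.

```python
from statistics import mean


def steady_state_gain(u_before, u_after, y_before, y_after):
    """Estimate process gain K = delta_y / delta_u from an open-loop step test.

    y_before / y_after: output samples taken while the process was settled
    before the input step and after it had settled at its new value.
    """
    du = u_after - u_before
    if du == 0:
        raise ValueError("input step must be non-zero")
    # Averaging several settled samples reduces the influence of measurement noise.
    return (mean(y_after) - mean(y_before)) / du


# Hypothetical oven test: heater power stepped from 10 kW to 11 kW.
gain = steady_state_gain(10.0, 11.0,
                         y_before=[199.9, 200.1, 200.0],
                         y_after=[202.1, 201.9, 202.0])   # about 2 degC per kW
```

Fitting a first-order-plus-dead-time model to the same step data would also yield the time constant and dead time, which many tuning rules need alongside the gain.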

Q 6. What are the common performance indicators used to evaluate process control systems?

Several performance indicators are used to evaluate process control systems. They typically focus on:

- Setpoint tracking: How well the system maintains the desired setpoint. Metrics include IAE (Integrated Absolute Error), ISE (Integrated Squared Error), and ITAE (Integrated Time-weighted Absolute Error).

- Disturbance rejection: How effectively the system handles unexpected changes or disturbances. Metrics include the size and duration of deviations from the setpoint after a disturbance.

- Overshoot: How much the output exceeds the setpoint before settling. A high overshoot might indicate an aggressively tuned controller.

- Settling time: The time it takes for the output to settle within a specified range of the setpoint.

- Rise time: The time it takes for the output to go from a low to a high percentage of its final change, commonly from 10% to 90%.

The choice of performance indicators depends on the specific application and control objectives. For example, in some processes, minimizing overshoot is more important than minimizing settling time.
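The indicators above can be computed directly from logged step-response data. This minimal sketch assumes uniformly spaced samples, a setpoint step from 0 to a positive value applied at the first sample, a 10% to 90% rise-time definition, and a ±2% settling band; other conventions are common, so check which one a given specification uses.

```python
import math


def step_metrics(t, y, setpoint, band=0.02):
    """Performance indices for a step response from 0 to `setpoint` (> 0).

    Assumes uniformly spaced samples t and a setpoint step applied at t[0].
    Integrals use a simple rectangle rule.
    """
    dt = t[1] - t[0]
    err = [setpoint - yi for yi in y]
    iae = sum(abs(e) for e in err) * dt                       # integral of |e|
    ise = sum(e * e for e in err) * dt                        # integral of e^2
    itae = sum(ti * abs(e) for ti, e in zip(t, err)) * dt     # integral of t*|e|
    overshoot = max(0.0, (max(y) - setpoint) / setpoint * 100.0)   # percent
    t10 = next(ti for ti, yi in zip(t, y) if yi >= 0.1 * setpoint)
    t90 = next(ti for ti, yi in zip(t, y) if yi >= 0.9 * setpoint)
    # Settling time approximated as the last sample outside the +/- band.
    outside = [ti for ti, yi in zip(t, y) if abs(yi - setpoint) > band * setpoint]
    settling = outside[-1] if outside else t[0]
    return {"IAE": iae, "ISE": ise, "ITAE": itae, "overshoot_pct": overshoot,
            "rise_time": t90 - t10, "settling_time": settling}


# Check against a first-order response y = 1 - exp(-t) (time constant 1).
dt = 0.001
t = [i * dt for i in range(10001)]
y = [1.0 - math.exp(-ti) for ti in t]
m = step_metrics(t, y, setpoint=1.0)
```

For this first-order test response the results come out close to the analytical values (IAE ≈ 1, ISE ≈ 0.5, ITAE ≈ 1, rise time ≈ 2.20, settling time ≈ 3.91, no overshoot), which is a useful sanity check before applying the function to plant data.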

Q 7. Describe different types of process disturbances and how they are handled.

Process disturbances are unplanned changes that affect the process output. These disturbances can originate from various sources:

- Load disturbances: Changes in the process load (e.g., changes in feed flow rate, input material composition).

- Environmental disturbances: Variations in ambient temperature, pressure, or humidity.

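- Measurement noise: Errors or fluctuations in the sensors that measure the process variables. Strictly, noise corrupts the measured value rather than the process itself, but a controller reacts to it as if it were a real disturbance.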

- Equipment malfunctions: Failures or changes in the process equipment (e.g., valve leaks, pump malfunctions).

Handling process disturbances typically involves:

- Feedback control: Closed-loop control systems continuously monitor the output and adjust the manipulated variables to compensate for disturbances.

- Feedforward control: Using prior knowledge of disturbances to anticipate their effects and adjust the manipulated variables proactively. This is often combined with feedback control for robustness.

- Robust control design: Designing the controller to be less sensitive to variations in process parameters and disturbances.

- Process monitoring and alarm systems: Detecting and alerting operators to significant disturbances that might require manual intervention.

The best approach to handling disturbances depends on their nature, frequency, and severity. A combination of techniques is often necessary for effective disturbance rejection.
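Combining feedforward with feedback can be sketched in deviation variables. Assumptions (hypothetical, for illustration only): a model y[k+1] = 0.9·y[k] + 0.1·u[k] + 0.05·d[k], a disturbance d that is measured, and a static feedforward term u_ff = −(0.05/0.1)·d that cancels the disturbance’s effect. Because the disturbance and the input act through identical dynamics in this toy model, the cancellation is perfect; with real model error, the PI feedback trims whatever the feedforward misses.

```python
def peak_deviation(use_feedforward: bool, steps: int = 200) -> float:
    """Largest |error| after a measured disturbance step (deviation variables)."""
    a, b_u, b_d = 0.9, 0.1, 0.05    # assumed model: y+ = a*y + b_u*u + b_d*d
    kc, ki = 2.0, 0.2               # illustrative PI feedback gains
    y, integral, peak = 0.0, 0.0, 0.0
    for k in range(steps):
        d = 1.0 if k >= 20 else 0.0       # disturbance step, assumed measurable
        error = 0.0 - y                   # setpoint is 0 in deviation variables
        integral += error
        u = kc * error + ki * integral    # feedback (PI) part
        if use_feedforward:
            u += -(b_d / b_u) * d         # static feedforward from the model ratio
        y = a * y + b_u * u + b_d * d
        peak = max(peak, abs(y))
    return peak


print(f"feedback only:         {peak_deviation(False):.3f}")
print(f"feedforward + feedback: {peak_deviation(True):.3f}")
```

Feedback alone must wait for an error to appear before it acts, so the output deviates; the feedforward term acts as soon as the disturbance is measured.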

Q 8. Explain the concept of feedback control and its role in process stability.

Feedback control is the cornerstone of process stability. Imagine a thermostat: you set a desired temperature, and the thermostat measures the actual temperature. If there’s a difference (error), it adjusts the heating or cooling system to reduce the error and maintain the desired temperature. This is feedback control in action. In process control, a similar principle applies. A process variable (like temperature, pressure, or flow rate) is measured, compared to a setpoint (the desired value), and corrective action is taken based on the difference (error). This closed-loop system continuously monitors and corrects deviations, ensuring the process remains stable and operates within specified limits.

The role of feedback control in process stability is paramount. Without it, processes would be prone to significant variations and disturbances. For example, imagine a chemical reactor without a feedback controller regulating temperature. Even minor fluctuations in the feedstock or ambient temperature could lead to uncontrolled reactions, potentially resulting in unsafe conditions or product degradation. Feedback control minimizes these variations, providing robustness and reliability to the process.

Q 9. What are the challenges in implementing advanced process control systems?

Implementing advanced process control (APC) systems presents several challenges. One significant hurdle is the need for high-quality data. APC relies heavily on accurate and reliable sensor measurements, and noisy or inconsistent data can lead to poor control performance or even instability. Another challenge lies in model development. Accurate process models are crucial for designing effective control strategies, but obtaining them can be time-consuming (often requiring plant step tests or other identification experiments) and requires significant expertise in process dynamics and modeling techniques. Furthermore, APC systems often require significant investment in both hardware and software, along with expertise for design, implementation, and maintenance. Finally, integrating APC systems into existing infrastructure can be complex, requiring careful consideration of compatibility and potential disruptions to existing operations.

For example, a refinery upgrading to advanced process control might face challenges in integrating new sensors and actuators with legacy equipment, requiring careful planning and potentially significant capital expenditure.

Q 10. How do you handle process constraints in an optimization problem?

Handling process constraints in optimization problems is vital for ensuring feasibility and safety. Constraints represent limitations on the process variables (e.g., maximum temperature, minimum flow rate, maximum pressure). These constraints can be incorporated into the optimization problem using various techniques. One common approach is to include them directly in the problem formulation as hard constraints, written as explicit inequalities or equalities that every solution must satisfy. For instance, a constraint might be T ≤ 150 °C, limiting the temperature (T) to a maximum of 150°C.

Another technique uses penalty functions, which turn constraints into soft constraints: they add a cost to the objective function when a constraint is violated, encouraging the optimizer to find solutions that satisfy the constraints. With a finite penalty weight, however, small violations remain possible. The choice of constraint handling method depends on the nature of the constraints and the optimization algorithm used. For example, linear programming techniques can handle linear constraints efficiently, while nonlinear programming methods are needed for more complex, nonlinear constraints.
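The difference between hard and penalty-based constraint handling shows up even on a one-variable toy problem. Assumptions (hypothetical): the cost is (T − 170)², so the unconstrained optimum of 170 °C violates T ≤ 150 °C. The hard-constraint version restricts the search interval to feasible temperatures, while the soft version searches freely and adds a quadratic penalty μ·max(0, T − 150)² with μ = 100. Golden-section search is used only because it is a simple, dependency-free 1-D optimizer.

```python
import math


def golden_section_min(f, lo, hi, tol=1e-8):
    """Minimize a unimodal function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    while b - a > tol:
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if f(c) < f(d):
            b = d          # minimum lies in [a, d]
        else:
            a = c          # minimum lies in [c, b]
    return (a + b) / 2.0


T_MAX = 150.0   # constraint: T <= 150 degC
mu = 100.0      # penalty weight (assumed)


def cost(T):
    return (T - 170.0) ** 2          # hypothetical objective, optimum at 170 degC


def penalized(T):
    return cost(T) + mu * max(0.0, T - T_MAX) ** 2   # soft constraint


t_hard = golden_section_min(cost, 0.0, T_MAX)       # search only feasible T
t_soft = golden_section_min(penalized, 0.0, 300.0)  # search freely, pay for violation
```

The hard-constrained result lands on the 150 °C limit, while the penalized result settles slightly above it, at (170 + 150μ)/(1 + μ) ≈ 150.2 °C for this cost. Raising μ shrinks the violation but makes the problem more poorly conditioned numerically.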

Q 11. Explain the role of sensors and actuators in process control.

Sensors and actuators are the eyes and hands of a process control system. Sensors measure process variables, providing feedback to the controller. Actuators manipulate the process to correct deviations from the setpoint. They work together in a closed-loop system to maintain desired process conditions.

For instance, a temperature sensor measures the reactor temperature. If the temperature is below the setpoint, the controller signals an actuator (e.g., a heating element) to increase the heat input, raising the temperature. Without sensors, the controller would be blind, unable to ascertain the actual process state. Without actuators, the controller would be paralyzed, unable to effect changes in the process.

The accuracy and reliability of sensors and actuators are crucial. Faulty sensors can provide misleading information, leading to incorrect control actions. Similarly, unreliable actuators can fail to execute the controller’s commands, compromising the effectiveness of the control system. Therefore, regular calibration and maintenance of sensors and actuators are essential for reliable process control.

Q 12. Describe different types of sensor technologies used in process monitoring.

Various sensor technologies are employed in process monitoring, each offering unique advantages and disadvantages. These include:

- Temperature Sensors: Thermocouples, RTDs (Resistance Temperature Detectors), and thermistors are commonly used for temperature measurement, each with varying accuracy, response time, and operating range.

- Pressure Sensors: Diaphragm pressure sensors, strain gauge pressure transducers, and capacitive pressure sensors are used to measure pressure in various process applications.

- Flow Sensors: Differential pressure flow meters, ultrasonic flow meters, and Coriolis flow meters are employed to measure the rate of fluid flow.

- Level Sensors: Ultrasonic level sensors, float-type level sensors, and radar level sensors are used to determine the level of liquids or solids in tanks or vessels.

- pH Sensors: Electrochemical sensors measure the acidity or alkalinity of a solution.

- Gas Sensors: Various gas sensors, such as electrochemical sensors and infrared sensors, detect and quantify different gases.

The choice of sensor depends on the specific process variable, accuracy requirements, operating conditions, and cost considerations.

Q 13. How do you deal with sensor noise and measurement errors in process control?

Sensor noise and measurement errors are ubiquitous in process control. These errors can significantly affect the performance of the control system. Several techniques can be used to mitigate these issues:

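- Filtering: Digital filters smooth out noisy signals. A moving average filter averages recent samples, while a Kalman filter combines a process model with the measurements, weighting each according to its assumed noise statistics. Filtering also adds lag, so heavy filtering can slow the control loop’s response.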

- Calibration: Regular calibration ensures that sensor readings are accurate and consistent. Calibration involves comparing the sensor’s output to a known standard.

- Redundancy: Using multiple sensors to measure the same variable allows for error detection and correction. The readings from multiple sensors can be compared, and discrepancies can be identified and addressed.

- Data Validation: Implementing checks to identify and reject implausible readings can significantly reduce the impact of erroneous data. For example, setting bounds on acceptable readings can filter out out-of-range values.

For example, in a chemical reactor, a Kalman filter might be used to estimate the true temperature from noisy thermocouple readings. The filter incorporates a process model and noise statistics to improve the estimate’s accuracy.
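Both filters mentioned above can be sketched in a few lines. This is a minimal illustration, assuming the true temperature is roughly constant (modeled as a random walk with a small process-noise variance q) and that sensor noise is Gaussian with known variance r. The values of q and r, the 20-sample window, and the simulated thermocouple readings are all hypothetical.

```python
import random


def moving_average(samples, window):
    """Trailing moving average; early outputs average the samples seen so far."""
    out, acc = [], 0.0
    for i, x in enumerate(samples):
        acc += x
        if i >= window:
            acc -= samples[i - window]     # drop the sample leaving the window
        out.append(acc / min(i + 1, window))
    return out


def scalar_kalman(samples, q, r, x0, p0):
    """1-D Kalman filter for a slowly varying value (random-walk model).

    q: assumed process-noise variance, r: assumed measurement-noise variance.
    """
    x, p, out = x0, p0, []
    for z in samples:
        p += q                   # predict: the true value may have drifted
        k = p / (p + r)          # Kalman gain: how much to trust the new reading
        x += k * (z - x)         # correct the estimate with the residual
        p *= (1.0 - k)           # variance of the updated estimate
        out.append(x)
    return out


random.seed(0)
true_temp = 50.0
readings = [true_temp + random.gauss(0.0, 2.0) for _ in range(500)]
ma = moving_average(readings, window=20)
kf = scalar_kalman(readings, q=1e-4, r=4.0, x0=readings[0], p0=4.0)
```

With a small q the Kalman filter averages over many samples and becomes very smooth but slow to follow real changes; a larger q makes it track faster at the cost of passing more noise, which is the same lag-versus-smoothing trade-off as choosing the moving-average window.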

Q 14. What is the importance of data logging and historical data analysis in process control?

Data logging and historical data analysis are crucial for optimizing process performance, troubleshooting issues, and improving control strategies. Data logging provides a record of process variables over time, allowing for detailed analysis of process behavior. This data can reveal trends, identify anomalies, and provide insights into process dynamics. Historical data analysis can be used to:

- Identify process bottlenecks: Analyzing historical data can pinpoint areas where the process is inefficient or underperforming.

- Improve process models: Historical data can be used to refine and improve process models, leading to better control performance.

- Detect and diagnose faults: By comparing current data with historical data, deviations from normal operating conditions can be identified, helping in prompt fault diagnosis.

- Optimize control parameters: Analysis of historical data can inform adjustments to control parameters, leading to improved process stability and efficiency.

Imagine a manufacturing plant with a large database of historical production data. Analyzing this data could identify hidden patterns, such as specific environmental conditions that lead to increased product defects, allowing the plant to adjust operational strategies to minimize those defects.

Q 15. Explain the concept of data reconciliation and its application.

Data reconciliation is the process of adjusting measured process data to make it consistent with known material balances and other process constraints. Think of it like balancing your checkbook – you have individual transactions (measurements), but they might not add up perfectly due to errors. Data reconciliation helps identify and correct these inconsistencies, resulting in a more accurate and reliable representation of the process.

Application: In a chemical plant, for example, we might measure the flow rates of several streams entering and leaving a reactor. Due to measurement errors (sensor drift, noise, etc.), the mass balance might not close perfectly. Data reconciliation algorithms use optimization techniques to adjust these measurements, minimizing the adjustments while ensuring that the mass balance is satisfied. This improved data quality is crucial for process monitoring, optimization, and fault detection.

A common method uses weighted least squares: it minimizes the differences between the adjusted and the original measurements, each weighted by the inverse of that measurement’s variance so that less reliable instruments are adjusted more, while simultaneously satisfying the process model (mass balances, energy balances, etc.). This approach produces a set of reconciled data that is more accurate and consistent than the raw measurements.
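For the simple case of a single linear mass balance, the weighted least-squares problem has a closed-form solution (from a Lagrange multiplier on the balance). This sketch assumes independent measurement errors with known variances; practical reconciliation tools handle many simultaneous balances, usually with matrix algebra, and add gross-error detection. The stream values and variances are hypothetical.

```python
def reconcile_single_balance(measured, variances, coeffs):
    """Weighted least-squares reconciliation for ONE linear balance sum(a_i*x_i) = 0.

    Minimizes sum((x_i - y_i)**2 / var_i) subject to the balance. Closed form:
    x_i = y_i - var_i * a_i * r / sum(a_j**2 * var_j), where r = sum(a_j * y_j).
    """
    imbalance = sum(a * y for a, y in zip(coeffs, measured))      # r
    denom = sum(a * a * v for a, v in zip(coeffs, variances))
    return [y - v * a * imbalance / denom
            for y, v, a in zip(measured, variances, coeffs)]


# Hypothetical mixer: F1 + F2 - F3 = 0 (mass balance, kg/h).
measured = [100.0, 50.0, 155.0]
variances = [4.0, 1.0, 9.0]      # assumed measurement variances
coeffs = [1.0, 1.0, -1.0]
reconciled = reconcile_single_balance(measured, variances, coeffs)
```

With these numbers the raw imbalance is −5 kg/h; the reconciled flows are roughly 101.43, 50.36, and 151.79 kg/h, which close the balance exactly, and the least reliable meter (F3, largest variance) absorbs most of the correction.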

Q 16. How do you identify and diagnose process upsets?

Identifying and diagnosing process upsets requires a multi-faceted approach, combining process understanding, data analysis, and diagnostic tools. First, we establish a baseline of normal operation using historical data and statistical process control (SPC) charts. Deviations from this baseline indicate potential upsets.

- Real-time Monitoring: Continuous monitoring of key process variables (temperature, pressure, flow rates, compositions) using advanced process control (APC) systems and supervisory control and data acquisition (SCADA) systems. Alerts are triggered when deviations exceed predefined thresholds.

- Data Analysis Techniques: Multivariate statistical methods like principal component analysis (PCA) and partial least squares (PLS) can detect abnormal patterns and correlations in the process data and point to the variables that contribute most to a deviation, narrowing the search for the root cause even when variables interact in complex ways.

- Process Simulation: Dynamic process simulators can be used to model the process behavior under various conditions. By comparing the simulated behavior with the observed behavior, we can pinpoint the source of the upset.

- Expert Systems: For complex processes, expert systems can incorporate the knowledge of experienced operators and engineers to assist in diagnosing upsets and suggesting corrective actions.

Example: Suppose a reactor’s temperature unexpectedly rises. By analyzing the data, we might find a correlation between the temperature increase and a decrease in the cooling water flow rate. Further investigation might reveal a malfunction in the cooling water pump, identifying the root cause of the upset. The process simulation could then be used to assess the impact of the malfunction and guide the corrective actions.

Q 17. Describe your experience with different control system platforms (e.g., DCS, PLC).

I have extensive experience with various control system platforms, including Distributed Control Systems (DCS) like Honeywell Experion and Emerson DeltaV, and Programmable Logic Controllers (PLCs) such as Siemens Simatic and Rockwell Automation Allen-Bradley. My experience encompasses all aspects, from system configuration and programming to troubleshooting and maintenance.

DCS: I’ve worked on projects involving the design, implementation, and optimization of DCS-based control systems for large-scale industrial processes. This includes developing control strategies, configuring alarm management systems, and implementing advanced process control algorithms. The strength of DCS lies in its scalability, reliability, and advanced functionalities like historian capabilities for detailed data logging and analysis.

PLC: My experience with PLCs involves developing and implementing control programs for smaller, more discrete processes. PLCs are often chosen for their cost-effectiveness and adaptability in situations requiring simpler control logic. I’m proficient in PLC programming languages, including ladder logic.

My expertise spans beyond the hardware; I’m equally comfortable working with the software interfaces and networking aspects of these systems, ensuring seamless communication and data integration across various components.

Q 18. What is your experience with process simulation software?

I have significant experience using process simulation software, primarily Aspen Plus and HYSYS. These tools are essential for process design, optimization, and troubleshooting. I’ve employed them in various capacities, including:

- Process Design: Developing steady-state and dynamic models of chemical processes to predict performance, identify bottlenecks, and optimize design parameters.

- Process Optimization: Using simulation to investigate the impact of changes to process operating conditions and design parameters, identifying optimal settings for maximum efficiency and yield.

- Troubleshooting: Modeling process upsets to understand their causes and effects, and to develop and test strategies for mitigating them.

- Operator Training: Creating realistic process simulations for training operators on how to handle various scenarios, improving their skills and response time.

For example, I used Aspen Plus to model a distillation column, optimizing its reflux ratio and number of trays to maximize product purity while minimizing energy consumption. This led to significant cost savings in the actual operation.

Q 19. Explain the concept of process safety and its relevance to process control.

Process safety is paramount in the operation of any industrial process. It involves identifying, analyzing, and mitigating hazards that could lead to accidents, such as fires, explosions, or releases of hazardous materials. Process control plays a critical role in ensuring process safety by maintaining the process within safe operating limits.

Relevance to Process Control: Control systems are designed to prevent unsafe operating conditions through various mechanisms:

- Safety Instrumented Systems (SIS): These systems are independent of the process control system and are designed to shut down the process in case of hazardous situations.

- Interlocks: These prevent unsafe operations by preventing certain actions unless specific conditions are met.

- Alarms and Trip Systems: These alert operators to potential hazards and automatically shut down the process if necessary.

- Emergency Shutdown Systems (ESD): These systems are designed to rapidly and safely shut down the process in case of emergencies.

By implementing robust control strategies and safety systems, we minimize the risk of accidents and protect personnel, equipment, and the environment. Safety is not just a checklist; it’s a mindset that should be integrated into every stage of process design and operation.

Q 20. How do you ensure the reliability and maintainability of process control systems?

Ensuring the reliability and maintainability of process control systems is crucial for safe and efficient operation. This involves several key strategies:

- Redundancy: Implementing redundant hardware and software components to minimize the impact of failures. For instance, using dual sensors or controllers to ensure continuous operation even if one component fails.

- Regular Maintenance: A preventative maintenance program is essential, involving periodic inspections, calibration, and repairs to prevent equipment failures and ensure optimal performance.

- Software Updates and Patches: Keeping the control system software up-to-date with the latest patches and updates to address security vulnerabilities and improve functionality.

- Data Backup and Recovery: Regular backups of configuration data and process data are essential to ensure that the system can be quickly restored in case of failures.

- Operator Training: Well-trained operators are crucial for safe and efficient operation. Training should cover normal operation, troubleshooting, and emergency procedures.

- Documentation: Comprehensive documentation of the control system, including design specifications, operating procedures, and maintenance logs, is essential for troubleshooting and maintenance.

Regular audits and reviews of the control system’s performance and reliability are also important to identify potential weaknesses and implement improvements.

Q 21. What is your experience with statistical process control (SPC)?

Statistical Process Control (SPC) is a powerful tool for monitoring and improving process performance. It involves using statistical methods to analyze process data and identify sources of variation. I have extensive experience using SPC techniques to improve process efficiency, reduce defects, and improve product quality.

Applications:

- Control Charts: I utilize various control charts, such as X-bar and R charts, p-charts, and c-charts, to monitor process variables and identify deviations from the norm. These charts help distinguish between common cause and special cause variation.

- Capability Analysis: This helps to determine whether the process is capable of meeting the specified requirements. This analysis can help identify areas for improvement to increase process capability.

- Process Optimization: By identifying the sources of variation, SPC can guide improvements to the process to reduce variability and improve consistency. For instance, by identifying a specific machine as a source of excessive variation, targeted maintenance or replacement can improve the overall process.

Example: In a manufacturing process, I used control charts to monitor the diameter of a machined part. By identifying a trend of increasing variability, we were able to trace it to a worn cutting tool. Replacing the tool immediately stabilized the process and reduced the number of defective parts.
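The X-bar and R charts used in that example rest on a simple limit calculation. This sketch uses the standard tabulated Shewhart constants for subgroups of five (A2 = 0.577, D3 = 0, D4 = 2.114). The diameter data are hypothetical, and in practice limits are computed from roughly 20 to 25 subgroups collected while the process is in control.

```python
from statistics import mean

# Standard Shewhart chart constants for subgroup size n = 5.
A2, D3, D4 = 0.577, 0.0, 2.114


def xbar_r_limits(subgroups):
    """(LCL, center line, UCL) for X-bar and R charts; every subgroup must have n = 5."""
    if any(len(g) != 5 for g in subgroups):
        raise ValueError("the constants above are for subgroups of 5")
    xbars = [mean(g) for g in subgroups]              # subgroup averages
    ranges = [max(g) - min(g) for g in subgroups]     # within-subgroup ranges
    xbarbar, rbar = mean(xbars), mean(ranges)
    return {
        "xbar": (xbarbar - A2 * rbar, xbarbar, xbarbar + A2 * rbar),
        "R": (D3 * rbar, rbar, D4 * rbar),
    }


# Hypothetical part diameters (mm), two subgroups of five for illustration.
diameters = [[10.0, 11.0, 9.0, 10.0, 10.0],
             [10.0, 12.0, 8.0, 10.0, 10.0]]
limits = xbar_r_limits(diameters)
```

A subgroup mean outside the X-bar limits, or a range above the R chart’s upper limit, signals special-cause variation such as the worn cutting tool in the example.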

Q 22. Describe different types of control charts and their applications.

Control charts are powerful statistical tools used to monitor process performance over time, detecting shifts in the mean or variability. They visually represent data, allowing for quick identification of trends and anomalies. Different charts cater to different data types and objectives.

- Shewhart Charts (X-bar and R charts): These are the most basic, used for continuous data. The X-bar chart tracks the average of subgroups, while the R chart monitors the range within each subgroup. They’re effective at detecting large, sudden shifts in the mean or increases in variability, but relatively insensitive to small, gradual shifts. Example: Monitoring the average diameter of manufactured parts and the variation in diameter within a batch.

- Cumulative Sum (CUSUM) Charts: CUSUM charts are more sensitive to small shifts in the mean compared to Shewhart charts. They accumulate deviations from a target value, making them ideal for detecting gradual changes. Example: Monitoring the fill level of bottles on a production line, where a slow drift in the filling mechanism might not be immediately obvious on a Shewhart chart.

- Exponentially Weighted Moving Average (EWMA) Charts: EWMA charts give more weight to recent data points, making them responsive to recent changes. They’re useful when rapid detection of small shifts is critical. Example: Monitoring a chemical process where subtle changes in temperature can have significant consequences.

- p-charts and np-charts: These charts are used for attribute data on nonconforming (defective) items. p-charts track the proportion of nonconforming items in each sample, while np-charts track the number of nonconforming items and require a constant sample size. Example: Monitoring the percentage of defective chips produced on a semiconductor manufacturing line.

- c-charts and u-charts: These charts are also for attribute data, focusing on the number of defects per unit or per sample. c-charts are used when the sample size is constant, while u-charts are used when the sample size varies. Example: Monitoring the number of scratches on a painted surface per car body.

The choice of chart depends on the type of data (continuous or attribute), the size of the subgroups, and the sensitivity required to detect shifts in the process.
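The CUSUM and EWMA statistics can be sketched as follows. Assumptions: a known target and process standard deviation, a tabular CUSUM with the common textbook choices k = 0.5σ and h = 5σ, and an EWMA with λ = 0.2 and L = 3 using time-varying limits. The fill-level series are synthetic and noise-free so that the alarm points are easy to follow.

```python
import math


def cusum_first_alarm(x, target, sigma, k=0.5, h=5.0):
    """Tabular CUSUM; k and h are in units of sigma. Returns first alarm index or None."""
    c_hi = c_lo = 0.0
    for i, xi in enumerate(x):
        c_hi = max(0.0, c_hi + xi - (target + k * sigma))   # accumulates upward drift
        c_lo = max(0.0, c_lo + (target - k * sigma) - xi)   # accumulates downward drift
        if c_hi > h * sigma or c_lo > h * sigma:
            return i
    return None


def ewma_first_alarm(x, target, sigma, lam=0.2, L=3.0):
    """EWMA chart with time-varying limits. Returns first alarm index or None."""
    z = target
    for i, xi in enumerate(x):
        z = lam * xi + (1.0 - lam) * z                      # recent points weigh more
        t = i + 1
        width = L * sigma * math.sqrt(lam / (2.0 - lam) * (1.0 - (1.0 - lam) ** (2 * t)))
        if abs(z - target) > width:
            return i
    return None


# Hypothetical fill levels (sigma = 1): on target for 20 samples, then a sustained shift.
small_shift = [10.0] * 20 + [11.0] * 30    # +1 sigma
large_shift = [10.0] * 20 + [12.0] * 30    # +2 sigma
```

On these noise-free series both charts stay silent for the first 20 on-target samples; the CUSUM flags the 1σ shift at index 30 and the 2σ shift at index 23, and the EWMA also flags the 2σ shift at index 23. With real, noisy data the alarm points vary from run to run.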

Q 23. Explain the concept of process capability analysis.

Process capability analysis assesses the ability of a process to consistently produce output within specified customer requirements or tolerances. It essentially answers the question: “Is our process capable of meeting the customer’s needs?” This is crucial for quality control and improvement.

This analysis involves comparing the process’s natural variation (measured by its standard deviation) to the tolerance limits (upper and lower specification limits) set by the customer or design specifications. Key metrics include:

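- Cp (Process Capability Index): Measures the potential capability of the process, ignoring where the process mean sits. It compares the width of the tolerance range to the process spread: Cp = (USL − LSL) / 6σ, where USL and LSL are the upper and lower specification limits. A Cp ≥ 1.33 is generally considered capable.

- Cpk (Process Capability Index, adjusted for centering): Considers both the spread and the centering of the process by measuring the distance from the mean to the nearer specification limit: Cpk = min(USL − μ, μ − LSL) / 3σ. It's a more realistic indicator of actual capability because it accounts for the process mean not being exactly centered within the specifications. Cpk is never larger than Cp. A Cpk ≥ 1.33 is generally considered capable.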

Calculating these indices requires estimating the process mean and standard deviation from a sufficiently large sample of data. The results are only meaningful if the process is stable (in statistical control) and its output is approximately normally distributed. A Cp or Cpk less than 1 indicates the process is not capable of meeting the specifications. Understanding the capability indices supports informed decisions on process improvements, such as reducing variation to improve quality. For example, if Cp is acceptable but Cpk is low, the process is off-center, and shifting the mean toward the target is the priority. If Cp itself is low, the variation must be reduced.
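Both indices follow directly from their definitions, as the short sketch below shows. It is a simplification: the function name and the shaft-diameter data are hypothetical, and σ is estimated from the overall sample standard deviation. Textbook Cp and Cpk use a within-subgroup estimate of σ (for example, R̄/d2). Indices computed from the overall σ are often reported as Pp and Ppk instead.

```python
import statistics


def capability_indices(samples, lsl, usl):
    """Return (Cp, Cpk) from the sample mean and overall sample standard deviation.

    Assumption: overall sigma is used for simplicity; with a within-subgroup
    sigma estimate these become the classical Cp/Cpk.
    """
    mu = statistics.mean(samples)
    sigma = statistics.stdev(samples)
    cp = (usl - lsl) / (6 * sigma)  # spread only
    cpk = min(usl - mu, mu - lsl) / (3 * sigma)  # spread and centering
    return cp, cpk


# Hypothetical shaft diameters (mm) against a 10.0 +/- 0.3 mm specification.
shaft_diameters = [9.98, 10.02, 10.05, 9.97, 10.01, 10.03, 9.99, 10.04]
cp, cpk = capability_indices(shaft_diameters, lsl=9.7, usl=10.3)
print(f"Cp = {cp:.2f}, Cpk = {cpk:.2f}")
```

Comparing the two numbers shows how much capability is lost to off-center operation, since Cp − Cpk grows as the mean moves away from the midpoint of the specification.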

Q 24. How do you handle batch process control challenges?

Batch processes present unique control challenges due to their discrete nature and the variability introduced between batches. Addressing these requires a multi-faceted approach.

- Robust Recipe Development: Careful recipe design is crucial to minimize batch-to-batch variation. This includes thorough testing and optimization of the recipe parameters.

- Real-Time Monitoring and Control: Implementing advanced sensors and control strategies to monitor critical process parameters during each batch is essential. This allows for adjustments during the batch, minimizing deviations from the target.

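- Statistical Process Control (SPC) for Batches: Applying SPC techniques like control charts to batch data helps identify trends and deviations. However, standard charts usually need adaptation. Variables within a batch follow a time-varying trajectory rather than a constant mean, so limits are often set around a reference trajectory or applied to batch-level summaries such as end-of-batch quality.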

- Multivariate Statistical Process Control (MSPC): MSPC is particularly beneficial for batch processes with multiple interacting variables. It can detect subtle patterns and correlations between variables that might indicate a problem.

- Batch Data Analysis: Sophisticated data analysis techniques are essential to understand the sources of variation between batches and identify potential improvements. This might involve principal component analysis (PCA) or other multivariate methods.
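MSPC and PCA are often combined in a batch-wise unfolded PCA model. The NumPy sketch below assumes that historical data from good batches are stored as an array of shape (batches, time steps, variables) and that all batches are already aligned to the same length. The function names and the simulated temperature and pressure profiles are hypothetical. Each new batch gets two statistics: Hotelling's T², which measures variation within the model, and SPE (also called Q), which measures variation the model cannot explain. Control limits for these statistics are omitted here. They are typically derived from F and chi-squared approximations or from empirical percentiles of the reference batches.

```python
import numpy as np


def fit_batch_pca(batches, n_components=2):
    """Fit PCA to batch-wise unfolded reference data of shape (n_batches, n_times, n_vars)."""
    X = batches.reshape(batches.shape[0], -1)  # one row per batch
    mean = X.mean(axis=0)
    std = X.std(axis=0, ddof=1)
    std[std == 0] = 1.0  # guard against constant columns
    Z = (X - mean) / std  # autoscale every time/variable column
    _, s, vt = np.linalg.svd(Z, full_matrices=False)
    loadings = vt[:n_components].T  # shape (n_times * n_vars, n_components)
    score_var = s[:n_components] ** 2 / (Z.shape[0] - 1)  # variance of each score
    return {"mean": mean, "std": std, "P": loadings, "score_var": score_var}


def batch_statistics(model, batch):
    """Return (T2, SPE) for a single batch of shape (n_times, n_vars)."""
    z = (batch.reshape(-1) - model["mean"]) / model["std"]
    t = z @ model["P"]  # scores of this batch
    t2 = float(np.sum(t ** 2 / model["score_var"]))
    residual = z - t @ model["P"].T  # part not explained by the model
    spe = float(residual @ residual)
    return t2, spe


# Hypothetical reference set: 30 batches, 50 time steps, temperature and pressure.
rng = np.random.default_rng(0)
profile = np.stack([np.linspace(20, 80, 50), np.linspace(1.0, 3.0, 50)], axis=1)
good = profile + rng.normal(0.0, [0.5, 0.05], size=(30, 50, 2))
model = fit_batch_pca(good)

faulty = profile + rng.normal(0.0, [0.5, 0.05], size=(50, 2))
faulty[30:, 0] += 5.0  # temperature runs 5 degrees high late in the batch
print(batch_statistics(model, faulty))
```

The late temperature excursion shows up mainly in SPE, because it breaks the correlation structure learned from the good batches rather than moving the batch along a known direction of variation.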

For example, in a pharmaceutical batch process, real-time monitoring of temperature and pressure, combined with analysis of the final product’s characteristics, helps identify variations and correct issues throughout the process and in subsequent batches.

Q 25. Explain your experience with real-time data acquisition and processing.

My experience encompasses the entire lifecycle of real-time data acquisition and processing. I’ve worked extensively with various data acquisition systems, including programmable logic controllers (PLCs), distributed control systems (DCS), and industrial sensors. I’m proficient in selecting appropriate hardware based on the application requirements, connecting and configuring the hardware, and writing software for data acquisition and processing.

My expertise extends to various software technologies, including SCADA systems, historians, and database management systems. I’ve handled large volumes of data, applying techniques like data filtering, smoothing, and signal processing to improve data quality and reduce noise. I’m experienced in building real-time data pipelines to ensure data integrity and efficient processing. This often involves handling issues like data synchronization, data validation, and error handling in real-time environments. For example, I once implemented a real-time system for a chemical plant that monitored over 1000 process variables, alerting operators to critical deviations and archiving the data for historical analysis.
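The filtering and validation steps mentioned above can be illustrated with a conditioning stage for a single tag. This is a minimal sketch that assumes readings arrive one at a time. It rejects out-of-range values, rejects implausible jumps with a rate-of-change check, and applies a first-order exponential filter to accepted values. The class name, quality labels, and parameters (range, maximum step, and filter constant alpha) are hypothetical. Real historians and DCSs have their own quality-code conventions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reading:
    raw: float
    quality: str  # hypothetical labels: "GOOD", "BAD_RANGE", "BAD_RATE"
    filtered: Optional[float]  # most recent filtered value from accepted data


class SignalConditioner:
    def __init__(self, lo, hi, max_step, alpha):
        self.lo, self.hi = lo, hi  # plausible instrument range
        self.max_step = max_step  # largest credible change between samples
        self.alpha = alpha  # filter constant, 0 < alpha <= 1
        self._last_good = None
        self._filtered = None

    def process(self, raw):
        if not (self.lo <= raw <= self.hi):
            return Reading(raw, "BAD_RANGE", self._filtered)
        if self._last_good is not None and abs(raw - self._last_good) > self.max_step:
            # A genuine step larger than max_step is also rejected here; production
            # code usually accepts it after several consistent samples.
            return Reading(raw, "BAD_RATE", self._filtered)
        self._last_good = raw
        if self._filtered is None:
            self._filtered = raw
        else:
            # First-order (exponential) low-pass filter.
            self._filtered = self.alpha * raw + (1 - self.alpha) * self._filtered
        return Reading(raw, "GOOD", self._filtered)


tag = SignalConditioner(lo=0.0, hi=200.0, max_step=10.0, alpha=0.5)
for value in [100.0, 104.0, 500.0, 150.0, 103.0]:
    print(tag.process(value))
```

Rejected samples carry the last good filtered value forward along with a bad-quality flag. Downstream logic can then decide whether to hold, alarm, or substitute the value instead of silently consuming a spike.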

Q 26. What is your experience with fault detection and diagnosis techniques?

My experience with fault detection and diagnosis (FDD) techniques is extensive. I’ve applied various methods, both model-based and data-driven, to identify and diagnose faults in complex processes. Model-based approaches involve developing a mathematical model of the process and comparing its predictions to actual measurements to detect deviations.
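A model-based check can be as simple as running a process model in parallel with the plant and watching the residual. The sketch below assumes a hypothetical first-order discrete model, y[k] = a·y[k−1] + b·u[k−1], that has already been identified. It declares a fault only when the residual exceeds a threshold for several consecutive samples, a common way to avoid nuisance alarms caused by noise. The function name, model coefficients, and data are illustrative.

```python
def detect_residual_fault(u, y_meas, a, b, y0, threshold, persistence=3):
    """Return the first sample index at which a fault is declared, or None.

    The model is simulated from y0 using only the inputs u (a parallel,
    output-error model), so a persistent bias in y_meas is not absorbed.
    """
    y_model = y0
    count = 0
    for k, y in enumerate(y_meas):
        if k > 0:
            y_model = a * y_model + b * u[k - 1]
        residual = y - y_model
        count = count + 1 if abs(residual) > threshold else 0
        if count >= persistence:
            return k
    return None


# Hypothetical data: the measurement picks up a +2.0 bias from sample 10 onward.
a, b = 0.9, 0.1
u = [1.0] * 30
y_true = [0.0]
for k in range(1, 30):
    y_true.append(a * y_true[-1] + b * u[k - 1])
y_meas = [y + (2.0 if k >= 10 else 0.0) for k, y in enumerate(y_true)]
print(detect_residual_fault(u, y_meas, a, b, y0=0.0, threshold=0.5))
```

A one-step-ahead predictor that feeds back the measured value would partly absorb a constant bias. That is why this sketch simulates the model from the inputs alone.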

Data-driven techniques, on the other hand, rely on analyzing historical process data to identify patterns associated with faults. These can include statistical process control (SPC), principal component analysis (PCA), and artificial neural networks (ANNs). I’ve successfully applied these to a variety of situations, including:

- Sensor fault detection: Using redundant sensors or statistical methods to detect inconsistencies and identify faulty sensors.

- Process equipment fault detection: Detecting equipment malfunctions such as pump failures or valve leaks using process variable deviations and model-based analysis.

- Process upset detection: Identifying significant deviations from normal operating conditions that may indicate an underlying problem.
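Sensor fault detection with redundant instruments often relies on median (mid-value) selection. This is a minimal sketch that assumes three or more sensors measure the same variable. The function name, tolerance, and readings are hypothetical. With only two sensors, a disagreement can be detected but not attributed to either one.

```python
import statistics


def vote_redundant(readings, tolerance):
    """Median-select a value from redundant sensors and flag outliers.

    Returns (validated_value, indices_of_suspect_sensors).
    """
    validated = statistics.median(readings)
    suspects = [i for i, r in enumerate(readings) if abs(r - validated) > tolerance]
    return validated, suspects


# Hypothetical triple-redundant temperature transmitters (degC).
print(vote_redundant([100.2, 99.9, 87.0], tolerance=2.0))  # -> (99.9, [2])
```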

The choice of FDD technique depends on factors like data availability, the complexity of the process, and the desired level of accuracy. A crucial aspect is integrating FDD with the overall process control system to provide timely alerts and enable automated responses to detected faults. For example, in a power plant, a timely diagnosis of a turbine fault can prevent significant damage and downtime.

Q 27. Describe your experience with implementing advanced analytics in process control.

I have significant experience implementing advanced analytics in process control, moving beyond traditional SPC techniques. This includes using machine learning (ML) and artificial intelligence (AI) for tasks like predictive maintenance, process optimization, and advanced process control.

Specific examples of my work include using:

- Predictive Maintenance: Developing ML models to predict equipment failures based on sensor data and historical maintenance records. This allows for proactive maintenance, reducing downtime and increasing efficiency. Example: Predicting the remaining useful life of a pump based on vibration and temperature data.

- Process Optimization: Using optimization algorithms to adjust process parameters in real-time, improving product quality, yield, and energy efficiency. Example: Optimizing the temperature and pressure profiles in a chemical reactor to maximize product yield.

- Advanced Process Control (APC): Implementing model predictive control (MPC) or other advanced control strategies, leveraging ML for model development and adaptation. This improves the robustness and performance of the control system. Example: Implementing MPC to control a complex distillation column, adapting to changes in feed composition and product demands.
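For the remaining-useful-life example, a simple baseline (not an ML model) is to fit a linear trend to a degradation indicator such as vibration RMS and extrapolate it to an alarm level. The sketch below assumes roughly linear degradation and uses a hypothetical alarm threshold, function name, and data set. Real RUL models typically use richer features and report uncertainty alongside the estimate.

```python
def estimate_rul(hours, vibration_rms, failure_threshold):
    """Estimate the hours until a linear trend in vibration reaches the threshold.

    Returns None when the fitted trend is flat or decreasing.
    """
    n = len(hours)
    mean_t = sum(hours) / n
    mean_v = sum(vibration_rms) / n
    # Ordinary least-squares fit: vibration = intercept + slope * hours
    sxx = sum((t - mean_t) ** 2 for t in hours)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(hours, vibration_rms))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_v - slope * mean_t
    hours_at_threshold = (failure_threshold - intercept) / slope
    # Remaining life is measured from the most recent observation.
    return max(0.0, hours_at_threshold - hours[-1])


# Hypothetical pump data: operating hours and vibration RMS (mm/s).
hours = [0, 250, 500, 750, 1000]
rms = [2.1, 2.4, 2.8, 3.1, 3.5]
print(estimate_rul(hours, rms, failure_threshold=7.1))
```

A baseline like this is useful for judging whether a more complex ML model actually adds predictive value.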

Implementing advanced analytics often involves careful data preprocessing, model selection, validation, and integration with existing control systems. A crucial aspect is ensuring the explainability and trustworthiness of the models to build confidence among operators and stakeholders.

Q 28. How do you stay up-to-date with the latest advancements in process monitoring and control?

Staying current in the rapidly evolving field of advanced process monitoring and control requires a multi-pronged approach.

- Professional Development: I actively participate in conferences, workshops, and training courses to learn about the latest advancements in techniques, technologies, and best practices. This includes attending industry-specific events and participating in online learning platforms.

- Publications and Journals: I regularly read leading journals and publications in the field, including IEEE Transactions on Control Systems Technology, Journal of Process Control, and others. This allows me to keep abreast of the latest research and innovations.

- Networking and Collaboration: I maintain a strong network of colleagues and professionals in the field, engaging in discussions and collaborations to exchange knowledge and insights. This includes attending industry events and participating in online forums and communities.

- Industry News and Trends: I actively follow industry news and trends through websites, newsletters, and other sources. This allows me to track emerging technologies and their impact on process monitoring and control.

Continuous learning is crucial in this field; the landscape is constantly changing with advancements in AI, machine learning, and data analytics. By combining various methods, I can effectively stay informed about the latest developments and integrate them into my work.

Key Topics to Learn for Advanced Process Monitoring and Control Interview

- Process Dynamics and Modeling: Understanding process behavior, developing dynamic models (e.g., transfer functions, state-space models), and analyzing model accuracy and limitations.

- Advanced Control Strategies: Familiarity with PID control tuning methods, model predictive control (MPC), and other advanced control algorithms; understanding their strengths, weaknesses, and applicability in different process scenarios.

- Data Acquisition and Analysis: Proficiency in collecting, processing, and analyzing process data using various techniques (e.g., statistical process control, signal processing); identifying trends, anomalies, and potential process improvements.

- Process Optimization and Improvement: Applying control strategies to optimize process efficiency, reduce waste, and enhance product quality; experience with techniques such as design of experiments (DOE) and root cause analysis.

- Real-time Monitoring and Alerting Systems: Designing and implementing systems for real-time process monitoring, anomaly detection, and automated alerts; familiarity with SCADA systems and industrial communication protocols.

- Safety and Reliability: Understanding safety instrumented systems (SIS), process safety management (PSM), and the importance of robust control systems in maintaining safe and reliable operations.

- Software and Tools: Proficiency in relevant software packages for process simulation, data analysis, and control system design.

- Troubleshooting and Problem-Solving: Experience in diagnosing and resolving process control issues, employing systematic troubleshooting methodologies to pinpoint root causes and implement effective solutions.

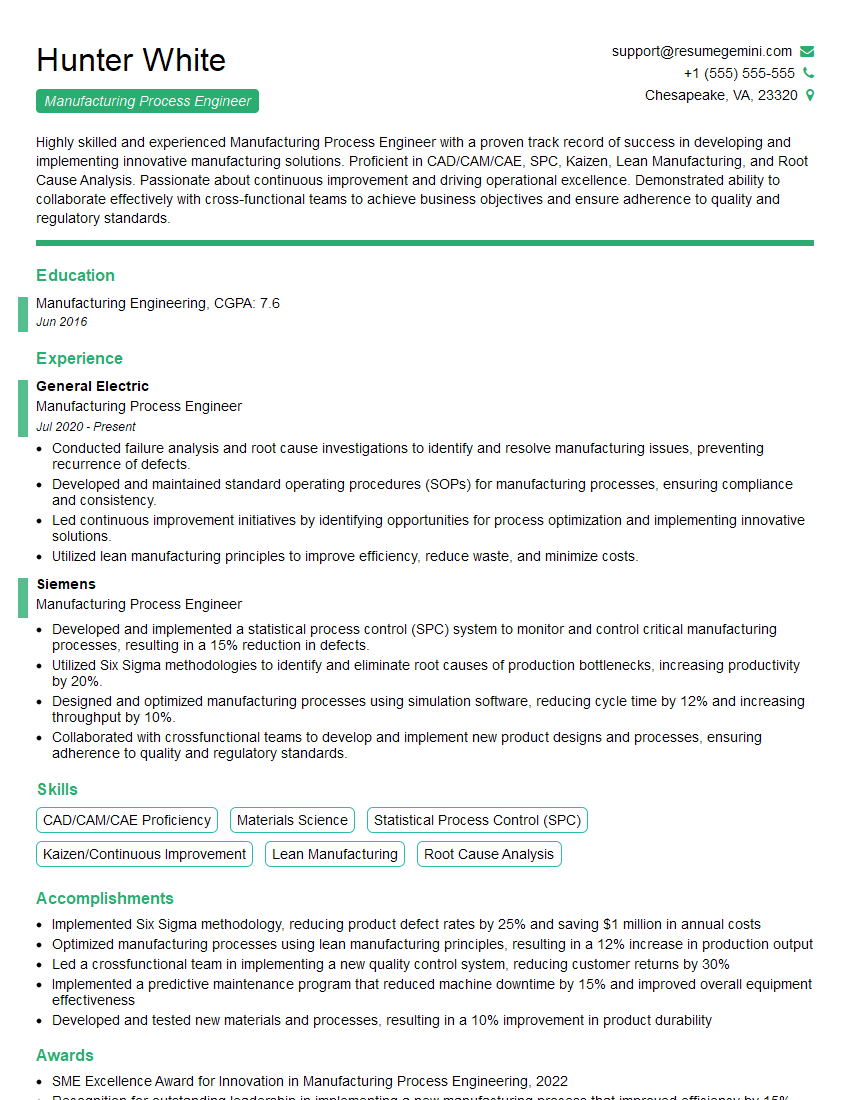

Next Steps

Mastering Advanced Process Monitoring and Control opens doors to exciting career opportunities and significantly enhances your value in the industry. A strong understanding of these concepts demonstrates your ability to optimize processes, improve efficiency, and ensure safety—highly sought-after skills in today’s competitive market. To maximize your job prospects, crafting an ATS-friendly resume is crucial. ResumeGemini is a trusted resource to help you build a professional and impactful resume that highlights your skills and experience effectively. We offer examples of resumes tailored specifically to Advanced Process Monitoring and Control roles to guide you in creating a winning application.
