Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Artifact Analysis and Interpretation interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Artifact Analysis and Interpretation Interview

Q 1. Explain the process of identifying and classifying digital artifacts.

Identifying and classifying digital artifacts is the cornerstone of any digital forensic investigation. It’s a systematic process that begins with data acquisition – securely copying the relevant digital media (hard drives, phones, etc.) – and then moves to identification and categorization. We use a combination of automated tools and manual analysis to achieve this.

The Process:

- Data Acquisition: Creating a forensic image of the original device is crucial to preserve the integrity of evidence. We use tools like FTK Imager or EnCase to create bit-by-bit copies.

- Initial Triage: A quick overview of the data using tools like Autopsy or The Sleuth Kit helps identify the types of files and potential areas of interest. This guides further investigation.

- Artifact Identification: This involves using various techniques to locate specific artifacts, depending on the investigation’s goals. This may include searching for specific file types (e.g., .docx, .pdf, .jpg), registry entries (in Windows), metadata (within images or documents), or database entries.

- Classification: Once identified, artifacts are classified based on their relevance to the investigation. For example, a photograph might be classified as ‘evidence of a crime scene,’ while a deleted email could be classified as ‘potential communication related to the crime.’ We often employ a pre-defined taxonomy or create one specific to the case.

Example: In a fraud investigation, we might identify and classify bank transaction records, email communications, and spreadsheet files as financial records, communication logs, and potential evidence of manipulation, respectively. This classification allows us to prioritize analysis and reporting.
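
As a rough illustration of the identification and classification steps above, the sketch below identifies a file by its leading "magic" bytes rather than its extension and then maps the detected type to a category. The signature list is deliberately tiny, and the `TAXONOMY` mapping is a hypothetical case taxonomy, not a standard one.

```python
from pathlib import Path

# Leading "magic" bytes for a few common formats (illustrative, not exhaustive).
SIGNATURES = {
    b"%PDF-": "pdf",
    b"\xff\xd8\xff": "jpeg",
    b"\x89PNG\r\n\x1a\n": "png",
    b"PK\x03\x04": "zip-container",  # .docx/.xlsx are ZIP archives too
}

# Hypothetical case taxonomy: detected type -> investigative category.
TAXONOMY = {
    "pdf": "document",
    "zip-container": "document-or-archive",
    "jpeg": "image",
    "png": "image",
}


def identify(header: bytes) -> str:
    """Return a type label based on the first bytes of a file."""
    for magic, kind in SIGNATURES.items():
        if header.startswith(magic):
            return kind
    return "unknown"


def classify(path: Path) -> tuple[str, str]:
    """Read the file header and return (detected type, category)."""
    with path.open("rb") as fh:
        kind = identify(fh.read(16))
    return kind, TAXONOMY.get(kind, "unclassified")
```

Checking content instead of the extension means a PNG renamed to `notes.txt` is still reported as an image, which is one reason signature checks are a common first pass.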

Q 2. Describe different types of digital artifacts and their significance in investigations.

Digital artifacts come in many forms, each with unique investigative significance. Think of them as puzzle pieces: each one contributes to the overall picture.

- System Logs: These records of system activity (e.g., Windows Event Logs, Linux syslog) are invaluable for reconstructing events and identifying unusual activity. They can show login attempts, file access, program execution, and network connections.

- User Data: This includes files created by users, such as documents, emails, images, and videos. This data often directly reflects the user’s activities and intentions.

- Metadata: Hidden information embedded within files, such as creation dates, modification times, author information, and GPS coordinates, can provide critical context and corroborate other evidence. Think of it as the ‘behind-the-scenes’ information.

- Database Files: These structured data stores contain vital information depending on the database application. For example, in a financial institution, the database of transactions will be central to investigation.

- Deleted Files: Even deleted files often leave remnants on storage media, which can be recovered using specialized tools. These deleted artifacts can reveal crucial information that the user tried to hide.

- Network Artifacts: Data related to network connections, such as router logs, network traffic captures (pcap files), and DNS logs, provide valuable insights into online activity.

Significance: The significance varies depending on the case. In a cybercrime investigation, network artifacts may be most crucial, while in a murder investigation, user data like photos or videos could be more significant. The context and the goal of the investigation determine the relative importance of each artifact type.

Q 3. How do you ensure the integrity and authenticity of collected digital artifacts?

Ensuring the integrity and authenticity of collected digital artifacts is paramount. It’s about building a chain of custody and proving that the evidence hasn’t been tampered with.

- Hashing: We use cryptographic hash functions such as SHA-256 to generate a digital fingerprint of the acquired data. MD5 still appears in older workflows, but it is vulnerable to collisions and is best paired with, or replaced by, a stronger hash. Recomputing the hash later verifies that the data hasn’t been altered after acquisition. We document the hash value at each stage of the process.

- Write-Blocking Devices: Hardware or software write-blocking devices prevent any changes to the original media during acquisition, ensuring that the data remains untouched.

- Chain of Custody: Meticulous documentation of every step of the process, from acquisition to analysis, is critical. This detailed record ensures that the evidence’s path is traceable and accountable, preventing disputes regarding its authenticity.

- Forensic Imaging: Creating bit-by-bit copies (forensic images) instead of simply copying files ensures that even deleted or fragmented data is preserved.

- Validation: Regularly validate tools and processes to ensure reliability and accuracy.

Example: I recently worked on a case involving a compromised server. Before accessing the server’s hard drive, I created a forensic image, documented its SHA-256 hash value, and used a write-blocking device. After analysis, I recalculated the hash to confirm that the image hadn’t been altered.
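
A minimal sketch of the hash-then-verify step described above, using Python's standard `hashlib`. The function names and the chunk size are illustrative choices; forensic suites normally compute and record these hashes for you.

```python
import hashlib


def sha256_file(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large images do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_image(path, recorded_hash):
    """Return True if the file still matches the hash recorded at acquisition."""
    return sha256_file(path) == recorded_hash.strip().lower()
```

The recorded hash would come from the acquisition notes; a `False` result means the working copy no longer matches what was acquired.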

Q 4. What are the common challenges encountered during artifact analysis?

Artifact analysis presents various challenges, some stemming from the sheer volume of data, others from the complexity of the technology involved.

- Data Volume and Complexity: Modern systems generate massive amounts of data, making analysis time-consuming and resource-intensive. Dealing with fragmented files and encrypted data adds further complexity.

- Data Hiding Techniques: Malicious actors employ sophisticated techniques to hide artifacts, like steganography (hiding data within images or other files). Countering them often calls for recovery techniques such as data carving (recovering files from unallocated space).

- Data Volatility: Some artifacts (like RAM contents) are volatile and disappear when the system is powered off, requiring immediate acquisition and analysis.

- Encrypted Data: Encountering encrypted files, drives, or databases presents an obstacle. Accessing encrypted data requires specialized tools and often legal procedures.

- Technological Advancements: Keeping up-to-date with evolving technologies and new data formats is an ongoing challenge.

- Legal and Ethical Considerations: Ensuring the investigation adheres to all legal and ethical guidelines is of paramount importance.

Example: In a recent investigation, we encountered a large amount of encrypted data on a suspect’s computer. Cracking the encryption required specialized tools and a significant amount of time, demonstrating the challenge of analyzing encrypted data.

Q 5. Explain your experience with specific forensic tools used for artifact analysis.

My experience encompasses a range of forensic tools tailored to different needs.

- EnCase: A comprehensive digital forensics platform providing tools for imaging, data recovery, and artifact analysis. Its ability to handle large datasets and its advanced search capabilities make it very valuable.

- Autopsy: An open-source digital forensics platform built on The Sleuth Kit. Autopsy is particularly useful for its ability to quickly scan and analyze large volumes of data. Its intuitive interface facilitates efficient investigation.

- FTK Imager: A reliable tool for creating forensic images of storage media, ensuring data integrity and preserving evidence. It supports a variety of file systems.

- Volatility: Specifically designed for analyzing volatile memory (RAM), Volatility extracts information about running processes, network connections, and other details that are often crucial in live system analysis.

- AccessData Forensic Toolkit (FTK): This is a powerful and versatile tool providing various features for artifact analysis and evidence management.

Example: In one case, I used Volatility to analyze RAM from a suspect’s computer, identifying running processes and network connections that were crucial for piecing together their actions. In another, I used EnCase to analyze a hard drive image, extracting and analyzing email data to build a timeline of events.

Q 6. How do you prioritize the analysis of numerous artifacts in a time-constrained environment?

Prioritizing artifact analysis in a time-constrained environment demands a strategic approach. It’s less about analyzing everything and more about analyzing the *right things* first.

- Prioritization Matrix: I often use a matrix that considers the potential evidentiary value of an artifact (high, medium, low) and its ease of analysis (high, medium, low). High-value, easily analyzed artifacts are prioritized first.

- Investigation Objectives: Staying focused on the specific investigative questions helps eliminate irrelevant artifacts and guides the analysis towards the most pertinent information. Think of it like a detective narrowing down suspects; we do the same with artifacts.

- Automated Tools: Leveraging automated tools to initially filter and categorize large data sets can significantly accelerate analysis. This allows for faster triage and identification of high-priority targets.

- Timeboxing: Allocating specific time blocks to each analysis task ensures efficient time management and keeps the investigation moving forward.

- Collaboration and Resource Allocation: In complex investigations, coordinating with other team members allows for efficient distribution of tasks, leveraging diverse expertise and reducing analysis time.

Example: In a child exploitation case, I’d prioritize the analysis of images and videos first (high value, relatively easy analysis), followed by email and chat logs (medium value and complexity), with potentially less relevant artifacts (e.g., browsing history) handled last, if at all.
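
The prioritization matrix can be sketched as a simple sort. This is one possible encoding, assuming each artifact is rated `low`, `medium`, or `high` for evidentiary value and for ease of analysis; the `Artifact` class and the ranking rule (value first, ease as tie-breaker) are illustrative assumptions.

```python
from dataclasses import dataclass

LEVELS = {"low": 1, "medium": 2, "high": 3}


@dataclass
class Artifact:
    name: str
    value: str  # evidentiary value: low / medium / high
    ease: str   # ease of analysis: low / medium / high


def priority(artifact):
    """Rank by evidentiary value first, then by ease of analysis."""
    return (LEVELS[artifact.value], LEVELS[artifact.ease])


def triage_order(artifacts):
    """Return artifacts with the highest-priority items first."""
    return sorted(artifacts, key=priority, reverse=True)
```

In practice the ratings are a judgment call made per case; the code only makes the resulting order explicit and repeatable.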

Q 7. Describe your approach to analyzing volatile and non-volatile memory.

Analyzing volatile and non-volatile memory requires different techniques and tools.

Volatile Memory (RAM): Volatile memory is the computer’s short-term memory, and its contents are lost when the power is turned off. Analyzing RAM requires specialized tools and a quick response. The main goal is to capture the RAM’s contents before it’s lost.

- Memory Acquisition: We create a memory image with tools like FTK Imager or a dedicated memory acquisition device. This has to happen while the system is still running, because the contents are lost at power-off, and with as small a footprint as possible so the acquisition itself overwrites little evidence.

- Memory Analysis: Then, tools like Volatility are used to analyze the memory image, looking for evidence of running processes, network connections, recently opened files, and other crucial data. This analysis helps reconstruct the system’s state at the time of acquisition.

Non-Volatile Memory (Hard Drives, SSDs): Non-volatile memory retains data even when the power is turned off. The analysis is generally less time-sensitive, focusing on extracting data using various techniques.

- Data Acquisition: We use write-blocking devices and forensic imaging tools like EnCase or FTK Imager to create exact bit-stream copies.

- Data Recovery: We use tools to recover deleted files and fragments of data, potentially revealing hidden or erased information.

- File System Analysis: Analyzing the file system structure helps to identify files, directories, and other relevant data structures.

- Data Carving: Techniques like data carving can be used to recover files even if the file system metadata is corrupted or missing.

Example: A live system analysis might involve capturing RAM to identify the source of a malware infection and then analyzing the hard drive to find the infection vector and any further malicious activity. The difference in methodology reflects the volatility of the data.

Q 8. How do you handle encrypted files and data during artifact analysis?

Encountering encrypted files is commonplace in artifact analysis. My approach is multifaceted and depends on the type of encryption and the investigation’s scope. First, I identify the encryption method used: is it full-disk encryption (like BitLocker or FileVault), file-level encryption (such as PGP-encrypted files or AES-encrypted archives), or something else? This informs the next steps.

If the encryption key is readily available (e.g., through a password obtained legally), I’ll decrypt the files using appropriate tools and proceed with standard analysis. However, if the key is unknown, I might explore techniques like:

- Brute-force attacks: Only attempted if legally permissible and if the encryption method is weak or the password is short. This is resource-intensive and time-consuming.

- Dictionary attacks: Using lists of common passwords to guess the key. Effectiveness depends on the strength of the password.

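- Rainbow table attacks: Pre-computed tables that speed up the recovery of passwords from unsalted hashes. They help only when a password hash is available and are ineffective against salted hashes or modern key-derivation functions. Again, legality and ethical considerations are paramount.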

- Analyzing metadata: Even encrypted files can contain metadata that might offer clues, such as file creation timestamps or author information.

In situations where decryption is impossible or infeasible, I document the encrypted files’ existence, their file sizes, timestamps, and any other relevant metadata. This information, though incomplete, can still contribute to the overall picture.

For example, I once analyzed a case where the suspect’s laptop used BitLocker. While initially I couldn’t decrypt the drive, metadata analysis revealed the creation dates of several encrypted files which, when correlated with other evidence, proved crucial in establishing a timeline.

Q 9. Explain your understanding of data recovery techniques.

Data recovery is the process of retrieving data from damaged, corrupted, or deleted storage media. My understanding encompasses both logical and physical data recovery methods.

Logical data recovery involves recovering data through software techniques. This is usually applicable when the data’s structure is intact but inaccessible due to file system corruption, accidental deletion, or logical errors. Tools like Recuva or PhotoRec scan the storage device and recover files from leftover file system records or, when those are gone, from their file signatures.

Physical data recovery involves working at the hardware level, often requiring specialized tools and clean-room conditions. This is necessary when the storage medium itself is physically damaged, such as a hard drive with a failing head or a damaged SSD. Techniques include surface scans to find viable sectors and data reconstruction from damaged magnetic platters.

The process usually begins with imaging the drive to avoid further damage. Then, specific tools are used to analyze the raw data, identify file signatures, and reconstruct the file system. Success depends heavily on the extent of damage and the chosen tools.

For example, recovering deleted files from a user’s Recycle Bin is a simple case of logical recovery. On the other hand, extracting data from a physically damaged hard drive requires advanced physical data recovery techniques and specialized equipment.
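
To make signature-based recovery concrete, here is a deliberately naive carving sketch for JPEG data in a raw byte buffer. It assumes files are stored contiguously and simply pairs each start-of-image marker with the next end-of-image marker; real carvers such as PhotoRec handle fragmentation, embedded thumbnails, and many more formats. The `max_size` limit is an arbitrary illustrative value.

```python
JPEG_SOI = b"\xff\xd8\xff"  # start-of-image marker plus first marker byte
JPEG_EOI = b"\xff\xd9"      # end-of-image marker


def carve_jpegs(raw: bytes, max_size: int = 10 * 1024 * 1024):
    """Return (offset, data) pairs for candidate JPEGs found in raw bytes."""
    carved = []
    pos = 0
    while True:
        start = raw.find(JPEG_SOI, pos)
        if start == -1:
            break
        end = raw.find(JPEG_EOI, start + len(JPEG_SOI))
        if end == -1:
            break
        end += len(JPEG_EOI)
        if end - start <= max_size:
            carved.append((start, raw[start:end]))
            pos = end
        else:
            # Implausibly large candidate: skip this header and keep scanning.
            pos = start + 1
    return carved
```

Recording the offset alongside the bytes keeps each carved file traceable back to its position in the image.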

Q 10. How do you correlate findings from different artifacts to build a comprehensive picture?

Correlating findings from diverse artifacts is essential for building a complete narrative. It’s like piecing together a puzzle; each artifact provides a piece of the picture. I employ several techniques:

- Timeline analysis: Ordering events chronologically by analyzing timestamps from various artifacts (log files, emails, file system metadata). This establishes a sequence of events.

- Cross-referencing: Identifying common elements (IPs, filenames, usernames) across different artifacts to link activities and establish connections.

- Data pattern recognition: Looking for repeating patterns or anomalies in the data to identify suspicious activities or trends.

- Network analysis: If network traffic logs are available, these are correlated with other artifacts to understand communication patterns and data flows.

- Visualization tools: Using tools that allow for visual representation of relationships between artifacts helps simplify complex datasets and uncover hidden connections.

For example, in a malware investigation, correlating log file entries showing suspicious network connections with registry keys modified by malware and file system artifacts created by malware can provide a comprehensive understanding of the infection process and the attacker’s actions.
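
Timeline analysis and cross-referencing can be sketched in a few lines. The event layout here is an assumption made for illustration: each event is a dict with a timezone-aware `time`, a `source` label, and whatever fields the originating artifact provides.

```python
from collections import defaultdict


def build_timeline(*sources):
    """Merge events from several artifact sources into one chronological list."""
    events = [event for source in sources for event in source]
    return sorted(events, key=lambda event: event["time"])


def cross_reference(events, field):
    """Return values of `field` that appear in more than one source."""
    seen = defaultdict(set)
    for event in events:
        if field in event:
            seen[event[field]].add(event["source"])
    return {value: srcs for value, srcs in seen.items() if len(srcs) > 1}
```

Normalizing every timestamp to UTC before merging matters more than the sort itself, since logs from different systems often use different time zones.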

Q 11. How do you document your findings and create a detailed report?

Documentation and reporting are crucial for artifact analysis. My approach follows a structured format, ensuring clarity and reproducibility.

1. Chain of Custody: Meticulously documented to ensure the integrity of evidence. Every step, from acquisition to analysis, is recorded.

2. Artifact Inventory: A detailed list of all artifacts examined, including their location, hash values (for integrity verification), and any associated metadata.

3. Analysis Methodology: A clear description of the tools and techniques used, justifications for choices, and limitations of the approach. This enables verification and validation.

4. Findings Summary: A concise overview of the key findings, presented in a logical order, including supporting evidence from artifacts.

5. Detailed Analysis: Provides specific details about each artifact, including screenshots, code snippets (formatted as code), and interpretations. Any anomalies or suspicious activities are thoroughly explained.

6. Conclusions: A summary of the overall findings and their implications, with a clear and concise answer to the investigation's initial questions.

7. Appendices: Includes supplementary materials such as raw data extracts, scripts used, and tool documentation.

The report is formatted professionally (often as a PDF) to ensure it's easily understood and can be presented in court, if necessary. The style and language are adjusted to suit the audience, whether it's law enforcement, management, or a technical team.

Q 12. Explain your understanding of legal and ethical considerations in artifact analysis.

Legal and ethical considerations are paramount in artifact analysis. My work is always guided by the principles of legality, integrity, and privacy.

Legality: I strictly adhere to all relevant laws and regulations, including search warrants, subpoenas, and data privacy laws. I only access data for which I have legal authorization. I am aware of the potential legal ramifications of my work and ensure my actions are always within legal bounds.

Privacy: I respect the privacy rights of individuals. I only access and analyze data that's legally permissible and necessary for the investigation. Any personally identifiable information (PII) is handled with extreme care and is anonymized whenever possible.

Integrity: I maintain the integrity of the evidence. I use secure and validated methods to acquire, handle, and analyze data. I document everything meticulously to create an auditable trail.

Professionalism: I act with professionalism and maintain objectivity in my analysis. I avoid any bias or preconceived notions and focus solely on the evidence.

For instance, I would never access data without a proper warrant or consent. I also carefully document all my actions to ensure that the process is transparent and repeatable. Any breaches of privacy or ethical issues are reported immediately to the appropriate authorities.

Q 13. Describe your experience with network forensics and analyzing network traffic logs.

Network forensics and the analysis of network traffic logs are critical components of my skillset. I use various tools and techniques to analyze network data, including packet captures (pcap files) and log files from routers, firewalls, and intrusion detection systems.

Packet Capture Analysis: I use tools like Wireshark to examine individual network packets. This allows for a detailed examination of network traffic, revealing communication patterns, protocols used, and the content of the data transmitted. I analyze this to identify malware communication, unauthorized access attempts, or data exfiltration.

Log File Analysis: I analyze logs from various network devices to identify suspicious activity, such as failed login attempts, unusual traffic patterns, or security alerts. The logs provide context to the packet capture data, offering insights into the source and destination of network communications.

Network Intrusion Detection: I understand the principles of intrusion detection and use the relevant tools to monitor and analyze network traffic for malicious activities. This includes identifying known attack signatures and anomalies that may indicate compromise.

For example, I've used Wireshark to identify a compromised machine that was communicating with a command-and-control server. Analyzing the network traffic revealed the malware’s communication protocol, data exfiltration techniques, and potentially the attacker's identity. Correlating this with logs from the firewall identified the compromised machine's IP address and helped us contain the breach.
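
Wireshark does the heavy lifting in practice, but it can help to know what a capture file looks like underneath. The sketch below reads packet records from a classic pcap file (microsecond timestamps) using only the standard library. It does not handle pcapng or the nanosecond variant, and the function name is illustrative.

```python
import struct

GLOBAL_HEADER_LEN = 24
RECORD_HEADER_LEN = 16


def read_pcap_records(data: bytes):
    """Yield (ts_sec, ts_usec, packet_bytes) from classic pcap file contents."""
    magic = data[:4]
    if magic == b"\xd4\xc3\xb2\xa1":
        endian = "<"  # written on a little-endian machine
    elif magic == b"\xa1\xb2\xc3\xd4":
        endian = ">"  # written on a big-endian machine
    else:
        raise ValueError("not a classic microsecond pcap file")
    offset = GLOBAL_HEADER_LEN
    while offset + RECORD_HEADER_LEN <= len(data):
        ts_sec, ts_usec, incl_len, _orig_len = struct.unpack_from(
            endian + "IIII", data, offset
        )
        offset += RECORD_HEADER_LEN
        yield ts_sec, ts_usec, data[offset:offset + incl_len]
        offset += incl_len
```

Each yielded packet is the raw link-layer frame; decoding Ethernet, IP, and TCP headers is a separate step that dedicated tools already do well.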

Q 14. How do you analyze log files from various operating systems and applications?

Log files are invaluable sources of information. My approach involves a systematic analysis of log files from various operating systems and applications, recognizing that the format and content vary significantly.

Understanding Log File Structure: I first determine the log file format (e.g., syslog, Event Viewer logs, Apache logs). This determines the tools and techniques needed for parsing and analysis. Tools like Splunk, ELK stack, or dedicated log analysis tools are commonly used.

Parsing and Filtering: After identifying the format, I use appropriate tools to parse the logs and extract relevant information. I often filter the logs based on timestamps, keywords, or specific events to focus on areas of interest. This reduces the amount of data needed for analysis.

Pattern Recognition: I look for patterns in the log files indicative of security breaches, errors, or system performance issues. This often involves identifying repeating events, unusual activity, or inconsistencies.

Correlation with other data: I correlate log file data with other artifacts (e.g., network logs, registry keys) to create a holistic picture. This can reveal connections between seemingly unrelated events.

For instance, analyzing Windows Event Viewer logs can reveal failed login attempts, which when correlated with network logs showing suspicious connections from particular IP addresses, would be a strong indicator of a potential compromise.
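
The parse, filter, and pattern-recognition steps can be sketched for one common format. This example assumes OpenSSH-style syslog lines containing `Failed password for ... from <ip>`; other log formats need their own patterns, and the threshold is an arbitrary illustrative value.

```python
import re
from collections import Counter

# Matches OpenSSH authentication failures as they appear in syslog.
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) from (?P<ip>\S+)"
)


def failed_logins_by_ip(lines):
    """Count failed SSH logins per source IP address."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group("ip")] += 1
    return counts


def flag_sources(counts, threshold=5):
    """Return source IPs with at least `threshold` failures, sorted."""
    return sorted(ip for ip, n in counts.items() if n >= threshold)
```

The flagged addresses are the values to carry over into the correlation step, for example by looking them up in firewall or proxy logs.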

Q 15. What are some common indicators of compromise (IOCs) you look for during an investigation?

Indicators of Compromise (IOCs) are clues that suggest a system has been compromised. Think of them as breadcrumbs left behind by an attacker. Finding them requires a systematic approach, examining various artifacts for anomalies. Common IOCs include:

Suspicious network connections: Unusual outbound connections to known malicious IP addresses or domains, especially those using unconventional ports or protocols. For instance, a system communicating with a known command-and-control server (C2) is a strong indicator.

Modified system files: Changes to critical system files like registry keys (in Windows), configuration files, or binaries, often timestamped suspiciously. Imagine finding a crucial system file with a modification time that doesn't align with any legitimate administrative action.

Unusual processes: The presence of unknown or unexpected processes running on the system, particularly those with unusual names or locations. This could involve processes attempting to hide themselves or communicate stealthily.

Registry key changes (Windows): Modifications to registry keys associated with startup programs, network settings, or security policies. These can point to persistent malware or backdoors.

Log file anomalies: Unusual entries in system, application, or security logs indicating failed login attempts, privilege escalation attempts, or data exfiltration. For example, an unusually large number of failed logins from unexpected geographic locations.

File hashes: Hash values (MD5, SHA1, SHA256) of suspicious files that match known malware signatures in threat intelligence databases.

Identifying these IOCs requires a combination of automated tools like SIEM systems and manual analysis of logs and system artifacts.
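
A minimal sketch of automated IOC matching, assuming observations have already been extracted from artifacts as `{"type", "value"}` dicts and that the IOC lists are stored as lowercase strings grouped by type. Both layouts are assumptions for illustration; SIEM platforms and threat intelligence feeds use their own schemas.

```python
def match_iocs(observations, iocs):
    """Return the observations whose value appears in the IOC set for its type."""
    hits = []
    for obs in observations:
        known_bad = iocs.get(obs["type"], set())
        # Normalize case so hex digests and domains compare reliably.
        if obs["value"].lower() in known_bad:
            hits.append(obs)
    return hits
```

A hit is a lead rather than a conclusion: shared hosting and reused IP addresses produce false positives, so matches still need manual review.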

Q 16. How do you handle artifacts from cloud-based environments?

Handling cloud artifacts presents unique challenges due to the distributed nature of cloud environments and the vast amount of data involved. My approach involves:

Identifying relevant cloud providers: First, determine which cloud services are in use (AWS, Azure, GCP, etc.).

Leveraging cloud APIs and logs: Cloud providers offer extensive APIs and logging services which are crucial. I would use these to retrieve virtual machine logs, network traffic data, security group configurations, and other relevant information. This is much more efficient than trying to manually gather data from individual machines.

Utilizing cloud forensics tools: Specialized tools can streamline the process of gathering and analyzing cloud data. These tools automate the collection and analysis of cloud artifacts, providing a more comprehensive view.

Data prioritization and filtering: Cloud environments generate massive amounts of data. Efficient investigation requires focusing on relevant data based on the nature of the incident. I would employ filtering techniques based on timestamps, IP addresses, or user activity.

Correlation across various cloud services: It's critical to correlate information across different cloud services (e.g., compute, storage, network) to get a holistic view of the attack.

For example, if investigating a data breach, I'd look at CloudTrail logs (AWS) or Activity logs (Azure) for suspicious API calls, storage access events, and network activity. Understanding the specific cloud architecture is key to successfully analyzing the artifacts.
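
As a sketch of the filtering step, the code below scans an exported CloudTrail log file (a JSON document with a top-level `Records` list) for a watch list of API calls. The watch list is an illustrative assumption; which calls count as suspicious depends on the environment and the incident.

```python
import json

# Illustrative watch list: calls that disable logging or change access.
WATCHED_EVENTS = {"StopLogging", "DeleteTrail", "PutBucketPolicy", "CreateAccessKey"}


def suspicious_calls(log_text, watched=WATCHED_EVENTS):
    """Return watched CloudTrail records in chronological order."""
    records = json.loads(log_text).get("Records", [])
    hits = [r for r in records if r.get("eventName") in watched]
    # eventTime is an ISO 8601 UTC string, so string order is time order.
    return sorted(hits, key=lambda r: r.get("eventTime", ""))
```

Fields such as `sourceIPAddress` and `userIdentity` on each returned record are then the natural pivots for correlation with other services.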

Q 17. Explain your understanding of the chain of custody principle.

The chain of custody principle is crucial in digital forensics. It's a documented, unbroken trail showing the chronological order of possession and handling of evidence from the moment it's collected until it's presented in court (or used internally as evidence). Think of it as a meticulous record of who touched what, when, and why. Maintaining this chain is essential for ensuring the integrity and admissibility of evidence.

Breaks in the chain can severely compromise the credibility of evidence. This can lead to questions about evidence tampering or accidental alteration. Maintaining chain of custody involves:

Detailed documentation: Recording every step of the process, including date, time, location, individual handling the evidence, and any actions performed.

Secure storage: Storing evidence in a secure, controlled environment to prevent unauthorized access or modification. Secure hashing is used to verify integrity.

Hashing and checksums: Creating unique hash values to ensure the integrity of evidence before and after analysis. Any changes will result in a different hash value.

Detailed logs: Maintaining detailed logs of every action performed on the evidence during analysis.

Evidence bags and seals: Using tamper-evident bags or seals to secure physical evidence.

If the chain of custody is not properly maintained, the evidence may be deemed inadmissible in court, which can seriously undermine the investigation.
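
One way to make a custody log tamper-evident is to chain its entries by hash, so that editing an earlier entry invalidates everything after it. This is a sketch of that idea only, not a standard or a required practice; the field names are hypothetical, and a real custody record also needs signatures and physical controls.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    """Hash an entry body in a stable, key-sorted JSON form."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def add_entry(log, timestamp, handler, action, evidence_sha256):
    """Append a custody entry linked to the previous entry's hash."""
    body = {
        "timestamp": timestamp,
        "handler": handler,
        "action": action,
        "evidence_sha256": evidence_sha256,
        "prev_hash": log[-1]["entry_hash"] if log else GENESIS,
    }
    log.append({**body, "entry_hash": _digest(body)})
    return log


def verify_log(log):
    """Return True if every entry is intact and correctly linked."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body["prev_hash"] != prev or _digest(body) != entry["entry_hash"]:
            return False
        prev = entry["entry_hash"]
    return True
```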

Q 18. Describe your experience with malware analysis and reverse engineering.

I have extensive experience in malware analysis and reverse engineering. My experience includes static and dynamic analysis techniques. Static analysis involves inspecting the malware without actually executing it. This includes examining the code structure, file headers, imports and exports, and string literals for clues about its functionality.

Dynamic analysis involves running the malware in a controlled environment (like a virtual machine or sandbox) to observe its behavior. This can reveal its actions, network connections, and interactions with the operating system. Reverse engineering takes this a step further by disassembling or decompiling the code to understand its logic and functionality in detail.

I'm proficient in using various tools like IDA Pro, Ghidra, and debuggers to analyze malware samples. For example, I once analyzed a sophisticated piece of ransomware that used advanced evasion techniques. Through a combination of static and dynamic analysis and reverse engineering, I was able to identify its encryption algorithm, command-and-control server, and payment mechanisms, which was instrumental in developing a decryption tool and mitigating the damage.

Q 19. How do you identify and analyze malicious code within artifacts?

Identifying and analyzing malicious code requires a careful and methodical approach. I usually start with the indicators of compromise described earlier, looking for unusual file types, file names, or behavior. Techniques include:

Static analysis: Examining the code without execution. This often involves using disassemblers (like IDA Pro or Ghidra) to understand the instructions. I look for suspicious function calls (e.g., functions related to network communication, file system access, or process manipulation). I also look for strings and constants that might indicate the malware's purpose or target.

Dynamic analysis: Executing the code in a safe environment (e.g., a virtual machine or sandbox) to observe its behavior. This helps reveal network connections, file system changes, and registry modifications. I use debuggers to step through the code, examine registers and memory, and set breakpoints to understand specific behaviors.

Sandboxing: Running the malware in a virtual machine to monitor its behavior without risking damage to the host system. This allows me to safely observe the malware's actions and collect evidence.

Using signature-based detection: Comparing the code's hash or other features with known malware signatures in databases. This can provide quick identification of known malware variants.

Heuristic analysis: Analyzing the code based on patterns and behaviors, rather than relying solely on signatures. This helps to detect new or unknown malware variants.

Once I have a good understanding of the code's behavior, I can determine its functionality and potential impact on the system. Context is critical; understanding the broader system environment is essential for proper interpretation.
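
String extraction is usually the first static-analysis step, and it can be sketched without any special tooling. The example below pulls printable ASCII runs out of a binary, similar in spirit to the Unix `strings` utility; it ignores UTF-16 strings, which Windows binaries often contain, and the minimum length of 4 is a conventional but arbitrary choice.

```python
import re


def extract_strings(data: bytes, min_len: int = 4):
    """Return printable ASCII runs of at least min_len characters."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [match.group().decode("ascii") for match in pattern.finditer(data)]


def find_urls(strings):
    """Pick out strings that look like HTTP or HTTPS URLs."""
    return [s for s in strings if re.match(r"https?://", s)]
```

Because nothing is executed, this is safe to run on a live sample, although packed or obfuscated malware may reveal very little until it is unpacked.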

Q 20. Explain your approach to analyzing mobile device artifacts.

Analyzing mobile device artifacts requires specialized tools and techniques due to the unique nature of mobile operating systems (iOS and Android). My approach involves:

Physical acquisition: Creating a forensic copy of the device's data using specialized hardware and software. This ensures the original device remains untouched.

Logical acquisition: Extracting data through the device's operating system, which typically involves accessing databases and file systems.

Using specialized forensic tools: Employing tools like Oxygen Forensic Detective, Cellebrite UFED, or MSAB XRY, designed specifically for mobile forensics. These tools simplify the process of extracting data, analyzing applications, and recovering deleted data.

Analyzing application data: Examining app databases, logs, and preferences for information relevant to the investigation. This might include browsing history, location data, communications logs, or data related to specific apps.

Analyzing system logs: Scrutinizing system logs for events, like logins, application installations, and network activity.

Cloud data integration: Many mobile devices sync data with cloud services (iCloud, Google Drive, etc.). Accessing and analyzing that data is critical for a complete picture.

Understanding the limitations and challenges, such as data encryption and device security features, is crucial. For example, analyzing an iOS device requires different techniques than an Android device, and overcoming device passcodes or encryption requires specialized knowledge.
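Many mobile apps keep their data in SQLite databases, so a short sketch of that step may help. It assumes a hypothetical `messages(sent_unix, sender, body)` table; real apps use their own schemas and timestamp formats, which have to be confirmed per app and version.

```python
import sqlite3
from datetime import datetime, timezone


def open_read_only(db_path: str) -> sqlite3.Connection:
    """Open a working copy of an app database without write access.

    Paths containing '?' or '#' would need URI escaping first.
    """
    return sqlite3.connect(f"file:{db_path}?mode=ro", uri=True)


def list_messages(conn: sqlite3.Connection) -> list[tuple[str, str, str]]:
    """Return (utc_timestamp, sender, body) rows in chronological order.

    Assumes the hypothetical messages(sent_unix, sender, body) table,
    with sent_unix stored as seconds since the Unix epoch.
    """
    rows = conn.execute(
        "SELECT sent_unix, sender, body FROM messages ORDER BY sent_unix"
    ).fetchall()
    return [
        (datetime.fromtimestamp(ts, tz=timezone.utc).isoformat(), sender, body)
        for ts, sender, body in rows
    ]
```

Opening the database read-only, and only ever on a copy extracted from the forensic image, keeps the query from altering the evidence.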

Q 21. How do you interpret metadata associated with digital artifacts?

Metadata, the data about data, is incredibly valuable in digital forensics. It provides context and clues that can help determine when, where, and how a file was created, modified, or accessed. Examples of metadata include:

File creation and modification times: These timestamps can help place events in a timeline and correlate activity with other events.

Author information: Document metadata might reveal the author's identity.

GPS coordinates (geotags): Images and videos often contain geotags indicating where they were captured.

Software used to create a file: The application used to create a document might provide valuable clues about the type of activity involved.

File size and type: These basic attributes can help identify unusual files or files containing large amounts of data.

I use specialized tools to extract and analyze metadata. Even seemingly insignificant details can be important. For instance, a photo's timestamp might help prove a suspect's alibi is false. It's also crucial to be aware of the limitations of metadata; it can be modified or deleted, and its accuracy depends on the application and settings used.
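For file system timestamps specifically, a minimal sketch using only the Python standard library might look like the following. The function name and dictionary keys are illustrative assumptions; dedicated forensic tools read these values from the image rather than from a mounted file.

```python
import os
from datetime import datetime, timezone


def file_times(path: str) -> dict:
    """Collect basic file system metadata with timestamps normalized to UTC."""
    st = os.stat(path)

    def to_utc(ts: float) -> str:
        return datetime.fromtimestamp(ts, tz=timezone.utc).isoformat()

    return {
        "size_bytes": st.st_size,
        "modified_utc": to_utc(st.st_mtime),
        "accessed_utc": to_utc(st.st_atime),
        # st_ctime is the metadata-change time on Unix-like systems and has
        # historically been the creation time on Windows.
        "changed_or_created_utc": to_utc(st.st_ctime),
    }
```

Normalizing everything to UTC makes it easier to correlate these values with timestamps from other sources when building a timeline.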

Q 22. Describe your experience with various file systems and their structures.

Understanding file systems is fundamental to artifact analysis. Different file systems organize data in unique ways, impacting how we recover and interpret evidence. I've worked extensively with NTFS (used in Windows), ext4 (common in Linux), APFS (Apple's file system), and FAT32 (an older, simpler file system). Each has its own metadata structure, which contains crucial information like file creation timestamps, modification times, and access times. For example, NTFS uses Master File Table (MFT) entries to store file information, while ext4 uses inodes. My experience involves navigating these structures using tools like Autopsy and The Sleuth Kit to extract files, recover deleted data, and reconstruct file system activity. A recent case involved recovering deleted emails from an NTFS drive, where understanding NTFS $I30 directory indexes (whose slack can retain entries for deleted files) was key to the successful recovery. The differences in how each file system handles slack space (the unused space between the end of a file's data and the end of its last allocated cluster) also greatly impact data recovery strategies.
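To make the slack space idea concrete, here is a small worked calculation. It assumes every file occupies whole clusters and uses a 4096-byte default cluster size; it ignores cases such as very small NTFS files stored inside the MFT record itself.

```python
def file_slack_bytes(file_size: int, cluster_size: int = 4096) -> int:
    """Bytes between the logical end of a file and the end of its last cluster."""
    if file_size < 0 or cluster_size <= 0:
        raise ValueError("file_size must be >= 0 and cluster_size must be > 0")
    remainder = file_size % cluster_size
    # A file that ends exactly on a cluster boundary leaves no slack.
    return 0 if remainder == 0 else cluster_size - remainder
```

A 10,000-byte file on 4096-byte clusters occupies three clusters (12,288 bytes), leaving 2,288 bytes of slack that may still hold remnants of earlier data.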

Q 23. How do you address data fragmentation and reconstruction during artifact analysis?

Data fragmentation, where a file's data is scattered across the disk, is a common challenge. Reconstruction involves identifying and reassembling these fragments. I use several methods. First, I analyze the file system metadata to identify the file's expected size and location. Then, I employ tools like Scalpel or Foremost to carve files based on their headers and footers. These tools look for known file signatures and generally assume the data between them is contiguous, so fragmented files often need manual reassembly or fragment-aware techniques. If the fragmentation is severe, I might examine unallocated and slack space for remaining data pieces. In a past case, a fragmented image file was crucial evidence. By carefully carving and reassembling the fragmented pieces, we were able to reveal hidden information within the image, ultimately solving the case. This process often requires meticulous attention to detail and a strong understanding of the file system's structure.
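Header and footer carving can be sketched in a few lines. This is a simplified illustration using the JPEG start and end markers, not how Scalpel or Foremost are implemented. It assumes each file is stored contiguously, so it will not reassemble fragmented files, and it can cut a file short when an embedded thumbnail contains its own end marker.

```python
JPEG_HEADER = b"\xff\xd8\xff"  # start-of-image marker plus the next marker byte
JPEG_FOOTER = b"\xff\xd9"      # end-of-image marker


def carve_jpegs(image: bytes, max_size: int = 10 * 1024 * 1024) -> list[tuple[int, bytes]]:
    """Return (offset, data) pairs for contiguous header..footer runs."""
    carved = []
    pos = 0
    while True:
        start = image.find(JPEG_HEADER, pos)
        if start == -1:
            break
        # Look for the footer only within max_size bytes of the header.
        end = image.find(JPEG_FOOTER, start + len(JPEG_HEADER), start + max_size)
        if end == -1:
            pos = start + 1  # no footer in range; keep scanning past this header
            continue
        end += len(JPEG_FOOTER)
        carved.append((start, image[start:end]))
        pos = end
    return carved
```

Recording the offset of each carved item matters as much as the data, because it lets the finding be tied back to a specific location in the forensic image.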

Q 24. How do you handle data deduplication and its impact on analysis?

Data deduplication, which stores a single copy of redundant data, impacts analysis by reducing the overall dataset size and potentially obscuring the timeline of events. While helpful for storage, it presents challenges because the same data block might be part of several files. If deduplication is used, identifying the original source and context of the data becomes crucial. I address this by leveraging specialized tools designed to handle deduplicated data environments; these tools can often reconstruct the original files and their associated metadata. I've encountered deduplication in cloud storage investigations where multiple user accounts stored similar files. Understanding the deduplication strategy employed by the cloud provider was essential to reconstructing the timeline of file creation and modification, showing which user uploaded the file first and determining the original source of critical evidence.
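The point that one data block can belong to several files can be illustrated with a toy chunk index. The fixed-size chunking and the function name are assumptions for illustration; real deduplicating systems often use variable-size chunks and their own on-disk formats.

```python
import hashlib
from collections import defaultdict


def shared_chunks(files: dict[str, bytes], chunk_size: int = 4096) -> dict[str, list[str]]:
    """Map each chunk digest found in more than one file to those file names."""
    owners = defaultdict(set)
    for name, data in files.items():
        for offset in range(0, len(data), chunk_size):
            digest = hashlib.sha256(data[offset:offset + chunk_size]).hexdigest()
            owners[digest].add(name)
    # Keep only chunks that at least two files have in common.
    return {d: sorted(names) for d, names in owners.items() if len(names) > 1}
```

An index like this shows which files share content, but not who stored it first; that still has to come from upload logs or other metadata.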

Q 25. What are your preferred methods for visualizing and presenting complex data sets?

Visualizing complex datasets is key to effective communication. My preferred methods include timeline visualizations, network graphs, and data flow diagrams. Timeline tools, Gephi, and custom scripts are invaluable. For instance, a timeline visualization helps illustrate the sequence of events over time, making it easier to identify patterns and anomalies. A network graph can represent relationships between files, users, and systems. For example, I might visualize email communication networks or file access patterns to uncover hidden connections. Data flow diagrams visually map the movement of data through a system, clarifying the steps involved and showing potential points of compromise. In a recent case, a network graph of file access patterns revealed an insider threat: the visualization clearly showed multiple users accessing sensitive files without proper authorization.
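Before anything is drawn, the data usually has to be shaped into an ordered timeline or an edge list. A minimal sketch of both steps follows; the field names (`time`, `sender`, `recipient`) are hypothetical, and timestamps are assumed to be ISO 8601 UTC strings in one consistent format so that they sort correctly as text.

```python
from collections import Counter


def build_timeline(events: list[dict]) -> list[dict]:
    """Order events chronologically by their ISO 8601 'time' field."""
    return sorted(events, key=lambda e: e["time"])


def communication_edges(messages: list[dict]) -> Counter:
    """Count messages per (sender, recipient) pair for use as graph edge weights."""
    return Counter((m["sender"], m["recipient"]) for m in messages)
```

The resulting edge counts can be exported as a simple edge list for a graph tool to lay out.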

Q 26. Describe your experience with different hashing algorithms and their use in forensics.

Hashing algorithms are essential for data integrity verification and identifying duplicate files. I frequently work with MD5, SHA-1, SHA-256, and SHA-512. MD5, while fast, is cryptographically broken, and practical collision attacks have also been demonstrated against SHA-1, so neither should be relied on alone where strong integrity is paramount. SHA-256 and SHA-512 offer greater collision resistance and are the preferred algorithms for generating hashes of evidentiary data. I use hashing to verify the authenticity of evidence, ensure that digital artifacts haven't been altered, and identify duplicates. In a recent investigation, we used SHA-256 hashes to compare files found on multiple devices, which helped us show that data was copied across different systems, supporting a key aspect of the investigation. Hashing is not just about comparison; it's a cornerstone of digital evidence integrity. The integrity of the hash itself must also be protected. The complete hashing process (the algorithm used, the tool employed, and even the operating system it was run on) needs to be accurately documented for court admissibility.
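A minimal sketch of hashing an evidence file with Python's standard `hashlib` module is shown below. The function names are illustrative; the file is read in blocks so that large images do not need to fit in memory.

```python
import hashlib


def hash_file(path: str, algorithm: str = "sha256", block_size: int = 1024 * 1024) -> str:
    """Return the hex digest of a file, reading it one block at a time."""
    hasher = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for block in iter(lambda: handle.read(block_size), b""):
            hasher.update(block)
    return hasher.hexdigest()


def matches_recorded_hash(path: str, recorded_hex: str, algorithm: str = "sha256") -> bool:
    """Compare a fresh digest with the value recorded at acquisition time."""
    return hash_file(path, algorithm) == recorded_hex.strip().lower()
```

Re-running the check before and after analysis, and recording both results in the case notes, is one way to document that the working copy was not altered.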

Q 27. How do you stay updated with the latest advancements in artifact analysis techniques and tools?

Staying current is vital in this rapidly evolving field. I actively engage with communities such as the SANS Institute and the High Technology Crime Investigation Association (HTCIA). I regularly attend conferences, webinars, and workshops. I also follow leading researchers and experts through their publications and presentations. Subscribing to industry newsletters and following relevant blogs and online forums keeps me informed about the latest tools, techniques, and legal updates. I dedicate time to testing and evaluating new software and methodologies so that I remain proficient in the most effective and reliable methods and am prepared to handle challenging forensic cases.

Key Topics to Learn for Artifact Analysis and Interpretation Interview

- Contextual Understanding: Developing a strong understanding of the historical, cultural, and social context surrounding the artifacts you'll analyze. This includes researching relevant timelines, societies, and technologies.

- Methodology & Techniques: Mastering various analytical methods, such as visual inspection, material analysis, and comparative studies. This also involves understanding the limitations and biases inherent in each method.

- Interpretation & Inference: Moving beyond simple observation to draw meaningful conclusions and interpretations from the artifacts. This involves formulating hypotheses, testing those hypotheses against evidence, and articulating your reasoning clearly and logically.

- Data Presentation & Communication: Effectively presenting your analysis and interpretations through clear and concise reports, presentations, or publications. This involves selecting appropriate visual aids and tailoring your communication style to your audience.

- Ethical Considerations: Understanding and adhering to ethical guidelines related to artifact handling, preservation, and interpretation, including issues of cultural sensitivity and ownership.

- Problem-Solving & Critical Thinking: Applying critical thinking skills to analyze complex data sets, identify inconsistencies, and solve problems related to incomplete or ambiguous evidence.

- Specific Artifact Types: Developing expertise in analyzing specific types of artifacts (e.g., pottery, textiles, tools) relevant to your target roles. Familiarize yourself with the unique characteristics and analytical techniques associated with these artifact types.

Next Steps

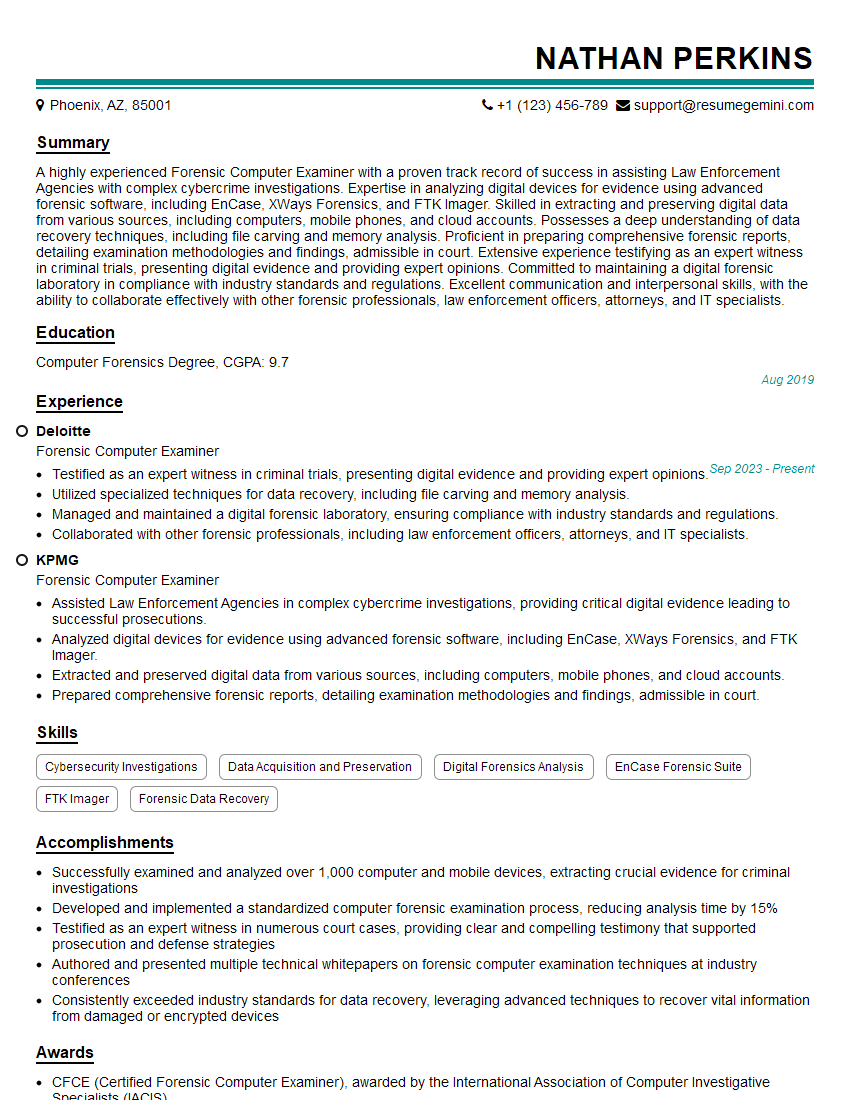

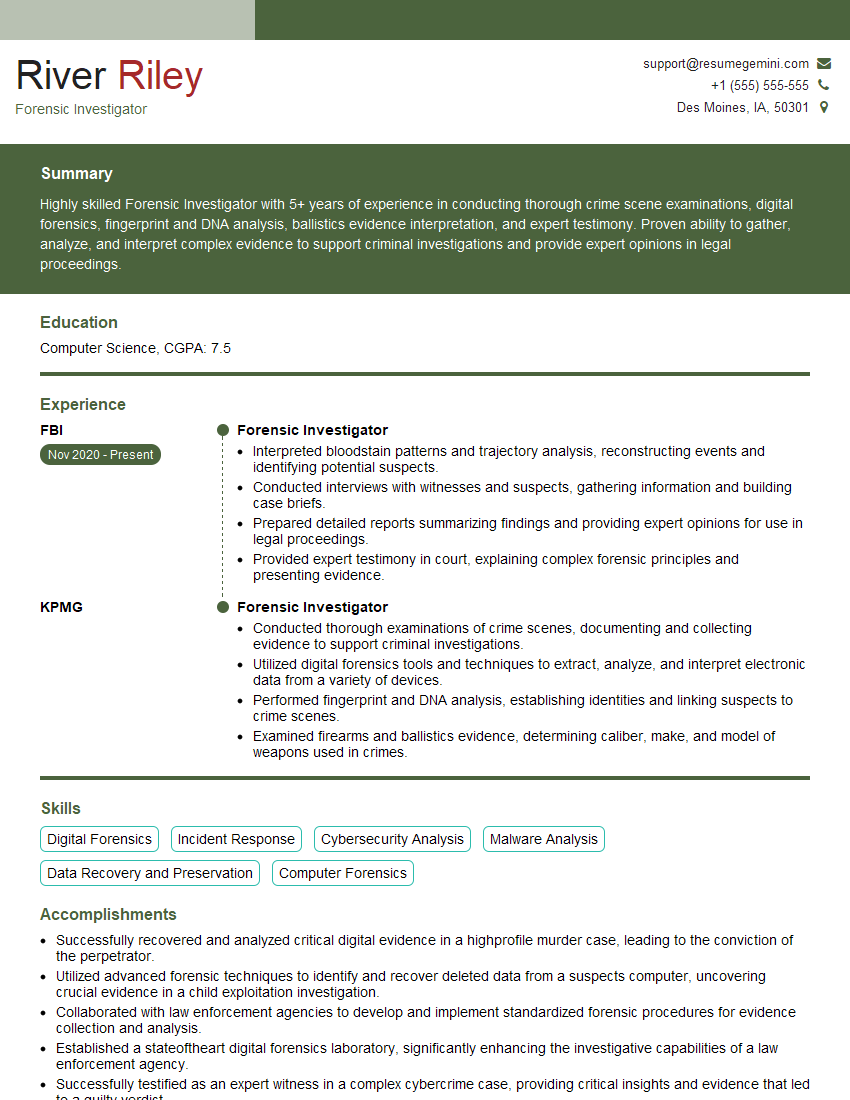

Mastering Artifact Analysis and Interpretation is crucial for career advancement in fields like archaeology, anthropology, history, and museum studies. A strong understanding of these principles opens doors to exciting and rewarding career opportunities. To maximize your job prospects, create an ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource that can help you build a professional and impactful resume tailored to your specific career goals. Examples of resumes tailored to Artifact Analysis and Interpretation positions are provided to help guide you through the process.
