Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important interview questions on developing and implementing quality assurance plans and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in a Develop and Implement Quality Assurance Plans Interview

Q 1. Explain your experience in developing a comprehensive QA plan.

Developing a comprehensive QA plan is like creating a blueprint for ensuring a product’s quality. It involves a systematic approach, starting with understanding the project’s goals and scope. I begin by defining the testing objectives, identifying the target audience and their needs, and outlining the various testing types to be employed (unit, integration, system, user acceptance testing, etc.).

For example, in a recent project developing a mobile banking application, I started by outlining specific testing objectives such as verifying transaction security, ensuring seamless user navigation, and confirming compatibility across different devices and operating systems. I then defined the scope of testing, focusing on key functionalities like account management, fund transfers, and bill payments. The plan included detailed test cases, timelines, and resource allocation, ensuring all aspects were covered. Finally, I incorporated risk mitigation strategies to address potential issues proactively.

The plan also meticulously documents the entry and exit criteria for each testing phase. For example, the entry criteria for system testing might include successful completion of unit and integration testing, while the exit criteria would be achieving a pre-defined level of test coverage and defect resolution.
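Entry and exit criteria are most useful when they are stated precisely enough to check mechanically. The sketch below is a minimal Python illustration under assumed thresholds (95% requirement coverage and zero open critical defects to exit system testing). The `PhaseStatus` type and both functions are hypothetical, not part of any tool.

```python
from dataclasses import dataclass


@dataclass
class PhaseStatus:
    """Snapshot of one testing phase (all fields are illustrative)."""
    name: str
    completed: bool
    requirement_coverage: float  # fraction of requirements with executed tests, 0.0-1.0
    open_critical_defects: int


def system_testing_can_start(unit: PhaseStatus, integration: PhaseStatus) -> bool:
    # Entry criterion: unit and integration testing are both complete.
    return unit.completed and integration.completed


def system_testing_can_exit(system: PhaseStatus,
                            min_coverage: float = 0.95,
                            max_open_critical: int = 0) -> bool:
    # Exit criteria (assumed thresholds): coverage meets the agreed level
    # and no more than the allowed number of critical defects remain open.
    return (system.requirement_coverage >= min_coverage
            and system.open_critical_defects <= max_open_critical)
```

In practice the thresholds would come from the agreed test plan rather than being hard-coded defaults.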

Q 2. Describe your process for risk assessment in a QA plan.

Risk assessment in QA is crucial for proactive issue management. It involves identifying potential problems that could impact the project’s quality, timeline, or budget. I typically employ a structured approach involving brainstorming sessions with the development team and stakeholders, reviewing previous project data, and analyzing requirements documents.

We use a risk matrix to categorize identified risks based on their likelihood and impact. For instance, a high-likelihood, high-impact risk might be a critical system failure, while a low-likelihood, low-impact risk might be a minor usability issue. Once risks are categorized, we develop mitigation strategies – including contingency plans and fallback options – for each. This might involve allocating additional testing time for high-risk areas, employing specific testing techniques, or adding extra resources to handle potential delays.
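A risk matrix like the one described above is often implemented as likelihood multiplied by impact. The Python sketch below assumes a 3x3 matrix with ordinal weights from 1 to 3 and illustrative category bands; the risk names, including the third-party API example, are hypothetical.

```python
# Ordinal scales for a simple 3x3 risk matrix (labels and weights are assumptions).
LEVELS = {"low": 1, "medium": 2, "high": 3}


def risk_score(likelihood: str, impact: str) -> int:
    """Score a risk as likelihood x impact on a 1-9 scale."""
    return LEVELS[likelihood] * LEVELS[impact]


def risk_category(score: int) -> str:
    # Illustrative bands; teams usually agree on their own thresholds.
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"


risks = [
    ("critical system failure", "high", "high"),
    ("minor usability issue", "low", "low"),
    ("third-party API latency", "medium", "high"),
]

# Highest-scoring risks first, so mitigation effort goes where it matters most.
ranked = sorted(risks, key=lambda r: risk_score(r[1], r[2]), reverse=True)
for name, likelihood, impact in ranked:
    score = risk_score(likelihood, impact)
    print(f"{name}: score={score}, category={risk_category(score)}")
```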

For the mobile banking app, a high-risk area was security vulnerabilities. Our mitigation strategy included penetration testing and security audits to proactively identify and address potential weaknesses before deployment.

Q 3. How do you define and measure the success of a QA plan?

The success of a QA plan is measured by several key indicators. It’s not just about finding bugs, but also about ensuring the product meets its quality goals and user expectations. Key metrics include:

- Defect Density: The number of defects found per thousand lines of code (KLOC) or per module – lower is better.

- Defect Severity: The classification of defects based on their impact (critical, major, minor) – a lower number of critical defects is a key success factor.

- Test Coverage: The percentage of requirements and functionalities tested – aiming for high coverage ensures comprehensive testing.

- Time to Resolution: The time taken to resolve defects – faster resolution indicates efficient processes.

- Customer Satisfaction: Post-release feedback from users reflects the overall quality and usability of the product.
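Two of these metrics reduce to simple arithmetic. This sketch assumes defect density is normalized per thousand lines of code and that coverage is counted per requirement; the figures are made up for illustration.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def requirement_coverage(tested: set[str], all_requirements: set[str]) -> float:
    """Percentage of requirements that have at least one executed test."""
    if not all_requirements:
        return 0.0
    return 100 * len(tested & all_requirements) / len(all_requirements)


# Hypothetical figures for one release.
print(defect_density(defects=45, lines_of_code=30_000))                     # 1.5
print(requirement_coverage({"R1", "R2", "R3"}, {"R1", "R2", "R3", "R4"}))  # 75.0
```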

Success isn’t solely quantitative. A successful QA plan also leads to a smoother release process, reduced post-release issues, and enhanced customer satisfaction. We use dashboards to track these metrics throughout the project, allowing for continuous improvement and informed decision-making.

Q 4. What are the key components of a well-structured test plan?

A well-structured test plan is the roadmap for testing activities. Key components include:

- Test Plan Identifier: A unique identifier for the plan.

- Introduction: Overview of the project and the plan’s purpose.

- Scope: What will be tested and what will not be tested (in/out of scope).

- Test Items: List of software components or functionalities to be tested.

- Test Environment: Description of hardware, software, and network configurations.

- Test Approach: The methodology and strategies used (e.g., Agile, Waterfall, specific testing types).

- Test Deliverables: The documents, reports, and other outputs produced during testing.

- Test Schedule: Timeline of testing activities.

- Test Resources: Team members, tools, and equipment involved.

- Risks and Contingencies: Potential problems and mitigation strategies.

- Approvals: Signatures from stakeholders to indicate agreement and approval.

A clear and comprehensive test plan ensures everyone involved is on the same page, avoiding confusion and streamlining the testing process.

Q 5. How do you handle unexpected bugs or issues during testing?

Unexpected bugs are inevitable. My approach involves a structured process:

- Immediate Reporting: The bug is immediately reported using a bug tracking system (e.g., Jira, Bugzilla) with detailed information, including steps to reproduce, screenshots, and error logs.

- Severity Assessment: The severity of the bug is assessed, determining its impact on the application’s functionality.

- Prioritization: The bug is prioritized based on its severity and impact on the release schedule.

- Investigation and Debugging: The development team investigates the root cause and works on a fix.

- Regression Testing: After the fix is implemented, thorough regression testing is conducted to ensure the fix didn’t introduce new issues.

- Verification and Closure: The tester verifies the fix and closes the bug report once it’s successfully resolved.
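The reporting, verification, and closure steps above can be modeled as a small state machine, which makes it explicit that a bug cannot be closed until its fix has been verified. The state names and transitions below are an assumed, simplified workflow; real Jira or Bugzilla workflows are configured per project and will differ.

```python
# Allowed transitions for a simplified bug lifecycle (state names are assumptions).
TRANSITIONS = {
    "new": {"triaged"},
    "triaged": {"in_progress", "rejected"},
    "in_progress": {"fixed"},
    "fixed": {"verified", "reopened"},  # tester retests and runs regression checks
    "reopened": {"in_progress"},
    "verified": {"closed"},
    "rejected": set(),
    "closed": set(),
}


class Bug:
    def __init__(self, summary: str, severity: str):
        self.summary = summary
        self.severity = severity
        self.state = "new"
        self.history = ["new"]

    def move_to(self, new_state: str) -> None:
        # Reject transitions that skip steps, e.g. closing a bug nobody verified.
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot go from {self.state} to {new_state}")
        self.state = new_state
        self.history.append(new_state)


# Hypothetical bug walked through the happy path.
bug = Bug("Transfer button unresponsive", "critical")
for step in ["triaged", "in_progress", "fixed", "verified", "closed"]:
    bug.move_to(step)
print(bug.history)
```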

Effective communication is crucial. Regular status updates with the development team help ensure everyone is aligned and problems are addressed promptly.

Q 6. Explain your experience with different testing methodologies (e.g., Agile, Waterfall).

I have extensive experience with both Agile and Waterfall testing methodologies. In Waterfall, testing typically occurs in a dedicated phase after development is complete. This allows for comprehensive testing but can lead to late bug detection. In Agile, testing is integrated throughout the development lifecycle, using iterative sprints. This allows for early bug detection and faster feedback loops.

In a recent project using Agile, we implemented continuous integration and continuous testing (CI/CT). This involved automated tests running every time code was committed to the repository, providing instant feedback to developers. In a previous Waterfall project, we followed a more traditional approach, conducting thorough testing in separate phases, including unit, integration, system, and user acceptance testing. The choice of methodology depends on project requirements, team structure, and project timelines.

Q 7. How do you prioritize testing efforts when facing time constraints?

Prioritizing testing efforts under time constraints requires a strategic approach. I use risk-based testing, focusing on critical functionalities and high-risk areas first. This ensures that the most important aspects of the application are thoroughly tested, even if complete testing isn’t feasible within the time frame. I use a risk matrix to guide this prioritization.
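Risk-based prioritization under a fixed time budget can be sketched as a greedy selection: run the highest-risk tests first and skip anything that no longer fits. This is a heuristic, not an optimal schedule (that would be a knapsack problem), and the test names, risk scores, and durations are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PlannedTest:
    name: str
    risk: int     # e.g. a likelihood x impact score taken from the risk matrix
    minutes: int  # estimated execution time


def select_tests(tests: list[PlannedTest], budget_minutes: int) -> list[PlannedTest]:
    """Greedy risk-based selection: highest risk first, skipping tests that don't fit."""
    selected, used = [], 0
    # Ties on risk are broken in favour of shorter tests.
    for t in sorted(tests, key=lambda t: (-t.risk, t.minutes)):
        if used + t.minutes <= budget_minutes:
            selected.append(t)
            used += t.minutes
    return selected


tests = [
    PlannedTest("fund transfer", 9, 60),
    PlannedTest("login", 9, 30),
    PlannedTest("profile theme", 1, 20),
    PlannedTest("bill payment", 6, 45),
]
chosen = select_tests(tests, budget_minutes=120)
print([t.name for t in chosen])
```

Note that "bill payment" is skipped here because it no longer fits the remaining budget, while a cheaper low-risk test does; that trade-off is exactly what should be discussed with stakeholders.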

Tools like test management software help track progress and identify areas where testing is lagging. Automated testing is equally important, as it allows rapid execution of repetitive tests and frees up time for manual testing of more complex areas. Clear communication with stakeholders is also key to managing expectations and agreeing on the scope of testing within the available timeframe. Sometimes scope reduction is necessary, which means carefully selecting which features to defer to a later release.

Q 8. Describe your experience with test case design techniques.

Test case design is the cornerstone of effective software testing. It involves systematically creating a set of test cases that cover various aspects of the software, ensuring comprehensive validation. I’m proficient in several techniques, including:

- Equivalence Partitioning: Dividing input data into groups (partitions) that are expected to be treated similarly by the system. For example, testing a field accepting ages would involve valid partitions like (0-17), (18-65), and (66+), plus an invalid partition for negative values.

- Boundary Value Analysis: Focusing on the boundary values of input ranges. Continuing with the age example, I’d prioritize testing 0, 17, 18, 65, and 66.

- Decision Table Testing: Creating a table that outlines all possible combinations of input conditions and their corresponding expected outcomes. This is especially useful for handling complex business rules.

- State Transition Testing: Modeling the different states of the application and the transitions between them. This is crucial for applications with complex workflows, such as order processing systems.

- Use Case Testing: Designing test cases based on how a user might interact with the system. This approach focuses on realistic scenarios and user journeys.
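The age example above translates directly into test data. This is a minimal sketch assuming a hypothetical `age_group` function with bands 0-17, 18-65, and 66+; it checks one representative per partition, the boundary values, and the invalid partition of negative ages.

```python
def age_group(age: int) -> str:
    """Hypothetical function under test: classifies an age into a band."""
    if age < 0:
        raise ValueError("age cannot be negative")
    if age <= 17:
        return "minor"
    if age <= 65:
        return "adult"
    return "senior"


# Equivalence partitioning: one representative value per valid partition.
partition_cases = {10: "minor", 40: "adult", 80: "senior"}

# Boundary value analysis: values on each side of every boundary.
boundary_cases = {0: "minor", 17: "minor", 18: "adult", 65: "adult", 66: "senior"}

for age, expected in {**partition_cases, **boundary_cases}.items():
    actual = age_group(age)
    assert actual == expected, f"age {age}: expected {expected}, got {actual}"

# The invalid partition (negative ages) must be rejected.
try:
    age_group(-1)
except ValueError:
    pass
else:
    raise AssertionError("negative age was accepted")
print("all age cases passed")
```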

I tailor my approach based on the complexity of the application and the specific requirements. For instance, in a simple calculator application, equivalence partitioning and boundary value analysis would be sufficient, while a complex e-commerce platform might necessitate a combination of techniques, including state transition and use case testing.
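For decision table testing, the table itself can live in the test code so that a missing combination of conditions is caught automatically. The discount rule below is hypothetical (15% for members placing orders of 100 or more, 5% when only one condition holds, otherwise nothing) and exists only to illustrate the technique.

```python
from itertools import product


def discount_percent(is_member: bool, order_total: float) -> int:
    """Hypothetical business rule under test."""
    large_order = order_total >= 100
    if is_member and large_order:
        return 15
    if is_member or large_order:
        return 5
    return 0


# Decision table: (is_member, large_order) -> expected discount.
DECISION_TABLE = {
    (True, True): 15,
    (True, False): 5,
    (False, True): 5,
    (False, False): 0,
}

# Completeness check: every one of the 2^2 condition combinations has a rule.
assert set(DECISION_TABLE) == set(product([True, False], repeat=2))

for (is_member, large_order), expected in DECISION_TABLE.items():
    total = 150.0 if large_order else 50.0  # one representative value per condition
    assert discount_percent(is_member, total) == expected
print("decision table verified")
```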

Q 9. What are your preferred tools for test management and execution?

My preferred tools for test management and execution depend on the project’s size and complexity. For smaller projects, I often find tools like Jira and TestRail sufficient. Jira’s flexibility allows for effective bug tracking and project management, while TestRail excels in managing test cases and generating reports. For larger, more complex projects, I’ve successfully employed tools like Zephyr Scale (integrated with Jira) and Xray (also integrated with Jira). These offer robust features for managing test plans, organizing test cycles, tracking execution, and generating detailed reports.

For test execution, I’m comfortable with both manual and automated testing. I frequently use browser developer tools for debugging, along with Selenium IDE for quick automated tests. The choice of tools is always determined by the specific needs of the project, keeping in mind efficiency and scalability.

Q 10. Explain your experience with test automation frameworks.

I possess extensive experience with various test automation frameworks. My expertise includes:

- Selenium WebDriver: I’ve used Selenium extensively for automating web application testing across different browsers. I’m comfortable with various programming languages such as Java, Python, and C# within the Selenium framework.

- Cypress: I’ve utilized Cypress for its ease of use, speed, and excellent developer experience. It’s particularly well-suited for end-to-end testing.

- RestAssured (API Testing): For testing RESTful APIs, RestAssured is my go-to framework in Java. It provides a fluent API for making requests and verifying responses.

Beyond the framework itself, I focus on designing maintainable and scalable automation frameworks. This involves utilizing design patterns like Page Object Model (POM) to decouple test logic from UI elements, enhancing readability and reducing maintenance efforts. I also prioritize robust error handling and reporting mechanisms.
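A minimal Page Object Model sketch in Python follows. The page, its element IDs, and the error text are hypothetical. The class only assumes a Selenium 4 WebDriver-like object exposing `find_element(by, value)`, and uses the string `"id"`, which is the value of Selenium's `By.ID` constant, so the page object itself needs no Selenium import.

```python
class LoginPage:
    """Page object for a hypothetical login page.

    Locators live in one place, so a UI change means editing this class
    rather than every test that touches the login screen.
    """

    # Hypothetical element IDs; replace with the real application's locators.
    USERNAME = ("id", "username")
    PASSWORD = ("id", "password")
    SUBMIT = ("id", "login-button")
    ERROR = ("id", "login-error")

    def __init__(self, driver):
        self.driver = driver

    def login(self, username: str, password: str) -> None:
        self.driver.find_element(*self.USERNAME).send_keys(username)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.SUBMIT).click()

    def error_message(self) -> str:
        return self.driver.find_element(*self.ERROR).text


def invalid_password_shows_error(driver) -> None:
    # The scenario expresses intent only; it never touches raw locators.
    page = LoginPage(driver)
    page.login("demo_user", "wrong-password")
    assert "Invalid" in page.error_message()
```

In a real suite, `invalid_password_shows_error` would typically be a pytest test that receives a WebDriver instance from a fixture.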

For example, in a recent project involving a large e-commerce site, I designed a Selenium-based framework employing POM to automate regression testing. This significantly reduced testing time and improved the reliability of our test suite.

Q 11. How do you ensure test coverage across all aspects of a project?

Ensuring comprehensive test coverage is critical. My approach involves a multi-faceted strategy:

- Requirement Traceability Matrix (RTM): Creating an RTM to map requirements to test cases ensures that all requirements are addressed during testing.

- Test Coverage Analysis: Regularly analyzing test coverage to identify gaps. This can involve code coverage analysis (for unit and integration tests) and functional coverage analysis (to verify that all functionalities are tested).

- Risk-Based Testing: Prioritizing testing efforts based on the risk associated with different functionalities. Critical features receive more thorough testing.

- Combination of Testing Types: Employing a combination of testing levels—unit, integration, system, and user acceptance testing (UAT)—ensures comprehensive coverage across different aspects of the system.
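A requirement traceability matrix can be built and checked in a few lines. This sketch assumes requirement and test-case IDs have been exported into plain dictionaries (the IDs are hypothetical); it reports requirements with no covering test and tests that point at requirements that no longer exist.

```python
from collections import defaultdict

# Hypothetical requirement IDs and the tests that claim to cover them.
requirements = {
    "REQ-1": "User can log in",
    "REQ-2": "User can transfer funds",
    "REQ-3": "User can pay bills",
    "REQ-4": "User can download statements",
}
test_cases = {
    "TC-101": ["REQ-1"],
    "TC-102": ["REQ-2"],
    "TC-103": ["REQ-2", "REQ-3"],
    "TC-104": ["REQ-9"],  # references a requirement that no longer exists
}


def build_rtm(requirements, test_cases):
    """Return requirement -> covering tests, uncovered requirements, and orphan tests."""
    rtm = defaultdict(list)
    orphan_tests = []
    for test_id, req_ids in test_cases.items():
        for req_id in req_ids:
            if req_id in requirements:
                rtm[req_id].append(test_id)
            else:
                orphan_tests.append(test_id)
    uncovered = [r for r in requirements if r not in rtm]
    return dict(rtm), uncovered, orphan_tests


rtm, uncovered, orphans = build_rtm(requirements, test_cases)
print(rtm)        # {'REQ-1': ['TC-101'], 'REQ-2': ['TC-102', 'TC-103'], 'REQ-3': ['TC-103']}
print(uncovered)  # ['REQ-4']
print(orphans)    # ['TC-104']
```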

Think of it like building a house: you wouldn’t just check the foundation; you’d inspect every aspect – walls, roof, plumbing, electricity – to ensure it’s structurally sound and functional. Similarly, I use different testing levels to ensure the software is thoroughly vetted from the smallest component to the fully integrated system.

Q 12. Describe a time you had to adapt your QA plan due to changing requirements.

In a recent project involving a mobile banking application, the requirement for biometric authentication was added midway through the development cycle. This necessitated a significant change to the existing QA plan. I immediately:

- Re-evaluated the existing test cases: I assessed which existing test cases were still relevant and which needed to be modified or replaced.

- Created new test cases: I developed new test cases specifically addressing the biometric authentication feature, considering different scenarios (successful authentication, unsuccessful authentication, timeout, etc.).

- Updated the test plan: I documented the changes in the test plan, including the new test cases and updated timelines.

- Communicated the changes: I communicated the impact of the change to the development team, project manager, and other stakeholders.

- Prioritized testing: Given the late addition, I worked with the team to prioritize testing efforts to ensure the timely release of the updated app.

Adaptability is crucial in QA. The key is to be proactive, communicate effectively, and remain flexible to ensure that the testing process adapts to evolving requirements without compromising quality.

Q 13. How do you collaborate with developers and other stakeholders during the QA process?

Collaboration is central to successful QA. I foster strong relationships with developers, product owners, and other stakeholders through:

- Daily Stand-up Meetings: Participating actively in daily stand-up meetings to discuss progress, identify roadblocks, and ensure alignment.

- Defect Triage Meetings: Collaborating with developers during defect triage meetings to analyze defects, assign ownership, and prioritize fixes.

- Regular Communication: Maintaining open communication channels through email, instant messaging, and project management tools to share updates and address concerns promptly.

- Test Case Reviews: Sharing test cases with developers for review before execution helps to clarify expectations and prevent misunderstandings.

- Knowledge Sharing: Sharing QA insights and best practices with the development team to improve overall software quality.

I view myself as a partner to the development team, not just a gatekeeper. By working collaboratively, we ensure that issues are identified and resolved efficiently, leading to a higher-quality product.

Q 14. How do you identify and track defects throughout the development lifecycle?

Defect tracking and management are integral to the QA process. I utilize a systematic approach that involves:

- Defect Reporting: Using a defect tracking system (like Jira) to meticulously document each defect, including steps to reproduce, expected behavior, actual behavior, severity, and priority.

- Defect Verification: Verifying that reported defects have been fixed correctly by retesting.

- Defect Regression Testing: Ensuring that fixes do not introduce new defects or break existing functionality.

- Defect Reporting Metrics: Tracking key metrics, such as the number of defects, defect density, and defect resolution time, to identify trends and improve the development process.

- Regular Reporting: Providing regular reports on the status of defects to stakeholders.

Think of it as a detective story; I meticulously gather evidence (defect reports) to identify the ‘culprit’ (the defect) and ensure it’s ‘apprehended’ (fixed) and won’t return to haunt us (regression testing).
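Defect resolution time, one of the metrics listed above, can be computed from the opened and resolved timestamps that most trackers can export. The timestamps below are hypothetical; the sketch reports both mean and median because a single long-running defect can inflate the mean.

```python
from datetime import datetime
from statistics import mean, median

# Hypothetical (opened, resolved) timestamps exported from a defect tracker.
defects = [
    (datetime(2024, 3, 1, 9, 0), datetime(2024, 3, 1, 17, 0)),   # 8 h
    (datetime(2024, 3, 2, 10, 0), datetime(2024, 3, 4, 10, 0)),  # 48 h
    (datetime(2024, 3, 3, 12, 0), datetime(2024, 3, 3, 16, 0)),  # 4 h
]

hours = [(resolved - opened).total_seconds() / 3600 for opened, resolved in defects]

# The median is less skewed than the mean by one long-running defect.
print(f"mean: {mean(hours):.1f} h, median: {median(hours):.1f} h")
```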

Q 15. What metrics do you use to measure the effectiveness of your QA process?

Measuring the effectiveness of a QA process relies on a balanced set of metrics, focusing on both the efficiency of testing and the quality of the final product. I typically use a combination of metrics categorized into defect detection, testing efficiency, and customer satisfaction.

- Defect Density: This measures the number of defects found per thousand lines of code (KLOC) or per feature. A lower defect density indicates better quality. For example, a consistent decrease in defect density over several sprints suggests our QA process is improving.

- Defect Leakage: This tracks the proportion of defects that escape to production rather than being caught during testing. A high leakage rate indicates weaknesses in our testing process and requires immediate attention. We’d investigate root causes, such as inadequate test coverage or insufficient testing of specific scenarios.

- Test Execution Efficiency: We measure the time taken to execute test cases and identify bottlenecks. This helps us optimize our testing processes, perhaps by automating repetitive tasks or improving test case design.

- Test Coverage: This metric assesses how much of the application’s functionality is covered by our test cases. High test coverage gives greater confidence in the quality of the software. We aim for high coverage, but also recognize that 100% is often unrealistic and that focusing on critical areas is more efficient.

- Customer Satisfaction (CSAT): While indirect, post-release feedback, including bug reports and customer surveys, provides crucial information on the overall quality and the effectiveness of our QA efforts.

By regularly monitoring these metrics and analyzing trends, we can identify areas for improvement and make data-driven decisions to enhance our QA process. We use dashboards and reporting tools to visualize these metrics and track progress over time.
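Defect leakage is commonly expressed as the percentage of all known defects that were found in production. Definitions vary between organizations (some divide by pre-release defects only), so treat the formula below as one assumed convention; the sprint figures are hypothetical.

```python
def defect_leakage_percent(found_before_release: int, found_in_production: int) -> float:
    """Share of all known defects that escaped to production, as a percentage."""
    total = found_before_release + found_in_production
    if total == 0:
        return 0.0
    return 100 * found_in_production / total


# Hypothetical sprint data: (defects caught in testing, defects reported from production).
sprints = {"Sprint 1": (40, 10), "Sprint 2": (45, 5), "Sprint 3": (38, 2)}
for name, (pre, prod) in sprints.items():
    print(f"{name}: leakage {defect_leakage_percent(pre, prod):.1f}%")
```

A falling trend across sprints, as in this made-up data, is the kind of signal a dashboard would surface.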


Q 16. How do you ensure the quality of test data used in your testing?

Ensuring high-quality test data is crucial for reliable testing. My approach involves a multi-pronged strategy:

- Data Subsetting: We don’t use the entire production dataset for testing; instead, we create subsets representative of the real-world data. This reduces test execution time and improves manageability.

- Data Masking: Protecting sensitive information is paramount. We use data masking techniques to replace sensitive data with realistic but fake values, ensuring compliance and security.

- Test Data Generation: For scenarios where real data is unavailable or insufficient, we use automated tools to generate synthetic test data that meets specific criteria (e.g., distributions, data types). This is particularly useful for boundary condition testing and stress testing.

- Data Management Tools: We leverage dedicated test data management tools that allow us to easily create, manage, and refresh test data, ensuring data consistency and minimizing manual effort. These tools often integrate with our CI/CD pipeline.

- Data Validation: Before using any data set, we perform rigorous validation to ensure data integrity and accuracy. This includes checks for completeness, consistency, and conformity to defined data models.

For instance, in a recent e-commerce project, we used a data masking tool to replace real customer addresses and credit card numbers with realistic fake values during performance testing, while preserving the structure and distribution of the original data.
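Masking of this kind can be sketched with the Python standard library. This is a simplified illustration rather than a format-preserving masking tool: card numbers keep only their last four digits, and emails are replaced by a salted hash so the same customer maps to the same fake address across tables. The function names and salt handling are assumptions.

```python
import hashlib


def mask_card_number(card: str) -> str:
    """Keep only the last four digits, preserving length and separators."""
    digits_total = sum(ch.isdigit() for ch in card)
    out, digit_index = [], 0
    for ch in card:
        if ch.isdigit():
            digit_index += 1
            # Reveal only the final four digits; mask the rest.
            out.append(ch if digit_index > digits_total - 4 else "X")
        else:
            out.append(ch)
    return "".join(out)


def pseudonymize_email(email: str, salt: str) -> str:
    """Deterministically replace an email with a fake one.

    The same input always maps to the same output, so joins across tables
    still work. The salt should be kept out of the test environment.
    """
    digest = hashlib.sha256((salt + email.lower()).encode()).hexdigest()[:10]
    return f"user_{digest}@example.com"


print(mask_card_number("4111 1111 1111 1234"))  # XXXX XXXX XXXX 1234
print(pseudonymize_email("jane@shop.example", salt="test-env-salt"))
```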

Q 17. Describe your experience with performance testing and tools used.

Performance testing is a critical part of my QA process. My experience encompasses various aspects, from load testing and stress testing to endurance testing and spike testing. I’ve worked with a range of tools, adapting my choice based on project needs and budget.

- LoadRunner: A robust and widely used tool for simulating high user loads and identifying performance bottlenecks. I used it on a large-scale banking application to ensure the system could handle peak transaction volumes without degradation.

- JMeter: An open-source alternative to LoadRunner, excellent for load and performance testing of web applications. Its flexibility and extensibility make it suitable for various testing scenarios.

- k6: A modern open-source tool with developer-friendly JavaScript scripting and optional cloud-based execution. Its ease of use and integration with CI/CD pipelines make it well suited to continuous performance testing.

Beyond the tools, I focus on creating realistic test scenarios based on anticipated user behavior, analyzing performance metrics (response times, throughput, resource utilization), and identifying and documenting performance bottlenecks. I also collaborate with developers to address performance issues and ensure timely resolution.
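When analyzing response times, percentiles usually say more than averages because a few slow requests dominate the user experience. The sketch below uses the nearest-rank percentile definition (load testing tools may interpolate differently), and the sample data and measurement window are hypothetical.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (one common definition; tools may interpolate)."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need samples and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical response times (ms) collected during a 2-second measurement window.
response_ms = [120, 95, 130, 110, 480, 105, 125, 100, 115, 900]
window_seconds = 2

print("median:", percentile(response_ms, 50), "ms")
print("p90:", percentile(response_ms, 90), "ms")
print("p95:", percentile(response_ms, 95), "ms")
print("throughput:", len(response_ms) / window_seconds, "req/s")
```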

Q 18. How do you handle conflict between development and testing teams?

Conflicts between development and testing teams are inevitable, but they can be effectively managed with clear communication and a collaborative approach. I address these conflicts by:

- Promoting Open Communication: Establishing regular communication channels, such as daily stand-up meetings or sprint reviews, allows for early identification and resolution of issues. Transparent communication prevents misunderstandings and fosters a collaborative environment.

- Joint Problem Solving: Instead of assigning blame, I encourage joint problem-solving sessions. This collaborative approach helps identify root causes and fosters a sense of shared ownership in resolving the issue.

- Defining Clear Roles and Responsibilities: A well-defined process that outlines roles and responsibilities for both teams helps minimize potential conflict and clarify expectations. This might involve clear definitions of “Done” criteria.

- Using a Shared Issue Tracking System: A centralized system for tracking and managing defects ensures transparency and enables efficient collaboration in resolving issues. This system should provide clear visibility of the status of each issue.

- Mediation (if necessary): In cases where conflicts escalate, a neutral party can mediate to facilitate a resolution. This often involves facilitating discussions and helping teams find common ground.

For instance, in one project, a conflict arose regarding the severity of a bug. By facilitating a joint discussion with developers and testers, we were able to understand differing perspectives and reach a consensus on the appropriate action.

Q 19. Explain your approach to security testing.

My approach to security testing is comprehensive and incorporates various techniques to identify vulnerabilities:

- Static Application Security Testing (SAST): Analyzing code without execution to identify potential vulnerabilities. Tools like SonarQube are used for this purpose.

- Dynamic Application Security Testing (DAST): Testing the running application to identify vulnerabilities. Tools like OWASP ZAP are frequently employed.

- Penetration Testing: Simulating real-world attacks to assess the system’s resilience against malicious actors. This requires specialized skills and experience.

- Security Code Reviews: Manual inspection of the codebase to identify security weaknesses. This is particularly effective for catching vulnerabilities missed by automated tools.

- Vulnerability Scanning: Using automated tools to scan the application for known vulnerabilities. Tools like Nessus are commonly used.

The approach must be risk-based. We prioritize testing areas with higher security sensitivity. I also ensure that security testing is integrated throughout the software development lifecycle (SDLC), not just at the end. This includes incorporating security considerations during design, development, and testing phases.

Q 20. What is your experience with different types of testing (e.g., unit, integration, system, regression)?

My experience encompasses the full spectrum of software testing levels and types:

- Unit Testing: I have extensive experience writing and executing unit tests using frameworks like JUnit (Java) or pytest (Python). This ensures individual components function correctly before integration.

- Integration Testing: I test the interaction between different modules or components to ensure seamless data flow and functionality. I often use techniques like top-down or bottom-up integration testing.

- System Testing: I verify the complete system meets specified requirements and functions as designed. This often involves end-to-end testing of the entire application flow.

- Regression Testing: I conduct regression testing after code changes to ensure new features or bug fixes haven’t introduced new problems. This often involves automated regression test suites.
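Top-down integration testing replaces lower-level modules that are not yet available with stubs. The sketch below uses `unittest.mock.Mock` from the Python standard library as the stub; `CheckoutService` and the gateway's `charge` method and response shape are hypothetical.

```python
from unittest.mock import Mock


class CheckoutService:
    """Hypothetical higher-level module that depends on a payment gateway."""

    def __init__(self, gateway):
        self.gateway = gateway

    def place_order(self, amount: float) -> str:
        if amount <= 0:
            raise ValueError("amount must be positive")
        result = self.gateway.charge(amount)
        return "confirmed" if result["status"] == "approved" else "payment_failed"


# Top-down integration: the real gateway isn't ready, so a stub stands in for it.
gateway_stub = Mock()
gateway_stub.charge.return_value = {"status": "approved"}

service = CheckoutService(gateway_stub)
assert service.place_order(49.99) == "confirmed"
gateway_stub.charge.assert_called_once_with(49.99)

# Change the stub's canned response to exercise the failure path.
gateway_stub.charge.return_value = {"status": "declined"}
assert service.place_order(10.0) == "payment_failed"
```

Once the real gateway module is ready, it replaces the stub and the same scenarios are rerun as part of the regression suite.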

I’m proficient in using various testing techniques such as black-box, white-box, and grey-box testing, adapting my approach based on the project’s specific needs and context. I ensure thorough test coverage across all levels of testing to minimize the risk of defects making it to production.

Q 21. How do you ensure compliance with industry standards and regulations in your QA processes?

Ensuring compliance with industry standards and regulations is critical for building trustworthy and reliable software. My approach includes:

- Understanding Relevant Standards: Thorough understanding of applicable standards like ISO 9001, ISO 27001 (information security), HIPAA (healthcare), or PCI DSS (payment card industry) is crucial. This understanding informs the design of our QA processes.

- Implementing Compliance Measures: This involves incorporating specific checks and procedures into the QA process to meet the requirements of the relevant standards. This might include specific security testing measures, documentation requirements, or audit trails.

- Regular Audits and Reviews: Periodic audits and reviews ensure our QA processes remain compliant and effective. This also helps to identify any gaps in our compliance efforts.

- Documentation and Traceability: Maintaining comprehensive documentation of QA processes, test results, and compliance measures is essential for demonstrating compliance and supporting audits. A well-documented system allows us to easily trace requirements to test cases and ensure that everything is covered.

- Training and Awareness: Regular training and awareness programs for the team on relevant standards and regulations ensure everyone understands their responsibilities in maintaining compliance.

For example, in a healthcare project, I ensured our QA process adhered strictly to HIPAA guidelines by incorporating specific security testing protocols and procedures focused on data protection and patient privacy.

Q 22. How do you manage a large-scale QA project with multiple teams?

Managing a large-scale QA project with multiple teams requires a structured approach emphasizing clear communication, defined roles, and efficient coordination. Think of it like conducting an orchestra – each section (team) plays a vital role, but needs a conductor (project manager) to ensure harmony and a cohesive performance.

- Centralized Test Management System: Employing a tool like Jira or TestRail provides a single source of truth for all test cases, bug reports, and progress tracking, ensuring transparency across all teams.

- Clear Roles and Responsibilities: Each team should have clearly defined responsibilities – for example, one team might focus on functional testing, another on performance testing, and a third on security testing. A RACI matrix (Responsible, Accountable, Consulted, Informed) is invaluable in clarifying these roles.

- Regular Communication and Collaboration: Daily stand-up meetings, weekly progress reports, and frequent communication channels (e.g., Slack, Microsoft Teams) foster effective information flow. Regular cross-team meetings allow for collaborative problem-solving and knowledge sharing.

- Risk Management and Mitigation: Identifying potential risks early is crucial. A risk register should document potential issues, their likelihood, impact, and mitigation strategies. This proactive approach helps prevent delays and keeps the project on track.

- Test Automation: Implementing automation for repetitive tests frees up teams to focus on more complex, exploratory testing, increasing overall efficiency and test coverage.

For example, in a recent project involving the development of a large e-commerce platform, we used Jira to track progress, assigning specific modules to individual teams. Daily stand-ups ensured early identification and resolution of roadblocks, ultimately delivering a high-quality product on schedule.

Q 23. Explain your experience with different testing environments (e.g., staging, production).

Experience with various testing environments is fundamental for a comprehensive QA approach. Each environment mirrors a different stage of the software development lifecycle, each with its unique characteristics.

- Development/Local Environment: This is the initial environment where developers test their code. QA involvement here is typically limited, focusing on unit testing or early integration issues.

- Staging/Testing Environment: This is a replica of the production environment, designed for rigorous testing. Here, we perform end-to-end testing, integration testing, and performance testing, ensuring the software functions correctly under simulated real-world conditions. This allows us to identify bugs and performance bottlenecks before release.

- Production Environment: This is the live environment where the software is accessible to end-users. Testing in production is typically limited to monitoring performance and stability after release and verifying urgent bug fixes.

In one project, we discovered a critical memory leak only during performance testing in the staging environment. This would have gone unnoticed in the development environment and could have resulted in significant performance issues in production. The staging environment allowed for proactive identification and resolution of this issue.

Q 24. Describe your process for reporting test results and communicating findings to stakeholders.

Effective reporting and communication are vital for successful QA. We need to ensure that stakeholders clearly understand the testing results and any potential risks.

- Test Reports: I typically create comprehensive reports summarizing test execution, including the number of test cases executed, passed, failed, and blocked. These reports include detailed descriptions of failures, screenshots, and logs for easy understanding.

- Bug Tracking System: Using a system like Jira, we meticulously document every bug found, including steps to reproduce, expected results, actual results, severity, and priority. This ensures efficient bug tracking and resolution.

- Stakeholder Communication: Communication varies with the audience. For technical stakeholders, detailed reports and bug logs are usually sufficient. For non-technical stakeholders, concise summaries focusing on key findings and risks are more effective. Regular meetings are also crucial to provide timely updates and address concerns.

- Dashboards and Visualizations: Utilizing dashboards that display key metrics like test coverage, defect density, and open bug counts provides a clear and concise overview of the project’s health.
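The report and bug-tracker contents described above can be modeled in a few lines. This is a minimal sketch, assuming test results are available as plain status strings and using one common definition of defect density (defects per thousand lines of code). The `Defect` fields mirror the bug-report contents listed above, but the class and function names are hypothetical and not part of Jira's or any other tool's API.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass
class Defect:
    """A bug record with the fields described above (names are illustrative)."""
    key: str
    summary: str
    steps_to_reproduce: list[str]
    expected: str
    actual: str
    severity: str  # e.g. "critical", "major", "minor"
    priority: str  # e.g. "P1", "P2", "P3"
    status: str = "open"


def summarize(results: list[str], defects: list[Defect], size_kloc: float) -> dict:
    """Build the headline numbers for a test report or dashboard.

    `results` holds one status per planned test case: "passed", "failed",
    or "blocked". Blocked cases count toward the total but not toward the
    executed count or the pass rate.
    `size_kloc` is the size of the code under test in thousands of lines.
    """
    if size_kloc <= 0:
        raise ValueError("size_kloc must be positive")
    counts = Counter(results)
    executed = counts["passed"] + counts["failed"]
    open_by_severity = Counter(d.severity for d in defects if d.status == "open")
    return {
        "total": len(results),
        "executed": executed,
        "passed": counts["passed"],
        "failed": counts["failed"],
        "blocked": counts["blocked"],
        "pass_rate": round(counts["passed"] / executed, 3) if executed else 0.0,
        "defect_density_per_kloc": round(len(defects) / size_kloc, 2),
        "open_by_severity": dict(open_by_severity),
    }
```

A dashboard can call `summarize` on each build and plot the resulting numbers over time.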

For example, in a recent project, we used a customized dashboard to track test execution and bug resolution daily. This allowed us to proactively address issues, and the clear visual representation of progress ensured stakeholders were well-informed throughout the project.

Q 25. How do you stay current with new technologies and best practices in QA?

Staying current in QA requires continuous learning and adaptation. The field evolves rapidly, with new tools and best practices emerging frequently.

- Professional Development: I take online courses, attend webinars, and go to conferences, focusing on workshops and sessions that cover the latest trends.

- Industry Publications and Blogs: Regularly reading industry publications, blogs, and articles helps me stay updated on new testing methodologies, tools, and techniques.

- Certifications: Pursuing relevant certifications like ISTQB demonstrates a commitment to professional development and keeps my skills sharp.

- Community Engagement: Participating in online forums and communities allows for knowledge sharing and learning from others’ experiences.

- Hands-on Practice: Experimenting with new tools and techniques through personal projects is a great way to reinforce learning.

For instance, I recently completed a course on performance testing with JMeter, expanding my skillset and improving my ability to conduct more efficient and comprehensive performance testing.

Q 26. How do you create and maintain a test environment?

Creating and maintaining a robust test environment is crucial for reliable testing. This involves a well-defined process, including planning, setup, configuration, and maintenance.

- Planning: Understanding the application’s requirements and dependencies is the first step. This determines which hardware and software components the test environment needs.

- Setup: Setting up the environment can involve installing and configuring operating systems, databases, servers, and other relevant software. Consider using virtualization or cloud-based solutions for easier scalability and management.

- Configuration: This includes configuring the environment to accurately mimic the production environment, such as network settings, data volume, and user load.

- Data Management: Setting up appropriate test data is essential. This involves creating realistic datasets that cover various scenarios and edge cases without compromising sensitive production data.

- Maintenance: Regularly updating the test environment to match the application’s evolution is crucial. This might include patching the operating system, installing new software versions, and ensuring data integrity.
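For the data-management step, one approach is to pseudonymize sensitive fields copied from production and to generate reproducible synthetic values that deliberately include edge cases. The sketch below assumes records are flat dictionaries, uses salted SHA-256 hashing as the masking technique, and picks hypothetical field names and boundary values. Salted hashing is pseudonymization rather than full anonymization, so the salt must be kept secret and low-entropy fields may need stronger treatment.

```python
import hashlib
import random


def pseudonymize(value: str, salt: str) -> str:
    """Replace a sensitive value with a stable token.

    The same input and salt always give the same token, so references
    between records (e.g. a customer ID used in several tables) still match.
    """
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:12]  # short token; collisions are unlikely at test-data scale


def mask_record(record: dict, sensitive_fields: set, salt: str) -> dict:
    """Return a copy of `record` with the sensitive fields pseudonymized."""
    return {
        key: pseudonymize(str(val), salt) if key in sensitive_fields else val
        for key, val in record.items()
    }


def synthetic_amounts(n_regular: int, seed: int = 42) -> list:
    """Return boundary amounts followed by `n_regular` reproducible random ones."""
    rng = random.Random(seed)  # fixed seed, so every run produces the same data
    edge_cases = [0.00, 0.01, 9_999_999.99]  # hypothetical minimum, smallest unit, upper limit
    regular = [round(rng.uniform(1, 5_000), 2) for _ in range(n_regular)]
    return edge_cases + regular
```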

In a recent project, we utilized AWS to create a scalable and cost-effective cloud-based test environment. This allowed us to easily replicate production conditions and simulate various user loads during performance testing.
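To keep a test environment aligned with production over time, it can help to compare exported configuration settings and flag drift. This sketch assumes both environments' settings are available as flat key-value dictionaries; how they are exported depends on your infrastructure tooling and is not shown. The setting names in the usage note are hypothetical.

```python
def find_drift(reference: dict, candidate: dict, ignore: frozenset = frozenset()) -> dict:
    """Compare two flat configuration dicts and report differences.

    Returns a dict with three keys:
      - "missing": settings in `reference` that are absent from `candidate`
      - "extra":   settings in `candidate` that are absent from `reference`
      - "changed": settings whose values differ, as {key: (reference, candidate)}
    Keys in `ignore` (e.g. hostnames that are expected to differ) are skipped.
    """
    ref_keys = set(reference) - ignore
    cand_keys = set(candidate) - ignore
    return {
        "missing": sorted(ref_keys - cand_keys),
        "extra": sorted(cand_keys - ref_keys),
        "changed": {
            k: (reference[k], candidate[k])
            for k in sorted(ref_keys & cand_keys)
            if reference[k] != candidate[k]
        },
    }
```

For example, comparing production `{"db_version": "15.4", "tls": "1.3", "host": "prod-db"}` with staging `{"db_version": "15.2", "host": "stg-db", "debug": True}` while ignoring `host` reports `tls` as missing, `debug` as extra, and `db_version` as changed.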

Q 27. What experience do you have with test automation frameworks like Selenium or Appium?

I have extensive experience with test automation tools like Selenium and Appium. These tools enable the creation of automated tests, boosting efficiency and test coverage.

- Selenium: I have used Selenium WebDriver extensively for automating web application testing across various browsers. I am proficient in creating and maintaining robust Selenium test suites using programming languages like Java or Python.

- Appium: For mobile application testing, I have utilized Appium to automate tests on both iOS and Android platforms. I am experienced in writing and executing Appium tests, handling different device configurations and OS versions.

- Test Frameworks: I am familiar with test frameworks such as TestNG and JUnit, which I use to organize, group, and run automated test suites efficiently.

- CI/CD Integration: I have experience integrating test automation into CI/CD pipelines, ensuring automated testing is an integral part of the software release process.

For instance, in a recent project, we implemented a Selenium-based automated regression suite that substantially reduced testing time and improved the quality of our releases. Cutting manual regression effort also freed the team to focus on exploratory testing.
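A common way to keep Selenium suites maintainable is the Page Object pattern, where each page's locators and actions live in one class. The sketch below uses Python's standard-library `unittest` module as a stand-in for JUnit or TestNG, and the login page and its locators are hypothetical. It relies on the fact that in Selenium 4 `By.ID` and `By.CSS_SELECTOR` are the strings `"id"` and `"css selector"`, so a real `WebDriver` could be passed to `LoginPage` unchanged; here a small fake driver lets the suite run without a browser.

```python
import unittest


class LoginPage:
    """Page Object for a hypothetical login page."""

    # (strategy, value) pairs accepted by WebDriver.find_element(by, value)
    USERNAME = ("id", "username")
    PASSWORD = ("id", "password")
    SUBMIT = ("css selector", "button[type='submit']")
    ERROR = ("css selector", ".error-message")

    def __init__(self, driver):
        self.driver = driver

    def login(self, username: str, password: str) -> None:
        self.driver.find_element(*self.USERNAME).send_keys(username)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.SUBMIT).click()

    def error_text(self) -> str:
        return self.driver.find_element(*self.ERROR).text


class FakeElement:
    """Records interactions instead of driving a real browser element."""

    def __init__(self):
        self.typed = ""
        self.clicked = False
        self.text = ""

    def send_keys(self, value):
        self.typed += value

    def click(self):
        self.clicked = True


class FakeDriver:
    """Stand-in for WebDriver: returns one FakeElement per locator."""

    def __init__(self):
        self.elements = {}

    def find_element(self, by, value):
        return self.elements.setdefault((by, value), FakeElement())


class LoginRegressionTest(unittest.TestCase):
    def setUp(self):
        self.driver = FakeDriver()
        self.page = LoginPage(self.driver)

    def test_login_submits_credentials(self):
        self.page.login("alice", "s3cret")
        self.assertEqual(self.driver.find_element(*LoginPage.USERNAME).typed, "alice")
        self.assertTrue(self.driver.find_element(*LoginPage.SUBMIT).clicked)

    def test_error_message_is_read(self):
        self.driver.find_element(*LoginPage.ERROR).text = "Invalid credentials"
        self.assertEqual(self.page.error_text(), "Invalid credentials")


def run_regression_suite() -> bool:
    """Run the suite programmatically and report overall success."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(LoginRegressionTest)
    result = unittest.TextTestRunner(verbosity=1).run(suite)
    return result.wasSuccessful()
```

In a CI pipeline, running the tests with `python -m unittest` exits with a non-zero status when any test fails, which is what allows the pipeline to block a release.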

Q 28. How do you handle pressure and tight deadlines in a QA role?

Handling pressure and tight deadlines is an inherent part of the QA role. I respond to such situations with a structured, prioritized approach.

- Prioritization: Focusing on high-priority tests and critical functionalities first ensures that essential aspects are thoroughly tested, even with limited time.

- Risk Assessment: Identifying potential risks and focusing testing efforts on areas with a higher probability of failure helps in efficient allocation of resources.

- Teamwork and Collaboration: Open communication and collaborative problem-solving with developers and other QA team members ensures that challenges are addressed efficiently.

- Automation: Leveraging automation for repetitive tasks frees up time for more complex testing activities, which significantly improves efficiency under tight deadlines.

- Stress Management: Practicing effective stress management techniques, like time management and taking breaks, ensures I can maintain focus and productivity.

In one project, we faced a very tight deadline, requiring us to deliver the product within two weeks. By prioritizing critical functionalities, employing automation wherever possible, and maintaining open communication within the team, we successfully completed the testing phase on time and delivered a high-quality product.
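The prioritization and risk-assessment steps above are often combined by scoring each test area as likelihood of failure times impact, then filling the available time with the highest-scoring tests first. The sketch below uses that common risk-matrix heuristic with hypothetical 1-to-5 scales and time estimates; it is a greedy selection, not an optimal schedule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskItem:
    name: str
    likelihood: int  # 1 (unlikely to fail) to 5 (very likely), a team estimate
    impact: int      # 1 (cosmetic) to 5 (critical business impact)
    minutes: int     # estimated execution time

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


def plan_tests(items: list, budget_minutes: int) -> tuple:
    """Greedily select the highest-risk tests that fit in the time budget.

    Returns (selected names, deferred names). Ties on risk go to the
    shorter test so that more high-risk coverage fits in the window.
    """
    ranked = sorted(items, key=lambda t: (-t.risk, t.minutes))
    selected, deferred, used = [], [], 0
    for item in ranked:
        if used + item.minutes <= budget_minutes:
            selected.append(item.name)
            used += item.minutes
        else:
            deferred.append(item.name)
    return selected, deferred
```

Note that this greedy pass can still fit a short, low-risk test into leftover time after skipping a longer, higher-risk one; if that is undesirable, stop at the first test that does not fit and escalate the deferred list instead.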

Key Topics to Learn for Develop and Implement Quality Assurance Plans Interview

- Defining Quality Assurance (QA): Understanding the fundamental principles of QA, its role in the software development lifecycle (SDLC), and its connection to business objectives.

- QA Planning & Strategy: Developing comprehensive QA plans that include scope definition, a test strategy suited to the development methodology (e.g., agile, waterfall), risk assessment, resource allocation, and timeline creation. Practical application: Walk through creating a plan for a specific project scenario (e.g., a new mobile app).

- Test Case Design & Execution: Mastering the main testing levels and types (unit, integration, system, regression, user acceptance testing) and designing effective test cases. Practical application: Discuss creating test cases for different testing types and applying them to different project contexts.

- Test Automation: Understanding the benefits and challenges of test automation, selecting appropriate automation tools, and developing automated test scripts. Practical application: Explain how to choose automation tools based on project needs.

- Defect Tracking & Reporting: Effectively tracking and reporting defects using bug tracking systems. Practical application: Discuss clear and concise defect reporting techniques, prioritizing critical bugs.

- Metrics & Reporting: Understanding key QA metrics (e.g., defect density, test coverage) and creating insightful reports to communicate QA progress and quality status to stakeholders. Practical application: Show how to interpret QA metrics and use them to improve the QA process.

- Software Testing Methodologies: Understanding different methodologies (Agile, Waterfall) and adapting QA processes accordingly.

- Risk Management in QA: Identifying and mitigating risks that could impact the quality of the software product.

Next Steps

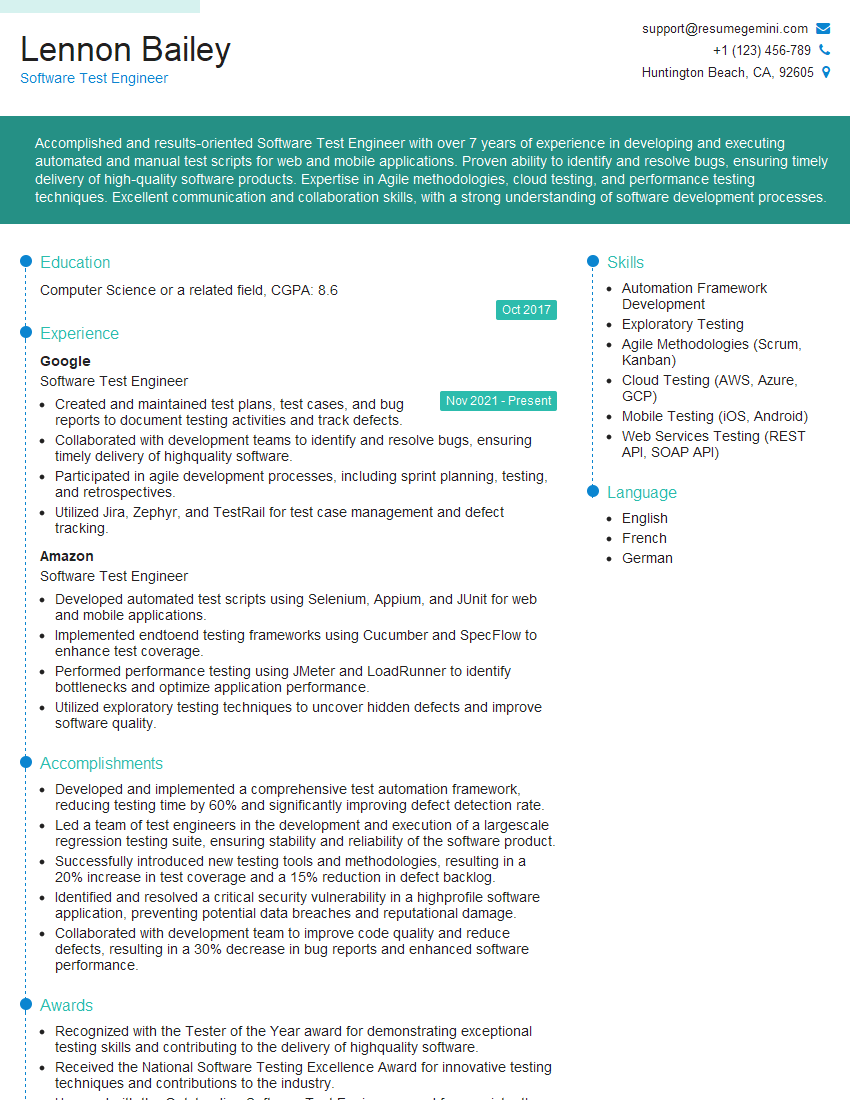

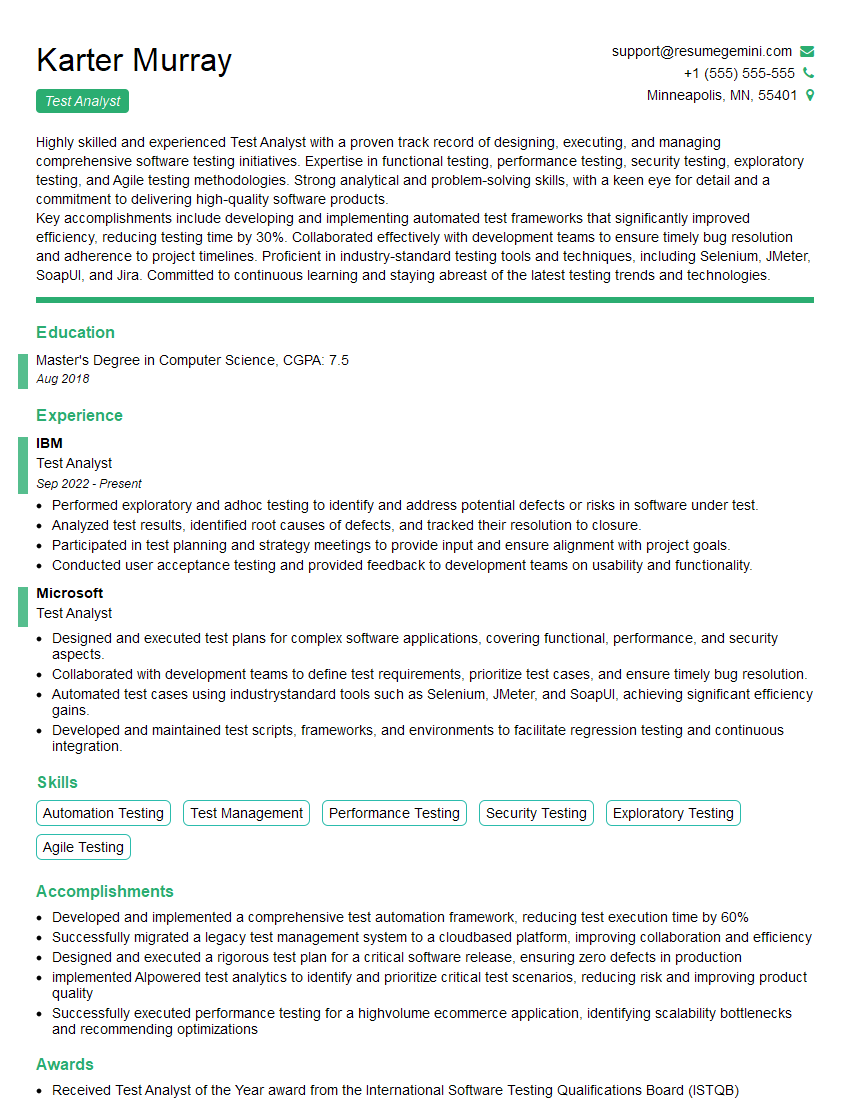

Mastering the development and implementation of quality assurance plans is crucial for career advancement in the tech industry. Strong QA skills are highly sought after, leading to increased job opportunities and higher earning potential. To maximize your job prospects, create a compelling and ATS-friendly resume that highlights your expertise. ResumeGemini is a trusted resource that can help you build a professional resume that showcases your skills and experience effectively. Examples of resumes tailored to “Develop and implement quality assurance plans” roles are available to guide you. Take the next step towards your dream career!
