Interviews are more than just a Q&A session: they’re a chance to prove your worth. This blog dives into essential genotyping interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Genotyping Interview

Q 1. Explain the difference between genotyping and sequencing.

Genotyping and sequencing are both crucial techniques in genomics, but they differ significantly in their scope and information yield. Think of it like this: sequencing is reading the entire book (the genome), while genotyping is checking specific words or phrases (specific genetic variations).

Genotyping focuses on determining the presence or absence of specific genetic variations, most commonly single nucleotide polymorphisms (SNPs), at known locations in the genome. It identifies which variant (allele) an individual possesses at those specific sites. This approach is targeted and cost-effective for large-scale studies.

Sequencing, on the other hand, determines the order of nucleotides (A, T, C, G) in a DNA or RNA molecule. It provides a comprehensive view of the entire genome or a specific region, revealing both known and novel variations, including SNPs, insertions, deletions, and (depending on read length and coverage) larger structural changes. This is a more powerful but also more expensive and time-consuming approach.

For example, imagine you want to know if someone carries a specific gene variant associated with a disease. Genotyping would directly test for the presence or absence of that variant. Sequencing the whole genome would reveal the variant plus millions of other variations (a typical human genome differs from the reference at roughly 4 to 5 million sites), most of which are irrelevant to the initial query.

Q 2. Describe various genotyping platforms and their applications (e.g., SNP arrays, PCR, NGS).

Several platforms are used for genotyping, each with its strengths and weaknesses. The choice depends on the study design, budget, and the type of information needed.

- SNP arrays: These microarrays utilize thousands or millions of probes designed to target specific SNPs. They are highly multiplexed, allowing the simultaneous genotyping of many SNPs in a single sample. This is ideal for genome-wide association studies (GWAS) investigating the genetic basis of complex traits. They are relatively high-throughput and cost-effective but are limited to the pre-defined SNPs on the array.

- PCR-based methods: Polymerase chain reaction (PCR) can be used for genotyping specific SNPs or other genetic variations through techniques like allele-specific PCR (AS-PCR) or real-time PCR (qPCR). These are very flexible and can be tailored to target specific regions of interest. PCR is less expensive than arrays for small-scale studies; however, it typically assesses fewer variations at once than arrays or NGS.

- Next-Generation Sequencing (NGS): NGS provides a comprehensive approach to genotyping, allowing the detection of known and novel variations across the entire genome or targeted regions. While more expensive than arrays or PCR, NGS offers unparalleled resolution and the ability to discover new genetic variants. It is the method of choice for studies requiring high accuracy and comprehensive genomic information.

For instance, a GWAS investigating the genetic basis of type 2 diabetes might utilize SNP arrays to assess hundreds of thousands of SNPs across thousands of individuals. Conversely, if you are studying a rare genetic disorder suspected to be caused by a novel mutation, NGS would likely be preferred.

Q 3. What are the key steps involved in a typical genotyping workflow?

A typical genotyping workflow involves several key steps:

- DNA extraction: High-quality genomic DNA is extracted from biological samples (blood, saliva, tissue, etc.). The purity and concentration of the extracted DNA are crucial for downstream analyses.

- Genotyping assay design (if necessary): If using PCR-based methods or designing custom SNP arrays, specific primers or probes targeting the regions of interest are designed and synthesized.

- Genotyping assay performance: Samples are processed according to the chosen platform’s protocol. This can involve hybridization to SNP arrays, PCR amplification, or library preparation for NGS.

- Data acquisition: The processed samples are analyzed using specialized equipment, such as a microarray scanner, real-time PCR machine, or NGS sequencer. Raw data are generated, representing the signal intensities or sequence reads.

- Data analysis: The raw data are processed and analyzed using bioinformatics tools to convert signal intensities into genotype calls (e.g., AA, Aa, aa) or to align sequence reads and identify variations. This stage usually includes quality control to identify and handle potential errors.

- Data interpretation: The genotype data is interpreted in the context of the research question. Statistical analyses are performed to identify associations between genotypes and phenotypes (observable characteristics).

Q 4. How do you ensure the quality and accuracy of genotyping data?

Ensuring high-quality and accurate genotyping data is paramount. Several strategies are employed:

- DNA quality control: Assess DNA purity and concentration before genotyping. Low-quality DNA can lead to inaccurate genotype calls.

- Assay validation: Before large-scale genotyping, validate the chosen assay using known control samples. This helps to identify potential biases or errors in the assay design or performance.

- Positive and negative controls: Include positive and negative controls in every genotyping run. This monitors the assay’s performance and helps to detect contamination or other issues.

- Data quality metrics: Utilize various metrics (call rate, cluster separation, heterozygosity) to assess the quality of the genotyping data. Samples or SNPs with low quality scores should be excluded from further analysis.

- Replicate samples: Include replicate samples in the analysis to assess the reproducibility of the genotyping results.

- Blind samples: In some cases, include blind samples of known genotypes to assess the accuracy of the genotyping calls without bias.

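For instance, consistently low call rates for a specific SNP might indicate a problem with the probe design or with the genomic region itself (e.g., repetitive sequence, copy-number variation, or a nearby variant interfering with probe binding).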
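As a concrete illustration of the call-rate metric above, here is a minimal Python sketch that filters samples and then SNPs by call rate. It assumes genotypes are coded as 0/1/2 copies of the alternate allele with `None` for a failed call; the function names and the default thresholds are hypothetical choices for illustration, not recommended cutoffs (real pipelines typically use dedicated tools such as PLINK).

```python
from typing import Optional

# Assumed coding: 0/1/2 = copies of the alternate allele, None = failed call.
Genotype = Optional[int]


def call_rate(calls: list[Genotype]) -> float:
    """Fraction of non-missing calls; 0.0 for an empty list."""
    if not calls:
        return 0.0
    return sum(g is not None for g in calls) / len(calls)


def qc_filter(matrix: list[list[Genotype]], min_sample_cr: float = 0.98,
              min_snp_cr: float = 0.95) -> tuple[list[int], list[int]]:
    """Return indices of samples (rows) and SNPs (columns) passing call-rate QC.

    Samples are filtered first; SNP call rates are then computed only on the
    retained samples. Thresholds are illustrative, not recommendations.
    """
    keep_samples = [i for i, row in enumerate(matrix)
                    if call_rate(row) >= min_sample_cr]
    keep_snps = [j for j in range(len(matrix[0]))
                 if call_rate([matrix[i][j] for i in keep_samples]) >= min_snp_cr]
    return keep_samples, keep_snps


# Hypothetical toy data: 5 samples x 4 SNPs.
genotypes = [
    [0, 1, 2, 1],
    [1, None, 2, 0],
    [2, 1, None, None],  # call rate 0.5 -> sample removed
    [0, None, 1, 1],
    [1, None, 0, 2],
]
print(qc_filter(genotypes, min_sample_cr=0.75, min_snp_cr=0.9))
# -> ([0, 1, 3, 4], [0, 2, 3]); SNP 1 is called in only 1 of 4 retained samples
```

Filtering samples before SNPs is one common convention; the order matters, because removing a poor sample can raise the call rates of the SNPs it failed on.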

Q 5. Explain the concept of Hardy-Weinberg equilibrium and its relevance to genotyping.

The Hardy-Weinberg equilibrium (HWE) principle describes the theoretical distribution of genotypes in a large, randomly mating population in the absence of evolutionary influences (mutation, selection, migration, genetic drift). It predicts the genotype frequencies based on the allele frequencies.

In a population at HWE, the genotype frequencies can be calculated using the following equation: p² + 2pq + q² = 1, where ‘p’ is the frequency of one allele and ‘q’ is the frequency of the other allele (p + q = 1). p² represents the frequency of the homozygous genotype for allele p, q² represents the frequency of the homozygous genotype for allele q, and 2pq represents the frequency of the heterozygous genotype.

HWE is relevant to genotyping because deviations from HWE at a specific locus can indicate factors such as population stratification, genotyping errors, or non-random mating (inbreeding). It serves as a crucial quality control check for genotyping data. Significant departures from HWE can necessitate further investigation, potentially identifying systematic errors in the dataset or highlighting biologically relevant factors influencing the population genetics.

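For example, if a specific SNP shows significant deviation from HWE among the controls of a case-control study, it might indicate that the genotyping results for that SNP are inaccurate or that the control group isn’t drawn from a single randomly mating population. HWE is usually tested in controls rather than cases, because a genuine disease association can itself cause deviation among cases.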
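The HWE check described above can be sketched as a chi-square goodness-of-fit test with one degree of freedom. This minimal Python version uses only the standard library (for 1 df, the chi-square tail probability equals `math.erfc(sqrt(x / 2))`); the function name and the example counts are hypothetical. For SNPs with low genotype counts an exact test is generally preferred, because the chi-square approximation becomes unreliable.

```python
import math


def hwe_chisq(n_AA: int, n_Aa: int, n_aa: int) -> tuple[float, float]:
    """Chi-square goodness-of-fit test for Hardy-Weinberg equilibrium.

    Returns (chi-square statistic, p-value) with 1 degree of freedom.
    Assumes both alleles are observed at least once.
    """
    n = n_AA + n_Aa + n_aa
    p = (2 * n_AA + n_Aa) / (2 * n)  # frequency of allele A
    q = 1 - p
    expected = [p * p * n, 2 * p * q * n, q * q * n]  # p^2, 2pq, q^2
    observed = [n_AA, n_Aa, n_aa]
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # Survival function of a chi-square variable with 1 df.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


print(hwe_chisq(360, 480, 160))  # counts matching HWE for p = 0.6
print(hwe_chisq(500, 200, 300))  # strong heterozygote deficit -> tiny p-value
```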

Q 6. Describe different types of SNPs and their significance in genotyping studies.

SNPs, or single nucleotide polymorphisms, are the most common type of genetic variation. They represent a single base-pair change in the DNA sequence compared to a reference genome. Different types of SNPs exist, classified by their location and functional impact:

- Synonymous SNPs (silent mutations): These SNPs don’t alter the amino acid sequence of the encoded protein due to the degeneracy of the genetic code. While not directly affecting protein function, they can still have indirect effects, for example, on mRNA stability or splicing.

- Non-synonymous SNPs (missense mutations): These SNPs cause a change in the amino acid sequence of the protein, potentially affecting its structure and function. Such changes can have significant consequences, leading to altered protein activity, stability, or interaction with other molecules. A classic example is the sickle cell anemia mutation.

- Nonsense SNPs: These SNPs introduce a premature stop codon, leading to the production of a truncated and often non-functional protein.

- Regulatory SNPs: These SNPs occur in regions that regulate gene expression (promoters, enhancers, silencers). They can alter the level of gene transcription or affect the binding of transcription factors, impacting protein production.

- Splice site SNPs: These SNPs are located at intron-exon boundaries (splice donor and acceptor sites), where they can disrupt mRNA splicing, potentially leading to altered protein isoforms or truncated proteins.

The significance of SNPs in genotyping studies depends on their type and location. Non-synonymous, nonsense, and regulatory SNPs are often more likely to have functional consequences and are, therefore, more frequently studied in disease association studies. Synonymous SNPs can sometimes serve as markers for linkage analysis. Studying the frequency and distribution of different SNP types helps in understanding the genetic architecture of complex traits and diseases.
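To make the coding-SNP categories above concrete, the following Python sketch classifies a single-base change within a codon using the standard genetic code. It handles only coding changes (regulatory and splice-site effects require genomic annotation tools), assumes the codon is given on the coding strand, and uses a hypothetical function name. The first example is the sickle cell change in codon 6 of HBB (GAG to GTG, glutamate to valine).

```python
# Standard genetic code in compact form: codons enumerated with bases in
# TCAG order at the first, second and third positions; '*' marks a stop codon.
BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    a + b + c: AMINO_ACIDS[16 * i + 4 * j + k]
    for i, a in enumerate(BASES)
    for j, b in enumerate(BASES)
    for k, c in enumerate(BASES)
}


def classify_coding_snp(ref_codon: str, position: int, alt_base: str) -> str:
    """Classify a single-base change at `position` (0, 1 or 2) of a codon."""
    alt_codon = ref_codon[:position] + alt_base + ref_codon[position + 1:]
    ref_aa, alt_aa = CODON_TABLE[ref_codon], CODON_TABLE[alt_codon]
    if ref_aa == alt_aa:
        return "synonymous"
    if ref_aa == "*":
        return "stop-loss"  # stop codon replaced by an amino acid
    if alt_aa == "*":
        return "nonsense"
    return "missense"


print(classify_coding_snp("GAG", 1, "T"))  # GAG (Glu) -> GTG (Val): missense
print(classify_coding_snp("GAG", 2, "A"))  # GAA still encodes Glu: synonymous
print(classify_coding_snp("TGG", 1, "A"))  # TGG (Trp) -> TAG (stop): nonsense
```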

Q 7. How do you handle missing data in genotyping analysis?

Missing data is a common issue in genotyping studies, arising from various reasons such as sample degradation, assay failures, or technical issues. Several methods exist for handling missing data:

- Exclusion: The simplest approach is to exclude samples or SNPs with a high percentage of missing data. This method is straightforward but can lead to a loss of information, especially if the missing data is not randomly distributed.

- Imputation: This method uses statistical algorithms to predict missing genotypes from the observed genotypes at nearby SNPs (and, in family data, in related individuals), typically by comparing each sample’s haplotypes with a reference panel of densely genotyped or sequenced individuals. Imputation methods exploit linkage disequilibrium (correlation between SNPs) and population allele frequencies to infer missing data. Several software packages are available for imputation (e.g., Beagle, IMPUTE2). It preserves more information than simple exclusion but can introduce biases if the underlying assumptions are violated, for example when the reference panel is poorly matched to the ancestry of the study population.

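- Maximum likelihood estimation (MLE): MLE-based approaches (e.g., the expectation-maximization algorithm) estimate parameters or missing genotypes from the observed data under a probabilistic model. They are valid when data are missing at random; if missingness depends on the unobserved genotype itself (for example, one genotype failing more often than the others), the missingness mechanism must be modeled explicitly.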

The optimal method for handling missing data depends on the extent of missingness, the pattern of missingness, and the research question. It’s crucial to document and justify the approach used, and to be aware of potential biases introduced by the chosen method. For example, in a genome-wide association study with thousands of individuals and millions of SNPs, imputation is often preferred to avoid substantial loss of power by excluding SNPs or individuals due to missing data.
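The exclusion strategy and the simplest form of imputation can be combined in a few lines of Python. This sketch drops SNPs whose missing rate exceeds a threshold and fills the remaining gaps with the SNP’s mean dosage (twice the observed alternate-allele frequency). Note that this is plain mean imputation, a deliberately simple stand-in: it ignores LD, unlike the reference-panel methods implemented in Beagle or IMPUTE2. The function name, the 0/1/2 coding, and the default threshold are hypothetical.

```python
from typing import Optional


def filter_and_mean_impute(
    matrix: list[list[Optional[int]]], max_missing: float = 0.05
) -> tuple[list[int], list[list[float]]]:
    """Drop SNPs (columns) with too much missingness, then fill remaining
    gaps with each SNP's mean observed dosage.

    Returns the indices of retained SNPs and the imputed dosage matrix.
    """
    n_samples = len(matrix)
    kept: list[int] = []
    means: list[float] = []
    for j in range(len(matrix[0])):
        observed = [row[j] for row in matrix if row[j] is not None]
        if not observed or 1 - len(observed) / n_samples > max_missing:
            continue  # exclusion: too many missing calls at this SNP
        kept.append(j)
        means.append(sum(observed) / len(observed))
    imputed = [
        [float(row[j]) if row[j] is not None else m for j, m in zip(kept, means)]
        for row in matrix
    ]
    return kept, imputed


genotypes = [
    [0, None, 2],
    [1, None, 2],
    [2, 1, None],
    [1, None, 0],
]
kept, dosages = filter_and_mean_impute(genotypes, max_missing=0.3)
print(kept)     # -> [0, 2]; SNP 1 is 75% missing and is dropped
print(dosages)  # the missing call at SNP 2 is filled with 4/3
```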

Q 8. What are the common errors encountered during genotyping and how are they addressed?

Genotyping, while powerful, is susceptible to several errors. These can broadly be categorized into experimental errors and analytical errors. Experimental errors stem from the process itself, while analytical errors arise during data processing and interpretation.

- Allelic dropout: One allele fails to be detected, leading to a heterozygous genotype being miscalled as homozygous. This can be caused by suboptimal DNA quality, PCR bias, or limitations of the genotyping technology. Addressing this involves careful sample preparation, using high-quality DNA, optimizing PCR conditions, and employing robust genotyping platforms.

- Cross-contamination: DNA from one sample contaminates another, leading to inaccurate genotype calls (often spurious heterozygous calls). Rigorous laboratory practices, including physically separating pre- and post-PCR work areas, using filter tips, and handling samples one at a time, are essential to prevent this. Negative controls in the experimental design can also help detect contamination.

- Genotyping artifacts: These are errors introduced by the genotyping technology itself, such as preferential amplification of one allele, or issues with signal interpretation. Careful selection of appropriate genotyping platforms and stringent quality control measures during data processing are vital to address this.

- Genotyping errors during data processing: Errors can creep into the data during the automated genotype calling stage of analysis, where software assigns genotypes based on the raw signal intensities. This necessitates rigorous quality control steps and manual review of any ambiguous calls.

Addressing these errors requires a multi-faceted approach encompassing meticulous laboratory techniques, careful choice of technology, robust data analysis pipelines, and regular quality control checks. Often, a combination of strategies is necessary to minimize errors and increase the reliability of genotyping results.

Q 9. Explain the concept of linkage disequilibrium (LD) and its impact on genotyping studies.

Linkage disequilibrium (LD) describes the non-random association of alleles at different loci. If a particular allele at one locus is found together with a particular allele at a nearby locus more often than expected from their individual frequencies, the two loci are in LD. LD persists mainly because of physical proximity: the closer two loci are on a chromosome, the less likely they are to be separated by recombination during meiosis.

LD’s impact on genotyping studies is significant:

- Tag SNPs: LD allows us to use a smaller number of SNPs (single nucleotide polymorphisms) – known as tag SNPs – to infer the genotypes of nearby SNPs. This reduces genotyping costs and simplifies analysis without sacrificing much information. For instance, if SNPs A, B, and C are in strong LD, genotyping only A might provide sufficient information about B and C.

- Genome-wide association studies (GWAS): LD is what allows GWAS to detect disease-associated regions without genotyping every variant. A causal variant that is not directly genotyped can still be detected indirectly through a nearby tag SNP in LD with it, although the associated SNP is then not necessarily the causal one.

- Population stratification: Allele frequencies and LD patterns vary between populations. Failure to account for population structure can lead to spurious associations in GWAS: if subpopulations differ in both allele frequencies and disease prevalence, SNPs that merely mark ancestry will appear associated with the disease. Admixture can additionally create LD between distant loci.

Understanding and accounting for LD is crucial for accurate interpretation of genotyping data and effective experimental design in genomic studies.
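The strength of LD between two SNPs is usually summarized by D′ and r², both derived from D, the difference between the observed haplotype frequency and the frequency expected under independence. A minimal Python sketch follows, assuming haplotype and allele frequencies are already known (in practice they are estimated from phased or unphased genotypes); the function name is hypothetical. The last example shows why tag-SNP selection typically relies on r² rather than D′: two SNPs can have D′ = 1 yet r² well below 1 when their allele frequencies differ.

```python
def ld_stats(p_AB: float, p_A: float, p_B: float) -> tuple[float, float, float]:
    """Return (D, |D'|, r^2) for two biallelic loci.

    p_AB: frequency of haplotypes carrying allele A at locus 1 and B at locus 2.
    p_A, p_B: allele frequencies of A and B (assumed strictly between 0 and 1).
    """
    d = p_AB - p_A * p_B  # deviation from independence
    # D_max depends on the sign of D (Lewontin's normalization).
    if d >= 0:
        d_max = min(p_A * (1 - p_B), (1 - p_A) * p_B)
    else:
        d_max = min(p_A * p_B, (1 - p_A) * (1 - p_B))
    d_prime = abs(d) / d_max
    r2 = d * d / (p_A * (1 - p_A) * p_B * (1 - p_B))
    return d, d_prime, r2


print(ld_stats(0.5, 0.5, 0.5))   # perfect LD: D' = 1 and r^2 = 1
print(ld_stats(0.25, 0.5, 0.5))  # independence: D = 0
print(ld_stats(0.3, 0.3, 0.5))   # D' = 1 but r^2 = 3/7
```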

Q 10. Describe different methods for genotyping quality control.

Genotyping quality control is a critical step to ensure the accuracy and reliability of the results. It involves a series of checks performed at various stages, from sample preparation to data analysis.

- Sample QC: This involves assessing DNA quantity and quality (using metrics like A260/A280 ratio and DNA integrity), checking for contamination, and verifying sample identity. Samples failing these criteria are excluded from further analysis.

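- Genotype calling QC: This focuses on the accuracy of genotype calls. Metrics such as per-SNP call rate (the percentage of samples successfully genotyped at a SNP), per-sample call rate (the percentage of SNPs successfully genotyped in a sample), and minor allele frequency (MAF) are examined. SNPs with low call rates are often removed because they are likely unreliable, and very rare SNPs (low MAF) are often removed because cluster-based genotype calling is less accurate for them and they offer little statistical power in association tests.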

- Hardy-Weinberg equilibrium (HWE): This test checks whether genotype frequencies in a population match the expected frequencies under HWE, which assumes random mating and absence of selection. Significant deviations from HWE can indicate genotyping errors or population stratification.

- Mendelian error checks: For family-based studies, Mendelian inheritance patterns are checked. Inconsistencies suggest errors in genotyping or pedigree information.

- Relationship checks: Identity-by-descent (IBD) calculations and kinship coefficient estimations are used to verify reported relationships in family studies and, in studies of presumably unrelated individuals, to detect cryptic relatedness or sample duplication.

These QC steps are generally performed using specialized software packages. The thresholds for accepting or rejecting samples and SNPs are often customized based on the specific study and genotyping platform.
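The Mendelian error check mentioned above reduces to a simple rule for a biallelic SNP: the child must be able to inherit one allele from each parent. This Python sketch assumes 0/1/2 allele-dosage coding and complete trio genotypes; the function names are hypothetical. Some genotyping errors cannot be detected this way (when both parents are heterozygous, any child genotype is consistent), so Mendelian checks catch only part of the errors.

```python
from itertools import product


def mendelian_consistent(father: int, mother: int, child: int) -> bool:
    """Check one biallelic trio genotype (0/1/2 = copies of the alt allele)."""
    def alleles(g: int) -> tuple[int, int]:
        # 0 -> (0, 0), 1 -> (0, 1), 2 -> (1, 1); 1 denotes the alt allele
        return (0, 0) if g == 0 else (0, 1) if g == 1 else (1, 1)

    # Every child dosage obtainable from one paternal and one maternal allele.
    possible = {a + b for a, b in product(alleles(father), alleles(mother))}
    return child in possible


def count_mendel_errors(trios: list[tuple[int, int, int]]) -> int:
    """Count (father, mother, child) genotypes violating Mendelian inheritance."""
    return sum(not mendelian_consistent(f, m, c) for f, m, c in trios)


print(mendelian_consistent(0, 0, 1))  # child carries an allele neither parent has
print(count_mendel_errors([(0, 0, 1), (1, 1, 0), (2, 0, 2)]))
```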

Q 11. How do you interpret genotyping results and draw meaningful conclusions?

Interpreting genotyping results requires a systematic approach that involves both statistical analysis and biological context. Simple interpretation might involve examining allele and genotype frequencies for a particular SNP or across a set of SNPs.

- Frequency analysis: Comparing allele and genotype frequencies between different groups (e.g., cases vs. controls in a disease study) helps identify potential associations. Chi-squared tests or Fisher’s exact test can assess the significance of these differences.

- Association testing: Statistical tests like the chi-squared test, logistic regression, or linear regression are used to test for associations between genotypes and phenotypes (e.g., disease status, quantitative trait). This can reveal whether particular genotypes are associated with a greater risk of disease or variation in a quantitative trait.

- Visualization: Graphical representations like Manhattan plots (for GWAS), heatmaps, or population structure plots aid in the visual exploration of the data and identification of interesting patterns.

- Pathway and gene ontology analysis: If significant associations are identified, these SNPs can be mapped to genes and biological pathways to investigate their functional relevance. Tools like DAVID or GOseq can facilitate this.

- Replication studies: The findings of genotyping studies, particularly those related to disease association, should ideally be validated in independent replication cohorts to confirm their robustness and generalizability.

In summary, meaningful conclusions are drawn through a combination of statistical rigor, biological insight, and consideration of potential confounding factors.
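For the frequency analysis above, the simplest case-control test compares allele counts in a 2×2 table. The sketch below computes the allelic odds ratio and a Pearson chi-square test (1 df) in plain Python; the function name and the counts in the example (1,000 cases and 1,000 controls, i.e., 2,000 alleles per group) are hypothetical. The allelic test assumes HWE and does not adjust for covariates, which is why logistic regression is usually preferred in practice.

```python
import math


def allelic_test(case_alt: int, case_ref: int,
                 ctrl_alt: int, ctrl_ref: int) -> tuple[float, float, float]:
    """Allelic association test on a 2x2 table of allele counts.

    Returns (odds ratio, chi-square statistic, p-value with 1 df).
    Assumes all cells are non-zero; no continuity correction is applied.
    """
    table = [[case_alt, case_ref], [ctrl_alt, ctrl_ref]]
    n = sum(map(sum, table))
    row = [sum(r) for r in table]
    col = [case_alt + ctrl_alt, case_ref + ctrl_ref]
    chi2 = sum(
        (table[i][j] - row[i] * col[j] / n) ** 2 / (row[i] * col[j] / n)
        for i in range(2) for j in range(2)
    )
    odds_ratio = (case_alt * ctrl_ref) / (case_ref * ctrl_alt)
    p_value = math.erfc(math.sqrt(chi2 / 2))  # chi-square tail, 1 df
    return odds_ratio, chi2, p_value


# Hypothetical counts: alt allele frequency 0.30 in cases vs 0.25 in controls.
print(allelic_test(600, 1400, 500, 1500))
```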

Q 12. Explain the role of bioinformatics in genotyping data analysis.

Bioinformatics plays a pivotal role throughout the genotyping process, from data acquisition to interpretation. It provides the computational tools and infrastructure necessary for managing, analyzing, and visualizing the massive datasets generated by genotyping experiments.

- Data preprocessing: Bioinformatics tools handle the initial steps of data cleaning, filtering low-quality SNPs, and imputing missing genotypes.

- Quality control: As mentioned earlier, bioinformatics tools perform QC checks such as HWE tests, Mendelian error checking, and relatedness estimation.

- Genotype calling: Software packages use algorithms to interpret raw signal intensities from genotyping platforms and assign genotypes.

- Statistical analysis: Bioinformatics tools perform association testing, population stratification analysis, and other statistical analyses to identify significant associations between genotypes and phenotypes.

- Data visualization: Producing informative graphs (Manhattan plots, heatmaps, etc.) relies heavily on bioinformatics tools.

- Annotation and interpretation: Tools help to annotate identified SNPs with functional information, such as gene location, predicted effect, and pathway involvement.

Without bioinformatics, handling the sheer volume and complexity of genotyping data would be impractical. It has democratized genomic research by providing researchers with the means to analyze data that would otherwise be intractable.
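As an illustration of the genotype-calling step, here is a minimal Python sketch that converts two normalized allele intensities into a call, using a polar transform similar to the theta/R coordinates shown in array cluster plots. The fixed cutoffs and the function name are hypothetical: real calling software fits clusters for each SNP rather than applying global thresholds. Calls are labeled AA/AB/BB, the array convention equivalent to AA/Aa/aa.

```python
import math


def call_genotype(x: float, y: float, min_r: float = 0.2,
                  aa_max: float = 0.2, bb_min: float = 0.8) -> str:
    """Call a biallelic genotype from normalized intensities x (allele A) and y (allele B).

    theta = (2/pi) * atan2(y, x) runs from 0 (all signal from A) to 1 (all from B);
    r = x + y is the total signal. All cutoffs are hypothetical fixed values.
    """
    r = x + y
    if r < min_r:
        return "NC"  # no call: total signal too weak to trust
    theta = (2 / math.pi) * math.atan2(y, x)
    if theta < aa_max:
        return "AA"
    if theta > bb_min:
        return "BB"
    return "AB"


for x, y in [(1.0, 0.05), (0.7, 0.7), (0.02, 1.1), (0.05, 0.05)]:
    print(x, y, call_genotype(x, y))
```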

Q 13. What statistical methods are commonly used for analyzing genotyping data?

Several statistical methods are commonly employed for analyzing genotyping data, depending on the research question and study design. These methods range from simple descriptive statistics to complex multivariate techniques.

- Chi-squared test and Fisher’s exact test: These tests are used to compare allele and genotype frequencies between groups (e.g., case-control studies).

- Linear regression and logistic regression: Linear regression is used for continuous traits (e.g., height, weight), while logistic regression is used for binary traits (e.g., disease status). These models assess the association between genotype and phenotype while adjusting for potential confounders (e.g., age, sex, or principal components of ancestry).

- ANOVA (Analysis of Variance): This test compares means of continuous traits across different genotypes.

- Mixed-effects models: These models are particularly useful in family-based studies or studies involving population structure, accounting for correlations between related individuals.

- Principal component analysis (PCA): This method is used to reduce the dimensionality of the genotype data and identify population structure.

- Genome-wide association studies (GWAS): These studies employ a variety of statistical methods to identify SNPs associated with complex traits. Because they test hundreds of thousands to millions of SNPs, they require corrections for multiple testing (e.g., Bonferroni correction or false discovery rate (FDR) control).

The choice of statistical method is critical for accurate interpretation of results and depends on the specifics of the research question and data structure.
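Because a GWAS runs hundreds of thousands to millions of tests, multiple-testing correction matters a great deal; the widely used genome-wide significance threshold of 5 × 10⁻⁸ corresponds to a Bonferroni correction of α = 0.05 for one million independent tests. The Python sketch below implements Bonferroni and the Benjamini-Hochberg FDR procedure; the function names and p-values are hypothetical.

```python
def bonferroni(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Reject H0 where p <= alpha / m (controls the family-wise error rate)."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg(p_values: list[float], q: float = 0.05) -> list[bool]:
    """Benjamini-Hochberg step-up procedure (controls the false discovery rate).

    Finds the largest rank k with p_(k) <= (k / m) * q and rejects the
    k smallest p-values.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * q:
            k_max = rank
    rejected = [False] * m
    for i in order[:k_max]:
        rejected[i] = True
    return rejected


ps = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
print(sum(bonferroni(ps)))          # only the smallest p-value survives
print(sum(benjamini_hochberg(ps)))  # FDR control is less conservative
```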

Q 14. Describe your experience with different genotyping software packages.

My experience encompasses a range of widely used genotyping software packages, each with its strengths and weaknesses. I’m proficient in using PLINK for association analysis, quality control, and population stratification analysis. PLINK’s command-line interface allows for efficient analysis of large datasets. I’ve extensively utilized GCTA for heritability estimation and genome-wide association analysis, in part because it handles related individuals effectively. Furthermore, I have experience with Haploview for visualizing linkage disequilibrium patterns and performing haplotype analysis. I’m also familiar with the R statistical environment and several packages within it (e.g., SNPRelate, qqman) that provide a flexible and powerful toolkit for genotype data manipulation, analysis, and visualization.

Beyond these, I have worked with proprietary software for specific genotyping platforms such as Illumina’s GenomeStudio. This familiarity allows me to navigate different data formats and interpret results from various technologies. My experience ensures a comprehensive understanding of different analytical approaches, enabling me to select the most appropriate tools for specific research questions.

Q 15. How do you validate genotyping results?

Validating genotyping results is crucial to ensure accuracy and reliability. It involves a multi-step process that confirms the genotypes called are correct and the data is of high quality. We use several approaches:

- Positive and Negative Controls: Including samples with known genotypes as positive controls and blank (no-template) samples as negative controls helps identify potential contamination or assay issues. Failure to obtain the expected genotype for a positive control indicates a problem with the assay itself, whereas any signal in a negative control suggests contamination.

- Replicate Measurements: Repeating the genotyping process on the same sample multiple times helps assess the reproducibility of the results. High concordance between replicates provides confidence in the data.

- Genotype Calling Consistency: We use rigorous quality control (QC) metrics to ensure consistency in genotype calling across different samples and batches. This includes checking for Mendelian inconsistencies (e.g., offspring having alleles not present in parents in family-based studies) and assessing call rates (percentage of successful genotype calls).

- Comparison with Independent Methods: If possible, comparing results from our primary genotyping method with a secondary, independent method (e.g., comparing SNP array data with sequencing data for a subset of samples) provides a powerful validation strategy.

- Visual Inspection: We often visually inspect the raw data from the genotyping platform (e.g., cluster plots in Illumina BeadArray data) to identify potential outliers or artifacts that may lead to inaccurate genotype calls.

For example, in a recent project, we identified a batch effect that was causing inconsistent genotype calls. By carefully analyzing the raw data and implementing appropriate normalization procedures, we corrected the issue and obtained reliable results. Failing to validate results can lead to inaccurate conclusions in downstream analyses, like association studies.
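The replicate check described above can be quantified with a concordance rate. This Python sketch computes concordance between two replicates of the same sample and also counts heterozygous-versus-homozygous discordances, a pattern consistent with allelic dropout. It assumes 0/1/2 dosage coding with `None` for failed calls; the function names and data are hypothetical.

```python
from typing import Optional

Call = Optional[int]  # assumed 0/1/2 dosage coding; None = failed call


def concordance(rep1: list[Call], rep2: list[Call]) -> float:
    """Fraction of SNPs with identical calls in two replicates of one sample.

    SNPs missing in either replicate are skipped.
    """
    pairs = [(a, b) for a, b in zip(rep1, rep2) if a is not None and b is not None]
    if not pairs:
        raise ValueError("no SNPs were called in both replicates")
    return sum(a == b for a, b in pairs) / len(pairs)


def het_hom_discordances(rep1: list[Call], rep2: list[Call]) -> int:
    """Count SNPs called heterozygous in one replicate but homozygous in the other."""
    return sum(
        1 for a, b in zip(rep1, rep2)
        if a is not None and b is not None and a != b and 1 in (a, b)
    )


rep1 = [0, 1, 2, 1, None, 2]
rep2 = [0, 1, 2, 2, 1, 0]
print(concordance(rep1, rep2))           # 3 of 5 comparable SNPs agree
print(het_hom_discordances(rep1, rep2))  # one het/hom mismatch
```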

Q 16. Explain the difference between different genotyping technologies (e.g., TaqMan, Illumina, Affymetrix).

Different genotyping technologies offer varying levels of throughput, cost, and resolution. Here’s a comparison of three popular methods:

- TaqMan: This is a real-time PCR-based method that utilizes allele-specific probes to detect variations at specific single nucleotide polymorphisms (SNPs). It’s relatively low-throughput, ideal for validating SNPs or genotyping a smaller number of samples, and is known for its high accuracy and reproducibility. It’s particularly useful for targeted genotyping.

- Illumina BeadArrays (e.g., Infinium): This technology uses high-density microarrays to genotype thousands to millions of SNPs simultaneously. It offers high throughput and is cost-effective for large-scale studies. However, it relies on hybridization and might not be as accurate as other methods for certain SNPs. We use this frequently for genome-wide association studies (GWAS).

- Affymetrix Arrays: Similar to Illumina arrays, Affymetrix (now part of Thermo Fisher Scientific) arrays genotype many SNPs concurrently by hybridization. Both array technologies are increasingly complemented or replaced by next-generation sequencing (NGS), which is not limited to predefined SNPs and whose costs continue to fall, although arrays generally remain cheaper per sample for very large studies.

The choice of technology depends on the specific research question, budget, and the number of samples. For instance, if you need to genotype a small number of samples for a specific set of SNPs, TaqMan might be the appropriate choice. For large-scale GWAS, Illumina BeadArrays are more suitable. Next-generation sequencing (NGS) is increasingly becoming the preferred method, offering the highest resolution and flexibility, but it’s more expensive.

Q 17. Discuss the ethical considerations related to genotyping.

Genotyping raises several ethical considerations:

- Informed Consent: Participants must provide informed consent, fully understanding the purpose of the study, the potential benefits and risks, and how their data will be used and protected. This is particularly important considering the potential implications of genetic information.

- Data Privacy and Security: Genetic data is highly sensitive and requires robust security measures to prevent unauthorized access and breaches. Because a genome is inherently identifying, genetic data can never be fully anonymized, so strict de-identification, access control, and ethical data handling procedures must be in place.

- Genetic Discrimination: There’s a risk of genetic discrimination based on genotyping results, for example, in employment or insurance. Legislation and policies are crucial to prevent such discrimination.

- Incidental Findings: Genotyping may reveal unexpected health information (incidental findings) that the participant wasn’t aware of. Clear guidelines are needed for disclosing and managing such findings, ensuring the individual’s emotional and psychological well-being.

- Return of Results: Researchers should have a clear plan regarding whether and how to return the results to participants, including considerations of the potential impact of those results and access to genetic counseling.

For example, we recently encountered a situation where a participant’s genotyping revealed a predisposition to a serious condition. We carefully followed established protocols to inform the participant, ensure appropriate genetic counseling was offered, and safeguard their privacy. Ethical considerations must be a cornerstone of any genotyping project.

Q 18. How would you approach troubleshooting a genotyping assay that is not performing well?

Troubleshooting a poorly performing genotyping assay requires a systematic approach. Here’s a step-by-step strategy:

- Review Experimental Protocol: Start by carefully checking the entire protocol for errors. Did you follow all steps precisely? Verify reagent concentrations, temperatures, incubation times, and pipetting accuracy. Even small errors can have a huge effect.

- Examine Sample Quality: Assess the quality of your DNA samples. Degradation, low DNA concentration, or contamination can significantly impact the results. Run a quality control (QC) check using standard methods such as spectrophotometry or electrophoresis.

- Check Reagents: Verify the integrity and functionality of all reagents, including primers, probes, and master mixes. Ensure proper storage conditions have been maintained. Use positive and negative controls, as mentioned earlier.

- Troubleshooting Specific Technologies: Depending on the technology used (TaqMan, Illumina, etc.), specific troubleshooting steps may apply. For instance, check cluster plots in Illumina data or amplification curves in TaqMan. Consult relevant documentation for the platform.

- Optimize Assay Conditions: If problems persist, optimizing reaction conditions may be necessary. This might involve adjusting magnesium concentration, annealing temperature, or extension time. Iterative optimization steps may be needed.

- Repeat the Assay: If all else fails, repeat the entire assay with fresh reagents and samples to rule out random errors.

In a recent project, we identified poor-quality DNA as the cause of suboptimal genotyping results. After improving our DNA extraction procedures and implementing stricter QC measures, we dramatically improved the assay’s performance. A systematic approach is key to efficient troubleshooting.

Q 19. Describe your experience with data normalization in genotyping.

Data normalization in genotyping is crucial to remove systematic biases and ensure comparability across samples and different batches. This is important because factors such as differences in DNA concentration, amplification efficiency, or batch effects can introduce variation that masks the true biological signal. Common normalization techniques include:

- Quantile Normalization: This approach transforms the data so that the distribution of intensity values is the same across all samples. This is particularly useful for correcting batch effects and making samples more comparable.

- Rank-Invariant Normalization: This method identifies a set of probes whose intensity ranks are stable across samples and uses them as a reference to normalize the remaining probes. Because the reference set excludes probes whose ranks shift, the method is relatively robust to noise and outliers.

- Background Correction: Many platforms measure background signal intensity, which needs to be corrected to improve signal-to-noise ratio. Various methods, depending on the platform, are employed for this step.

- Within-Array Normalization: This normalizes data within a single array to correct for inconsistencies caused by differences in probe intensity. The normalization factors are determined by the median, mean, or other summaries of the data.

We use these techniques in combination or separately, depending on the specific genotyping platform and study design. The choice of normalization method has a significant impact on the downstream analyses and interpretation of the results, potentially affecting the identification of significant associations in studies such as GWAS.
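Quantile normalization, the first technique listed, is simple enough to sketch directly. The following minimal pure-Python version assumes each sample is a list of probe intensities of equal length. Ties are broken by position rather than averaged, which is a simplification compared with many published implementations. The function name is illustrative.

```python
from statistics import mean


def quantile_normalize(samples):
    """Quantile-normalize a list of samples (each a list of probe intensities).

    Every sample ends up with the same distribution: the mean, across samples,
    of the k-th smallest value, placed back in each sample's original rank order.
    """
    n = len(samples[0])
    if any(len(sample) != n for sample in samples):
        raise ValueError("all samples must have the same number of probes")
    # Reference distribution: mean of the k-th smallest value across samples
    sorted_samples = [sorted(sample) for sample in samples]
    reference = [mean(s[k] for s in sorted_samples) for k in range(n)]
    normalized = []
    for sample in samples:
        # Probe indices ordered from smallest to largest intensity (stable sort)
        order = sorted(range(n), key=lambda i: sample[i])
        out = [0.0] * n
        for rank, probe in enumerate(order):
            out[probe] = reference[rank]
        normalized.append(out)
    return normalized


samples = [[5, 2, 3, 4], [4, 1, 4, 2], [3, 4, 6, 8]]
for row in quantile_normalize(samples):
    print([round(v, 3) for v in row])
```

After normalization, each sample contains exactly the same set of values (here 2, 3, 4.667 and 5.667), differing only in which probe holds which value.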

Q 20. How do you handle population stratification in genotyping studies?

Population stratification refers to the presence of subgroups within a study sample that differ in allele frequencies, often because of different ancestry. If these subgroups also differ in trait prevalence, ancestry can confound association studies and produce false-positive or false-negative results. To address this, we use several strategies:

- Principal Component Analysis (PCA): PCA is a dimensionality reduction technique that can identify major sources of variation in the data, often reflecting population structure. We include the top principal components as covariates in our statistical analyses to adjust for population stratification.

- Structure: Software packages like STRUCTURE can infer population structure using genotype data, allowing us to identify distinct subgroups and account for this structure in our analysis. This enables us to separate genetic variation due to population structure from that due to disease susceptibility.

- Linear Mixed Models (LMMs): LMMs are statistical models that can explicitly account for kinship relationships between individuals and population structure, leading to more accurate association tests. These models are particularly important in association studies of related individuals.

- Matching: We can carefully design studies by matching cases and controls for ancestry, minimizing population stratification.

For example, in a recent GWAS, we used PCA to identify population substructure within our study sample. Adjusting for the top principal components in our association analysis reduced the risk of false-positive associations due to population stratification and gave more robust results.
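The PCA step can be sketched in a few lines. The following minimal pure-Python sketch estimates the first principal component by power iteration on a mean-centered matrix of allele dosages (0, 1 or 2 copies per SNP). This is a simplification. Tools such as PLINK or EIGENSOFT commonly also standardize each SNP, use LD-pruned markers, and compute several components. The function name and toy data are illustrative.

```python
import random


def top_pc_scores(dosages, iterations=100, seed=0):
    """First principal-component score per individual via power iteration.

    dosages: list of individuals, each a list of 0/1/2 allele counts per SNP.
    """
    n, m = len(dosages), len(dosages[0])
    # Mean-center each SNP column
    means = [sum(row[j] for row in dosages) / n for j in range(m)]
    centered = [[row[j] - means[j] for j in range(m)] for row in dosages]
    rng = random.Random(seed)
    loadings = [rng.random() for _ in range(m)]  # random starting SNP weights
    for _ in range(iterations):
        # scores = X v, then v = X^T scores, normalized to unit length
        scores = [sum(x * w for x, w in zip(row, loadings)) for row in centered]
        loadings = [sum(centered[i][j] * scores[i] for i in range(n)) for j in range(m)]
        norm = sum(w * w for w in loadings) ** 0.5
        if norm == 0:
            raise ValueError("no variation along the current direction")
        loadings = [w / norm for w in loadings]
    return [sum(x * w for x, w in zip(row, loadings)) for row in centered]


# Two toy "populations" with opposite genotypes at four SNPs
data = [[2, 2, 0, 0] for _ in range(3)] + [[0, 0, 2, 2] for _ in range(3)]
print([round(s, 3) for s in top_pc_scores(data)])
```

The sign of a principal component is arbitrary, so only the separation between groups matters. The resulting scores would then be added as covariates in the association model.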

Q 21. Explain the concept of genome-wide association studies (GWAS) and their relationship to genotyping.

Genome-wide association studies (GWAS) aim to identify genetic variants associated with complex traits or diseases. Genotyping plays a central role in GWAS. In essence, GWAS relies on genotyping a large number of individuals to identify single nucleotide polymorphisms (SNPs) whose frequencies differ significantly between individuals with and without the trait of interest.

The process typically involves:

- Genotyping: Genotyping a large cohort of individuals (cases and controls) using high-throughput technologies like Illumina BeadArrays or NGS.

- Quality Control: Rigorous QC procedures to ensure high-quality genotype data.

- Association Testing: Statistical tests (e.g., chi-squared tests or logistic regression) to assess the association between each SNP and the trait. Regression-based tests can include covariates such as age, sex, and principal components to adjust for confounding and population stratification.

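- Multiple Testing Correction: Adjusting for the very large number of tests performed, often with the Bonferroni correction or by controlling the false discovery rate (FDR). The conventional genome-wide threshold of p < 5 × 10⁻⁸ corresponds to a Bonferroni correction of α = 0.05 for about one million independent tests.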

- Replication: Replicating findings in independent datasets to confirm the validity of the associations.

GWAS has successfully identified many genetic variants associated with complex diseases, such as type 2 diabetes, heart disease, and various cancers. GWAS and genotyping are inseparable. Without accurate, high-throughput genotyping, GWAS would not be feasible, and genotype data are the foundation of every GWAS analysis. We routinely use GWAS to investigate the genetics of various complex diseases and traits.
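The association-testing and multiple-testing steps can be sketched for a single SNP. This minimal example assumes a case-control design summarized as a 2×2 table of allele counts and applies Pearson's chi-squared test without continuity correction. The test does not adjust for covariates; that adjustment is usually done with logistic regression. The function names are illustrative.

```python
import math


def allelic_chi2(case_alleles, control_alleles):
    """Pearson chi-squared test (1 df, no continuity correction) on allele counts.

    case_alleles / control_alleles: (count of allele A, count of allele B).
    Returns (statistic, p_value).
    """
    table = [list(case_alleles), list(control_alleles)]
    total = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("a row or column of the table is empty")
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            stat += (table[i][j] - expected) ** 2 / expected
    # Survival function of chi-squared with 1 df: P(X > x) = erfc(sqrt(x / 2))
    return stat, math.erfc(math.sqrt(stat / 2))


def bonferroni_threshold(alpha, n_tests):
    """Per-test significance level that controls the family-wise error rate at alpha."""
    return alpha / n_tests


def benjamini_hochberg(p_values, q=0.05):
    """Indices of tests declared significant at false discovery rate q (BH step-up)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    n_rejected = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            n_rejected = rank  # keep the largest rank that passes
    return sorted(order[:n_rejected])


stat, p = allelic_chi2((60, 40), (40, 60))
print(round(stat, 2), p)
print(bonferroni_threshold(0.05, 1_000_000))
```

With about one million tests, the Bonferroni threshold is 0.05 / 1,000,000 = 5 × 10⁻⁸, the genome-wide significance level used when reading Manhattan plots.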

Q 22. How do you interpret Manhattan plots from GWAS?

Manhattan plots are a powerful visualization tool used in Genome-Wide Association Studies (GWAS) to display the results of association testing between genetic variants and a trait of interest (e.g., disease susceptibility). They plot the negative logarithm of the p-value (often -log10(p)) for each single nucleotide polymorphism (SNP) against its chromosomal location. The x-axis represents the chromosome and genomic position, while the y-axis represents the significance of the association.

Interpreting a Manhattan plot involves looking for SNPs that stand out from the background. These appear as peaks or points above the horizontal line marking the genome-wide significance threshold (typically p < 5 × 10⁻⁸, i.e. −log10(p) > 7.3). Peak height reflects the strength of the statistical evidence for association (a smaller p-value). Because that evidence depends on sample size and allele frequency as well as effect size, a higher peak does not necessarily mean a larger biological effect.

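For example, imagine a plot showing a tall peak on chromosome 19. This would suggest a strong association between one or more SNPs in that region of chromosome 19 and the trait under investigation. Because neighboring SNPs are often in linkage disequilibrium, a peak usually contains many correlated SNPs, and the SNP with the smallest p-value is not necessarily the causal variant. You would then investigate the region further to understand its functional role and potential implications.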

It’s crucial to remember that Manhattan plots are merely a first step. Identifying SNPs above the threshold needs to be followed by replication studies, functional validation, and further investigation to confirm the associations and understand the underlying biological mechanisms.
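The coordinates behind a Manhattan plot can be sketched without a plotting library. This minimal example assumes results arrive as (chromosome, position, p-value) tuples with integer chromosome numbers and p-values above zero. It lays chromosomes end to end using the largest observed position on each as its span, a simplification, since real plots usually use chromosome lengths. The function names are illustrative.

```python
import math

GENOME_WIDE_P = 5e-8


def manhattan_points(results):
    """Convert (chromosome, position, p_value) tuples into (chrom, x, y) points.

    x is the position plus an offset for all preceding chromosomes;
    y is -log10(p), so smaller p-values plot higher.
    """
    max_pos = {}
    for chrom, pos, _ in results:
        max_pos[chrom] = max(max_pos.get(chrom, 0), pos)
    offsets, running = {}, 0
    for chrom in sorted(max_pos):
        offsets[chrom] = running
        running += max_pos[chrom]
    return [(chrom, offsets[chrom] + pos, -math.log10(p)) for chrom, pos, p in results]


def genome_wide_hits(points, p_threshold=GENOME_WIDE_P):
    """Points that rise above the genome-wide significance line."""
    y_threshold = -math.log10(p_threshold)  # about 7.3 for 5e-8
    return [pt for pt in points if pt[2] > y_threshold]


results = [(1, 100, 0.5), (1, 200, 1e-9), (2, 50, 1e-3), (19, 10, 1e-12)]
print(genome_wide_hits(manhattan_points(results)))
```

Here the variants on chromosomes 1 and 19 clear the threshold, while the p = 10⁻³ variant on chromosome 2 does not.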

Q 23. Describe your experience with different genotyping applications (e.g., disease diagnostics, forensic science, agriculture).

My experience with genotyping applications spans diverse fields. In disease diagnostics, I’ve worked extensively on projects using genotyping to identify genetic predispositions to various diseases, including cancers and cardiovascular diseases. We utilized SNP arrays to identify specific genetic variants associated with increased risk. This information helps in personalized medicine, allowing for tailored preventative measures or treatment strategies based on an individual’s genetic profile.

In forensic science, I’ve applied genotyping techniques for DNA profiling and identification, leveraging STR (short tandem repeat) analysis to distinguish individuals. This involves analyzing the variation in the length of repeated DNA sequences at specific loci, crucial for forensic casework, paternity testing, and missing person investigations. The accuracy and reliability of these techniques are paramount in the justice system.

Within agriculture, I’ve been involved in projects using genotyping to improve crop yields and disease resistance. We employed techniques like genotyping-by-sequencing (GBS) to identify desirable genetic variations that enhance the traits of interest. These findings support the development of more resilient and productive crop varieties and, ultimately, food security.

Q 24. Explain the principles of PCR-based genotyping methods.

PCR-based genotyping methods use the polymerase chain reaction (PCR) to amplify specific DNA regions containing the genetic variations of interest. Amplification makes detection of these variations both sensitive and precise. Several common approaches exist:

- Allele-specific PCR (AS-PCR): This method uses primers whose 3′ end matches only one allele of a SNP, so efficient amplification occurs only when that allele is present. With one reaction per allele, the presence or absence of each PCR product indicates the genotype.

- Real-time PCR (qPCR): qPCR monitors the accumulation of PCR product in real time. Using allele-specific fluorescent probes (e.g., TaqMan) or melting curve analysis, genotypes are determined from the relative signal produced by each allele.

- High-Resolution Melting (HRM): HRM analyzes the melting profiles of PCR products. Different genotypes exhibit distinct melting curves due to variations in DNA sequence and base composition.

The principles are based on the specificity of PCR primers and the detection of amplification products. These methods are cost-effective and relatively easy to implement, making them widely used for genotyping applications in various fields.
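Endpoint allelic discrimination (as in probe-based qPCR assays) comes down to comparing two allele-specific signals. This minimal sketch assumes background-corrected signals for allele A and allele B. The thresholds are hypothetical placeholders: vendor software typically calls genotypes by clustering samples on a scatter plot with controls, not by fixed cutoffs.

```python
def call_genotype(signal_a, signal_b, min_total=1.0, homozygous_fraction=0.8):
    """Call a biallelic SNP genotype from two allele-specific signals.

    signal_a / signal_b: background-corrected signals from the probe or
    primer specific to allele A and allele B. Default thresholds are
    hypothetical and would be set per assay from controls.
    """
    total = signal_a + signal_b
    if total < min_total:
        return "no call"  # too little amplification to call reliably
    fraction_a = signal_a / total
    if fraction_a >= homozygous_fraction:
        return "AA"
    if fraction_a <= 1 - homozygous_fraction:
        return "BB"
    return "AB"  # both alleles contribute substantial signal


for signals in [(5.0, 0.5), (0.4, 4.0), (2.0, 2.2), (0.2, 0.3)]:
    print(signals, call_genotype(*signals))
```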

Q 25. What are the limitations of current genotyping technologies?

Current genotyping technologies, despite their advancements, face several limitations:

- Cost: Whole-genome sequencing, while increasingly affordable, remains expensive, particularly for large-scale studies.

- Coverage and Resolution: Some technologies may not capture all genetic variations, leading to incomplete or biased information. Resolution may also be limited, especially in complex regions of the genome.

- Data Analysis: The sheer volume of data generated by high-throughput genotyping technologies requires sophisticated computational resources and bioinformatics expertise for analysis and interpretation.

- Ethical and Privacy Concerns: The sensitive nature of genetic data necessitates robust data security and privacy measures to prevent misuse and protect individual rights.

- Rare Variants: Detecting rare variants that may contribute to disease susceptibility can be challenging due to their low frequency in populations.

Overcoming these limitations involves continuous technological innovation, development of more efficient and cost-effective methods, and the establishment of robust ethical guidelines and data management practices.

Q 26. How can genotyping data be integrated with other omics data (e.g., transcriptomics, proteomics)?

Integrating genotyping data with other omics data, such as transcriptomics (gene expression), proteomics (protein levels), and metabolomics (metabolite levels), provides a more holistic understanding of biological processes and disease mechanisms. This integration is crucial for moving beyond simple genotype-phenotype associations and towards a systems biology approach.

For instance, we might identify a SNP associated with a disease through GWAS (genotyping). By integrating this information with transcriptomic data, we can investigate whether this SNP affects the expression levels of nearby genes. Furthermore, proteomic data might reveal how altered gene expression translates into changes in protein levels, and metabolomics might uncover changes in metabolite concentrations. This multi-omics approach allows us to build a much more comprehensive picture of the disease pathway and potential therapeutic targets.

This integration is typically accomplished through bioinformatics tools and statistical methods that can analyze and correlate data from different omics platforms. Techniques like pathway analysis, network analysis, and machine learning can be used to identify key interactions and patterns within the integrated dataset.
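The first integration step described above, testing whether a SNP affects the expression of a nearby gene, is often called an expression quantitative trait locus (eQTL) analysis. It can be sketched as a regression of expression on allele dosage. This minimal sketch assumes genotypes are coded as 0/1/2 copies of one allele and expression values are already normalized. Real analyses add covariates and test many SNP-gene pairs with multiple-testing correction. The function names are hypothetical.

```python
from math import sqrt
from statistics import mean


def _centered_sums(dosages, expression):
    """Return (Sxx, Syy, Sxy): centered sums of squares and cross-products."""
    if len(dosages) != len(expression):
        raise ValueError("dosages and expression must have the same length")
    mx, my = mean(dosages), mean(expression)
    sxx = sum((x - mx) ** 2 for x in dosages)
    syy = sum((y - my) ** 2 for y in expression)
    sxy = sum((x - mx) * (y - my) for x, y in zip(dosages, expression))
    return sxx, syy, sxy


def eqtl_slope(dosages, expression):
    """Least-squares change in expression per additional copy of the allele."""
    sxx, _, sxy = _centered_sums(dosages, expression)
    if sxx == 0:
        raise ValueError("SNP is monomorphic in this sample")
    return sxy / sxx


def pearson_r(dosages, expression):
    """Correlation between allele dosage and expression."""
    sxx, syy, sxy = _centered_sums(dosages, expression)
    return sxy / sqrt(sxx * syy)


dosages = [0, 0, 1, 1, 2, 2]
expression = [1.0, 1.2, 2.0, 2.2, 3.0, 3.2]
print(eqtl_slope(dosages, expression), pearson_r(dosages, expression))
```

In this toy data, each extra copy of the allele raises expression by about one unit.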

Q 27. Describe your experience with automation in genotyping workflows.

Automation plays a vital role in increasing throughput and efficiency in genotyping workflows. My experience includes working with automated liquid handling systems, robotic platforms for sample preparation, and high-throughput sequencing platforms. These systems significantly reduce manual handling, minimizing human error and increasing reproducibility.

For example, in SNP array-based genotyping, automated liquid handling robots precisely dispense reagents and transfer samples, ensuring consistent and accurate processing of hundreds or thousands of samples simultaneously. Similarly, automated sequencing platforms can process a large number of samples without manual intervention, drastically reducing turnaround time and enabling high-throughput analysis.

The use of Laboratory Information Management Systems (LIMS) for sample tracking and data management further enhances automation and data integrity. The integration of these automated systems is critical for managing the large-scale data generated by modern genotyping techniques, ensuring efficiency and reliability.

Q 28. How do you maintain data security and privacy when working with genotyping data?

Maintaining data security and privacy when handling genotyping data is of paramount importance due to its sensitive nature. This requires a multi-faceted approach:

- Data Encryption: Genotyping data should be encrypted both at rest and in transit to prevent unauthorized access, using strong, well-established encryption (e.g., AES for stored data and TLS for network transfers).

- Access Control: Strict access control measures are crucial, restricting access to authorized personnel only. Role-based access control ensures that individuals only have access to the data they need for their specific roles.

- Data Anonymization: De-identification techniques, such as removing personally identifiable information (PII), should be employed to protect the identity of individuals. However, anonymization is not foolproof. Genotype data are inherently identifying, so removing direct identifiers alone does not guarantee that individuals cannot be re-identified.

- Compliance with Regulations: Adherence to relevant data protection regulations, such as HIPAA (in the US) or GDPR (in Europe), is crucial. These regulations provide a framework for responsible handling of personal data.

- Secure Data Storage: Data should be stored in secure databases with robust security measures in place to prevent unauthorized access, modification, or deletion.
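Removing direct identifiers is often done by replacing sample IDs with pseudonyms. This minimal sketch uses a keyed hash (HMAC-SHA256 from Python's standard library) and assumes the secret key is stored separately from the genotype data. The function name and the 16-character truncation are illustrative choices. This is pseudonymization, not anonymization: anyone holding the key can re-link records, and the genotypes themselves remain identifying.

```python
import hashlib
import hmac


def pseudonymize(sample_id: str, secret_key: bytes) -> str:
    """Replace a sample identifier with a deterministic, keyed pseudonym.

    The same ID and key always give the same pseudonym, so records can still
    be joined across files; without the key the mapping cannot be recomputed.
    """
    digest = hmac.new(secret_key, sample_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]  # truncated hex digest, illustrative length


key = b"example-secret-key"  # in practice, load from a secrets manager
print(pseudonymize("PATIENT-001", key))
```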

Furthermore, ethical considerations should guide all aspects of data handling, including informed consent from participants and transparency about data usage.

Key Topics to Learn for Your Genotyping Interview

- Genotyping Technologies: Understand the principles and applications of various genotyping methods, including PCR-based assays (e.g., TaqMan, qPCR), microarray technology, and next-generation sequencing (NGS).

- Data Analysis and Interpretation: Master the skills to analyze genotyping data, including quality control, variant calling, and interpretation of results within the context of biological pathways and disease mechanisms. Practice interpreting different data formats (e.g., VCF, PLINK).

- SNPs and Genetic Variation: Develop a strong understanding of single nucleotide polymorphisms (SNPs), their impact on gene function, and their role in disease susceptibility and complex traits. Explore the concepts of linkage disequilibrium and haplotype analysis.

- Bioinformatics Tools and Databases: Familiarize yourself with commonly used bioinformatics software and databases for genotyping data analysis (e.g., BLAST, GATK, dbSNP). Be prepared to discuss your experience with relevant tools.

- Experimental Design and Validation: Be able to discuss the design of genotyping experiments, including sample selection, data normalization, and validation strategies. Understand the importance of proper controls and error mitigation.

- Ethical Considerations: Demonstrate awareness of ethical considerations related to genotyping, such as data privacy, informed consent, and potential biases in genetic testing and interpretation.

- Applications in Research and Medicine: Prepare to discuss the applications of genotyping across various fields, including disease diagnostics, pharmacogenomics, forensic science, and agricultural biotechnology. Be ready to give specific examples.
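As a practice exercise for the data-format topic above, this minimal sketch reads the genotype (GT) field of a VCF data line and converts each call to a count of non-reference alleles (0, 1 or 2), the additive coding many association tools use. It assumes a single, well-formed, tab-separated data line whose FORMAT column contains GT. The function names are illustrative, and real pipelines would use a dedicated VCF parser.

```python
def gt_to_dosage(gt):
    """Count non-reference alleles in a VCF GT value such as '0/1' or '1|1'.

    Phased ('|') and unphased ('/') separators are treated alike. Returns None
    if any allele is missing ('.'). At multi-allelic sites all non-reference
    alleles are counted together, a simplification.
    """
    alleles = gt.replace("|", "/").split("/")
    if "." in alleles:
        return None
    return sum(allele != "0" for allele in alleles)


def dosages_from_vcf_line(line):
    """Per-sample dosages from one tab-separated VCF data line."""
    fields = line.rstrip("\n").split("\t")
    format_keys = fields[8].split(":")  # column 9 is FORMAT
    gt_index = format_keys.index("GT")
    return [gt_to_dosage(sample.split(":")[gt_index]) for sample in fields[9:]]


line = "1\t12345\t.\tA\tG\t50\tPASS\t.\tGT:DP\t0/0:30\t0/1:35\t1|1:20\t./.:0"
print(dosages_from_vcf_line(line))
```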

Next Steps: Unlock Your Genotyping Career

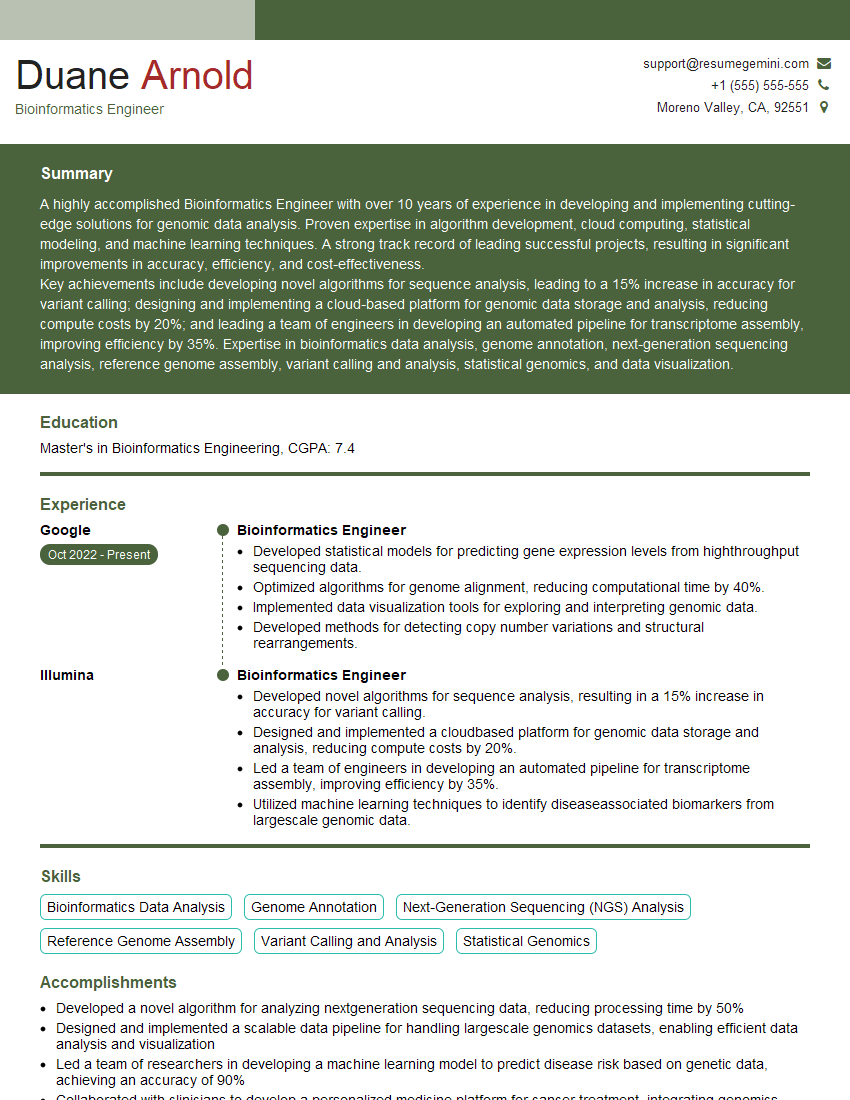

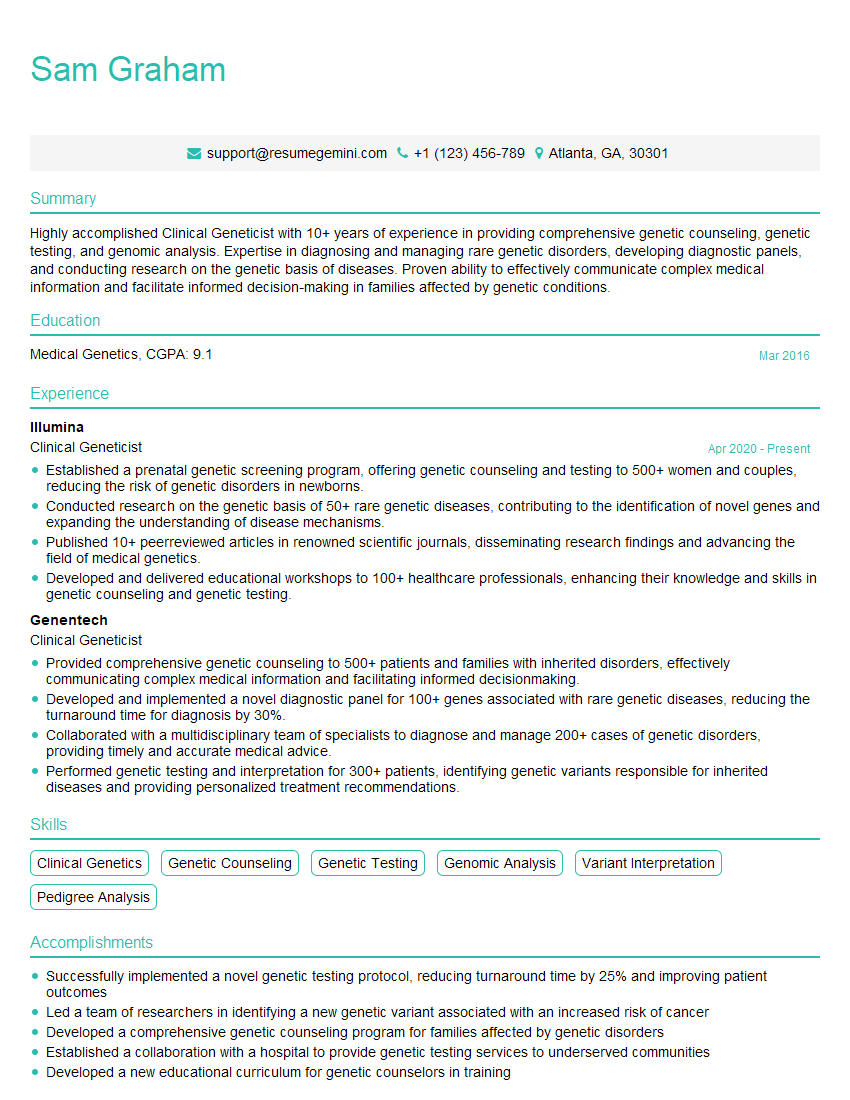

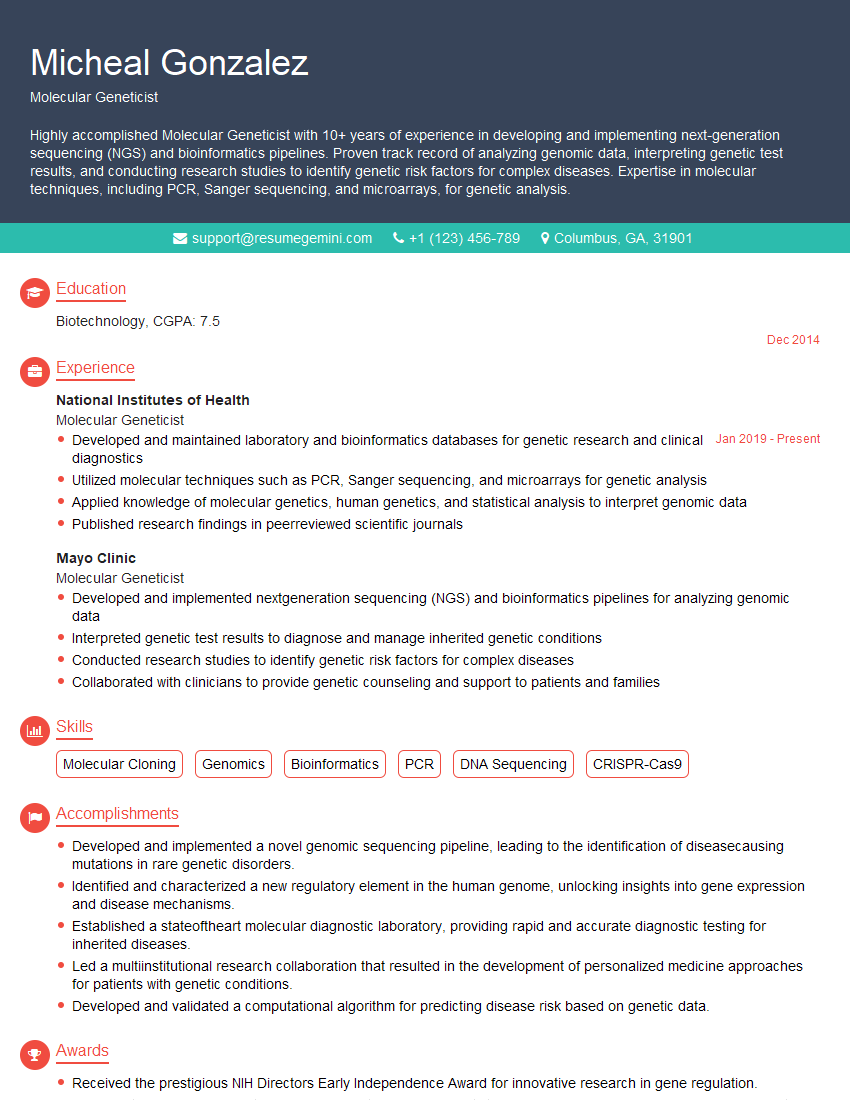

Mastering genotyping opens doors to exciting opportunities in cutting-edge research and healthcare. To maximize your chances of landing your dream role, invest time in crafting a compelling, ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource to help you build a professional and impactful resume. We provide examples of resumes tailored specifically to Genotyping roles, giving you a head start in presenting yourself as the ideal candidate. Take the next step towards your successful genotyping career today!
