Are you ready to stand out in your next interview? Understanding and preparing for GIS Mapping and Location Tracking interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in GIS Mapping and Location Tracking Interview

Q 1. Explain the difference between vector and raster data.

Vector and raster data are two fundamental ways of representing geographic information in GIS. Think of it like this: raster is a photograph, and vector is a drawing.

Raster data stores spatial data as a grid of cells, or pixels, each containing a value representing a characteristic, like temperature, elevation, or land cover. Imagine a satellite image; each pixel holds a color value representing the ground it covers. Raster data is excellent for representing continuous phenomena or images.

Vector data represents geographic features as points, lines, and polygons. A point might represent a tree, a line a road, and a polygon a building. Each feature has attributes associated with it. Vector data is precise and ideal for representing discrete objects with clearly defined boundaries. For example, a polygon might not only represent a building but include information about its address, square footage, and owner.

- Raster Advantages: Good for representing continuous surfaces, easy to visualize, and built on a simple grid structure that suits cell-by-cell analysis such as map algebra.

- Raster Disadvantages: Can be large in file size, less precise for discrete features.

- Vector Advantages: Precise representation, scalable, efficient storage for discrete features.

- Vector Disadvantages: Can become complex to manage with many features, can be less efficient for representing continuous surfaces.
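
To make the contrast concrete, here is a minimal sketch in plain Python of how the two models might be held in memory. The grid values, origin, cell size, and building attributes are all invented for illustration; real projects would normally rely on a GIS library rather than hand-rolled structures.

```python
# Raster: a grid of cell values; location is implied by row/column and cell size.
elevation_grid = [
    [120, 125, 130],
    [118, 122, 128],
    [115, 119, 124],
]
origin_x, origin_y = 500000.0, 4100000.0  # hypothetical upper-left corner (map units)
cell_size = 30.0                          # hypothetical cell size (map units)


def cell_value_at(x, y):
    """Return the raster value of the cell containing map coordinate (x, y)."""
    col = int((x - origin_x) // cell_size)
    row = int((origin_y - y) // cell_size)
    if not (0 <= row < len(elevation_grid) and 0 <= col < len(elevation_grid[0])):
        raise ValueError("coordinate falls outside the raster extent")
    return elevation_grid[row][col]


# Vector: explicit geometry plus a table of attributes for each feature.
building = {
    "geometry": {
        "type": "Polygon",
        "coordinates": [[(0, 0), (20, 0), (20, 10), (0, 10), (0, 0)]],
    },
    "attributes": {"address": "1 Example St", "owner": "Hypothetical Owner"},
}


def polygon_area(ring):
    """Planar area of a closed ring using the shoelace formula."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(ring, ring[1:]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


print(cell_value_at(500045.0, 4099985.0))
print(polygon_area(building["geometry"]["coordinates"][0]))
```

The raster lookup needs only arithmetic on the grid origin, while the vector feature carries its own coordinates and attributes, which is the trade-off the lists above describe.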

Q 2. Describe your experience with different GIS software (e.g., ArcGIS, QGIS).

I have extensive experience with both ArcGIS and QGIS, having used them for various projects over the last decade. My ArcGIS experience includes working with ArcMap, ArcGIS Pro, and various extensions like Spatial Analyst and Geostatistical Analyst for advanced spatial analysis and modeling. I’ve used it for tasks ranging from creating detailed topographic maps to performing complex spatial queries on large datasets.

QGIS, on the other hand, is my preferred choice for open-source tasks and rapid prototyping. Its flexibility and extensibility through plugins make it an extremely powerful tool. I’ve used QGIS to develop custom geoprocessing tools, process large LiDAR datasets, and conduct spatial analyses for environmental assessments. One particular project involved using QGIS to analyze deforestation patterns in the Amazon, utilizing its processing tools to compare satellite imagery over several years.

In both cases, my proficiency extends beyond basic data visualization to include advanced functionalities such as geoprocessing, spatial analysis, and data management. I am comfortable working with both proprietary and open-source geospatial tools depending on the specific project needs and budget constraints.

Q 3. How do you handle spatial data projection and coordinate systems?

Spatial data projection and coordinate systems are crucial for accurate geospatial analysis. A projection is a way of representing the three-dimensional Earth on a two-dimensional surface, resulting in some distortion. Coordinate systems define the location of points on the Earth’s surface. Ignoring these elements can lead to significant errors in measurements and analysis.

My approach involves:

- Identifying the appropriate projection: The choice depends on the geographical extent of the data and the type of analysis. For example, a UTM projection suits areas that fit within a single 6-degree-wide zone, while a Lambert Conformal Conic projection is better for larger mid-latitude regions with a predominantly east-west extent.

- Defining the coordinate system: This ensures consistency and accuracy in data handling. I use EPSG codes (named after the European Petroleum Survey Group), which are widely recognized identifiers for projections and coordinate systems.

- Performing projection transformations (re-projections): When working with datasets in different projections, I use GIS software tools to re-project them into a common coordinate system. This step is essential before performing any spatial analysis.

- Metadata management: I meticulously document the projection and coordinate system of each dataset, ensuring data integrity and repeatability. A lack of metadata can easily lead to misinterpretations and invalidate analyses.

For instance, in a recent project involving the analysis of flooding risk, I had to re-project elevation data from a global projection to a local UTM zone to accurately determine flood depths and affected areas. Failure to do so would have significantly affected the accuracy and reliability of our flood risk map.
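
As a rough illustration of picking a local UTM zone, the sketch below derives the zone number and the matching WGS 84 / UTM EPSG code from a longitude and latitude. The function names are hypothetical, and the sketch deliberately ignores the special zone boundaries around Norway and Svalbard, so treat it as a starting point rather than a complete rule.

```python
def utm_zone(lon_deg):
    """Standard 6-degree UTM zone number (1 to 60) for a longitude in degrees."""
    if not -180.0 <= lon_deg <= 180.0:
        raise ValueError("longitude must be between -180 and 180 degrees")
    # Zone 1 starts at 180 degrees west; longitude +180 is folded into zone 60.
    return min(int((lon_deg + 180.0) // 6) + 1, 60)


def wgs84_utm_epsg(lon_deg, lat_deg):
    """EPSG code of the WGS 84 / UTM zone covering the given point.

    Northern-hemisphere zones are numbered 326xx and southern ones 327xx.
    """
    base = 32600 if lat_deg >= 0 else 32700
    return base + utm_zone(lon_deg)


print(wgs84_utm_epsg(-122.4, 37.8))   # a point near San Francisco
print(wgs84_utm_epsg(151.2, -33.9))   # a point near Sydney
```

The resulting code can then be passed to whatever re-projection tool the project uses.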

Q 4. What are the common file formats used in GIS?

GIS utilizes a variety of file formats, each with its strengths and weaknesses. Common formats include:

- Shapefiles (.shp): A widely used vector format storing geographical features as points, lines, and polygons. It is a collection of files (.shp, .shx, .dbf, .prj) that needs to be treated as a single unit.

- GeoJSON (.geojson): A lightweight, text-based format for representing geographic data. It’s increasingly popular due to its open standard and compatibility with web mapping applications.

- GeoTIFF (.tif, .tiff): A popular raster format that supports georeferencing information, making it easy to integrate into GIS systems. It handles geospatial metadata efficiently.

- Grid (.asc, .grd): Common raster formats often used for digital elevation models (DEMs) and other gridded data.

- File Geodatabase (.gdb): A powerful, structured format for managing both vector and raster data within a single container, offering efficient data management and indexing.

The choice of format depends on the application and the specific needs of the project. For example, GeoJSON is preferred for web mapping, while File Geodatabases are suitable for managing large, complex datasets within a GIS environment.
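
Because GeoJSON is plain JSON, it can be produced and read with nothing more than a JSON library. The sketch below builds a one-feature collection with Python's standard `json` module; the helper name, coordinates, and property values are made up for the example.

```python
import json


def make_point_feature(lon, lat, properties):
    """Build a GeoJSON Feature for a point; GeoJSON stores longitude first."""
    return {
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": properties,
    }


collection = {
    "type": "FeatureCollection",
    "features": [
        make_point_feature(-122.4194, 37.7749, {"name": "Sample site"}),
    ],
}

# Serialize to text (what would be written to a .geojson file) and read it back.
text = json.dumps(collection, indent=2)
parsed = json.loads(text)
print(parsed["features"][0]["geometry"]["coordinates"])
```

The longitude-then-latitude order is a frequent source of swapped coordinates when data moves between tools, so it is worth checking on import.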

Q 5. Explain the concept of georeferencing.

Georeferencing is the process of assigning real-world coordinates (for example, latitude and longitude, or coordinates in a projected system) to points on an image or map that doesn’t already have them. It’s like adding location information to an otherwise unlocated picture.

This is achieved by identifying points on the image with known coordinates, often obtained from a map or a GPS device. Using these control points, the GIS software performs a transformation to align the image to a coordinate system. The transformation involves algorithms that mathematically adjust the image’s position and orientation to match the known coordinates. Different transformation techniques exist, such as affine transformation and polynomial transformation, each with varying degrees of accuracy depending on the distortion of the image.

Georeferencing is essential for integrating scanned maps, aerial photographs, or satellite images into a GIS environment. Without georeferencing, these images would be simple pictures; georeferencing adds geographic context, allowing for spatial analysis and integration with other geospatial data. For example, a historical map of a city might need to be georeferenced before comparing it to a modern street map.
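
The affine case can be sketched in a few lines. Assuming exactly three non-collinear control points (real workflows usually use more and fit by least squares), the six affine parameters can be solved directly. All function names and control-point values below are illustrative.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(matrix, rhs):
    """Solve a 3x3 linear system with Cramer's rule."""
    d = det3(matrix)
    if abs(d) < 1e-12:
        raise ValueError("control points are collinear; pick different ones")
    solution = []
    for k in range(3):
        replaced = [row[:] for row in matrix]
        for i in range(3):
            replaced[i][k] = rhs[i]
        solution.append(det3(replaced) / d)
    return solution


def fit_affine(gcps):
    """Fit x = a*col + b*row + c and y = d*col + e*row + f.

    gcps holds three ((col, row), (x, y)) pairs: pixel position, map position.
    """
    matrix = [[col, row, 1.0] for (col, row), _map_xy in gcps]
    a, b, c = solve3(matrix, [x for _pixel, (x, _y) in gcps])
    d, e, f = solve3(matrix, [y for _pixel, (_x, y) in gcps])
    return (a, b, c, d, e, f)


def pixel_to_map(params, col, row):
    """Apply the fitted affine transformation to one pixel position."""
    a, b, c, d, e, f = params
    return (a * col + b * row + c, d * col + e * row + f)


# Hypothetical control points: 2 map units per pixel, y decreasing downwards.
control_points = [
    ((0, 0), (1000.0, 2000.0)),
    ((100, 0), (1200.0, 2000.0)),
    ((0, 100), (1000.0, 1800.0)),
]
params = fit_affine(control_points)
print(pixel_to_map(params, 50, 50))
```

With more than three control points, the residual at each point gives a useful check on how well the chosen transformation fits the image.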

Q 6. How do you perform spatial analysis using GIS?

Spatial analysis is the process of extracting meaningful information from spatial data. It involves applying various tools and techniques to understand patterns, relationships, and trends within geographic datasets.

Common spatial analysis techniques I employ include:

- Buffering: Creating zones around features, like determining the area within a certain distance of a river.

- Overlay analysis: Combining layers to identify spatial relationships, for instance, finding areas where forests overlap with high-slope areas.

- Proximity analysis: Measuring distances between features, useful for identifying the nearest hospital to an accident site.

- Network analysis: Analyzing routes and networks, such as finding the shortest route between two locations on a road network.

- Spatial interpolation: Estimating values at unsampled locations based on known values, like creating a contour map from scattered elevation points.

For example, in a recent project to optimize emergency response times, I used network analysis to identify the optimal locations for new emergency service centers, considering factors such as population density, road networks, and response times to emergency calls.
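
Network analysis of the kind mentioned above often reduces to a shortest-path search. The following is a minimal sketch of Dijkstra's algorithm over a tiny hand-made road graph; the node names and travel costs are invented, and production work would typically use a dedicated routing engine or the network tools in the GIS software.

```python
import heapq


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm; graph maps node -> {neighbor: cost}.

    Returns (total_cost, path) or raises ValueError if no route exists.
    """
    queue = [(0.0, start, [start])]
    best = {start: 0.0}
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if cost > best.get(node, float("inf")):
            continue  # a cheaper way to this node was already processed
        for neighbor, weight in graph.get(node, {}).items():
            new_cost = cost + weight
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                heapq.heappush(queue, (new_cost, neighbor, path + [neighbor]))
    raise ValueError("no route between the given nodes")


# Hypothetical road network with travel times in minutes.
roads = {
    "A": {"B": 4.0, "C": 2.0},
    "B": {"A": 4.0, "C": 1.0, "D": 5.0},
    "C": {"A": 2.0, "B": 1.0, "D": 8.0},
    "D": {"B": 5.0, "C": 8.0},
}
print(shortest_route(roads, "A", "D"))
```

Swapping the edge weights from distance to travel time changes which route is considered best, which is why the cost attribute deserves attention in any network analysis.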

Q 7. Describe your experience with spatial statistics.

My experience with spatial statistics encompasses a range of techniques for analyzing spatial patterns and relationships. I’m proficient in applying statistical methods to address specific research questions and gain insights from geographic data that go beyond simple visualization.

Some key methods I’ve employed include:

- Point pattern analysis: Analyzing the spatial distribution of points, such as determining if points are clustered, randomly distributed, or regularly spaced. This helps to understand spatial patterns in phenomena like disease outbreaks or crime hotspots.

- Spatial autocorrelation: Assessing the degree of similarity between values at nearby locations. It’s crucial for identifying spatial dependencies that can influence statistical modeling.

- Geostatistics: Techniques like kriging are used to predict values at unsampled locations, considering spatial autocorrelation. This is valuable for creating interpolated surfaces like precipitation maps from scattered rain gauge data.

- Spatial regression: Extending traditional regression models to account for spatial dependencies. This allows us to build more accurate predictive models by incorporating spatial information.

A recent application involved using spatial regression to model the relationship between air pollution levels and proximity to industrial areas, accounting for spatial autocorrelation to produce a more accurate and reliable predictive model of air pollution.
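
Spatial autocorrelation is commonly summarized with global Moran's I. The sketch below computes it from a list of values and a spatial weights matrix; the four-location chain and its values are made up, and the function is a bare-bones illustration without the significance testing a real analysis would need.

```python
def morans_i(values, weights):
    """Global Moran's I for values with a square spatial weights matrix.

    weights[i][j] is the weight between locations i and j (0 if not neighbors).
    """
    n = len(values)
    mean = sum(values) / n
    deviations = [v - mean for v in values]
    weight_sum = sum(sum(row) for row in weights)
    variance_term = sum(d * d for d in deviations)
    if weight_sum == 0 or variance_term == 0:
        raise ValueError("Moran's I is undefined for these inputs")
    cross_term = sum(
        weights[i][j] * deviations[i] * deviations[j]
        for i in range(n)
        for j in range(n)
    )
    return (n / weight_sum) * (cross_term / variance_term)


# Four hypothetical locations in a row; each is a neighbor of the next one.
chain_weights = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
print(morans_i([1, 2, 3, 4], chain_weights))  # smooth trend: positive value
print(morans_i([1, 4, 1, 4], chain_weights))  # alternating values: negative value
```

Positive values indicate that similar values sit next to each other, while negative values indicate a checkerboard-like pattern.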

Q 8. Explain the difference between buffer analysis and overlay analysis.

Both buffer and overlay analyses are fundamental spatial operations in GIS, but they achieve different goals. Think of them like this: a buffer analysis creates a zone around a feature, while an overlay analysis combines information from multiple layers.

Buffer Analysis: This creates a zone of a specified distance around a point, line, or polygon. For example, a 1-kilometer buffer around a school would show all areas within 1 kilometer of the school. This is useful for proximity analysis – identifying areas affected by a feature, like determining areas at risk from a wildfire (buffering the wildfire perimeter), or identifying potential customers within a certain radius of a store.

Overlay Analysis: This integrates the spatial information from two or more layers to create a new layer that combines the attributes and geometry of the input layers. Imagine layering a map of soil types over a map showing land ownership. An overlay analysis would create a new map showing which soil types exist on each parcel of land. Common overlay types include intersect (only shows the common area of layers), union (shows all areas of all layers), and erase (removes one layer from another).

In short, buffering is about creating zones of influence, while overlaying is about integrating information from multiple layers.
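
A stripped-down sketch can show the difference in code. It assumes planar coordinates in meters and simplifies geometries to points and axis-aligned rectangles; the function names and sample values are hypothetical, and real buffers and overlays operate on arbitrary shapes.

```python
import math


def within_buffer(feature_xy, candidate_xy, distance):
    """Buffer test: is the candidate inside the circular zone around a point?"""
    dx = candidate_xy[0] - feature_xy[0]
    dy = candidate_xy[1] - feature_xy[1]
    return math.hypot(dx, dy) <= distance


def intersect_boxes(a, b):
    """Overlay (intersect) of two rectangles given as (min_x, min_y, max_x, max_y).

    Returns the shared rectangle, or None when the rectangles do not overlap.
    """
    min_x, min_y = max(a[0], b[0]), max(a[1], b[1])
    max_x, max_y = min(a[2], b[2]), min(a[3], b[3])
    if min_x >= max_x or min_y >= max_y:
        return None
    return (min_x, min_y, max_x, max_y)


school = (0.0, 0.0)
homes = {"home_1": (300.0, 400.0), "home_2": (900.0, 900.0)}
# Which homes fall inside a 1 km buffer around the school?
print([name for name, xy in homes.items() if within_buffer(school, xy, 1000.0)])

soil_unit = (0.0, 0.0, 10.0, 10.0)
parcel = (5.0, 5.0, 15.0, 15.0)
# Where does this soil unit coincide with this parcel?
print(intersect_boxes(soil_unit, parcel))
```

The buffer function answers a distance question about one layer, whereas the intersect function produces new geometry from two layers.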

Q 9. How do you handle spatial data errors and inconsistencies?

Handling spatial data errors and inconsistencies is crucial for accurate analysis. It’s a multi-step process that starts even before the data is processed. Imagine building a house – you wouldn’t start construction without checking the foundation’s integrity, right?

- Data Cleaning: This involves identifying and correcting errors like incorrect coordinates, attribute inconsistencies, and topological errors (e.g., overlapping polygons). I use tools within GIS software like ArcGIS Pro or QGIS to identify and rectify these errors. This often involves visual inspection, using built-in tools for error detection, and implementing automated checks based on data constraints.

- Data Validation: This ensures the data adheres to established standards and constraints. For example, I might check if a field representing elevation values only contains numerical data and is within a realistic range for the study area. This can be done through scripting (Python, for instance) or using database constraints.

- Spatial Accuracy Assessment: This involves evaluating the positional accuracy of the data. This might involve comparing the data to a higher-accuracy reference dataset and calculating statistics such as Root Mean Square Error (RMSE) to quantify the level of inaccuracy.

- Addressing inconsistencies: Inconsistencies might stem from different data sources having different coordinate systems or attribute schemas. I would use tools within the GIS software to project the data into a consistent coordinate system and standardize attribute fields. This might involve data transformation and reconciliation procedures.

For instance, in a project involving land cover mapping, I once found inconsistencies in polygon boundaries. After careful investigation, I determined it was due to inaccuracies in the source data. I used polygon snapping and editing tools to correct the overlapping areas, ensuring a consistent representation of the land cover. The result was a significantly more accurate and reliable map.
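
Two of the checks described above, attribute validation and positional accuracy, are easy to script. The sketch below assumes planar coordinates and uses invented record and field names; it is one possible way to express the checks, not a standard routine.

```python
import math


def invalid_elevations(records, low, high):
    """Return ids of records whose elevation is non-numeric or out of range."""
    bad_ids = []
    for record in records:
        value = record.get("elevation")
        is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
        if not is_number or not low <= value <= high:
            bad_ids.append(record["id"])
    return bad_ids


def positional_rmse(measured, reference):
    """Root Mean Square Error of horizontal position between matched points."""
    if not measured or len(measured) != len(reference):
        raise ValueError("need two non-empty point lists of equal length")
    squared = [
        (mx - rx) ** 2 + (my - ry) ** 2
        for (mx, my), (rx, ry) in zip(measured, reference)
    ]
    return math.sqrt(sum(squared) / len(squared))


records = [
    {"id": 1, "elevation": 250.0},
    {"id": 2, "elevation": "n/a"},   # wrong type
    {"id": 3, "elevation": -9999},   # typical no-data placeholder
]
print(invalid_elevations(records, low=0, high=3000))

surveyed = [(0.0, 0.0), (10.0, 10.0)]
reference = [(3.0, 4.0), (10.0, 10.0)]
print(positional_rmse(surveyed, reference))
```

Running checks like these on every incoming dataset turns data cleaning from a one-off visual inspection into a repeatable step.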

Q 10. What are your experiences with data visualization in GIS?

Data visualization is the heart of effective communication in GIS. It’s not just about creating pretty maps; it’s about effectively conveying complex spatial information to a diverse audience. My experience spans various techniques and tools.

- Cartographic Design Principles: I always apply established cartographic principles to ensure my maps are clear, accurate, and easy to interpret. This includes appropriate color schemes, symbology, labeling, and map layouts.

- Interactive Maps: I often create interactive web maps using platforms like ArcGIS Online or Leaflet, allowing users to explore data dynamically. For example, I once created an interactive map showing the spread of a disease, which allowed users to zoom into specific areas and see the number of cases over time.

- 3D Visualization: For projects requiring more depth and context, I’ve utilized 3D visualization techniques, often to show the change in the environment over time, like deforestation or urban growth.

- Charts and Graphs: I complement maps with charts and graphs to illustrate key statistics and trends derived from the spatial data, allowing for a deeper and more comprehensive analysis.

One project I worked on involved visualizing air quality data across a city. Simple color-coded maps were effective in showing spatial patterns, but adding interactive elements that allowed users to filter data by pollutant type and time period significantly enhanced the map’s utility. We also incorporated charts to represent trends in air quality metrics over time.

Q 11. How do you ensure data quality and accuracy in a GIS project?

Data quality and accuracy are paramount in any GIS project. Think of it like building a skyscraper – a weak foundation will lead to disaster. Ensuring data quality is an ongoing process, not a single event.

- Metadata Management: Comprehensive metadata is essential. This includes details about the data’s source, accuracy, projection, and any limitations. Properly documented metadata aids in understanding the data and its limitations, thus promoting accuracy.

- Data Validation and Error Detection: Implementing checks throughout the process, such as automated data checks using scripts or GIS software tools, is crucial for identifying inconsistencies and errors early.

- Source Data Evaluation: Critically evaluating the reliability and accuracy of source data is paramount before using it in the project. I often assess the resolution, accuracy, and lineage of different datasets to determine their suitability.

- Data Transformation and Standardization: Standardizing data formats, projections, and attribute schemas ensures consistency across the dataset. This makes analysis much simpler and more reliable.

- Quality Control Checks: Regular quality checks using visual inspection, statistical analysis, and comparison against reference data are imperative to maintaining the integrity of the GIS project.

In a recent project involving land use classification, I meticulously checked the accuracy of the classification by comparing it to high-resolution aerial imagery and ground-truth data. This helped identify areas needing correction and improved the overall accuracy of the final product.

Q 12. Explain your experience with GPS data and its integration into GIS.

GPS data is the backbone of many location-based services and GIS projects. I have extensive experience integrating GPS data into GIS workflows. It is essentially the real-world counterpart to the digital maps we utilize.

- Data Acquisition and Preprocessing: This includes using GPS receivers to collect data, from handheld units to receivers integrated into vehicles. Preprocessing steps include cleaning, transforming, and correcting the data for errors like multipath effects and atmospheric interference, for example through differential correction or post-processing. Software like ArcGIS or QGIS then provides tools for importing and cleaning the corrected data.

- Data Integration: The preprocessed GPS data is then integrated into GIS software, typically as point features representing the location of the GPS receiver at different time stamps. These points can then be used to create lines (tracks), or polygons (areas covered).

- Spatial Analysis: Once integrated, GPS data can be used for various spatial analyses, including tracking movement, calculating distances and speeds, and creating thematic maps. This is crucial for various applications like fleet management or wildlife tracking.

- Error Handling: GPS data is inherently prone to errors. I account for this by applying appropriate error models and data filtering techniques to minimize the impact of errors on analysis.

For example, I once worked on a project tracking the movement of endangered species. I used GPS data collected from animal collars to generate movement maps that helped identify key habitats and migration patterns. This data was then used to inform conservation strategies.
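
Calculating distances and speeds from a GPS track can be sketched with the haversine formula, which treats the Earth as a sphere. The track below is invented, and the function names are illustrative; for high-accuracy work an ellipsoidal method would be the better choice.

```python
import math

EARTH_RADIUS_M = 6371008.8  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def segment_speeds(track):
    """Speed in m/s for each consecutive pair of fixes.

    track is a list of (timestamp_seconds, lat, lon) sorted by time.
    Pairs with a non-positive time difference are skipped as invalid.
    """
    speeds = []
    for (t1, lat1, lon1), (t2, lat2, lon2) in zip(track, track[1:]):
        elapsed = t2 - t1
        if elapsed <= 0:
            continue
        speeds.append(haversine_m(lat1, lon1, lat2, lon2) / elapsed)
    return speeds


# Hypothetical collar fixes taken one minute apart.
track = [(0, 0.0, 0.0), (60, 0.0, 0.001), (120, 0.0, 0.002)]
print(segment_speeds(track))
```

Implausibly high segment speeds are a simple way to flag GPS outliers before further analysis.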

Q 13. Describe your experience with remote sensing data and its applications.

Remote sensing data provides a powerful tool for observing and analyzing Earth’s surface from a distance. I have extensive experience working with various remote sensing datasets, from satellite imagery to aerial photography.

- Data Acquisition and Preprocessing: Accessing and preprocessing remote sensing data involves downloading data from various sources (like Landsat, Sentinel, or commercial providers), correcting for atmospheric and geometric distortions, and orthorectifying images to ensure accurate georeferencing.

- Image Classification: I use various image classification techniques, supervised and unsupervised, to extract meaningful information from remote sensing data. This might involve classifying land cover types, detecting changes over time, or mapping vegetation indices. Software like ERDAS IMAGINE or ENVI is often used for this.

- Image Analysis and Interpretation: Analyzing remote sensing imagery involves examining spectral signatures, patterns, and textures to understand the underlying features. This helps in interpreting land cover, identifying urban development, or assessing the health of vegetation.

- Data Integration with GIS: Remote sensing data is often integrated with other GIS data to create more comprehensive analyses. For example, integrating satellite imagery with elevation data could help assess flood risk in a region.

In a recent project involving deforestation monitoring, I used time-series satellite imagery to detect changes in forest cover over several years. This involved image classification, change detection analysis, and generating reports that quantified the extent of deforestation and identified deforestation hotspots.
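
One widely used vegetation index is NDVI, computed per pixel as (NIR - Red) / (NIR + Red). The sketch below applies it to two tiny made-up reflectance grids for two dates and reports the per-pixel change; the band values and function names are assumptions for illustration only.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel; None if undefined."""
    total = nir + red
    if total == 0:
        return None
    return (nir - red) / total


def ndvi_grid(nir_band, red_band):
    """Apply NDVI cell by cell to two equally sized bands (nested lists)."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


# Hypothetical surface reflectance values for the same area on two dates.
nir_before = [[0.50, 0.45], [0.40, 0.48]]
red_before = [[0.10, 0.09], [0.10, 0.12]]
nir_after = [[0.50, 0.20], [0.40, 0.18]]
red_after = [[0.10, 0.20], [0.10, 0.18]]

before = ndvi_grid(nir_before, red_before)
after = ndvi_grid(nir_after, red_after)

# A strongly negative change can hint at vegetation loss in that pixel.
change = [
    [a - b for a, b in zip(after_row, before_row)]
    for after_row, before_row in zip(after, before)
]
print(change)
```

A threshold on the change grid is one simple way to turn the difference into candidate deforestation pixels for closer inspection.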

Q 14. What are your skills in using SQL for spatial data queries?

SQL is a powerful tool for querying and manipulating spatial data within a database. My proficiency in SQL allows me to efficiently extract, analyze, and manage geospatial data.

- Spatial Queries: I use spatial SQL functions such as ST_Contains, ST_Intersects, ST_Distance, and ST_Buffer to perform spatial selections and analyses directly within the database. For example, SELECT * FROM parcels WHERE ST_Intersects(geom, ST_GeomFromText('POLYGON(...)')) would select all parcels intersecting a given polygon.

- Data Manipulation: I can use SQL to update, insert, and delete spatial features within a database, ensuring data integrity. For example, I can update the attributes of certain features based on spatial relationships.

- Database Management: My skills extend to managing spatial databases, including creating indexes to optimize query performance, ensuring data consistency, and maintaining data integrity.

- PostgreSQL/PostGIS: I have hands-on experience working with spatial databases like PostgreSQL with PostGIS extension, a widely-used and robust open-source solution for managing spatial data.

In a project involving analyzing crime incidents, I used SQL queries to identify crime hotspots within specific neighborhoods by selecting incidents within a certain buffer distance of each other. This was a far more efficient approach than performing the same analysis in a GIS software environment.

Q 15. How familiar are you with different map projections and their applications?

Map projections are essential in GIS because they represent the three-dimensional Earth on a two-dimensional map. No projection is perfectly accurate; they all involve distortion of area, shape, distance, or direction. My familiarity encompasses a wide range, including:

- Conic Projections: Ideal for mid-latitude regions, minimizing distortion along the standard parallels. For example, the Albers Equal-Area Conic Projection is excellent for representing large areas with minimal area distortion, often used for land use planning.

- Cylindrical Projections: These project the Earth onto a cylinder, resulting in minimal distortion along the equator. The Mercator projection, while famously distorting areas at higher latitudes (making Greenland appear far larger than it is), is crucial for navigation because it is conformal: lines of constant compass bearing appear as straight lines.

- Azimuthal Projections: These project the Earth onto a plane tangent to a point, best for representing polar regions or specific locations. The Stereographic projection, for instance, is often used for mapping small areas with accurate shape preservation.

Choosing the right projection is critical. For instance, a project focusing on land area measurements in a large country would utilize an equal-area projection like Albers, while a navigation app would benefit from a conformal projection like Mercator.
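
The area distortion of the Mercator projection can be quantified. On a spherical Mercator map the linear scale factor at latitude φ is 1 / cos(φ), so areas are exaggerated by the square of that value. The short sketch below evaluates this; the function names are illustrative.

```python
import math


def mercator_scale_factor(lat_deg):
    """Linear scale factor of the spherical Mercator projection at a latitude."""
    if abs(lat_deg) >= 90.0:
        raise ValueError("the Mercator projection is undefined at the poles")
    return 1.0 / math.cos(math.radians(lat_deg))


def mercator_area_exaggeration(lat_deg):
    """How many times larger an area appears compared with the equator."""
    return mercator_scale_factor(lat_deg) ** 2


for latitude in (0, 30, 60, 72):
    print(latitude, round(mercator_area_exaggeration(latitude), 2))
```

At 60 degrees latitude areas appear about four times too large, and at around 72 degrees (central Greenland) roughly ten times, which is why an equal-area projection is the safer choice for area measurements.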

Q 16. Describe your experience with creating thematic maps.

I have extensive experience in creating thematic maps, utilizing various software such as ArcGIS and QGIS. My process involves:

- Data Preparation: This crucial first step involves cleaning, transforming, and classifying data to ensure accuracy and consistency for mapping.

- Symbol Selection: Choosing appropriate symbols (colors, patterns, sizes) to effectively represent data and convey the thematic information is vital. For instance, using graduated colors to show population density.

- Map Layout Design: A well-designed map is clear, concise, and easy to understand. I always prioritize readability, using legends, titles, scale bars, and north arrows appropriately.

- Data Analysis & Visualization: Selecting the appropriate cartographic techniques (choropleth, dot density, isopleth maps, etc.) for the type of data and the intended audience is key. I’ve worked on maps visualizing everything from crime rates to election results.

For example, I once created a thematic map showing the distribution of different soil types in a region, using different colors and patterns to represent each soil type. The map aided agricultural planning by enabling the identification of areas suitable for specific crops.
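
For a choropleth map, the data first has to be grouped into classes. The sketch below shows equal-interval classification, one of several common schemes (quantile and natural breaks are others); the density values and function names are invented for the example.

```python
def equal_interval_breaks(values, n_classes):
    """Upper bounds of n_classes equally wide classes spanning the data range."""
    low, high = min(values), max(values)
    step = (high - low) / n_classes
    return [low + step * i for i in range(1, n_classes + 1)]


def classify(value, breaks):
    """Index of the first class whose upper bound is at or above the value."""
    for index, upper in enumerate(breaks):
        if value <= upper:
            return index
    return len(breaks) - 1  # guard against rounding just above the last break


# Hypothetical population densities (people per square kilometer).
densities = [12, 45, 80, 150, 300]
breaks = equal_interval_breaks(densities, 4)
print(breaks)
print([classify(d, breaks) for d in densities])
```

With skewed data like this, most areas land in the lowest class, which is a typical reason to consider quantile or natural-breaks classification instead.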

Q 17. How do you handle large datasets in a GIS environment?

Handling large datasets efficiently is paramount in GIS. My approach incorporates techniques such as:

- Data Subsetting: Working with subsets of the data, focusing only on the area or features of immediate interest greatly reduces processing time. I would often work with specific geographical extents or select specific attribute values.

- Spatial Indexing: Techniques such as R-trees and quadtrees significantly speed up spatial queries, allowing for efficient retrieval of features based on location.

- Data Compression: Using compression where the format supports it (e.g., compressed file geodatabases, or LZW or DEFLATE compression in GeoTIFF rasters) reduces storage space and improves data transfer speeds.

- Database Management Systems (DBMS): Utilizing specialized spatial databases like PostGIS (PostgreSQL extension) or Oracle Spatial enhances data management, storage, and retrieval efficiency for large datasets.

- Parallel Processing and Cloud Computing: For extremely large datasets, leveraging parallel processing capabilities and cloud computing platforms like AWS or Azure enables more efficient handling of data.

Imagine analyzing satellite imagery covering a whole country. Processing the entire dataset at once would be computationally expensive. Instead, I would process smaller tiles or areas in parallel to expedite the analysis and leverage cloud-based resources if required.
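
The tiling idea can be sketched as follows: split the extent into tiles, then hand the tiles to a pool of workers. The per-tile function here is only a placeholder that returns the tile area, and a thread pool is used to keep the sketch simple; CPU-heavy raster work would more likely use a process pool or a cluster.

```python
from concurrent.futures import ThreadPoolExecutor


def make_tiles(min_x, min_y, max_x, max_y, tile_size):
    """Split an extent into tiles of at most tile_size by tile_size map units."""
    tiles = []
    y = min_y
    while y < max_y:
        x = min_x
        while x < max_x:
            tiles.append((x, y, min(x + tile_size, max_x), min(y + tile_size, max_y)))
            x += tile_size
        y += tile_size
    return tiles


def process_tile(tile):
    """Placeholder for real per-tile work; here it just returns the tile area."""
    min_x, min_y, max_x, max_y = tile
    return (max_x - min_x) * (max_y - min_y)


def process_all(tiles, workers=4):
    """Run process_tile over all tiles concurrently, preserving tile order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(process_tile, tiles))


tiles = make_tiles(0, 0, 250, 100, 100)
results = process_all(tiles)
print(len(tiles), sum(results))
```

Because each tile is independent, the same structure scales from a laptop to cloud workers by changing only how the tiles are dispatched.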

Q 18. What are your experiences with GIS data modeling?

GIS data modeling involves defining the structure and relationships between spatial and attribute data. My experience includes:

- Conceptual Modeling: I begin by defining the entities (e.g., roads, buildings, parcels) and their attributes (e.g., road type, building height, parcel owner). Entity Relationship Diagrams (ERDs) are crucial in this step.

- Logical Modeling: This stage translates the conceptual model into a logical database design, selecting appropriate data types and defining relationships between tables.

- Data Normalization: Applying normalization techniques reduces data redundancy and improves data integrity. For instance, using normalization to avoid storing duplicate address information for multiple parcels on the same street.

- Spatial Data Structures: Understanding and using various spatial data structures like vector (points, lines, polygons) and raster (grids, images) is fundamental for efficient data representation and analysis. I’m adept at identifying the optimal structure for specific data types and tasks.

For a project involving land parcel management, I might use a geodatabase with feature classes representing parcels, with attributes for owner information, tax assessments, and other relevant data. The relationships between these feature classes are then defined to allow for efficient queries and analyses.
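
The normalization idea can be shown with a small relational sketch. It uses Python's built-in `sqlite3` module, stores geometry as WKT text purely for simplicity, and uses invented table, column, and owner names; a real system would use a geodatabase or a spatial database with a proper geometry type.

```python
import sqlite3

SCHEMA = """
CREATE TABLE owners (
    owner_id INTEGER PRIMARY KEY,
    name     TEXT NOT NULL
);
CREATE TABLE parcels (
    parcel_id      INTEGER PRIMARY KEY,
    owner_id       INTEGER NOT NULL REFERENCES owners(owner_id),
    assessed_value REAL CHECK (assessed_value >= 0),
    geom_wkt       TEXT NOT NULL  -- geometry kept as WKT text for this sketch
);
"""


def build_parcel_db():
    """Create an in-memory database with owners stored once, not per parcel."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO owners VALUES (?, ?)",
        [(1, "Owner A"), (2, "Owner B")],
    )
    conn.executemany(
        "INSERT INTO parcels VALUES (?, ?, ?, ?)",
        [
            (101, 1, 250000.0, "POLYGON((0 0, 10 0, 10 10, 0 10, 0 0))"),
            (102, 1, 180000.0, "POLYGON((10 0, 20 0, 20 10, 10 10, 10 0))"),
            (103, 2, 320000.0, "POLYGON((0 10, 10 10, 10 20, 0 20, 0 10))"),
        ],
    )
    conn.commit()
    return conn


def parcels_per_owner(conn):
    """Count parcels for each owner by joining the two tables."""
    query = """
        SELECT o.name, COUNT(p.parcel_id)
        FROM owners AS o
        LEFT JOIN parcels AS p ON p.owner_id = o.owner_id
        GROUP BY o.owner_id
        ORDER BY o.name
    """
    return conn.execute(query).fetchall()


connection = build_parcel_db()
print(parcels_per_owner(connection))
```

Keeping owner details in their own table means a change of name or address is made once, rather than on every parcel that owner holds.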

Q 19. Explain your experience with developing and maintaining GIS databases.

Developing and maintaining GIS databases requires a structured approach. My experience includes designing, implementing, and maintaining databases using:

- Relational Database Management Systems (RDBMS): I’m proficient in working with various RDBMS like PostgreSQL/PostGIS, MySQL, and Oracle Spatial for storing and managing spatial data.

- Geodatabases: I have extensive experience creating and managing both file and enterprise geodatabases in ArcGIS, understanding their structure and functionalities.

- Data Versioning: Implementing data versioning strategies enables collaborative editing, tracking changes, and resolving conflicts within the database.

- Data Quality Control: Establishing and enforcing data quality standards, including data validation and error checking, is crucial for maintaining data integrity and reliability.

- Metadata Management: Documenting metadata (information about the data) is essential for understanding, accessing, and using the database effectively.

In one project, I developed a geodatabase for managing infrastructure assets of a city. The database incorporated data on roads, water pipes, and power lines, enabling efficient asset management and planning.

Q 20. How do you perform spatial join operations?

A spatial join operation combines attributes from two layers based on their spatial relationships. The process involves selecting a target layer and a join layer, then defining the spatial relationship (e.g., intersects, contains, within). The attributes from the join layer are then added to the target layer based on the defined spatial relationship.

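For example, let’s say you have a layer of census tracts and a layer of crime incidents. Performing a spatial join with ‘contains’, using the tracts as the target layer, matches each incident to the tract that contains it. Summarizing those matches gives a crime count per tract, which can then be divided by tract population to obtain a crime rate.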

Specific steps depend on the GIS software. In ArcGIS, it is the Spatial Join geoprocessing tool, while in QGIS it is available as ‘Join attributes by location’ in the Processing Toolbox. The key is defining the correct join operation to accurately capture the relationship between the layers and ensuring that both input layers use the same coordinate system.
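
Under the hood, a point-in-polygon spatial join boils down to testing each point against each polygon. The sketch below uses the ray-casting test on two invented square tracts and a handful of made-up incident locations, then turns counts into rates using hypothetical population figures. It assumes planar coordinates and non-overlapping polygons, and it omits the spatial index a real implementation would use.

```python
def point_in_polygon(point, ring):
    """Ray-casting test; ring is a list of (x, y) vertices, open or closed."""
    x, y = point
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point count.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_points_per_polygon(polygons, points):
    """Join points to the first polygon containing them and count the matches."""
    counts = {name: 0 for name in polygons}
    for point in points:
        for name, ring in polygons.items():
            if point_in_polygon(point, ring):
                counts[name] += 1
                break
    return counts


tracts = {
    "tract_a": [(0, 0), (10, 0), (10, 10), (0, 10)],
    "tract_b": [(10, 0), (20, 0), (20, 10), (10, 10)],
}
incidents = [(2, 3), (5, 5), (12, 4), (25, 5)]  # the last one is outside both
population = {"tract_a": 4000, "tract_b": 2000}  # hypothetical residents

counts = count_points_per_polygon(tracts, incidents)
rates = {name: 1000 * counts[name] / population[name] for name in tracts}
print(counts)
print(rates)  # incidents per 1,000 residents
```

Points that fall outside every polygon are simply left unmatched, which mirrors how unmatched features behave in a one-to-one spatial join.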

Q 21. What are your experiences with using Python or other scripting languages in GIS?

Python is my preferred scripting language for GIS tasks, automating workflows and extending the functionalities of GIS software. My experience includes:

- Geoprocessing Automation: Using libraries like arcpy (for ArcGIS) and geopandas (for general GIS tasks), I automate repetitive tasks like batch processing, data conversion, and map generation.

- Spatial Analysis: Python with libraries like shapely and scipy allows for performing complex spatial analyses that are difficult or impossible to achieve manually in the GIS software.

- Data Wrangling and Manipulation: Python’s flexibility is great for cleaning, transforming, and manipulating GIS data, ensuring data consistency and quality before visualization or analysis.

- Web Mapping Applications: Using frameworks like Flask or Django together with folium (a Python wrapper around the Leaflet JavaScript library), I create web mapping applications for interactive data visualization and analysis.

Example (using geopandas):

import geopandas as gpd

shapefile = gpd.read_file('roads.shp')

print(shapefile.head())

This snippet reads a shapefile containing road data using geopandas, enabling further processing and analysis.

Q 22. How do you manage and organize GIS project files and data?

Managing GIS project files and data effectively is crucial for project success and collaboration. I employ a meticulous, multi-layered approach. First, I establish a clear folder structure, mirroring the project’s phases and data types. For example, a project might have folders for ‘Raw Data,’ ‘Processed Data,’ ‘Maps,’ ‘Scripts,’ and ‘Documentation.’ Within each, I use descriptive filenames following a consistent naming convention (e.g., YYYYMMDD_Project_Data.shp). This ensures easy identification and retrieval.

Second, I leverage a robust database management system (DBMS), often PostgreSQL/PostGIS, for structured data. This allows for efficient querying, spatial analysis, and data versioning. For metadata management, I use standardized metadata schemas such as the FGDC CSDGM or ISO 19115 (with its ISO 19139 XML encoding), ensuring data discoverability and interoperability. Finally, I maintain a detailed project log documenting all data modifications, analysis steps, and decisions, fostering transparency and reproducibility.

For example, during a recent environmental impact assessment project, this structured approach allowed my team to quickly access specific land cover data from a year ago, enabling a robust temporal analysis and preventing potential errors from using outdated information.
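The folder layout and naming convention described above could be scripted so that every project starts the same way. The following is only a minimal sketch using the Python standard library; the helper names create_project_skeleton and dated_filename, and the sample project name, are hypothetical and chosen for illustration.

```python
from datetime import date
from pathlib import Path
from typing import List
import tempfile

# Folder names taken from the answer above.
PROJECT_FOLDERS = ["Raw Data", "Processed Data", "Maps", "Scripts", "Documentation"]


def create_project_skeleton(root: Path) -> List[Path]:
    """Create the standard sub-folders under `root` and return their paths."""
    created = []
    for name in PROJECT_FOLDERS:
        folder = root / name
        folder.mkdir(parents=True, exist_ok=True)
        created.append(folder)
    return created


def dated_filename(day: date, project: str, dataset: str, ext: str = "shp") -> str:
    """Build a file name following the YYYYMMDD_Project_Data.ext convention."""
    return f"{day:%Y%m%d}_{project}_{dataset}.{ext}"


# Demo in a temporary directory so nothing is left behind on disk.
with tempfile.TemporaryDirectory() as tmp:
    folders = create_project_skeleton(Path(tmp) / "EIA_Project")
    print([folder.name for folder in folders])

print(dated_filename(date(2024, 3, 15), "EIA", "LandCover"))
```

Putting the date first in YYYYMMDD form means that an alphabetical file listing is also a chronological one, which helps when looking for the version of a dataset from a particular point in time.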

Q 23. Explain your understanding of spatial indexing techniques.

Spatial indexing is a fundamental concept in GIS, significantly accelerating spatial queries. Imagine searching for all restaurants within a 5-kilometer radius of your location; without indexing, the system would need to check every restaurant’s coordinates against yours – computationally expensive. Spatial indexes organize spatial data to make these searches far more efficient.

Common spatial indexing techniques include:

- R-tree: Organizes spatial objects into a tree-like structure in which each node stores a bounding box enclosing a group of objects. A search can discard every branch whose bounding box does not intersect the query, eliminating large portions of the data space.

- Quadtree: Recursively divides space into four quadrants, which works well for uniformly distributed data. Each quadrant represents a smaller geographic area, so a search can quickly narrow down to the relevant areas.

- Grid Index: Divides the spatial extent into a grid of cells. Objects are assigned to cells based on their location; searches are limited to cells intersecting the query.

The choice of spatial index depends on the data distribution, query types, and performance requirements. For example, R-trees are generally preferred for unevenly distributed data and for features with extent, such as lines and polygons, because they index bounding boxes, while quadtrees and grid indexes work best for fairly uniformly distributed points. In practice, I select the most appropriate index based on thorough data analysis and performance testing.
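To make the idea concrete, here is a minimal sketch of a grid index answering the "restaurants within 5 kilometers" query from above. It is an illustration in plain Python, not how any particular GIS package implements indexing. The class name GridIndex, the cell size, and the sample points are assumptions, and coordinates are assumed to be planar and measured in kilometers.

```python
from collections import defaultdict
from math import floor, hypot


class GridIndex:
    """Uniform grid index for 2D points (planar coordinates assumed)."""

    def __init__(self, cell_size):
        self.cell_size = cell_size
        self.cells = defaultdict(list)  # (col, row) -> [(item_id, x, y), ...]

    def _cell(self, x, y):
        # Map a coordinate to the grid cell that contains it.
        return (floor(x / self.cell_size), floor(y / self.cell_size))

    def insert(self, item_id, x, y):
        self.cells[self._cell(x, y)].append((item_id, x, y))

    def query_radius(self, x, y, radius):
        """Return ids of points within `radius` of (x, y)."""
        min_col, min_row = self._cell(x - radius, y - radius)
        max_col, max_row = self._cell(x + radius, y + radius)
        hits = []
        # Only cells overlapping the query's bounding square are visited.
        for col in range(min_col, max_col + 1):
            for row in range(min_row, max_row + 1):
                for item_id, px, py in self.cells.get((col, row), []):
                    # Exact distance test on the few remaining candidates.
                    if hypot(px - x, py - y) <= radius:
                        hits.append(item_id)
        return hits


# Hypothetical restaurants, coordinates in kilometers.
index = GridIndex(cell_size=1.0)
index.insert("cafe", 0.5, 0.5)
index.insert("diner", 3.0, 3.0)
index.insert("bistro", 6.0, 6.0)
index.insert("grill", -2.0, 1.0)

print(sorted(index.query_radius(0.0, 0.0, 5.0)))
```

The two-stage pattern shown here, a cheap filter on cells followed by an exact distance check, is the same filter-and-refine idea that R-trees and quadtrees apply with more adaptive partitions.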

Q 24. Describe your experience with web mapping technologies (e.g., Leaflet, OpenLayers).

I have extensive experience developing interactive web maps using Leaflet and OpenLayers. Both are powerful JavaScript libraries providing a rich set of tools for visualizing and interacting with geographic data on web platforms. Leaflet is known for its lightweight nature and ease of use, making it ideal for simpler projects or when performance is critical. OpenLayers offers more advanced features and customization options, particularly useful for complex mapping projects with numerous data layers and custom functionalities.

For instance, I recently developed a web map using Leaflet for a public transportation authority, displaying bus routes, real-time vehicle locations, and nearby bus stops. The map incorporated custom icons, pop-ups with schedule information, and responsive design for various screen sizes. In another project, I used OpenLayers to create a sophisticated web application for environmental monitoring, allowing users to visualize and analyze various environmental data sets like air quality, water levels, and land cover.

I’m proficient in working with map tile sources such as OpenStreetMap and Google Maps, and have experience integrating data from a range of formats and services, including GeoJSON, WMS (Web Map Service), and WFS (Web Feature Service).
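Since GeoJSON is the format most often passed to a web map, a short sketch of how it might be produced on the server side can help. The example below uses only the Python standard library; the helper names and the bus stop data are hypothetical. Note that GeoJSON stores coordinates as longitude first, then latitude.

```python
import json


def point_feature(lon, lat, properties):
    """Return a GeoJSON Feature for a point; coordinates are [lon, lat]."""
    return {
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": dict(properties),
    }


def feature_collection(features):
    """Wrap an iterable of Features in a GeoJSON FeatureCollection."""
    return {"type": "FeatureCollection", "features": list(features)}


# Hypothetical bus stops: (name, longitude, latitude)
stops = [
    ("Main St & 1st Ave", -122.3321, 47.6062),
    ("Harbor Terminal", -122.3400, 47.6020),
]

collection = feature_collection(
    point_feature(lon, lat, {"name": name}) for name, lon, lat in stops
)
geojson_text = json.dumps(collection, indent=2)
print(geojson_text)
```

On the client, a document like this can be handed to Leaflet's L.geoJSON layer, with the properties of each feature used to fill its pop-up.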

Q 25. How familiar are you with cloud-based GIS platforms (e.g., ArcGIS Online, Google Earth Engine)?

I am very familiar with cloud-based GIS platforms like ArcGIS Online and Google Earth Engine. These platforms offer significant advantages in terms of scalability, collaboration, and accessibility. ArcGIS Online provides a complete suite of tools for creating, sharing, and managing geospatial data and maps, while Google Earth Engine is a powerful platform for geospatial analysis using its massive collection of satellite imagery and other geospatial datasets.

In a project involving wildfire risk assessment, I leveraged Google Earth Engine’s extensive historical satellite imagery to analyze land cover changes and identify areas at high risk. The cloud-based platform’s processing capabilities allowed for efficient analysis of large datasets, producing results that would have been infeasible using local processing power. In another instance, ArcGIS Online facilitated the collaborative creation and sharing of maps and data among team members distributed geographically.

I understand the benefits of these platforms and have experience with their respective APIs for creating custom applications and workflows.

Q 26. Explain your experience with location tracking technologies and data analysis.

My experience with location tracking technologies and data analysis is extensive. I’ve worked with various technologies, including GPS tracking devices, mobile apps, and IoT sensors to collect location data. I’m proficient in analyzing this data to derive insights using various methods.

This includes:

- Trajectory analysis: Analyzing movement patterns of objects or individuals over time.

- Geospatial statistics: Using spatial statistical methods to identify patterns and relationships in location data.

- Spatial interpolation: Estimating values at unobserved locations based on nearby measurements.
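As an illustration of the last item, spatial interpolation can be sketched with inverse distance weighting (IDW), one common and simple method among several. The function name idw, the power parameter default, and the sample values are assumptions made for this example, and planar coordinates are assumed.

```python
from math import hypot


def idw(samples, x, y, power=2.0):
    """Estimate a value at (x, y) from (sx, sy, value) samples using IDW."""
    weighted_sum = 0.0
    weight_total = 0.0
    for sx, sy, value in samples:
        distance = hypot(sx - x, sy - y)
        if distance == 0.0:
            # The query point coincides with a sample: return it directly.
            return value
        weight = 1.0 / distance ** power
        weighted_sum += weight * value
        weight_total += weight
    if weight_total == 0.0:
        raise ValueError("at least one sample is required")
    return weighted_sum / weight_total


# Hypothetical temperature readings at two stations 2 units apart.
readings = [(0.0, 0.0, 10.0), (2.0, 0.0, 20.0)]
print(idw(readings, 1.0, 0.0))  # midpoint, so both samples weigh the same
print(idw(readings, 0.5, 0.0))  # closer to the first station
```

A higher power makes nearby samples dominate the estimate, while a lower power produces a smoother surface.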

For example, I recently worked on a project analyzing the movement patterns of endangered species using GPS collar data. By analyzing the trajectories of these animals, I could identify critical habitats and potential threats to their survival. In another project, I analyzed the location data from a fleet of delivery vehicles to optimize delivery routes and improve efficiency.

I carry out this analysis primarily in Python, using libraries such as pandas, geopandas, and scikit-learn.
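A basic building block of trajectory analysis is turning a sequence of GPS fixes into distances and speeds. The sketch below uses the haversine formula on a spherical Earth, which is an approximation, and only the standard library. The function names, the tuple layout (timestamp in seconds, latitude, longitude), and the sample track are assumptions for illustration.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points (degrees)."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = phi2 - phi1
    dlambda = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * asin(sqrt(a))


def segment_speeds(fixes):
    """Return speed in m/s for each consecutive pair of time-sorted fixes."""
    speeds = []
    for (t0, lat0, lon0), (t1, lat1, lon1) in zip(fixes, fixes[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        speeds.append(haversine_m(lat0, lon0, lat1, lon1) / dt)
    return speeds


# Hypothetical track: (timestamp_seconds, latitude, longitude)
track = [(0, 0.0, 0.0), (60, 0.0, 0.001), (120, 0.0, 0.003)]
print(segment_speeds(track))
```

Segment speeds like these are a common first step for flagging stops, detecting GPS outliers (implausibly high speeds), or comparing planned and actual delivery routes.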

Q 27. How do you ensure data security and privacy in a GIS project?

Data security and privacy are paramount in GIS projects. I adhere to strict protocols throughout the project lifecycle to safeguard sensitive information. This involves multiple layers of protection.

Key strategies include:

- Access control: Restricting access to data and systems based on roles and responsibilities using secure authentication and authorization mechanisms.

- Data encryption: Encrypting data both in transit and at rest using strong encryption algorithms to prevent unauthorized access.

- Data anonymization and aggregation: Transforming data to remove identifying information when appropriate, while still allowing for meaningful analysis.

- Compliance with regulations: Adhering to relevant data privacy regulations such as GDPR or CCPA.

For example, in a project involving the location data of individuals, I ensured all data was anonymized before analysis, following strict protocols to protect personal privacy. The use of secure servers and encrypted data transfer also minimized the risk of unauthorized access.
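The anonymization and aggregation steps mentioned above might look roughly like the following sketch. It replaces user IDs with a keyed hash, coarsens coordinates by rounding, and suppresses grid cells that contain too few distinct users. The function names, the two-decimal precision, the min_users threshold, and the sample records are all assumptions for illustration, and rounding alone should not be treated as sufficient anonymization for real personal data.

```python
import hashlib
import hmac
from collections import defaultdict


def pseudonymize_id(user_id, secret_key):
    """Replace an ID with an HMAC-SHA256 digest that needs the key to reproduce."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def coarsen(lat, lon, decimals=2):
    """Round coordinates; 2 decimals is roughly 1 km in latitude."""
    return (round(lat, decimals), round(lon, decimals))


def aggregate(records, secret_key, decimals=2, min_users=3):
    """Count distinct users per coarse cell, dropping cells below min_users."""
    users_per_cell = defaultdict(set)
    for user_id, lat, lon in records:
        cell = coarsen(lat, lon, decimals)
        users_per_cell[cell].add(pseudonymize_id(user_id, secret_key))
    return {
        cell: len(users)
        for cell, users in users_per_cell.items()
        if len(users) >= min_users
    }


# Hypothetical records: (user_id, latitude, longitude)
records = [
    ("alice", 51.5007, -0.1246),
    ("bob", 51.5012, -0.1239),
    ("carol", 51.4991, -0.1201),
    ("dave", 48.8584, 2.2945),  # alone in its cell, so it is suppressed
]
key = b"example-key-keep-real-keys-out-of-code"  # placeholder only
print(aggregate(records, key))
```

In a real project the key would come from a secrets manager rather than source code, and the cell size and suppression threshold would be chosen to match the applicable privacy requirements.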

Q 28. Describe your experience with creating interactive GIS maps.

Creating interactive GIS maps is a core part of my expertise. I leverage various techniques and tools to create engaging and informative maps. This goes beyond simply displaying data; it involves designing maps that effectively communicate information and enable users to explore the data in meaningful ways.

I utilize tools like ArcGIS Pro, QGIS, and web mapping libraries (Leaflet, OpenLayers) to build maps with interactive elements such as:

- Pop-ups: Displaying detailed information about features when clicked.

- Tooltips: Providing brief summaries of features on hover.

- Layers: Allowing users to switch between different datasets and visualize information at various scales.

- Legends: Clearly explaining the meaning of symbols and colors used on the map.

- Interactive widgets: Adding functionalities such as zoom sliders, search bars, time sliders, and measurement tools.

For example, I developed an interactive map for a city planning department, enabling users to explore proposed development projects, view zoning regulations, and provide feedback. The map included interactive layers for different datasets and tools for measuring distances and areas.

Key Topics to Learn for GIS Mapping and Location Tracking Interview

- Spatial Data Models: Understanding vector and raster data, their strengths and weaknesses, and when to apply each. Consider practical examples of data representation in different scenarios.

- Geographic Coordinate Systems (GCS) and Projections: Mastering concepts like latitude/longitude, UTM, and State Plane coordinates. Be prepared to discuss the implications of different projections on spatial analysis.

- Data Acquisition and Processing: Familiarize yourself with various methods of obtaining spatial data (e.g., GPS, remote sensing, surveying) and the techniques used for cleaning, transforming, and managing this data (e.g., geoprocessing tools).

- Spatial Analysis Techniques: Develop a strong understanding of techniques like buffering, overlay analysis, network analysis, and spatial interpolation. Be ready to discuss how these are used to solve real-world problems.

- GIS Software Proficiency: Demonstrate your expertise in industry-standard software like ArcGIS, QGIS, or other relevant platforms. Highlight your experience with specific tools and functionalities.

- Cartography and Map Design: Understand the principles of effective map design, including symbolization, labeling, and legend creation. Discuss the importance of communicating information clearly and effectively through maps.

- Location Tracking Technologies: Familiarize yourself with GPS, RFID, and other location tracking technologies. Be prepared to discuss their applications, limitations, and accuracy considerations.

- Database Management Systems (DBMS) and SQL: Demonstrate understanding of how spatial data is stored and managed within a DBMS and your proficiency with SQL for querying and manipulating spatial data.

- Problem-Solving and Case Studies: Prepare examples showcasing how you’ve used GIS and location tracking to solve practical problems. Highlight your analytical skills and ability to interpret spatial data.

- Ethical Considerations in GIS: Understand the ethical implications of using location data and the importance of data privacy and security.

Next Steps

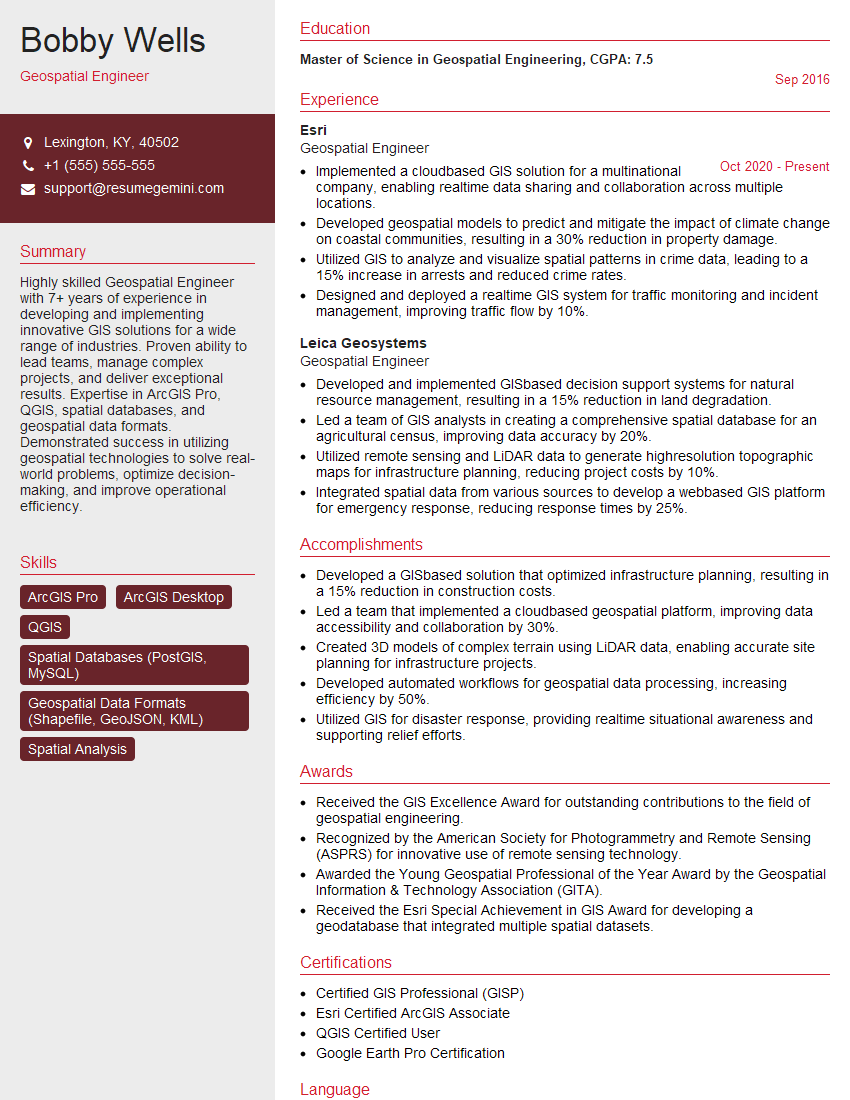

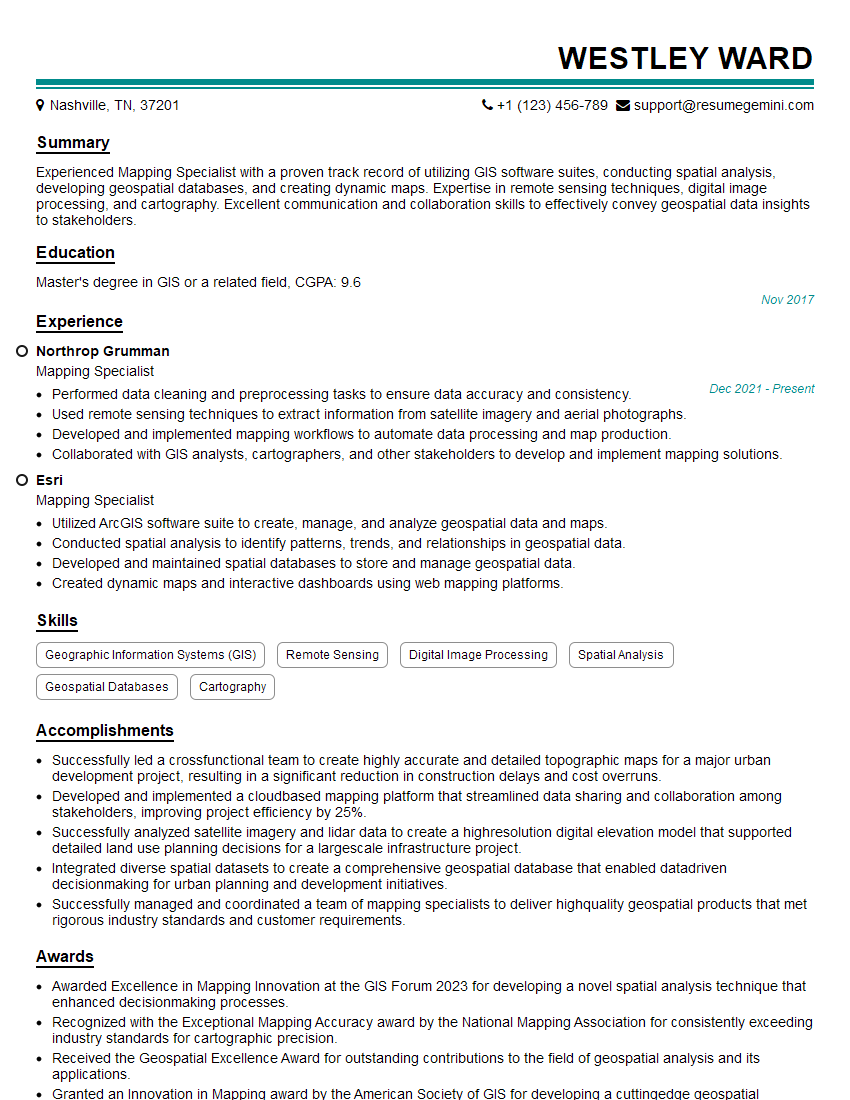

Mastering GIS Mapping and Location Tracking opens doors to exciting and rewarding careers in various fields. Building a strong foundation in these areas significantly enhances your job prospects and allows you to contribute meaningfully to innovative projects. To maximize your chances of landing your dream role, creating an ATS-friendly resume is crucial. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. We provide examples of resumes tailored to GIS Mapping and Location Tracking to give you a head start. Take the next step in your career journey – build a compelling resume with ResumeGemini today!
