The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Interactive and Immersive Experiences interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Interactive and Immersive Experiences Interview

Q 1. Explain the difference between VR, AR, and MR.

Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR) are all immersive technologies, but they differ significantly in how they blend the real and virtual worlds.

- VR completely immerses the user in a simulated environment, blocking out the real world. Think of putting on a headset and being transported to another planet. The user interacts solely with the virtual world.

- AR overlays digital content onto the real world, enhancing the user’s perception of their surroundings. Imagine using your phone’s camera to see furniture virtually placed in your living room before buying it. The real world remains central, with virtual elements added on top.

- MR combines elements of both VR and AR. Virtual objects are anchored to the real environment and respond to it: they can rest on real surfaces, be hidden behind real furniture, and react to the user’s hands. A good example is a hologram placed on your desk that you can push or that reacts to your touch.

The key difference lies in the level of immersion and interaction with the real world. VR is fully immersive, AR is overlaid, and MR allows for interaction between the two.

Q 2. Describe your experience with a specific 3D modeling software.

I have extensive experience with Blender, a free and open-source 3D creation suite. I’ve used it for various projects, from creating low-poly assets for VR games to designing complex environments for AR applications.

Blender’s versatility is what I appreciate most. Its powerful sculpting tools allow for organic modeling, while its robust mesh editing capabilities are ideal for creating hard-surface objects. I’m proficient in using its node-based material system to create realistic textures and lighting effects. For example, in one project, I used Blender to model a detailed historical building for an AR city tour app. I created individual assets like windows, doors, and architectural details, then assembled them to construct the complete model, carefully texturing them to match historical photographs.

Beyond modeling, I’ve also utilized Blender’s animation and rendering capabilities. Its Cycles renderer is particularly useful for generating high-quality visuals for immersive experiences. The ability to work with a wide range of file formats ensures seamless integration with other software in a production pipeline.

Q 3. What are the key principles of user experience (UX) design in immersive environments?

UX design in immersive environments presents unique challenges, requiring a shift from traditional screen-based interfaces to spatial interactions. Key principles include:

- Intuitive Navigation: Users need clear and natural ways to move through the virtual space. Avoid complex control schemes and prioritize intuitive hand gestures or controller inputs.

- Spatial Awareness: Design should account for the user’s physical space and ensure they don’t experience motion sickness or disorientation. Techniques like teleportation or smooth locomotion need careful consideration.

- Clear Visual Hierarchy: Guide the user’s attention effectively using visual cues, depth of field, and other design elements. Important information should stand out.

- Feedback Mechanisms: Provide immediate and clear feedback to user actions. Haptic feedback, sound effects, and visual cues are vital for creating a sense of presence and engagement.

- Accessibility Considerations: Design for users with various needs, including those with disabilities. This includes support for different input devices and methods.

For example, in designing a VR museum tour, a well-designed UX would involve intuitive hand gestures to navigate between exhibits, clear visual markers to indicate interactive elements, and haptic feedback when interacting with virtual artifacts.

Q 4. How do you ensure accessibility in immersive experiences?

Accessibility is paramount in immersive experiences. It requires considering a diverse range of users with different abilities and needs.

- Input Modalities: Support multiple input methods like hand tracking, voice commands, gaze tracking, and traditional controllers to cater to users with motor impairments.

- Sensory Considerations: Minimize motion sickness by offering comfort options such as teleportation, snap turning, or a narrowed field of view during movement, and avoid overwhelming visual stimuli. Provide options for adjusting visual and audio settings.

- Cognitive Accessibility: Design for users with cognitive disabilities by using clear and concise language, avoiding complex interfaces, and providing ample time for interaction.

- Text-to-Speech and Screen Readers: Incorporate features that convert on-screen text into audio or provide data in formats compatible with screen readers.

- Closed Captions and Subtitles: Provide closed captions and subtitles for all audio content.

For instance, in a VR training simulation, offering multiple input methods (voice commands alongside controllers) and adjustable difficulty levels ensures accessibility for a wider range of learners.

Q 5. What are some common challenges in developing interactive experiences?

Developing interactive experiences presents several challenges:

- Motion Sickness: Poorly designed locomotion can lead to motion sickness, negatively impacting the user experience. Careful consideration of camera movement and user interaction is crucial.

- Technical Limitations: Hardware limitations, especially processing power and rendering capabilities, can restrict the complexity and fidelity of immersive environments.

- Development Costs: Creating high-quality immersive experiences requires specialized skills and tools, resulting in higher development costs compared to traditional software.

- Accessibility Challenges: Ensuring accessibility for diverse users requires careful planning and design considerations across all aspects of the experience.

- Content Creation: Generating high-quality 3D models, textures, and audio requires significant time and effort.

Overcoming these challenges often involves iterative design, rigorous testing, and a strong understanding of both the technology and the user’s needs.

Q 6. Describe your process for designing an intuitive user interface for a VR application.

My process for designing an intuitive VR UI involves several steps:

- User Research: Understanding the target audience and their needs is paramount. This includes conducting user interviews and surveys to inform design decisions.

- Interaction Design: Choosing appropriate input methods and designing intuitive interactions is crucial. This often involves prototyping and testing different interaction schemes.

- Information Architecture: Organizing information spatially in a way that is easy to navigate and understand within the virtual environment.

- Prototyping and Iteration: Building low-fidelity prototypes to test different design options and refine the UI based on user feedback. This iterative process allows for early identification and correction of usability issues.

- Accessibility Considerations: Ensuring the UI is accessible to users with different abilities. This includes providing alternative input methods and clear visual cues.

For example, in a VR game, I’d design a menu system with large, easily selectable buttons, using hand gestures for navigation. I’d test different layouts and button sizes to ensure ease of use before finalizing the design.
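
When testing button sizes, it can help to reason in visual angle rather than in metres, because the same panel looks smaller the farther away it is placed. The sketch below converts between the two. The function names and the 5-degree target are illustrative assumptions, not a standard.

```python
import math


def world_size_for_angle(distance_m: float, angle_deg: float) -> float:
    """Width in metres an element needs to span angle_deg when viewed from distance_m."""
    return 2.0 * distance_m * math.tan(math.radians(angle_deg) / 2.0)


def angular_size_deg(distance_m: float, width_m: float) -> float:
    """Visual angle in degrees of an element width_m wide, viewed from distance_m."""
    return math.degrees(2.0 * math.atan(width_m / (2.0 * distance_m)))


# Hypothetical target: keep every button at least 5 degrees wide.
MIN_BUTTON_ANGLE_DEG = 5.0
button_width = world_size_for_angle(distance_m=2.0, angle_deg=MIN_BUTTON_ANGLE_DEG)
```

A menu placed 2 m away would need buttons of roughly 17 cm to meet that hypothetical target. The right threshold still has to come from user testing.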

Q 7. Explain your understanding of spatial audio and its importance in immersive environments.

Spatial audio refers to the reproduction of sound in a way that mimics its natural behavior in three-dimensional space. It goes beyond simply playing sounds from different speakers; it considers the listener’s position and orientation relative to the sound source.

In immersive environments, spatial audio is crucial for enhancing the sense of presence and realism. By accurately positioning sounds within the virtual space, it creates a more believable and engaging experience. For example, the sound of footsteps should seem to come from behind you if a character is walking behind you in a VR game. Similarly, the sound of a distant plane in an AR experience should feel far away and less intense than a car passing nearby.

Key aspects of spatial audio include:

- 3D Sound Positioning: Accurately placing sounds in three-dimensional space.

- Distance Attenuation: Sounds becoming quieter as they get farther away.

- Environmental Effects: Simulating how sounds reflect and reverberate in different environments (e.g., a large cathedral versus a small room).

- Head Tracking: Sounds shifting position in relation to the user’s head movements.

Without spatial audio, immersive experiences can feel flat and unrealistic, significantly diminishing the level of engagement and immersion.
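
A minimal sketch of three of these aspects (positioning, distance attenuation, and head tracking) on a flat ground plane might look like the following. It assumes an inverse-distance falloff and constant-power stereo panning. Production engines typically use HRTF-based rendering instead, and all names here are illustrative.

```python
import math


def distance_gain(distance: float, ref_distance: float = 1.0) -> float:
    """Inverse-distance attenuation: full volume inside ref_distance, then 1/d falloff."""
    return ref_distance / max(distance, ref_distance)


def stereo_gains(listener_pos, listener_yaw, source_pos, ref_distance=1.0):
    """Return (left, right) gains for a source on the ground plane.

    Positions are (x, z) tuples. Yaw is in radians; 0 faces +z and
    positive yaw turns the head toward +x (the listener's right).
    """
    dx = source_pos[0] - listener_pos[0]
    dz = source_pos[1] - listener_pos[1]
    distance = math.hypot(dx, dz)
    # Head tracking: express the source direction relative to where the head faces.
    azimuth = math.atan2(dx, dz) - listener_yaw
    # Constant-power pan: sin(azimuth) is -1 (hard left) to +1 (hard right).
    # Sources directly behind pan to the centre; this simple model cannot
    # distinguish front from back.
    pan = (math.sin(azimuth) + 1.0) * math.pi / 4.0
    gain = distance_gain(distance, ref_distance)
    return gain * math.cos(pan), gain * math.sin(pan)


ahead = stereo_gains((0.0, 0.0), 0.0, (0.0, 2.0))          # equal in both ears
head_turned = stereo_gains((0.0, 0.0), math.pi / 2, (0.0, 2.0))  # now on the left
```

Turning the head 90 degrees to the right moves the same source entirely into the left channel, which is the behaviour the head-tracking bullet describes.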

Q 8. How do you handle user input and interaction in VR/AR applications?

Handling user input in VR/AR is fundamentally different from traditional interfaces. Instead of a mouse and keyboard, we rely on a variety of sensors and input devices to translate real-world actions into digital commands.

This involves several key aspects:

- Controllers: These are the most common input method, allowing users to interact with virtual objects using buttons, triggers, and joysticks. We programmatically map these inputs to specific actions within the application. For example, a trigger press might fire a weapon in a game, or select an object in a design application.

- Hand Tracking: More advanced headsets allow for direct hand tracking, eliminating the need for controllers altogether. The application tracks the position and orientation of the user’s hands, interpreting gestures like pointing, grabbing, or manipulating virtual objects. This requires sophisticated algorithms to accurately interpret hand movements and prevent false positives.

- Gaze Interaction: Headsets with eye tracking can use gaze as an input method. This can be used for selecting objects by looking at them, or for highlighting what the user is about to interact with. Combining gaze with other input methods allows for more intuitive and natural interactions.

- Voice Control: Voice commands provide a hands-free interaction option, allowing users to control aspects of the application using natural language. This requires robust speech recognition and natural language processing (NLP) to accurately interpret commands and handle potential ambiguities.

For instance, in a virtual training simulation I developed, we used a combination of controllers for manipulating tools and hand tracking for more natural object interactions. The system dynamically adjusted the input method based on the context of the task, enhancing usability and immersion.
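
One way to picture context-dependent input handling is a small routing table keyed by task context and input event. This is only a sketch: the class, the context names, and the event names are hypothetical and not tied to any particular SDK.

```python
from typing import Callable, Dict, List, Tuple


class InputRouter:
    """Routes (context, event) pairs to handler callbacks."""

    def __init__(self) -> None:
        self._bindings: Dict[Tuple[str, str], Callable[[], None]] = {}
        self.context = "default"

    def bind(self, context: str, event: str, handler: Callable[[], None]) -> None:
        self._bindings[(context, event)] = handler

    def dispatch(self, event: str) -> bool:
        """Run the handler bound to this event in the current context, if any."""
        handler = self._bindings.get((self.context, event))
        if handler is None:
            return False
        handler()
        return True


log: List[str] = []
router = InputRouter()
router.bind("tool_use", "trigger_press", lambda: log.append("activate_tool"))
router.bind("object_handling", "pinch_gesture", lambda: log.append("grab_object"))

router.context = "tool_use"
router.dispatch("trigger_press")   # handled: controller input drives the tool
router.dispatch("pinch_gesture")   # ignored: hand gestures are inactive here
```

Keeping the bindings in data rather than in branching code makes it easier to add an alternative input method for the same action later.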

Q 9. What are some best practices for optimizing performance in immersive experiences?

Optimizing performance in immersive experiences is crucial for delivering a smooth and enjoyable user experience. Lag, stuttering, or low frame rates can quickly break the illusion of presence and lead to motion sickness.

My optimization strategies usually involve:

- Level of Detail (LOD): Dynamically adjusting the level of detail of objects based on their distance from the user. Objects far away require less processing power, improving performance without compromising visual fidelity in close-range interactions.

- Occlusion Culling: This technique skips objects that are completely hidden behind other objects, which can significantly reduce the rendering load. Only what the user can actually see gets drawn.

- Texture Optimization: Using appropriately sized and compressed textures minimizes memory usage and improves loading times. We often use texture atlases to combine multiple textures into a single image.

- Shader Optimization: Efficiently written shaders can significantly impact performance. Understanding the hardware capabilities and employing appropriate rendering techniques are crucial.

- Asset Optimization: Using optimized 3D models and animations reduces the processing load on the system. Reducing polygon counts and using efficient animation techniques such as skeletal animation and blending are essential steps.

- Multithreading: Distributing the workload across multiple processor cores, crucial for maximizing performance across high-end hardware.

For example, in a large-scale VR architectural walkthrough I worked on, implementing LOD and occlusion culling reduced the rendering load by over 60%, resulting in a significantly smoother and more enjoyable user experience.
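
The LOD idea can be sketched as a distance lookup. The thresholds below are made-up values for illustration. Engines such as Unity and Unreal provide their own LOD systems, so this only shows the underlying logic.

```python
import bisect
import math

# Hypothetical switch distances in metres: below 10 m use LOD 0 (full detail),
# 10-30 m use LOD 1, 30-80 m use LOD 2, beyond 80 m use LOD 3 (lowest detail).
LOD_DISTANCES = [10.0, 30.0, 80.0]


def select_lod(camera_pos, object_pos, thresholds=LOD_DISTANCES) -> int:
    """Return the LOD index for an object based on its distance from the camera."""
    distance = math.dist(camera_pos, object_pos)
    return bisect.bisect_right(thresholds, distance)


near = select_lod((0.0, 0.0, 0.0), (0.0, 0.0, 5.0))
far = select_lod((0.0, 0.0, 0.0), (0.0, 0.0, 100.0))
```

In practice a small hysteresis band around each threshold helps avoid visible popping when an object hovers near a switch distance.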

Q 10. How familiar are you with different VR/AR headsets and their capabilities?

I have extensive experience with a variety of VR/AR headsets, including:

- Oculus Rift/Quest: Familiar with the external-sensor tracking of the original Rift, the inside-out tracking of the Rift S and Quest, the controller input system, and the development tools.

- HTC Vive/Vive Pro: Experienced with room-scale tracking and the associated challenges of setting up and calibrating the system, along with their controller interfaces.

- Microsoft HoloLens: Knowledgeable in developing mixed reality experiences and utilizing spatial mapping capabilities.

- Magic Leap: Understanding its unique approach to augmented reality and its limitations, particularly regarding field of view.

My understanding extends beyond just the hardware to the SDKs and development tools associated with each platform. This understanding allows me to select the most suitable hardware and platform for a given project based on its specific requirements and target audience. For example, for a high-fidelity, room-scale VR experience, I might choose the HTC Vive Pro, while a lightweight AR experience might best utilize the HoloLens.

Q 11. Describe your experience with game engines like Unity or Unreal Engine.

I’m proficient in both Unity and Unreal Engine, having used them extensively for developing VR and AR applications. My experience encompasses:

- Unity: I’ve built numerous projects using Unity’s robust ecosystem, leveraging its ease of use and extensive asset store for rapid prototyping and development. I’m comfortable using C# scripting to implement complex interactions and game logic. I’m also familiar with various Unity features specific to VR/AR development, such as VR input handling, spatial audio, and optimization techniques.

- Unreal Engine: I’ve utilized Unreal Engine for projects requiring high-fidelity visuals and complex physics simulations. I’m familiar with its Blueprint visual scripting system, as well as C++ for more demanding tasks. Unreal Engine’s powerful rendering capabilities make it ideal for visually stunning and immersive experiences.

The choice between the two depends heavily on project requirements. Unity often provides a quicker development cycle for prototyping and simpler projects, whereas Unreal Engine’s strengths lie in high-end visuals and complex simulations. I can seamlessly transition between the two based on the specific needs of each project.

Q 12. What are some ethical considerations when designing immersive experiences?

Ethical considerations are paramount in designing immersive experiences. The power of VR/AR to create highly believable and engaging environments necessitates careful consideration of several key areas:

- Privacy: VR/AR often collects significant user data, including eye tracking and spatial movement. It’s crucial to obtain informed consent and clearly outline how user data will be used and protected. Anonymization and data minimization are important strategies.

- Accessibility: Designing immersive experiences that are inclusive and accessible to individuals with disabilities is essential. This requires careful consideration of different input methods, visual and auditory cues, and cognitive load.

- Safety: VR/AR experiences can sometimes cause motion sickness, disorientation, or other physical discomfort. It’s crucial to implement measures to mitigate these risks. Clear safety warnings and appropriate onboarding are vital.

- Misinformation and Manipulation: VR/AR’s ability to create realistic simulations can be exploited to spread misinformation or manipulate users. It’s essential to be mindful of the potential for misuse and strive to design experiences that are informative and truthful.

- Mental Health: Highly immersive experiences can have a significant impact on users’ mental and emotional states. It’s important to consider the potential for negative psychological effects and to design experiences that are positive and uplifting.

For instance, in a project simulating a hazardous environment, we carefully considered the safety implications and incorporated features to reduce the risk of motion sickness, such as providing frequent rest periods and allowing users to easily exit the simulation.

Q 13. How do you conduct user testing for interactive experiences?

User testing is an integral part of the development process for interactive experiences. My approach involves a multi-stage process:

- Usability Testing: Observing users interacting with the experience to identify usability issues and areas for improvement. We employ think-aloud protocols, asking users to verbalize their thoughts and actions.

- Playtesting: Similar to usability testing but more focused on the enjoyment and engagement of the experience. We collect feedback on the overall flow, difficulty, and immersion level.

- A/B Testing: Comparing different versions of the experience to determine which performs better based on user feedback and metrics like task completion rate and engagement time.

- Surveys and Questionnaires: Gathering quantitative and qualitative data through post-session surveys to assess user satisfaction, identify pain points, and measure overall user experience.

- Eye Tracking: Using eye tracking technology to understand user attention and gaze patterns. This provides insight into areas of engagement or confusion.

For a recent project, we conducted iterative playtesting sessions with a diverse group of participants. This feedback led to several key improvements in the game’s controls and overall user experience. We used eye-tracking to help us design more effective visual cues.
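
For the A/B comparison, one common way to check whether a difference in task completion rate is more than noise is a two-proportion z-test. The sketch below is a swapped-in illustration rather than the method used in the project above, and the sample numbers are invented. With the small groups typical of VR studies the normal approximation is rough, so treat the result as a guide only.

```python
import math


def two_proportion_z(success_a: int, n_a: int, success_b: int, n_b: int):
    """Return (z, two-sided p-value) comparing completion rates of variants A and B."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n_a + 1.0 / n_b))
    if std_err == 0.0:
        # Everyone succeeded or everyone failed in both groups: no evidence of a difference.
        return 0.0, 1.0
    z = (p_b - p_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value


# Invented example: 30 of 50 users completed the task with layout A, 42 of 50 with layout B.
z, p_value = two_proportion_z(30, 50, 42, 50)
```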

Q 14. Explain your experience with motion tracking and its challenges.

Motion tracking is the cornerstone of immersive experiences, allowing the system to accurately track the user’s position and orientation in the virtual environment. However, it presents unique challenges:

- Accuracy and Precision: Achieving accurate and precise tracking is crucial for avoiding disorientation and motion sickness. Factors like environmental conditions (lighting, occlusion), sensor limitations, and latency can all affect tracking accuracy.

- Drift: Over time, tracking systems can drift, causing the virtual environment to slowly deviate from the user’s actual position. Robust algorithms and calibration techniques are required to minimize drift.

- Occlusion: Objects blocking the view of the tracking sensors can cause tracking errors or complete loss of tracking. This is particularly problematic in room-scale VR systems.

- Latency: The delay between the user’s movement and the system’s response is another critical factor. High latency can lead to motion sickness and a degraded user experience. Efficient algorithms and optimized hardware are necessary to minimize latency.

- Calibration: Setting up and calibrating the tracking system correctly is often a time-consuming and complex process. The calibration process must be intuitive and user-friendly for a positive user experience.

In one project, we addressed occlusion issues by incorporating multiple sensors and employing sophisticated filtering algorithms to compensate for temporary tracking losses. We also implemented real-time calibration adjustments to minimize drift and enhance the overall tracking stability.
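
As a rough illustration of how filtering can bridge short tracking losses and limit drift, here is a one-dimensional complementary-style filter. It is a simplified stand-in, not the algorithm from that project: it assumes an inertial velocity estimate that is always available and an optical position fix that is sometimes occluded (passed as `None`).

```python
from typing import Optional


class FusedTracker1D:
    """Blends dead-reckoned motion with an optional drift-free position fix."""

    def __init__(self, blend: float = 0.2) -> None:
        self.position = 0.0
        self.blend = blend  # how strongly each optical fix corrects the estimate

    def update(self, velocity: float, dt: float, optical: Optional[float]) -> float:
        # Predict by integrating inertial velocity; on its own this drifts over time.
        predicted = self.position + velocity * dt
        if optical is None:
            # Sensor occluded: carry on with the prediction alone.
            self.position = predicted
        else:
            # Pull the prediction toward the optical fix to cancel accumulated drift.
            self.position = predicted + self.blend * (optical - predicted)
        return self.position


tracker = FusedTracker1D(blend=0.5)
during_occlusion = tracker.update(velocity=1.0, dt=0.1, optical=None)
after_recovery = tracker.update(velocity=0.0, dt=0.1, optical=0.3)
```

A higher `blend` corrects drift faster but lets more optical jitter through, so the value is a tuning trade-off.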

Q 15. How do you create a sense of presence and immersion in your designs?

Creating a sense of presence and immersion in interactive experiences hinges on meticulously crafting a believable and responsive virtual environment. This involves leveraging several key design principles.

- High-fidelity visuals and audio: Realistic visuals and immersive soundscapes are crucial. Think of the difference between a grainy, low-resolution video and a crisp, high-definition one – the latter immediately draws you in more completely. Similarly, spatial audio, where sounds appear to originate from specific locations in the virtual space, significantly enhances immersion.

- Interactive elements and agency: Users need to feel like active participants, not passive observers. This means incorporating elements they can manipulate, puzzles they can solve, and choices they can make that impact the narrative or environment. The feeling of control is paramount.

- Consistent and believable world-building: A well-defined world with consistent rules, physics, and narrative logic fosters believability. Inconsistent details break the spell of immersion. For example, if gravity behaves erratically, the experience feels less real.

- Sensory feedback beyond sight and sound: Haptic feedback (physical sensations), realistic smells (olfactory stimulation – though still in its early stages), and even temperature changes can significantly enhance presence, making the experience more multi-sensory and engaging.

For example, in a virtual museum tour, high-resolution 3D models of artifacts, ambient sounds of a museum hall, and the ability to zoom in and examine details contribute to immersion. Adding haptic feedback to a virtual button press would further solidify this feeling of presence.

Q 16. What are the key considerations for designing for different types of immersive hardware?

Designing for different immersive hardware requires a nuanced understanding of each platform’s capabilities and limitations. Key considerations include:

- Field of View (FOV): Head-mounted displays (HMDs) vary widely in their FOV. A wider FOV provides a more expansive and immersive experience, but may be more computationally demanding. Designs need to adapt to the available FOV to avoid visual distortions or clipping.

- Resolution and refresh rate: Higher resolution and refresh rates lead to smoother and more comfortable experiences, reducing motion sickness. Designs must account for the specific resolution and refresh rate of the target hardware to ensure optimal visual quality.

- Tracking accuracy and latency: The accuracy of head and hand tracking directly impacts the sense of presence. High latency (delay between movement and on-screen response) can cause nausea. Designs should optimize for the specific tracking capabilities of the hardware and account for potential latency.

- Input methods: Different hardware supports various input methods, including controllers, hand tracking, voice control, and eye tracking. The design must leverage the most suitable input methods for the given hardware and user interaction goals.

- Form factor and ergonomics: The physical design of the hardware impacts user comfort and usability. The design should be tailored to the specific form factor, ensuring comfortable and intuitive interaction.

For instance, a VR experience designed for a high-end HMD with advanced hand tracking capabilities can offer more complex and nuanced interactions than one designed for a mobile VR headset. The design needs to be adaptable across the range of hardware to deliver a consistent and engaging experience.
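
The refresh rate translates directly into a per-frame time budget, which is a quick way to compare targets. The sketch below assumes CPU and GPU work overlap so that the slower of the two sets the frame time, and it reserves a hypothetical 10% headroom. Both are simplifying assumptions.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to produce one frame at the given refresh rate."""
    return 1000.0 / refresh_hz


def fits_budget(cpu_ms: float, gpu_ms: float, refresh_hz: float, headroom: float = 0.1) -> bool:
    """Check whether the slower of CPU and GPU fits in the budget minus headroom."""
    budget = frame_budget_ms(refresh_hz) * (1.0 - headroom)
    return max(cpu_ms, gpu_ms) <= budget


ok_at_90 = fits_budget(cpu_ms=6.0, gpu_ms=9.0, refresh_hz=90.0)    # about 10 ms usable
ok_at_120 = fits_budget(cpu_ms=6.0, gpu_ms=9.0, refresh_hz=120.0)  # about 7.5 ms usable
```

The same scene that fits a 90 Hz headset can miss the budget at 120 Hz, which is why per-device profiling matters.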

Q 17. Describe your experience with creating 360° video content.

My experience with 360° video content involves both creation and optimization for different platforms. I’ve worked on projects ranging from documentary films to interactive marketing campaigns. This typically involves several steps:

- Planning and shooting: Careful planning of camera placement and movement is crucial to avoid disorienting the viewer. We often use multiple cameras to capture overlapping footage, enhancing stitching quality.

- Stitching and post-production: The footage from multiple cameras needs to be stitched together seamlessly to create a complete 360° view. This requires specialized software and expertise to correct distortions and ensure a smooth viewing experience. Post-production also includes color correction, audio mixing, and potentially adding interactive elements.

- Optimization for different platforms: 360° videos need to be optimized for various platforms, including VR headsets, mobile devices, and web browsers. This involves adjusting resolution, bitrate, and format to ensure smooth playback and optimal performance.

- Interactive elements (optional): Adding hotspots, interactive elements, and branching narratives to a 360° video enhances engagement. This allows users to navigate the environment and experience the content at their own pace, transforming passive viewing into active participation.

One project involved creating a 360° virtual tour of a historical site, where users could explore the location independently or follow a guided narrative. This required careful consideration of camera placement to showcase key features and create a compelling sense of place.
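
When placing hotspots, a viewing direction has to be mapped to a pixel in the stitched frame. The sketch below assumes the video is stored in equirectangular projection, a common choice for 360° content, with +z as the forward direction at the image centre, +x to the right, and +y up. The axis convention and function name are assumptions for illustration.

```python
import math


def direction_to_equirect(x: float, y: float, z: float, width: int, height: int):
    """Map a 3D view direction to (u, v) pixel coordinates in an equirectangular frame."""
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("direction must be non-zero")
    longitude = math.atan2(x, z)       # -pi..pi, 0 is straight ahead
    latitude = math.asin(y / length)   # -pi/2..pi/2, 0 is the horizon
    u = (longitude / (2.0 * math.pi) + 0.5) * width
    v = (0.5 - latitude / math.pi) * height
    return u, v


forward = direction_to_equirect(0.0, 0.0, 1.0, 4096, 2048)   # image centre
to_right = direction_to_equirect(1.0, 0.0, 0.0, 4096, 2048)  # three quarters across
```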

Q 18. How do you incorporate user feedback into the iterative design process?

Incorporating user feedback is integral to iterative design. We employ several methods:

- Usability testing: We conduct regular usability tests with target users, observing their interactions and gathering feedback on navigation, clarity, and overall experience. This often involves think-aloud protocols, where users verbalize their thought processes while interacting with the experience.

- Surveys and questionnaires: Surveys and questionnaires are used to gather quantitative and qualitative data on user preferences, satisfaction, and suggestions for improvement. This provides a broad overview of user opinions.

- A/B testing: For specific design elements, A/B testing allows us to compare different versions and assess which performs better. For instance, comparing two different navigation schemes.

- Heatmaps and analytics: Tracking user interactions with heatmaps and analytics tools helps identify areas of high and low engagement, informing design iterations. These data points offer objective insights into user behavior.

- Iterative prototyping: We develop quick prototypes early in the design process to test core mechanics and gather feedback before investing significant time in high-fidelity assets. This allows for rapid design iteration and cost-effectiveness.

Feedback is used not just to fix bugs, but also to refine the overall design, ensuring it aligns with user expectations and preferences. This iterative process leads to a more polished and user-friendly final product.
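
A heatmap of this kind boils down to counting interaction or gaze samples per grid cell. The following is a minimal sketch with invented sample data. Real analytics tools add time weighting and smoothing on top.

```python
from collections import Counter
from typing import Iterable, Tuple


def interaction_heatmap(samples: Iterable[Tuple[float, float]], cell_size: float) -> Counter:
    """Count samples per grid cell; keys are (column, row) cell indices."""
    counts: Counter = Counter()
    for x, y in samples:
        cell = (int(x // cell_size), int(y // cell_size))
        counts[cell] += 1
    return counts


# Invented samples in panel coordinates (metres).
samples = [(0.1, 0.1), (0.4, 0.2), (1.2, 0.1)]
heatmap = interaction_heatmap(samples, cell_size=0.5)
hottest_cell, hits = heatmap.most_common(1)[0]
```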

Q 19. Explain your understanding of haptics technology and its applications.

Haptics technology refers to the use of tactile feedback to enhance user interaction with digital content. It involves simulating the sense of touch through various means, generating sensations like vibration, pressure, or temperature.

- Actuators: These are the devices responsible for creating the haptic feedback. They range from simple vibrotactile motors in game controllers to more complex systems that can simulate texture and force feedback.

- Applications: Haptics are used in a broad range of applications, including gaming (providing realistic feedback from weapons or environmental interactions), virtual and augmented reality (improving sense of presence by simulating touching virtual objects), medical simulation (allowing trainees to practice procedures on virtual patients with realistic tactile response), industrial training (simulating operating machinery, offering a safer alternative to real-world training), and assistive technologies (helping people with visual impairments interact with digital information through touch).

- Types of Haptics: Haptic feedback comes in several forms: vibrotactile (simple vibrations, often used in controllers), kinesthetic or force feedback (providing resistance or force, typically used in more sophisticated systems like robotic arms), and tactile feedback (simulating texture and surface properties, still an area of active research).

For example, in a VR surgery simulator, haptic feedback allows trainees to feel the resistance of virtual tissues, improving their procedural skills. In a gaming context, haptic feedback in a racing game can simulate the impact of hitting a curb, adding to the realism.
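
For vibrotactile feedback like the racing example, a simple approach is to scale the pulse with the strength of the collision. The mapping below is a hypothetical sketch. The impulse range and durations are invented, and a real project would send the result through the platform's own haptics API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HapticPulse:
    amplitude: float   # normalised 0.0 to 1.0
    duration_ms: int


def pulse_for_impact(impulse: float, max_impulse: float = 10.0,
                     min_ms: int = 20, max_ms: int = 120) -> HapticPulse:
    """Map a collision impulse to a vibration pulse, clamped to the device range."""
    strength = min(max(impulse / max_impulse, 0.0), 1.0)
    duration = round(min_ms + strength * (max_ms - min_ms))
    return HapticPulse(amplitude=strength, duration_ms=duration)


curb_tap = pulse_for_impact(5.0)    # medium hit
wall_hit = pulse_for_impact(50.0)   # clamped to the maximum
```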

Q 20. What are some strategies for storytelling in immersive environments?

Storytelling in immersive environments requires a different approach than traditional methods. The key is to leverage the unique capabilities of the medium.

- Spatial storytelling: Use the 3D space to reveal information gradually, unfolding the narrative as the user explores the environment. This might involve discovering clues, finding hidden objects, or witnessing events from multiple perspectives.

- Interactive narrative: Give the user agency and choice, allowing them to influence the story’s progression and outcome. This encourages engagement and makes the story feel more personal.

- Environmental storytelling: Let the environment itself tell part of the story. Use visual cues, soundscapes, and interactive elements to convey information without explicit exposition. Think of the subtle details that add to the atmosphere in a game level or film setting.

- Emotional engagement: Use the sensory richness of the environment to evoke emotions in the user. This might involve using sound design, lighting, and visual effects to create a mood, or incorporating haptic feedback to enhance emotional impact.

- Multi-sensory engagement: Immersive storytelling should engage as many senses as possible for a richer and more impactful narrative. This might involve incorporating sounds, visual effects, temperature changes and even smells.

For instance, a historical reenactment in VR could allow users to explore a battlefield, find personal artifacts and letters, and witness key events from multiple perspectives, creating a much more engaging narrative than a simple documentary.

Q 21. How do you manage large datasets for immersive experiences?

Managing large datasets for immersive experiences requires efficient data management strategies. This is especially crucial for high-fidelity 3D models, high-resolution textures, and complex simulations.

- Data compression techniques: Employing efficient compression algorithms minimizes storage space and bandwidth requirements. Techniques like texture compression and mesh simplification are crucial.

- Level of Detail (LOD): Using LOD techniques renders objects at different levels of detail based on their distance from the user. This reduces rendering load, improves performance, and helps the experience scale across different hardware.

- Streaming and on-demand loading: Instead of loading all data at once, stream assets on demand as the user interacts with the environment. This reduces initial load times and resource consumption.

- Cloud-based storage and processing: Leveraging cloud services for storage and processing can handle large datasets more effectively, especially for experiences involving multiple users or complex simulations.

- Data optimization and pre-processing: Optimizing models by reducing polygon count and optimizing textures dramatically reduces file sizes, making the experience more efficient.

- Database management systems: Employing database management systems to organize and manage data effectively is critical for maintaining data integrity and accessibility, particularly in complex projects.

For example, in a large-scale virtual city environment, using LOD would display buildings with high detail only when the user is close to them, allowing for smooth navigation while keeping resources low. Streaming textures and 3D models as the user moves around the city further helps efficiency.
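
The LOD and streaming ideas in the city example can be sketched in a few lines of Python. This is a minimal illustration rather than engine code: the threshold distances, the function names `select_lod` and `assets_to_stream`, and the asset dictionary layout are all assumptions made for the example.

```python
import math

# Hypothetical switch distances in metres; LOD 0 is the most detailed mesh.
LOD_THRESHOLDS = [15.0, 40.0, 100.0]


def select_lod(camera_pos, object_pos, thresholds=LOD_THRESHOLDS):
    """Return the LOD index to render: 0 (highest detail) up to len(thresholds)."""
    distance = math.dist(camera_pos, object_pos)
    for lod, limit in enumerate(thresholds):
        if distance < limit:
            return lod
    return len(thresholds)  # beyond the last threshold: lowest detail


def assets_to_stream(camera_pos, assets, radius):
    """Return the names of assets within `radius` of the camera, nearest first.

    `assets` maps an asset name to its (x, y, z) position.
    """
    nearby = [(math.dist(camera_pos, pos), name) for name, pos in assets.items()]
    return [name for distance, name in sorted(nearby) if distance <= radius]
```

In practice a game engine handles both steps internally, but the logic is the same: pick a cheaper mesh as distance grows, and load only what is near the user.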

Q 22. Describe your experience with version control systems in the context of interactive design.

Version control systems (VCS) are crucial in interactive design, especially in collaborative projects. Think of them as a meticulous record-keeper for your project’s evolution, allowing multiple designers and developers to work simultaneously without overwriting each other’s work. My experience primarily involves Git, a distributed VCS. I’m proficient in branching, merging, resolving conflicts, and using platforms like GitHub and GitLab for code and asset management. In interactive design, this means we can track changes to 3D models, code for animations, UI elements, and even design documents. For instance, if a designer revises a character model, we can easily revert to an earlier version if needed and compare iterations. Because binary assets such as models and textures usually cannot be merged automatically, we coordinate who edits a given file at a time and typically store large assets with Git LFS. This significantly reduces the risk of errors and lost work, facilitating efficient team collaboration and improving project quality.

In a recent project developing a VR escape room, Git allowed us to manage multiple levels, puzzles, and character models independently, ensuring a streamlined workflow and easy integration of updates.

Q 23. What are some techniques for optimizing 3D models for VR/AR performance?

Optimizing 3D models for VR/AR is vital for smooth performance, as high-poly models can cause low frame rates, which in turn contribute to motion sickness. Several techniques are crucial:

- Level of Detail (LOD): Creating multiple versions of a model with varying polygon counts. The system renders the lowest-poly version at a distance and switches to higher-poly versions as the user gets closer.

- Texture optimization: Using compressed textures (e.g., DXT or ASTC formats) to reduce file size and memory use without significant loss of visual quality. Careful texture resolution selection and smart atlasing (combining multiple textures into one) are also key.

- Model simplification: Reducing the polygon count with techniques such as decimation or edge collapsing. This shrinks the model, so the system has fewer calculations to perform.

- Mesh optimization: Ensuring that models have well-structured geometry, including clean triangles and correct normals.

- Occlusion culling: Skipping objects that are not visible to the camera (e.g., objects hidden behind walls), which reduces the rendering load. It is very effective in scenes where much of the geometry is hidden, such as interiors.

For example, in a VR architectural walkthrough, we used LODs for building models, ensuring smooth performance even with complex geometries. High-resolution textures were used only for close-up views, while lower-resolution versions were used for distant views. This reduced the overall workload on the VR headset significantly.
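
To make the texture savings concrete, here is a rough back-of-the-envelope calculation in Python. The bytes-per-pixel figures follow from the block sizes of each format; the function name `texture_bytes` and the choice of formats are assumptions for illustration, and real engines add some overhead for alignment and padding.

```python
# Bytes per pixel for a few common GPU texture formats.
BYTES_PER_PIXEL = {
    "RGBA8": 4.0,      # uncompressed, 8 bits per channel
    "BC1/DXT1": 0.5,   # 8 bytes per 4x4 pixel block
    "BC3/DXT5": 1.0,   # 16 bytes per 4x4 pixel block
    "ASTC 4x4": 1.0,   # 16 bytes per 4x4 pixel block
    "ASTC 8x8": 0.25,  # 16 bytes per 8x8 pixel block
}


def texture_bytes(width, height, fmt, mipmaps=True):
    """Approximate GPU memory for one texture, in bytes.

    A full mip chain adds roughly one third on top of the base level.
    """
    base = width * height * BYTES_PER_PIXEL[fmt]
    return int(base * 4 / 3) if mipmaps else int(base)


if __name__ == "__main__":
    for fmt in BYTES_PER_PIXEL:
        size_mib = texture_bytes(2048, 2048, fmt, mipmaps=False) / (1024 * 1024)
        print(f"{fmt:>9}: {size_mib:.2f} MiB")
```

For a 2048 x 2048 texture without mipmaps, this works out to 16 MiB uncompressed versus 2 MiB as DXT1, which is why format choice matters so much on memory-constrained headsets.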

Q 24. Explain your understanding of different interaction paradigms in immersive environments (e.g., hand tracking, controllers).

Immersive environments offer diverse interaction paradigms:

- Hand tracking: Uses cameras or sensors to detect hand movements, offering a natural and intuitive interaction style, such as reaching out and grabbing virtual objects. This adds realism, but requires sufficient processing power.

- Controllers: Devices like the Oculus Touch or HTC Vive controllers provide more precise and direct manipulation. They are reliable but lack the natural feel of hand tracking.

- Voice control: Lets users interact via voice commands, which is useful for hands-free operation or accessibility. However, it can be prone to misinterpretations.

- Gaze interaction: Lets users select or interact with elements by looking at them, usually in conjunction with other methods. It provides an intuitive interface but has limitations in complex interactions.

- Haptic feedback: Uses vibrations or other physical sensations to enhance immersion, adding another layer to the experience and improving feedback on user actions.

The optimal paradigm depends on the application’s context and target audience. A VR game might benefit from controllers for precise actions, while an AR application for interior design could utilize hand tracking for a more natural feel.
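
Gaze interaction is often implemented with a dwell timer, so that merely glancing at an element does not trigger it. The Python sketch below shows one way this could work; the class name `GazeDwellSelector`, the one-second default, and the per-frame `update` interface are assumptions for illustration, not the API of any particular SDK.

```python
class GazeDwellSelector:
    """Select a target once the gaze has rested on it for `dwell_time` seconds."""

    def __init__(self, dwell_time=1.0):
        self.dwell_time = dwell_time
        self.current_target = None
        self.elapsed = 0.0

    def update(self, gazed_target, dt):
        """Call once per frame.

        `gazed_target` is whatever the gaze ray currently hits (or None),
        and `dt` is the frame time in seconds. Returns the target when the
        dwell completes, otherwise None.
        """
        if gazed_target != self.current_target:
            # Gaze moved to something else: restart the timer.
            self.current_target = gazed_target
            self.elapsed = 0.0
            return None
        if gazed_target is None:
            return None
        self.elapsed += dt
        if self.elapsed >= self.dwell_time:
            self.elapsed = 0.0  # reset so the selection does not fire every frame
            return gazed_target
        return None
```

A shorter dwell time feels more responsive but increases accidental selections, which is one reason gaze is usually paired with a confirming input such as a button press or pinch.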

Q 25. How familiar are you with different development frameworks for AR applications?

I have experience with several AR development frameworks. ARKit (iOS) and ARCore (Android) are the dominant native SDKs for mobile AR development, providing essential features for motion tracking, plane detection, and anchor management. I’ve also used Unity and Unreal Engine, which are powerful game engines with robust AR capabilities; they offer cross-platform deployment and advanced rendering features. Vuforia Engine provides a comprehensive SDK focusing on image recognition and tracking, useful for creating marker-based AR experiences. My choice of framework depends on project requirements: for simple, single-platform mobile AR, ARKit or ARCore suffice, while complex, high-fidelity, or cross-platform experiences are better served by Unity or Unreal Engine.

For example, in a recent project creating an AR museum guide, we leveraged Vuforia for image recognition to trigger 3D models of artifacts when the user pointed their phone at corresponding images in a printed brochure.

Q 26. How would you approach designing an immersive experience for a specific target audience?

Designing an immersive experience for a specific target audience requires a deep understanding of their needs, preferences, and technical capabilities. First, user research is paramount; understanding the audience’s demographics, technical proficiency, and expectations helps shape the design. Next, accessibility is vital; consider users with impairments and ensure inclusivity, for example by providing alternative interaction methods and adjustable difficulty levels. Usability testing throughout the design process, including user surveys and direct feedback sessions, ensures the experience is intuitive and enjoyable. Finally, iterative design, involving continuous refinement based on user feedback, is critical for delivering a successful immersive experience. For instance, designing a VR training simulation for surgeons would require a different approach than creating an AR game for children; the former demands high fidelity and realistic interactions, while the latter needs simplicity and engagement.

Q 27. Describe a time you had to overcome a technical challenge in an immersive project.

In a project creating a large-scale VR environment, we encountered performance issues caused by the sheer amount of high-poly 3D models. The initial prototype suffered from significant lag and frame drops. To overcome this, we implemented a multi-stage optimization process. First, we reduced the polygon count of the 3D models using decimation techniques. Then we implemented LODs (levels of detail), which allowed the system to render lower-resolution models at a distance. We also optimized textures, using compression techniques without compromising visual quality significantly. Finally, we implemented occlusion culling, which significantly reduced the number of polygons the system needed to render at any given time. This systematic approach improved performance by over 50%, resulting in a significantly more immersive and enjoyable VR experience. The project shifted from unusable to a highly playable and responsive environment.

Q 28. What are your thoughts on the future of immersive technologies?

The future of immersive technologies is incredibly exciting. I foresee several key trends:

- Increased accessibility: The cost of hardware will continue to decrease, making VR and AR more accessible to the general public.

- Improved realism: Advancements in display technology, haptics, and tracking will lead to more realistic and immersive experiences.

- Seamless integration: VR and AR will be more seamlessly integrated into our daily lives, impacting various fields, including education, healthcare, and entertainment.

The Metaverse is likely to evolve further, offering persistent virtual environments for socializing, working, and playing. However, challenges remain in areas like standardization, overcoming motion sickness, and addressing ethical concerns related to data privacy and potential misuse of the technology. Despite these challenges, the potential impact of immersive technologies across many sectors is immense, and I’m optimistic about their future development.

Key Topics to Learn for Interactive and Immersive Experiences Interview

- User Experience (UX) and User Interface (UI) Design Principles: Understanding how to design engaging and intuitive interactions within immersive environments. Consider the impact of different input methods (VR controllers, hand tracking, gaze interaction) on the user experience.

- 3D Modeling and Animation: Familiarity with software and techniques for creating realistic and visually appealing 3D assets for immersive applications. This includes understanding different modeling techniques and animation principles for optimal performance.

- Virtual Reality (VR) and Augmented Reality (AR) Technologies: A strong grasp of the underlying technologies, hardware, and software platforms used in VR/AR development. Explore different VR/AR headsets and their capabilities.

- Spatial Audio and Sound Design: Understanding how to create immersive soundscapes that enhance the user experience. This includes binaural audio, 3D sound positioning, and the impact of sound on presence and immersion.

- Interactive Storytelling and Narrative Design: Designing compelling narratives that are engaging and emotionally resonant within interactive experiences. Consider how branching narratives and player agency can shape the story.

- Game Engine Technologies (Unity, Unreal Engine): Practical experience using game engines for building interactive and immersive applications. Focus on understanding scene management, scripting, and optimization techniques.

- Performance Optimization and Troubleshooting: The ability to identify and resolve performance bottlenecks in VR/AR applications, ensuring a smooth and enjoyable user experience. This includes understanding frame rates, rendering techniques, and memory management.

- Accessibility in Immersive Experiences: Designing inclusive experiences that are accessible to users with disabilities. This involves considering different types of impairments and adapting design to accommodate them.

Next Steps

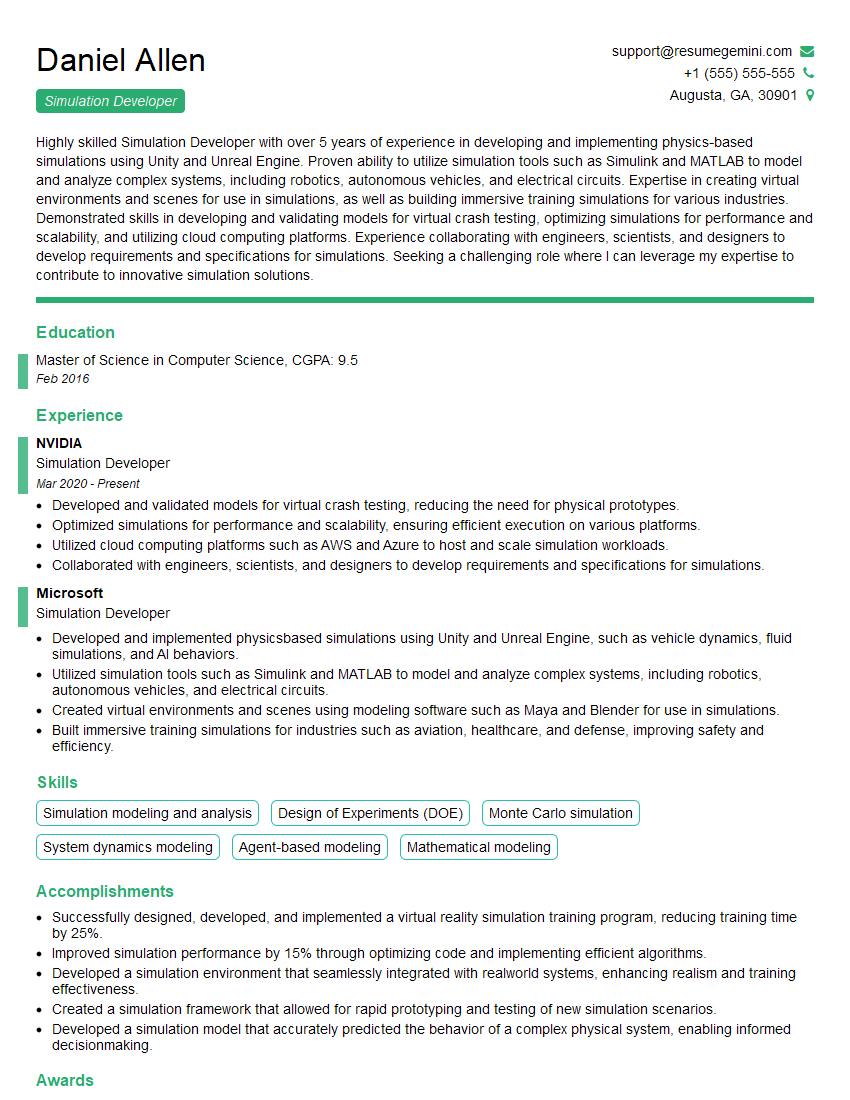

Mastering Interactive and Immersive Experiences opens doors to exciting career opportunities in rapidly growing fields like gaming, entertainment, education, and training. To maximize your job prospects, invest time in creating a strong, ATS-friendly resume that showcases your skills and experience effectively. ResumeGemini is a trusted resource that can help you build a professional and impactful resume, ensuring your qualifications stand out. Examples of resumes tailored to Interactive and Immersive Experiences are available to guide you.
