Preparation is the key to success in any interview. In this post, we’ll explore crucial Object Detection and Recognition in LiDAR Data interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Object Detection and Recognition in LiDAR Data Interview

Q 1. Explain the difference between LiDAR and other sensor modalities (e.g., camera, radar).

LiDAR, cameras, and radar are all sensor modalities used for environmental perception, but they differ significantly in how they gather and represent data. LiDAR (Light Detection and Ranging) uses lasers to measure distances to objects, creating a 3D point cloud representing the scene. This provides highly accurate depth information but can be sensitive to weather conditions like fog or rain. Cameras capture images by recording reflected light, offering rich color and texture information but lacking direct depth information – depth must be inferred using techniques like stereo vision or structure from motion. Radar, on the other hand, uses radio waves to detect objects. It’s less sensitive to weather conditions than LiDAR and offers good performance at long ranges, but its resolution is generally lower, providing less detailed information about object shapes.

Think of it this way: LiDAR is like a precise 3D scanner, cameras are like our eyes, offering a detailed visual representation, and radar is like a broad sonar, giving you a general sense of the surroundings.

Q 2. Describe the process of point cloud registration.

Point cloud registration is the process of aligning multiple point clouds acquired from different viewpoints or at different times. Imagine taking several photos of an object from different angles – registration is like stitching those photos together to create a complete 3D model. This involves finding a transformation (rotation and translation) that best aligns the points in one point cloud with the points in another. Common approaches include Iterative Closest Point (ICP) which iteratively refines the transformation by finding the closest points between clouds and minimizing the distance between them. Other methods leverage feature extraction and matching to find corresponding points across clouds before applying a transformation.

For example, in autonomous driving, point clouds from multiple LiDAR sensors on a vehicle need to be registered to create a comprehensive view of the environment. Accurate registration is crucial for tasks like mapping and object tracking.
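A minimal point-to-point ICP sketch in Python with NumPy makes the loop concrete. It assumes the two clouds overlap almost completely and start close to alignment. The function names `best_fit_transform` and `icp` are illustrative. Nearest neighbors are found by brute force, which only suits small clouds. Each iteration finds correspondences, solves for the best rigid transform with the SVD-based (Kabsch) method, and applies it.

```python
import numpy as np

def best_fit_transform(src, dst):
    """Least-squares rigid transform (R, t) mapping src onto dst (Kabsch / SVD)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)           # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:                      # fix an improper rotation (reflection)
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp(source, target, max_iters=50, tol=1e-8):
    """Point-to-point ICP with brute-force nearest neighbours (small clouds only)."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = source.copy()
    prev_err = np.inf
    for _ in range(max_iters):
        # 1. Correspondences: closest target point for every current source point.
        d2 = ((current[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        err = np.sqrt(d2[np.arange(len(current)), nn]).mean()
        # 2. Rigid transform that best aligns the matched pairs.
        R, t = best_fit_transform(current, target[nn])
        current = current @ R.T + t
        # 3. Accumulate into the total transform: new = R (R_total p + t_total) + t.
        R_total, t_total = R @ R_total, R @ t_total + t
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_total, t_total
```

For real scans, a k-d tree (for example `scipy.spatial.cKDTree`) or the ICP implementations in PCL or Open3D would replace the brute-force search. A maximum correspondence distance would also be used to reject pairs in non-overlapping regions.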

Q 3. What are common challenges in processing LiDAR data, and how do you address them?

Processing LiDAR data presents several challenges. One major challenge is the sheer volume of data. A single scan from a 64-beam spinning sensor contains on the order of 100,000 points, and such sensors stream over a million points per second. This necessitates efficient algorithms and data structures. Another significant challenge is noise and outliers: erroneous points caused by sensor limitations or environmental factors like reflections. Data sparsity in certain areas, especially at longer ranges, is another problem, leading to incomplete representations of the scene. Finally, variations in surface reflectivity create inconsistencies, because dark or specular surfaces may return few or no points.

We address these challenges through various techniques, including filtering to remove noise and outliers, downsampling to reduce data volume, interpolation to fill in sparse areas, and normalization to account for variations in reflectivity. Sophisticated algorithms that handle missing data and noise robustly are also critical.

Q 4. Explain different LiDAR point cloud filtering techniques.

LiDAR point cloud filtering aims to remove unwanted points, improving data quality and computational efficiency. Several techniques exist:

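- Statistical Outlier Removal: For each point, this method computes the mean distance to its k nearest neighbors. Points whose mean distance exceeds a global threshold are treated as outliers and removed. The threshold is typically the mean of these distances over the whole cloud plus a multiple of their standard deviation.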

- Radius Outlier Removal: This technique checks the number of neighboring points within a specified radius around each point. Points with fewer neighbors than a predefined threshold are classified as outliers and removed.

- Voxel Grid Filtering: This approach divides the point cloud into a 3D grid (voxels). For each voxel, only one point (e.g., the centroid) is retained, effectively downsampling the data. This significantly reduces computational load.

- Conditional Filtering: Filters points based on specific criteria, such as intensity, reflectivity, or elevation. This is useful for removing points from the ground or specific object classes.

The choice of filtering technique often depends on the application and the specific characteristics of the point cloud data.
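The two outlier filters above can be sketched in a few lines of NumPy. This illustrative version makes simple assumptions: it uses dense pairwise distances, so it only suits small clouds, and the function names and default parameters are hypothetical. It is not PCL's or Open3D's implementation, although those libraries expose the same ideas (for example PCL's `StatisticalOutlierRemoval` and `RadiusOutlierRemoval` filters).

```python
import numpy as np

def pairwise_distances(points):
    """Dense N x N Euclidean distance matrix (O(N^2) memory: small demos only)."""
    diff = points[:, None, :] - points[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=2))

def statistical_outlier_removal(points, k=8, std_ratio=2.0):
    """Keep points whose mean distance to their k nearest neighbours is at most
    mean + std_ratio * std of that statistic over the whole cloud."""
    d = pairwise_distances(points)
    knn = np.sort(d, axis=1)[:, 1:k + 1]     # column 0 is the point itself
    mean_d = knn.mean(axis=1)
    threshold = mean_d.mean() + std_ratio * mean_d.std()
    return points[mean_d <= threshold]

def radius_outlier_removal(points, radius=0.5, min_neighbors=3):
    """Keep points that have at least `min_neighbors` other points within `radius`."""
    d = pairwise_distances(points)
    counts = (d <= radius).sum(axis=1) - 1   # subtract the point itself
    return points[counts >= min_neighbors]
```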

Q 5. How do you handle outliers and noise in LiDAR data?

Outliers and noise significantly impact the accuracy of object detection and other tasks. We handle them using a combination of the filtering methods discussed earlier and robust estimation techniques. For instance, a median filter is more robust to outliers than a mean filter because it is less sensitive to extreme values. In addition, techniques like Random Sample Consensus (RANSAC) can be used to fit models (e.g., planes for ground segmentation) while ignoring outliers. More advanced approaches use machine learning models trained to identify and classify noise points within the point cloud. Proper preprocessing and careful choice of algorithms are key to minimizing the impact of outliers and noise on downstream tasks.
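To illustrate RANSAC for ground segmentation, here is a minimal NumPy sketch that fits a single plane. The distance threshold, iteration count, and function name are illustrative assumptions. Production code would typically refit the plane to all inliers with least squares and constrain the normal to be roughly vertical.

```python
import numpy as np

def ransac_plane(points, threshold=0.05, iterations=200, seed=0):
    """Fit a plane n·p + d = 0 with RANSAC; returns (normal, d, inlier_mask)."""
    rng = np.random.default_rng(seed)
    best_count, best_mask, best_model = -1, None, None
    for _ in range(iterations):
        # 1. Minimal sample: three distinct random points define a candidate plane.
        p1, p2, p3 = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(p2 - p1, p3 - p1)
        norm = np.linalg.norm(normal)
        if norm < 1e-9:                  # degenerate (collinear) sample, skip it
            continue
        normal /= norm
        d = -normal @ p1
        # 2. Consensus: points within `threshold` of the plane are inliers.
        mask = np.abs(points @ normal + d) < threshold
        count = int(mask.sum())
        if count > best_count:
            best_count, best_mask, best_model = count, mask, (normal, d)
    return best_model[0], best_model[1], best_mask
```

Points that are not inliers of the ground plane (here, `~inlier_mask`) are the candidates passed on to clustering or detection.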

Q 6. Discuss different approaches to object detection in LiDAR point clouds.

Several approaches exist for object detection in LiDAR point clouds:

- Voxel-based methods: These methods first voxelize the point cloud, converting it into a 3D grid. Features are then extracted from the voxels, and a classifier or detector (e.g., a convolutional neural network) is used to identify objects.

- Point-based methods: These methods work directly on the raw point cloud data, using deep learning architectures designed specifically for point sets, such as PointNet, PointNet++, or the PointNet++-based detector PointRCNN. These methods leverage the spatial relationships between points to learn features and detect objects.

- Region-based methods: These methods typically involve identifying regions of interest in the point cloud and performing object classification within those regions. This can involve clustering algorithms or segmentation techniques to partition the point cloud into meaningful regions before classification.

- Hybrid methods: Combine aspects of voxel-based and point-based approaches to leverage the strengths of both.

The best method depends on factors like computational resources, desired accuracy, and the characteristics of the data.
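Euclidean clustering (region growing on distance) is one concrete example of the region-based approach. It can partition the points that remain after ground removal into candidate objects. The sketch below is a simplified, brute-force version of the idea behind PCL's `EuclideanClusterExtraction`. The tolerance, minimum cluster size, and function name are assumptions chosen for illustration.

```python
import numpy as np
from collections import deque

def euclidean_clustering(points, tolerance=0.5, min_size=5):
    """Region growing: two points share a cluster if a chain of neighbours closer
    than `tolerance` connects them. Returns one label per point (-1 = noise)."""
    n = len(points)
    labels = np.full(n, -1)
    visited = np.zeros(n, dtype=bool)
    cluster_id = 0
    for seed in range(n):
        if visited[seed]:
            continue
        visited[seed] = True
        queue, members = deque([seed]), [seed]
        while queue:
            i = queue.popleft()
            # Brute-force radius search; a k-d tree would be used for real scans.
            dist = np.linalg.norm(points - points[i], axis=1)
            for j in np.nonzero((dist < tolerance) & ~visited)[0]:
                visited[j] = True
                queue.append(j)
                members.append(j)
        if len(members) >= min_size:          # discard tiny clusters as noise
            labels[members] = cluster_id
            cluster_id += 1
    return labels
```

Each resulting cluster would then be passed to a classifier, which is the "classification within those regions" step described above.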

Q 7. Compare and contrast voxel-based and point-based object detection methods.

Voxel-based and point-based methods differ fundamentally in how they handle LiDAR point cloud data. Voxel-based methods discretize the point cloud into a regular grid, losing some spatial resolution in the process but gaining computational efficiency through structured data representation. They are typically faster and easier to implement, but can suffer from information loss due to quantization. Convolutional neural networks (CNNs) are commonly used with voxelized data, leveraging their strengths in processing structured grid data.

Point-based methods, on the other hand, operate directly on the raw, unordered point cloud. This preserves the full spatial resolution of the measurements but presents challenges due to the unordered nature of the data. Specialized deep learning architectures like PointNet and its variants have been developed to address this challenge, learning directly from point sets. Point-based methods can achieve higher localization accuracy because they avoid quantization. However, they are usually more computationally expensive than voxel-based methods, largely because of the neighbor searches and sampling they perform on unordered points.

Choosing between the two depends on the balance between computational resources and desired accuracy. For applications requiring high accuracy and where computational resources are less of a constraint, point-based methods are preferred. If speed is paramount, voxel-based methods might be a more suitable choice.
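PointNet-style networks consume unordered points by applying a shared per-point function followed by a symmetric aggregation (max pooling). The NumPy sketch below uses random, untrained weights purely as stand-ins; this is an assumption, not a real model. It shows that shuffling the input points leaves the global feature unchanged.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical random weights standing in for a trained shared MLP (3 -> 16 -> 32).
W1, b1 = rng.normal(size=(3, 16)), rng.normal(size=16)
W2, b2 = rng.normal(size=(16, 32)), rng.normal(size=32)

def pointnet_global_feature(points):
    """Apply the same MLP to every point, then max-pool over the points.
    Max is a symmetric function, so the result does not depend on point order."""
    h = np.maximum(points @ W1 + b1, 0.0)   # shared layer 1 + ReLU, shape (N, 16)
    h = np.maximum(h @ W2 + b2, 0.0)        # shared layer 2 + ReLU, shape (N, 32)
    return h.max(axis=0)                    # global feature, shape (32,)
```

Voxel-based methods sidestep the ordering problem differently: placing points into a fixed grid imposes a structure that standard convolutions can use.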

Q 8. Explain the concept of sensor fusion and its application in autonomous driving.

Sensor fusion, in the context of autonomous driving, is the process of combining data from multiple sensors to get a more comprehensive and robust understanding of the environment than any single sensor could provide on its own. Think of it like having multiple witnesses to an event – each witness might have a slightly different perspective, but by combining their accounts, you get a much clearer picture.

In autonomous vehicles, common sensors include LiDAR (Light Detection and Ranging), cameras, and radar. LiDAR excels at providing accurate 3D distance measurements, cameras offer rich visual information, and radar can detect objects even in low-light or adverse weather conditions. By fusing these data sources, we can compensate for the individual limitations of each sensor. For instance, LiDAR might struggle to classify objects (is that a pedestrian or a lamppost?), while a camera excels at this. Combining the distance information from LiDAR with the visual classification from a camera yields a much more reliable and complete understanding of the scene.

A practical application is in object detection. LiDAR provides the 3D location of an object, while a camera can classify it as a car, bicycle, or pedestrian. This fused information significantly improves the accuracy and robustness of the autonomous vehicle’s perception system, leading to safer and more reliable navigation.
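A common first step in LiDAR-camera fusion is projecting LiDAR points into the image, so that 3D points can be associated with pixels or 2D detections. The sketch below assumes a pinhole camera with intrinsic matrix `K` and extrinsics `R`, `t` that map LiDAR coordinates into the camera frame. It also assumes the usual camera convention (z forward, x right, y down). The function name and example matrices are hypothetical, and lens distortion is ignored.

```python
import numpy as np

def project_lidar_to_image(points_lidar, R, t, K, width, height):
    """Project LiDAR points into a pinhole camera image.
    Returns pixel coordinates and a mask of points that lie in front of the
    camera and inside the image bounds."""
    pts_cam = points_lidar @ R.T + t                      # LiDAR frame -> camera frame
    in_front = pts_cam[:, 2] > 0.1                        # keep points ahead of the lens
    uvw = pts_cam @ K.T                                   # pinhole projection
    z = np.where(in_front, uvw[:, 2], 1.0)                # avoid dividing by z <= 0
    uv = uvw[:, :2] / z[:, None]                          # perspective division
    inside = (uv[:, 0] >= 0) & (uv[:, 0] < width) & (uv[:, 1] >= 0) & (uv[:, 1] < height)
    return uv, in_front & inside
```

For example, with a LiDAR frame of x forward, y left, z up, the rotation `[[0, -1, 0], [0, 0, -1], [1, 0, 0]]` maps it into the camera convention above. A point 10 m straight ahead then lands on the principal point.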

Q 9. How do you evaluate the performance of an object detection system for LiDAR data?

Evaluating the performance of a LiDAR-based object detection system requires a multifaceted approach, using standard metrics commonly employed in computer vision. We typically use precision, recall, and their harmonic mean, the F1-score, to assess the accuracy of our detections.

- Precision: measures the proportion of correctly identified objects among all detected objects. A high precision means we are making few false positives (incorrectly identifying something as an object).

- Recall: measures the proportion of correctly identified objects among all actual objects present. High recall means we are missing few objects (false negatives).

- F1-score: balances precision and recall, providing a single metric that summarizes the overall performance. It’s particularly useful when we need to consider both false positives and false negatives.

Beyond these basic metrics, we also consider the Intersection over Union (IoU) which measures the overlap between the predicted bounding box and the ground truth bounding box. A higher IoU indicates a more accurate localization of the object. We often set an IoU threshold (e.g., 0.5) to determine whether a detection is considered a true positive.

Finally, we might evaluate metrics specific to LiDAR data, such as the accuracy of distance estimation and the completeness of point cloud reconstruction. A robust evaluation requires a comprehensive test set with diverse scenarios, including challenging conditions like occlusion, varying weather, and different object types.
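To make these definitions concrete, the sketch below computes IoU for axis-aligned 3D boxes. It then computes precision, recall, and F1 using a greedy one-to-one matching at an IoU threshold. Axis-aligned boxes are a simplifying assumption; benchmarks such as KITTI use boxes rotated about the vertical axis. The function names are illustrative.

```python
import numpy as np

def iou_3d_axis_aligned(a, b):
    """IoU of two axis-aligned boxes given as (xmin, ymin, zmin, xmax, ymax, zmax)."""
    lo = np.maximum(a[:3], b[:3])
    hi = np.minimum(a[3:], b[3:])
    inter = np.prod(np.clip(hi - lo, 0.0, None))
    union = np.prod(a[3:] - a[:3]) + np.prod(b[3:] - b[:3]) - inter
    return inter / union

def precision_recall_f1(preds, gts, iou_thr=0.5):
    """Greedy one-to-one matching: each prediction (assumed sorted by confidence)
    claims the unmatched ground-truth box with the highest IoU >= iou_thr."""
    matched, tp = set(), 0
    for p in preds:
        best_j, best_iou = None, iou_thr
        for j, g in enumerate(gts):
            if j in matched:
                continue
            iou = iou_3d_axis_aligned(p, g)
            if iou >= best_iou:
                best_j, best_iou = j, iou
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    fp, fn = len(preds) - tp, len(gts) - tp   # unmatched predictions / missed objects
    precision = tp / (tp + fp) if len(preds) else 0.0
    recall = tp / (tp + fn) if len(gts) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

A duplicate detection of an already-matched object counts as a false positive under this scheme, even if its IoU is above the threshold.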

Q 10. Describe your experience with different LiDAR data formats (e.g., PCD, LAS).

I have extensive experience working with various LiDAR data formats. Two of the most common are PCD (Point Cloud Data) and LAS (the ASPRS LASer file format).

- PCD: This is the native format of the Point Cloud Library (PCL), often preferred for its simplicity and flexibility. A PCD file consists of a short text header describing the fields, followed by point data stored as ASCII, binary, or compressed binary. It stores the 3D coordinates (x, y, z) of each point, along with optional attributes like intensity, reflectivity, and timestamp. PCL provides excellent tools for reading, writing, and processing PCD files.

- LAS: This binary format is standardized by the ASPRS and primarily used in airborne LiDAR surveys. It is more structured and includes metadata about the scanning process, such as the coordinate reference system, return numbers, and classification codes. For large-scale projects, it is typically paired with LAZ (losslessly compressed LAS) and spatial index files. LAStools is a powerful suite of utilities designed to process LAS and LAZ files.

My experience includes both reading and writing these file formats, handling various point attributes, and performing preprocessing steps such as filtering and noise reduction. I’m also familiar with other formats like PLY (Polygon File Format) and raw binary (.bin) dumps such as the KITTI Velodyne scans, adapting my workflow based on the specific data requirements and available tools.
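As a small illustration of handling these formats without a dedicated library, the sketch below reads and writes KITTI-style `.bin` scans, which are flat little-endian float32 records of x, y, z, intensity. It also writes an ASCII PCD file with a minimal header. The function names are hypothetical, and only the ASCII PCD variant is covered. For LAS/LAZ, a library such as laspy or LAStools would normally be used instead.

```python
import numpy as np

def read_kitti_bin(path):
    """KITTI-style .bin scan: flat little-endian float32 records (x, y, z, intensity)."""
    return np.fromfile(path, dtype="<f4").reshape(-1, 4)

def write_kitti_bin(path, points):
    points.astype("<f4").tofile(path)

def write_ascii_pcd(path, points):
    """Write an N x 4 array (x, y, z, intensity) as an ASCII PCD v0.7 file."""
    n = len(points)
    header = "\n".join([
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z intensity",
        "SIZE 4 4 4 4",                  # bytes per field
        "TYPE F F F F",                  # all fields are floats
        "COUNT 1 1 1 1",
        f"WIDTH {n}",
        "HEIGHT 1",                      # unorganized cloud: a single row of n points
        "VIEWPOINT 0 0 0 1 0 0 0",       # identity sensor pose
        f"POINTS {n}",
        "DATA ascii",
    ])
    with open(path, "w") as f:
        f.write(header + "\n")
        np.savetxt(f, points, fmt="%.6f")
```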

Q 11. What are the limitations of using only LiDAR data for object detection?

While LiDAR provides excellent 3D point cloud data, relying solely on it for object detection has several limitations. LiDAR’s primary weakness is its inability to easily classify objects. It provides excellent geometric information (shape and location) but lacks the rich semantic understanding offered by cameras.

- Limited semantic information: LiDAR points represent geometric properties, but don’t inherently indicate what the object is. A cluster of points might represent a car, a pedestrian, or a bush – without additional information, the system struggles to differentiate.

- Occlusion challenges: Objects hidden behind other objects are difficult to detect completely from LiDAR data alone. While some parts might be visible, the full extent of the occluded object remains unknown.

- Sensitivity to weather conditions: Heavy rain, snow, or fog can significantly affect the quality of LiDAR point clouds, reducing the accuracy and reliability of object detection.

- Cost and computational requirements: High-resolution LiDAR sensors can be expensive and computationally demanding to process.

Therefore, sensor fusion with cameras or radar is crucial for overcoming these limitations and building a robust and reliable object detection system for autonomous driving.

Q 12. How do you handle occlusion in LiDAR point clouds?

Occlusion is a significant challenge in LiDAR-based object detection. When an object is partially or fully hidden behind another, a substantial portion of its point cloud is missing. Handling occlusion requires a combination of techniques:

- Contextual information: Utilizing information from other sensors (cameras, radar) can help infer the shape and location of occluded objects based on the visible parts and surrounding context.

- Point cloud completion: Techniques like deep learning-based methods can be used to predict the missing parts of an occluded object’s point cloud by learning patterns from the visible parts and other similar objects seen in the training data.

- Shape priors: Incorporating prior knowledge about object shapes can help fill in missing parts based on what we already know about typical object geometries. For instance, assuming a car has four wheels and a roughly rectangular shape.

- Data augmentation: During training, artificially creating occlusions in the data can help the model learn to handle missing information more effectively.

The choice of techniques will depend on the specific application and available computational resources. Often, a combination of methods is employed for best results.
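One simple way to create artificial occlusions during training is to drop all points within a randomly chosen azimuth sector. This mimics the region hidden behind a nearby occluder. It is a simplified, illustrative augmentation; the function name and sector-width limit are assumptions. More realistic variants remove only the points behind a simulated occluder or drop parts of individual objects.

```python
import numpy as np

def random_sector_dropout(points, max_width_deg=30.0, seed=None):
    """Simulate occlusion: remove every point whose azimuth (angle around the
    sensor's vertical axis) falls inside one randomly placed angular sector."""
    rng = np.random.default_rng(seed)
    azimuth = np.degrees(np.arctan2(points[:, 1], points[:, 0]))   # in [-180, 180]
    start = rng.uniform(-180.0, 180.0)
    width = rng.uniform(0.0, max_width_deg)
    offset = (azimuth - start) % 360.0        # angle swept past the sector start
    return points[offset > width]
```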

Q 13. Explain your understanding of different LiDAR sensor types (e.g., ToF, spinning, solid-state).

LiDAR sensors come in various types, each with its advantages and disadvantages.

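- Time-of-Flight (ToF) LiDAR: ToF describes the ranging principle rather than a mechanical design. The sensor measures the round-trip time of a light pulse and computes the distance as half the round-trip time multiplied by the speed of light. Most spinning and many solid-state LiDARs are pulsed ToF sensors. The main alternative is FMCW (frequency-modulated continuous wave) LiDAR, which derives range from a frequency shift and can also measure radial velocity directly. Simple, compact ToF modules are low-cost but can be susceptible to ambient light interference and have limitations in range and accuracy.

- Spinning LiDAR: These sensors rotate the laser and detector assembly (or a mirror or prism) to sweep one or more beams across the scene, typically producing a 360-degree horizontal view. They provide high-resolution data over a wide field of view, but the moving parts add mechanical complexity and can reduce long-term robustness.

- Solid-state LiDAR: These sensors avoid a macroscopic rotating assembly. They steer the beam with MEMS micro-mirrors or optical phased arrays, or illuminate the whole scene at once (flash LiDAR). They offer greater robustness, potentially lower power consumption, and higher reliability compared to spinning LiDAR. However, their field of view is usually narrower, and their range and resolution can sometimes be lower.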

My experience encompasses working with data from different sensor types, understanding their specific characteristics, and adapting my processing pipelines accordingly. The choice of sensor type often depends on the specific application requirements, such as range, field of view, accuracy, cost, and robustness.
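The time-of-flight relation behind most of these sensors is simple arithmetic. The pulse travels to the target and back, so the range is half the round-trip time multiplied by the speed of light. The short sketch below (function names are illustrative) also shows why ToF sensors need very precise timing.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def tof_range(round_trip_time_s):
    """Distance from a pulsed time-of-flight measurement (out and back, so divide by 2)."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0

def timing_resolution_for(range_resolution_m):
    """Round-trip timing precision needed to achieve a given range resolution."""
    return 2.0 * range_resolution_m / SPEED_OF_LIGHT
```

For example, a 1 µs round trip corresponds to roughly 150 m. Resolving range to 1 cm requires timing the return to about 67 picoseconds.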

Q 14. Discuss your experience with deep learning architectures for LiDAR-based object detection.

I have significant experience applying various deep learning architectures for LiDAR-based object detection. PointNet, PointPillars, and VoxelNet are some of the prominent architectures I’ve worked with.

- PointNet and its variants: These architectures directly process point cloud data without explicit voxelization, avoiding quantization loss. I have used PointNet++ for its ability to capture local features hierarchically, improving the accuracy of object detection, particularly in complex scenes.

- PointPillars: This architecture combines ideas from point-based and voxel-based methods. It groups points into vertical columns (pillars) on a bird's-eye-view grid and encodes each pillar with a simplified PointNet. It then scatters the resulting features into a 2D pseudo-image that a 2D convolutional neural network (CNN) processes for detection. I’ve used PointPillars because it handles large-scale point clouds efficiently, avoiding costly 3D convolutions while still learning features from the raw points within each pillar.

- VoxelNet: This architecture converts the point cloud into a 3D voxel grid and learns a feature for each non-empty voxel with Voxel Feature Encoding (VFE) layers. It then applies 3D convolutions followed by a region proposal network. This approach learns directly from the 3D spatial structure but is computationally expensive because of the dense 3D convolutions.

My work involves adapting these architectures to specific datasets and optimizing them for performance. This includes experimenting with different network configurations, loss functions, and data augmentation techniques to achieve the best object detection results. I’m also familiar with more recent architectures like CenterPoint and have experience comparing their performance and suitability for different scenarios.
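To illustrate the pillar idea, the NumPy sketch below bins points into an x-y grid of pillars. It then scatters a per-pillar feature into a bird's-eye-view pseudo-image. This is a deliberate simplification: PointPillars learns each pillar's feature with a small PointNet, whereas here the features are just the maximum height and the point count. The grid extent, pillar size, and function name are assumed values.

```python
import numpy as np

def pillar_pseudo_image(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0), pillar_size=0.5):
    """Bin points into vertical pillars on an x-y grid and scatter two hand-made
    per-pillar features (max z, point count) into a (2, ny, nx) BEV image."""
    nx = int(round((x_range[1] - x_range[0]) / pillar_size))
    ny = int(round((y_range[1] - y_range[0]) / pillar_size))
    ix = np.floor((points[:, 0] - x_range[0]) / pillar_size).astype(int)
    iy = np.floor((points[:, 1] - y_range[0]) / pillar_size).astype(int)
    keep = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)   # drop points outside the grid
    ix, iy, z = ix[keep], iy[keep], points[keep, 2]
    image = np.zeros((2, ny, nx))
    image[0].fill(-np.inf)
    np.maximum.at(image[0], (iy, ix), z)       # channel 0: max height per pillar
    np.add.at(image[1], (iy, ix), 1.0)         # channel 1: point count per pillar
    image[0][np.isinf(image[0])] = 0.0         # empty pillars -> 0
    return image
```

The resulting array has the shape of a multi-channel image, which is what allows a standard 2D CNN backbone to process it.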

Q 15. How do you address the problem of varying point density in LiDAR data?

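Varying point density in LiDAR data is a common challenge. It arises mainly from the sensor's fixed angular sampling. Neighboring beams and successive firings are separated by a fixed angle, so the spacing between adjacent points grows roughly linearly with range. Areas closer to the sensor therefore have much higher point density than those farther away, and surface reflectivity and incidence angle further affect which returns are detected. This uneven distribution can negatively impact object detection and recognition algorithms.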

Addressing this involves several strategies:

- Voxel Grid Filtering: This is a common preprocessing step. We divide the 3D point cloud into a grid of equally sized voxels (3D pixels). All points within a voxel are then aggregated, often by taking the mean or median coordinates. This reduces the number of points and makes the point cloud more uniform. Think of it like compressing an image; you lose some detail but gain efficiency.

- Interpolation Techniques: For applications where preserving detail is crucial, interpolation methods like nearest-neighbor, linear, or radial basis function interpolation can be used to fill in gaps in the point cloud. These techniques estimate additional points in sparsely populated regions from their measured neighbors, creating a more consistent representation. Keep in mind that interpolated points are estimates rather than measurements.

- Adaptive Sampling: Instead of uniform voxel grids, adaptive sampling adjusts the voxel size based on local point density. Denser areas are sampled with smaller voxels, preserving detail, while sparser areas are represented with larger voxels, reducing computation.

- Point Cloud Completion: More sophisticated methods employ deep learning techniques to predict missing points based on the existing point cloud structure. These methods often leverage learned representations of shapes and objects to infer missing information.

The choice of method depends on the specific application and the trade-off between computational efficiency and the need to maintain detail. For real-time applications, voxel grid filtering is often preferred for its speed. However, for high-accuracy applications requiring preservation of fine details, interpolation or more advanced completion methods are often necessary.
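The voxel grid step and the range-dependent spacing that causes the density problem can both be sketched briefly in NumPy. The function names, the centroid aggregation, and the example angular resolution are assumptions for illustration. Libraries such as PCL (`VoxelGrid`) and Open3D (`voxel_down_sample`) provide tuned implementations.

```python
import numpy as np

def voxel_grid_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel by their centroid."""
    keys = np.floor(points / voxel_size).astype(np.int64)     # integer voxel index per point
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)                             # same shape across NumPy versions
    sums = np.zeros((len(counts), points.shape[1]))
    np.add.at(sums, inverse, points)                          # accumulate coordinates per voxel
    return sums / counts[:, None]

def beam_spacing(range_m, angular_resolution_deg):
    """Approximate gap between neighbouring returns at a given range."""
    return range_m * np.radians(angular_resolution_deg)
```

With a 0.2° angular step, neighboring returns are about 1.7 cm apart at 5 m but roughly 17 cm apart at 50 m. This is why distant objects are covered by far fewer points.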

Q 16. Explain your experience with calibration techniques for LiDAR sensors.

LiDAR sensor calibration is crucial for accurate 3D point cloud generation. Inaccurate calibration leads to systematic errors that propagate through the entire object detection pipeline, resulting in poor localization and classification.

My experience includes calibrating both extrinsic and intrinsic parameters. Extrinsic calibration refers to determining the transformation (rotation and translation) between the LiDAR sensor and other sensors, such as cameras or IMUs (Inertial Measurement Units). This involves using known targets or features that are simultaneously observed by multiple sensors.

Intrinsic calibration focuses on parameters related to the LiDAR sensor itself. For a multi-beam spinning sensor, these typically include per-beam elevation and azimuth angle corrections, range (distance) offsets, and the intensity response of each laser. These parameters are usually provided in a factory calibration file and can be refined through careful measurements and rigorous mathematical modeling. For example, we might scan a calibration target with precisely known dimensions and positions, or large planar surfaces, and adjust the parameters until the measured geometry matches.

I’ve used a variety of calibration methods, including:

- Target-based methods: Using checkerboard patterns or other structured targets, we can accurately determine the transformation between sensors using computer vision techniques.

- Self-calibration methods: These algorithms leverage the LiDAR data itself to estimate calibration parameters. They are especially useful when target-based methods are not feasible.

- Bundle Adjustment: A powerful optimization technique used to refine calibration parameters by minimizing reprojection errors across multiple sensor measurements.

Robust calibration is an iterative process. We often evaluate the accuracy through visual inspection of aligned point clouds and quantitative metrics, like point-to-plane distances between overlapping scans.
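One simple way to compute the point-to-plane check is to fit a plane to a planar patch (a wall or flat ground) in one scan. You then measure how far the overlapping points from a second scan or sensor lie from that plane after applying the calibration. The sketch below is illustrative: the function names are hypothetical, and it assumes the patch is genuinely planar.

```python
import numpy as np

def fit_plane(points):
    """Least-squares plane n·p + d = 0: the unit normal is the right singular
    vector of the centred points with the smallest singular value."""
    centroid = points.mean(axis=0)
    _, _, vt = np.linalg.svd(points - centroid)
    normal = vt[-1]
    return normal, -normal @ centroid

def point_to_plane_rms(reference_patch, other_points):
    """RMS distance of `other_points` to the plane fitted to `reference_patch`."""
    normal, d = fit_plane(reference_patch)
    residuals = other_points @ normal + d
    return float(np.sqrt(np.mean(residuals ** 2)))
```

A miscalibration that shifts one sensor by 3 cm along the plane normal shows up directly as an RMS of about 3 cm. A well-calibrated pair should approach the sensor's noise level.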

Q 17. Describe the process of generating a 3D point cloud from raw LiDAR data.

Generating a 3D point cloud from raw LiDAR data involves several steps:

- Data Acquisition: The LiDAR sensor emits laser pulses and measures the time-of-flight for each pulse to return. This provides the distance to the object.

- Point Cloud Construction: Using the distance measurements, along with the known angles of the emitted laser beams, 3D coordinates (x, y, z) are calculated for each returned point in the sensor's own coordinate frame. This creates the 3D point cloud.

- Data Preprocessing: Raw data often contains noise and outliers. Preprocessing involves filtering techniques to remove these, such as discarding points outside a valid range interval or applying outlier rejection algorithms.

- Coordinate Transformation: The constructed points are expressed in sensor coordinates. They must be transformed into a vehicle or global coordinate system using the calibrated extrinsic parameters and, for mobile LiDAR, the platform pose from GPS and IMU data.

- Data Organization: The generated point cloud can be stored in various formats, like .LAS, .PLY, or .PCD. The choice of format depends on the application and intended software for processing.

For example, a typical LiDAR sensor might output a sequence of scan lines, with each point on a line having its associated range and angles. We would then convert these spherical coordinates (range, azimuth, elevation) into Cartesian coordinates (x, y, z) using trigonometric functions:

    // Example conversion (simplified; angles in radians):
    x = range * cos(elevation) * cos(azimuth);
    y = range * cos(elevation) * sin(azimuth);
    z = range * sin(elevation);

The resulting 3D point cloud is a fundamental representation used for subsequent processing and object detection tasks.
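The same conversion can be vectorized over a whole scan with NumPy. This sketch follows the convention of the formulas above: azimuth is measured from the x-axis toward the y-axis, elevation from the horizontal plane, and angles are in radians. Real sensors document their own angle conventions and per-beam corrections, which would be applied before this step. The function name is illustrative.

```python
import numpy as np

def spherical_to_cartesian(rng_m, azimuth_rad, elevation_rad):
    """Convert per-return (range, azimuth, elevation) arrays to an N x 3 (x, y, z) array."""
    cos_el = np.cos(elevation_rad)
    x = rng_m * cos_el * np.cos(azimuth_rad)
    y = rng_m * cos_el * np.sin(azimuth_rad)
    z = rng_m * np.sin(elevation_rad)
    return np.stack([x, y, z], axis=-1)
```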

Q 18. What are some common metrics used to evaluate the accuracy of object detection?

Evaluating the accuracy of object detection in LiDAR data requires comprehensive metrics that capture both localization and classification performance.

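- Intersection over Union (IoU): This metric measures the overlap between the predicted bounding box and the ground truth bounding box. It is computed as the volume (or area) of their intersection divided by the volume of their union. It ranges from 0 (no overlap) to 1 (perfect overlap) and is sometimes reported as a percentage. A higher IoU indicates better localization accuracy.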

- Precision and Recall: Precision measures the percentage of correctly detected objects among all detected objects, while recall measures the percentage of correctly detected objects among all ground truth objects. These are crucial for understanding the balance between false positives and false negatives.

- F1-Score: The harmonic mean of precision and recall, providing a single metric that balances both. A higher F1-score indicates better overall performance.

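- Average Precision (AP): Summarizes the precision-recall curve obtained by sweeping the detection confidence threshold at a given IoU threshold. It is approximately the area under that curve. Benchmarks such as KITTI report AP separately for difficulty levels defined by object size, occlusion, and truncation.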

- Mean Average Precision (mAP): The average of AP across multiple object classes, providing a single metric for comparing the overall performance across different classes.
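AP can be computed from a ranked list of detections once each detection has been marked as a true or false positive at the chosen IoU threshold. The sketch below uses the all-point interpolation popularized by PASCAL VOC. Benchmarks differ in how they sample recall (KITTI, for example, averages precision over a fixed set of recall positions), so treat this as an illustration rather than any benchmark's official code. The function name is hypothetical.

```python
import numpy as np

def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class.
    scores: confidence per detection; is_tp: whether each detection was matched to
    a ground-truth box at the IoU threshold; num_gt: number of ground-truth objects."""
    order = np.argsort(-np.asarray(scores, dtype=float))
    tp = np.asarray(is_tp, dtype=float)[order]
    cum_tp, cum_fp = np.cumsum(tp), np.cumsum(1.0 - tp)
    recall = cum_tp / num_gt
    precision = cum_tp / (cum_tp + cum_fp)
    # Add sentinels, then make precision non-increasing from right to left (the envelope).
    r = np.concatenate([[0.0], recall, [1.0]])
    p = np.concatenate([[0.0], precision, [0.0]])
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum the rectangles at each point where recall increases.
    steps = np.nonzero(r[1:] != r[:-1])[0]
    return float(np.sum((r[steps + 1] - r[steps]) * p[steps + 1]))
```

mAP is then the mean of this value over the object classes.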

We often use visualization tools to visually inspect the results. False positives and false negatives can then be identified and analyzed. This helps us identify weaknesses in the model and suggest improvements.

Q 19. How do you handle moving objects in LiDAR point clouds?

Handling moving objects in LiDAR point clouds is challenging because their position changes between scans. Several approaches address this:

- Temporal Filtering: Moving objects produce points that are inconsistent across consecutive scans. Temporal filtering techniques, like Kalman filters or particle filters, track detected objects (or point clusters) over time using a motion model. They smooth the estimated object states and reject measurements that are inconsistent with the predicted motion.

- Motion Segmentation: We can identify moving objects by segmenting the point cloud into regions of consistent motion. Scene flow estimation, the 3D counterpart of optical flow, can be used to compute per-point motion vectors and identify areas with significant movement.

- Multi-sensor Fusion: Integrating LiDAR with other sensors, like cameras or IMUs, allows us to combine information and improve the tracking of moving objects. For example, a camera can provide visual cues about object identity and motion, which can help to disambiguate moving objects in the LiDAR data.

- Deep Learning Approaches: Deep learning models, specifically those trained on sequences of LiDAR data, are increasingly capable of effectively detecting and tracking moving objects directly from point cloud sequences. These methods can automatically learn complex motion patterns.

In practical applications, I’ve employed a combination of these techniques. For example, we might use a Kalman filter to refine the position of a detected vehicle while using a temporal filter to eliminate points that represent noise or artifacts from the environment.
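A constant-velocity Kalman filter of the kind mentioned above can be sketched in NumPy as follows. It tracks a single object's center in 2D from noisy detections. The noise values, the simplified diagonal process-noise matrix, and the class name are assumptions for illustration. The `mahalanobis` method shows how measurements that are inconsistent with the predicted motion can be gated out.

```python
import numpy as np

class ConstantVelocityKF:
    """2D constant-velocity Kalman filter. State: [x, y, vx, vy]; measurement: [x, y]."""

    def __init__(self, x0, y0, dt=0.1, process_var=1.0, meas_var=0.05):
        self.x = np.array([x0, y0, 0.0, 0.0])
        self.P = np.diag([1.0, 1.0, 10.0, 10.0])            # large initial velocity uncertainty
        self.F = np.array([[1, 0, dt, 0], [0, 1, 0, dt],
                           [0, 0, 1, 0], [0, 0, 0, 1]], dtype=float)
        self.H = np.array([[1, 0, 0, 0], [0, 1, 0, 0]], dtype=float)
        # Simplified diagonal process noise (cross terms ignored for brevity).
        self.Q = process_var * np.diag([dt**4 / 4, dt**4 / 4, dt**2, dt**2])
        self.R = meas_var * np.eye(2)

    def predict(self):
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2]

    def mahalanobis(self, z):
        """Squared Mahalanobis distance of a measurement from the prediction (for gating)."""
        y = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        return float(y @ np.linalg.solve(S, y))

    def update(self, z):
        y = np.asarray(z, dtype=float) - self.H @ self.x    # innovation
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)            # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(4) - K @ self.H) @ self.P
```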

Q 20. Explain the concept of SLAM (Simultaneous Localization and Mapping) in the context of LiDAR.

SLAM (Simultaneous Localization and Mapping) is a crucial technique in robotics and autonomous systems that uses sensor data to simultaneously build a map of the environment and determine the robot’s location within that map. In the context of LiDAR, it leverages the 3D point cloud data to achieve both tasks.

The process typically involves these key steps:

- Point Cloud Registration: Consecutive LiDAR scans are aligned (registered) to estimate the robot’s movement between scans. This involves finding transformations (rotation and translation) that best match overlapping points in consecutive scans.

- Loop Closure: When the robot revisits a previously explored area, SLAM algorithms detect these loops and adjust the map and robot pose to maintain consistency. This is important for correcting accumulated drift in the pose estimation.

- Map Building: The registered point clouds are integrated to build a consistent and coherent 3D map of the environment. This often involves strategies like occupancy grid mapping or point cloud fusion.

- Pose Estimation: The robot’s pose (position and orientation) is estimated at each time step based on the registered point clouds. This provides the robot’s current location within the constructed map.
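Pose estimation from consecutive registrations amounts to composing rigid transforms. The 2D (SE(2)) NumPy sketch below, with hypothetical function names, chains scan-to-scan motions into global poses and maps a scan into the world frame. It also hints at why loop closure matters: small errors in each relative motion accumulate along the chain.

```python
import numpy as np

def se2(x, y, theta):
    """Homogeneous 3x3 matrix for a 2D rigid motion (translation x, y; rotation theta)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, x], [s, c, y], [0.0, 0.0, 1.0]])

def chain_odometry(relative_motions):
    """Compose scan-to-scan registration results into global poses.
    Each relative motion is the pose of scan k+1 expressed in the frame of scan k."""
    poses = [np.eye(3)]
    for T_rel in relative_motions:
        poses.append(poses[-1] @ T_rel)     # T_world_(k+1) = T_world_k @ T_k_(k+1)
    return poses

def to_world(pose, scan_points):
    """Transform an N x 2 scan from its sensor frame into the map (world) frame."""
    homog = np.column_stack([scan_points, np.ones(len(scan_points))])
    return (homog @ pose.T)[:, :2]
```

Driving an ideal square (four moves of 1 m, each followed by a 90° turn) returns exactly to the start. A 1° heading error per step, however, leaves a gap of a few centimeters. Loop closure detects the revisit and redistributes such accumulated error.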

Different SLAM approaches exist, including:

- Feature-based SLAM: Extracts distinctive features from the point clouds for registration. It’s efficient but can be sensitive to environmental changes.

- Direct SLAM: Uses the raw point cloud data directly for registration, which can be more robust in feature-poor environments but is more computationally intensive.

In practical scenarios, LiDAR-based SLAM is fundamental for autonomous vehicles, robotics, and 3D modeling applications, ensuring accurate navigation and map generation in various environments.

Q 21. Describe your experience with different software libraries for LiDAR point cloud processing (e.g., PCL, Open3D).

I have extensive experience with various software libraries for LiDAR point cloud processing, primarily focusing on Point Cloud Library (PCL) and Open3D.

PCL (Point Cloud Library) is a mature and widely-used library offering a comprehensive set of tools for point cloud processing. I have leveraged PCL for:

- Filtering: Removing noise, outliers, and downsampling point clouds using techniques like voxel grid filtering, statistical outlier removal, and radius outlier removal.

- Feature Extraction: Computing features like normal vectors, curvature, and keypoints to aid in object detection and recognition.

- Registration: Aligning point clouds using ICP (Iterative Closest Point) and other registration algorithms.

- Segmentation: Grouping points into meaningful segments based on characteristics like distance, normal, and color.

Open3D is a more recent library known for its ease of use and excellent visualization capabilities. Its strengths lie in:

- Visualizations: Creating interactive 3D visualizations of point clouds, meshes, and other geometric data. This is essential for debugging and analysis.

- Mesh Processing: Open3D is particularly well-suited for tasks that involve mesh processing, such as surface reconstruction from point clouds.

- Deep Learning Integration: Through its Open3D-ML extension, which supports PyTorch and TensorFlow, Open3D can be used for modern object detection and segmentation tasks on point clouds.

My choice of library often depends on the specific task and project requirements. PCL is powerful for a wide range of tasks, while Open3D’s ease of use and visualization capabilities are advantageous for prototyping and visualization-heavy tasks. I often utilize both libraries in a single project, combining their strengths to address specific challenges.

Q 22. Explain the tradeoffs between different object detection algorithms in terms of accuracy, speed, and computational cost.

Choosing the right object detection algorithm for LiDAR data involves a critical trade-off between accuracy, speed, and computational cost. There’s no one-size-fits-all solution; the best choice depends heavily on the application.

PointPillars, PointRCNN, and SECOND: These methods represent a spectrum of approaches. PointPillars, a pillar-based approach, prioritizes speed and is suitable for real-time applications where slight accuracy reductions are acceptable. PointRCNN and SECOND, on the other hand, are more accurate but computationally more expensive, making them better suited for offline processing or applications where accuracy is paramount, such as autonomous driving in complex scenarios.

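VoxelNet: This voxel-based approach pioneered end-to-end learning of features from voxelized point clouds. Its dense 3D convolutions make it comparatively slow, however, and later methods such as SECOND and PointPillars were designed largely to reduce that cost while keeping or improving accuracy.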

Deep learning-based methods vs. traditional methods: Deep learning algorithms like the ones mentioned above generally provide superior accuracy compared to traditional pipelines, such as RANSAC (Random Sample Consensus) ground-plane fitting followed by clustering, or Hough transforms. However, traditional methods are often faster and cheaper to run, which makes them a viable option for resource-constrained environments.

For example, in a self-driving car system that needs real-time object detection, PointPillars might be preferred for its speed, even with slightly lower accuracy than PointRCNN. In contrast, a post-processing analysis of LiDAR scans for detailed scene understanding might use a more accurate but slower algorithm like PointRCNN.
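To make the speed argument for pillar-based detectors concrete, here is a minimal sketch of the pillarization step, assuming the scan is a plain list of (x, y, z) tuples. Only the x-y plane is discretized, so each pillar is an unbounded vertical column; in PointPillars the points in each pillar are then encoded by a small PointNet and scattered into a 2D pseudo-image for a 2D CNN. The function name `group_into_pillars` and its defaults (0.16 m cells, at most 100 points per pillar) are illustrative assumptions, not a reference implementation.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
PillarKey = Tuple[int, int]


def group_into_pillars(
    points: List[Point],
    pillar_size: float = 0.16,
    max_points_per_pillar: int = 100,
) -> Dict[PillarKey, List[Point]]:
    """Bucket points into vertical pillars on a regular x-y grid.

    Hypothetical helper: only x and y are discretized and z stays a
    per-point feature, so no 3D grid (and no 3D convolution) is needed.
    """
    pillars: Dict[PillarKey, List[Point]] = defaultdict(list)
    for x, y, z in points:
        # Floor division gives the bird's-eye-view cell index (also for negatives).
        key = (int(x // pillar_size), int(y // pillar_size))
        # Cap points per pillar; this sketch keeps the first ones it sees,
        # whereas real implementations typically sample randomly.
        if len(pillars[key]) < max_points_per_pillar:
            pillars[key].append((x, y, z))
    return dict(pillars)
```

Because everything after this step runs on a 2D grid, the rest of the network behaves like a 2D detector, which is where most of the speed advantage comes from.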

Q 23. How do you handle different weather conditions and their effect on LiDAR data?

Weather significantly impacts LiDAR data quality. Rain, snow, and fog scatter and attenuate the laser signal, reducing range and introducing noise and spurious returns. Strong sunlight adds ambient background light that raises the receiver's noise floor and can also produce false points.

To handle this, several strategies are employed:

Data Preprocessing: This involves filtering the point cloud to remove noise points and outliers caused by weather effects. Techniques like median filtering, outlier rejection using statistical methods, and applying intensity thresholds are common.

Calibration and Compensation: Regular calibration of the LiDAR sensor is crucial to ensure accurate measurements. Atmospheric attenuation models can be integrated into the processing pipeline to compensate for signal loss due to weather conditions. This involves estimating the attenuation coefficient from weather data or sensor readings and correcting the measured intensities accordingly; returns that never reached the sensor cannot be recovered this way.

Robust Algorithms: Choosing object detection algorithms that are robust to noise and outliers is critical. Methods that employ clustering or filtering techniques before object detection are advantageous in challenging weather.

Data Augmentation: Synthetically generating data that simulates various weather conditions and their effects on LiDAR scans can enhance the robustness of the model. This could include adding simulated noise to clean data.

For instance, if I were working with a dataset collected on a snowy day, I would first focus on noise reduction using robust filtering techniques and then choose an object detection model known for its resilience to noise and missing data.
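A statistical outlier filter is one of the simplest ways to strip the isolated returns that rain and snow tend to produce. The sketch below follows the usual recipe: compute each point's mean distance to its k nearest neighbours, then drop points above the cloud-wide mean plus `std_ratio` standard deviations. It assumes points are plain (x, y, z) tuples; the function name and defaults are hypothetical, and the brute-force neighbour search is only for readability. Libraries expose the same idea, for example Open3D's `remove_statistical_outlier(nb_neighbors, std_ratio)` and PCL's `StatisticalOutlierRemoval`.

```python
import math
import statistics
from typing import List, Tuple

Point = Tuple[float, float, float]


def statistical_outlier_removal(
    points: List[Point], k: int = 8, std_ratio: float = 2.0
) -> List[Point]:
    """Keep points whose mean k-nearest-neighbour distance is at most
    mean + std_ratio * std over the whole cloud (hypothetical helper)."""
    if len(points) <= k:
        return list(points)  # too few points to estimate neighbourhood statistics
    mean_knn = []
    for i, p in enumerate(points):
        # Brute-force neighbour search: O(n^2), acceptable for a sketch only.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_knn.append(sum(dists[:k]) / k)
    mu = statistics.fmean(mean_knn)
    sigma = statistics.pstdev(mean_knn)
    threshold = mu + std_ratio * sigma
    return [p for p, d in zip(points, mean_knn) if d <= threshold]
```

Because LiDAR point density falls off with range, a single global threshold can also remove legitimate distant points; range-dependent variants are a common refinement for snow.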

Q 24. Discuss your experience with deploying object detection models on embedded systems.

I have extensive experience deploying object detection models on embedded systems, focusing primarily on optimizing for low power consumption and real-time performance. This often involves challenges like limited computational resources and memory constraints. My approach typically involves:

Model Compression: Techniques like pruning, quantization, and knowledge distillation are crucial for reducing the model's size and computational requirements. For example, storing weights as 8-bit integers instead of 32-bit floating-point values cuts their memory footprint by roughly a factor of four.

Hardware Acceleration: Leveraging hardware accelerators such as GPUs or specialized AI processors, if available, dramatically improves processing speed and reduces energy consumption. I’ve worked with platforms like NVIDIA Jetson and specialized automotive-grade SoCs.

Efficient Architectures: Selecting lightweight architectures designed for mobile and embedded hardware, like MobileNet or ShuffleNet, is critical; in LiDAR pipelines such networks can serve as the 2D backbone on bird's-eye-view pseudo-images like those produced by PointPillars. These models trade off a small amount of accuracy for significantly improved efficiency.

Software Optimization: Compiling the model with an inference engine tuned for the target hardware (for example, TensorRT on NVIDIA Jetson), fusing operations, and minimizing data transfers between CPU and accelerator memory all improve performance.

In one project, I successfully deployed a PointPillars-based object detection model on a low-power ARM-based system for a robotic navigation application, achieving real-time performance with acceptable accuracy through model quantization and code optimization.
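The 4x memory saving from 8-bit quantization comes from mapping each float weight to a signed byte plus a shared scale factor. Below is a minimal sketch of symmetric per-tensor quantization, assuming weights arrive as a flat Python list. Production toolchains (TensorRT, for instance) add calibration data and often per-channel scales, so the function names and the scheme here are illustrative only.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ~ q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One shared scale maps the largest magnitude onto 127.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # round() is round-half-to-even; the clamp guards against leaving the range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]
```

Stored as signed bytes, `q` needs one byte per weight versus four for float32; the reconstruction error per weight is at most half the scale, so accuracy loss depends on how well one scale fits the weight distribution.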

Q 25. How would you improve the performance of an existing LiDAR object detection system?

Improving an existing LiDAR object detection system requires a systematic approach. My strategy typically includes:

Data Analysis: Thoroughly analyzing the current system’s performance, including identifying areas where it struggles (e.g., specific object classes, challenging weather conditions, or difficult viewing angles). This often involves visualizing the detection results and examining false positives and false negatives.

Data Augmentation: Expanding the training dataset with synthetic data or by augmenting existing data to increase the model’s robustness to various conditions and improve its generalization ability.

Algorithm Refinement: Evaluating newer object detection architectures, or improving the current one by tuning hyperparameters, refining the architecture, or trying alternative loss functions (for example, focal loss to counter the imbalance between foreground and background).

Feature Engineering: Exploring new features that might improve the model’s performance. This could involve incorporating features extracted from the point cloud’s intensity, reflectivity, or other sensor data.

Post-processing Techniques: Implementing post-processing steps such as Non-Maximum Suppression (NMS) to filter out redundant detections and improve precision. Tracking algorithms could also enhance the stability and accuracy of object detection over time.

For example, if the system struggles with detecting small objects, I might incorporate data augmentation to generate more examples of small objects in various contexts or explore a different model architecture better suited for detecting fine details.
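Greedy Non-Maximum Suppression keeps the highest-scoring box and discards any lower-scoring box that overlaps an already kept box by more than an IoU threshold. The sketch assumes axis-aligned bird's-eye-view boxes given as (x_min, y_min, x_max, y_max); real LiDAR detectors usually suppress rotated boxes, which needs a polygon-intersection IoU instead. The function names and the 0.5 threshold are illustrative.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), BEV metres


def iou_2d(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # A box survives only if it does not overlap any box already kept.
        if all(iou_2d(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```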

Q 26. Explain your understanding of different segmentation techniques for LiDAR point clouds.

Segmentation techniques for LiDAR point clouds aim to partition the point cloud into meaningful regions corresponding to different objects or surfaces. Several techniques exist:

Clustering-based Segmentation: Methods like k-means clustering or DBSCAN (Density-Based Spatial Clustering of Applications with Noise) group points based on their spatial proximity and features. This approach is relatively simple but can be sensitive to noise and parameter choices.

Region Growing: This approach starts with a seed point and iteratively adds neighboring points that meet certain criteria (e.g., similar intensity or distance). It's effective for segmenting homogeneous regions but might struggle with complex shapes or noise.

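Supervoxel Segmentation: This technique groups neighboring points into supervoxels: small, spatially compact clusters of similar points that are larger than individual points but smaller than objects. Working with supervoxels simplifies subsequent processing and often reduces computational complexity. A common example is VCCS (Voxel Cloud Connectivity Segmentation), implemented in PCL, which adapts ideas from the SLIC (Simple Linear Iterative Clustering) superpixel algorithm to 3D point clouds.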

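Deep Learning-based Segmentation: Neural networks have shown remarkable success in semantic segmentation of LiDAR point clouds, including point-based networks such as PointNet++, convolutional networks applied to range-image projections, and sparse 3D convolutional networks on voxels. These methods can learn complex patterns and relationships within the data to produce accurate segmentation masks.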

The choice of segmentation technique depends on factors such as the desired level of detail, computational resources, and the specific characteristics of the point cloud. For example, a fast, coarse segmentation might use k-means for a robotics application, whereas a high-precision segmentation might employ a deep learning-based approach for autonomous driving.
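DBSCAN is a common choice for clustering obstacle points after ground removal because, unlike k-means, it does not need the number of clusters in advance and it labels sparse points as noise. The sketch below is a plain O(n^2) implementation over (x, y, z) tuples, written for clarity rather than speed; `eps` and `min_pts` are the standard DBSCAN parameters, while the function name is hypothetical. Open3D provides an equivalent `PointCloud.cluster_dbscan(eps, min_points)`.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


def dbscan(points: List[Point], eps: float, min_pts: int) -> List[int]:
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    n = len(points)
    labels: List[Optional[int]] = [None] * n  # None means "not visited yet"

    def region_query(i: int) -> List[int]:
        # Brute-force eps-neighbourhood (includes the point itself).
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster_id = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        neighbours = region_query(i)
        if len(neighbours) < min_pts:
            labels[i] = -1  # provisional noise; may become a border point later
            continue
        cluster_id += 1
        labels[i] = cluster_id
        frontier = [j for j in neighbours if j != i]
        while frontier:
            j = frontier.pop()
            if labels[j] == -1:
                labels[j] = cluster_id  # noise reachable from a core point is a border point
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbours = region_query(j)
            if len(j_neighbours) >= min_pts:
                frontier.extend(j_neighbours)  # j is a core point: keep expanding
    return [label if label is not None else -1 for label in labels]
```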

Q 27. How do you deal with shadows and reflections in LiDAR data?

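Shadows and reflections in LiDAR data introduce significant challenges. Because LiDAR is an active sensor, a shadow here is the occluded region behind an object that the laser never reaches, so it shows up as missing data; reflections from specular surfaces such as glass, mirrors, or wet roads can create false (ghost) or distorted measurements. Several techniques help mitigate these issues: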

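Intensity Information: The intensity value of each point helps flag suspicious returns. Retroreflective surfaces such as road signs can produce saturated, unusually high intensities, while ghost points caused by multipath reflections off glass or wet surfaces are often weak. Occlusion shadows, by contrast, contain no returns at all, so they appear as gaps in the scan rather than as low-intensity points.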

Data Filtering: Removing outlier points with unusually high or low intensities can reduce the influence of reflections, and filters that smooth the point cloud while preserving relevant features can suppress residual noise. Filtering cannot fill occlusion shadows, however; recovering those regions requires additional viewpoints or sensors.

Contextual Information: Incorporating other sensor data, such as images from cameras, can provide contextual information that helps to compensate for missing data in shadowed areas. This fusion of data from multiple sensors provides a more complete understanding of the scene.

Model Robustness: Training object detection models on datasets that include partially occluded objects and reflective surfaces improves their robustness to these effects.

For instance, I might use intensity thresholds to identify and flag potentially erroneous points caused by reflections. Then, I could employ a robust object detection algorithm that can handle missing data and outliers effectively.
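One way to implement the intensity check described above without hard-coding a sensor-specific cutoff is a robust, scan-relative score. The sketch below flags returns whose modified z-score (based on the median and the median absolute deviation, MAD) exceeds 3.5, a conventional cutoff for that statistic. It assumes raw intensities in a flat list and does not correct for range or incidence angle, which real pipelines usually do first; the function name is hypothetical.

```python
import statistics
from typing import List


def flag_intensity_outliers(intensities: List[float], z_thresh: float = 3.5) -> List[bool]:
    """Return True for returns whose modified z-score exceeds z_thresh."""
    med = statistics.median(intensities)
    mad = statistics.median(abs(v - med) for v in intensities)
    if mad == 0:
        # Degenerate case: most values identical, so flag anything that differs.
        return [v != med for v in intensities]
    # 0.6745 rescales MAD so the score is comparable to a standard z-score.
    return [abs(0.6745 * (v - med) / mad) > z_thresh for v in intensities]
```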

Q 28. Describe your experience with data augmentation techniques for LiDAR object detection.

Data augmentation improves the robustness and generalization of LiDAR object detection models, and because large, diverse, well-labeled datasets are expensive to collect, it is often essential. Techniques include:

Noise Injection: Adding Gaussian noise to the point cloud coordinates simulates sensor noise and improves model robustness.

Point Dropout: Randomly removing a percentage of points from the point cloud simulates missing data due to occlusions or sensor limitations.

Rotation and Translation: Rotating the point cloud (typically about the vertical axis) and translating it, while applying the same transform to the ground-truth boxes, creates variations in viewpoint and object orientation.

Scaling: Applying a small global scale factor to the points and their boxes simulates variations in object size.

Synthetic Data Generation: Generating synthetic LiDAR scans using 3D modeling software and physics engines allows creation of diverse and challenging scenarios that are difficult or expensive to collect in real-world data.

For example, in a project involving autonomous driving, I augmented the training dataset by randomly rotating and translating point clouds to simulate different viewpoints and object poses, resulting in a significant improvement in detection performance, especially in challenging scenarios.
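The sketch below combines the augmentations listed above into one function and applies the same global rotation, scaling, and translation to the ground-truth boxes so the labels stay aligned with the points. It assumes points as (x, y, z) tuples and boxes as hypothetical (cx, cy, cz, length, width, height, yaw) tuples; the function name and all parameter ranges are illustrative assumptions rather than recommended values.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float, float]
Box = Tuple[float, float, float, float, float, float, float]  # cx, cy, cz, l, w, h, yaw


def augment_scene(
    points: List[Point],
    boxes: List[Box],
    rng: random.Random,
    max_yaw: float = math.pi / 4,
    max_shift: float = 0.5,
    scale_range: Tuple[float, float] = (0.95, 1.05),
    drop_prob: float = 0.1,
    noise_std: float = 0.01,
) -> Tuple[List[Point], List[Box]]:
    """Apply one random global yaw rotation, scale and x-y shift to points and
    boxes alike, then point dropout and Gaussian jitter to the points only."""
    yaw = rng.uniform(-max_yaw, max_yaw)
    scale = rng.uniform(*scale_range)
    dx = rng.uniform(-max_shift, max_shift)
    dy = rng.uniform(-max_shift, max_shift)
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)

    def transform(x: float, y: float, z: float) -> Point:
        # Rotate about the vertical (z) axis, scale uniformly, then shift in x-y.
        xr = cos_y * x - sin_y * y
        yr = sin_y * x + cos_y * y
        return scale * xr + dx, scale * yr + dy, scale * z

    new_points: List[Point] = []
    for x, y, z in points:
        if rng.random() < drop_prob:
            continue  # point dropout: simulate missing returns
        tx, ty, tz = transform(x, y, z)
        new_points.append((tx + rng.gauss(0.0, noise_std),
                           ty + rng.gauss(0.0, noise_std),
                           tz + rng.gauss(0.0, noise_std)))

    new_boxes: List[Box] = []
    for cx, cy, cz, length, width, height, box_yaw in boxes:
        tcx, tcy, tcz = transform(cx, cy, cz)
        # Box sizes scale with the scene; the heading turns by the same yaw.
        new_boxes.append((tcx, tcy, tcz, scale * length, scale * width,
                          scale * height, box_yaw + yaw))
    return new_points, new_boxes
```

Passing a seeded `random.Random` keeps the augmentation reproducible across training runs.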

Key Topics to Learn for Object Detection and Recognition in LIDAR Data Interview

- Point Cloud Processing: Understanding point cloud file formats (e.g., PCD, LAS) and spatial data structures (e.g., k-d trees, octrees), filtering techniques (e.g., noise removal, outlier detection), and data registration methods.

- Feature Extraction: Exploring various feature descriptors for 3D point clouds, including handcrafted features (e.g., intensity, curvature) and deep learning-based features.

- Object Detection Algorithms: Familiarizing yourself with classical methods (e.g., RANSAC, Hough Transform) and deep learning approaches (e.g., PointPillars, VoxelNet, PointRCNN) for object detection in 3D point clouds.

- Object Recognition and Classification: Mastering techniques for classifying detected objects into predefined categories, including the use of deep learning models (e.g., CNNs, PointNet) and feature matching algorithms.

- Sensor Fusion: Understanding how to integrate LIDAR data with other sensor modalities (e.g., cameras, radar) to improve the accuracy and robustness of object detection and recognition.

- Performance Evaluation Metrics: Knowing how to evaluate the performance of object detection and recognition systems using metrics such as precision, recall, F1-score, Intersection over Union (IoU), and Average Precision (AP).

- Practical Applications: Being aware of real-world applications in autonomous driving, robotics, mapping, and 3D scene understanding.

- Computational Efficiency and Optimization: Understanding strategies for optimizing algorithms for real-time performance and resource constraints.

- Challenges and Limitations: Addressing common issues such as occlusion, sparsity of data, and varying environmental conditions.

- Explainability and Interpretability: Understanding the importance of explainable AI and methods for interpreting the decisions made by object detection and recognition models.
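As a worked illustration of the evaluation metrics listed above, the sketch below computes precision, recall, and F1 from match counts, and Average Precision from a ranked list of detections. It assumes detections have already been matched to ground truth at some IoU threshold (each ground-truth box matched at most once) and uses all-point interpolation of the precision envelope; benchmarks differ in this detail (KITTI, for example, samples precision at fixed recall positions). The function names are hypothetical.

```python
from typing import List, Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def average_precision(scores: List[float], is_tp: List[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    scores: detection confidences; is_tp: whether each detection was matched
    to a previously unmatched ground-truth box at the chosen IoU threshold.
    """
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: best precision achievable at this recall or higher.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum the area under the step-shaped precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap
```

For example, four detections scored 0.9, 0.8, 0.7, and 0.6, where only the first and third are correct, evaluated against three ground-truth objects give AP = 1/3 + 2/9 = 5/9, or about 0.56.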

Next Steps

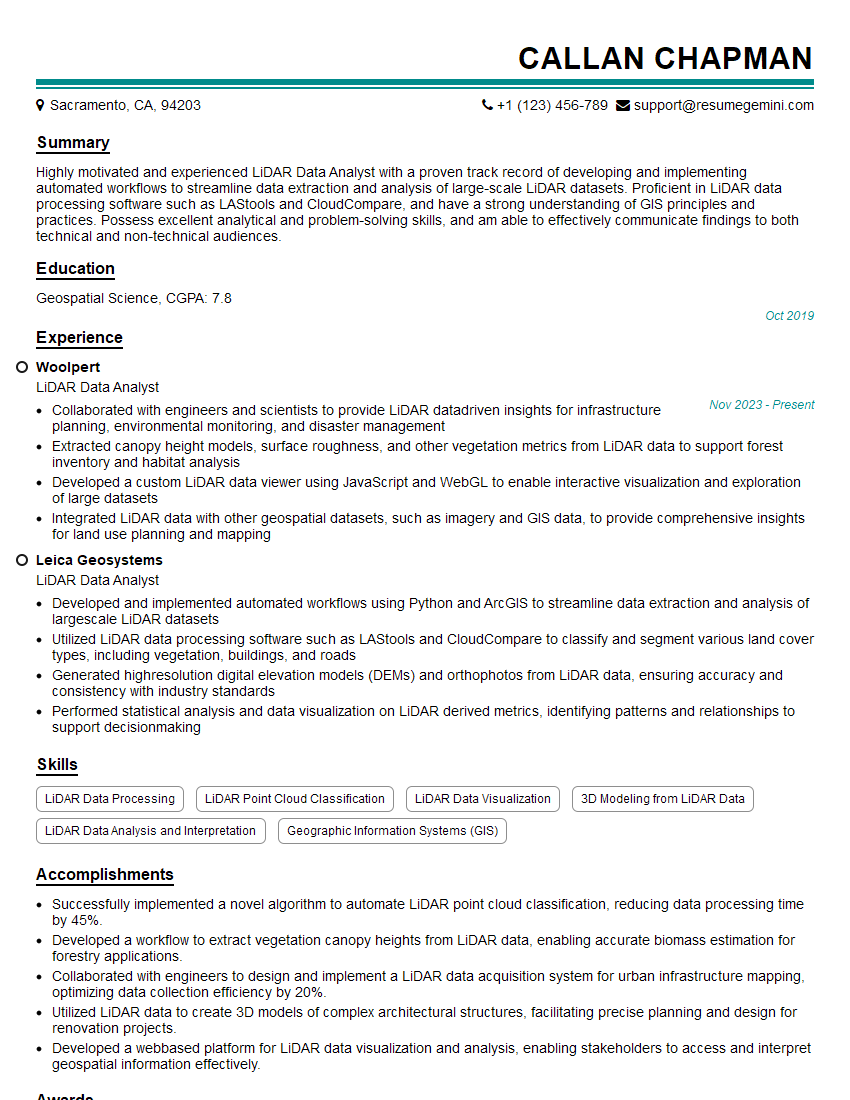

Mastering Object Detection and Recognition in LIDAR Data opens doors to exciting careers in cutting-edge fields like autonomous vehicles and robotics. To significantly increase your job prospects, crafting a strong, ATS-friendly resume is crucial. ResumeGemini is a trusted resource to help you build a professional and impactful resume that highlights your skills and experience effectively. Examples of resumes tailored to Object Detection and Recognition in LIDAR Data are available to guide you. Invest the time – it’s an investment in your future success!
