Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Research Methods and Statistical Analysis interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Research Methods and Statistical Analysis Interview

Q 1. Explain the difference between Type I and Type II errors.

Type I and Type II errors are the two ways a statistical hypothesis test can reach the wrong conclusion. Imagine you're a detective investigating a crime. The null hypothesis is that the suspect is innocent; the alternative is that the suspect is guilty.

A Type I error (false positive) occurs when you wrongly reject a true null hypothesis. In our detective analogy, this means you convict an innocent person. The probability of making a Type I error is denoted by alpha (α), often set at 0.05 (5%).

A Type II error (false negative) occurs when you fail to reject a false null hypothesis. In our analogy, this is letting a guilty person go free. The probability of making a Type II error is denoted by beta (β). The power of a test (1-β) represents the probability of correctly rejecting a false null hypothesis – catching the guilty party.

To minimize both types of errors, careful experimental design, an appropriate sample size, and a well-defined hypothesis are crucial. A balance must be struck: for a fixed sample size, lowering α (demanding stronger evidence before convicting) increases β, and vice versa. Increasing the sample size is the main way to reduce both at once.
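A quick way to see α and β in action is to simulate them. The minimal sketch below assumes a two-sided one-sample z-test with known standard deviation (σ = 1), samples of n = 30, and a true effect of 0.5. All of these are illustrative choices, not values from a real study. It estimates how often the test rejects when H0 is true (the Type I error rate) and when H0 is false (the power, so β = 1 - power).

```python
import random
from statistics import NormalDist, fmean


def z_test_rejects(sample, mu0, sigma, alpha):
    """Two-sided one-sample z-test with known sigma: True if H0 (mean == mu0) is rejected."""
    z = (fmean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    critical = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    return abs(z) > critical


def rejection_rate(true_mean, n=30, sigma=1.0, alpha=0.05, sims=10_000, seed=1):
    """Fraction of simulated studies in which H0: mean == 0 is rejected."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(sims):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        if z_test_rejects(sample, mu0=0.0, sigma=sigma, alpha=alpha):
            rejections += 1
    return rejections / sims


type_i_rate = rejection_rate(true_mean=0.0)  # H0 true: every rejection is a Type I error
power = rejection_rate(true_mean=0.5)        # H0 false: every rejection is correct
type_ii_rate = 1 - power                     # beta
print(f"Type I ≈ {type_i_rate:.3f}, power ≈ {power:.3f}, Type II ≈ {type_ii_rate:.3f}")
```

Calling `rejection_rate(true_mean=0.5, alpha=0.01)` shows the trade-off. The stricter α lowers the Type I rate but also lowers power, which raises β. Increasing `n` raises power without changing α.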

Q 2. What are the assumptions of linear regression?

Linear regression models the relationship between a dependent variable and one or more independent variables. Several assumptions underpin its validity:

- Linearity: The relationship between the independent and dependent variables should be linear. A scatter plot can visually assess this.

- Independence of errors: The residuals (errors) should be independent of each other. Autocorrelation violates this assumption.

- Homoscedasticity: The variance of the errors should be constant across all levels of the independent variable(s). A plot of residuals versus fitted values can reveal heteroscedasticity (non-constant variance).

- Normality of errors: The errors should be normally distributed. A histogram or Q-Q plot of residuals can assess normality.

- No multicollinearity: In multiple linear regression, the independent variables should not be highly correlated with each other. High multicollinearity can inflate standard errors and make it difficult to interpret individual coefficients.

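Violation of these assumptions can lead to biased coefficient estimates (for example, when the true relationship is non-linear). It can also produce misleading standard errors, confidence intervals, and p-values (for example, under heteroscedasticity or autocorrelated errors). Either way, the regression model becomes unreliable. Diagnostic plots and statistical tests are used to check these assumptions. If assumptions are violated, transformations of the data or the use of alternative models may be necessary.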
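Two of these diagnostics can be computed by hand. The sketch below uses only the Python standard library and simulated data; the data-generating model, the sample size and the helper names are illustrative assumptions. It fits a simple regression to three datasets and reports two statistics for each. The Durbin-Watson statistic checks independence of errors. A crude "spread ratio" checks homoscedasticity; it is a simplified version of the idea behind the Goldfeld-Quandt test, not that test itself.

```python
import random
from statistics import fmean, pvariance


def fit_simple_ols(x, y):
    """Least-squares intercept and slope for y = b0 + b1 * x."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b1 = sxy / sxx
    return my - b1 * mx, b1


def durbin_watson(residuals):
    """About 2 means no first-order autocorrelation; values near 0 suggest positive autocorrelation."""
    num = sum((residuals[i] - residuals[i - 1]) ** 2 for i in range(1, len(residuals)))
    return num / sum(e ** 2 for e in residuals)


def spread_ratio(fitted, residuals):
    """Residual variance for the upper half of fitted values divided by that for the
    lower half; roughly 1 when the error variance is constant."""
    ordered = [e for _, e in sorted(zip(fitted, residuals))]
    half = len(ordered) // 2
    return pvariance(ordered[half:]) / pvariance(ordered[:half])


def simulate(kind, n=500, seed=0):
    """Hypothetical data from y = 1 + 2x + error, with well-behaved or deliberately flawed errors."""
    rng = random.Random(seed)
    x = [rng.uniform(0, 10) for _ in range(n)]
    errors, prev = [], 0.0
    for xi in x:
        if kind == "heteroscedastic":
            e = rng.gauss(0, 0.5 * xi)        # error SD grows with x
        elif kind == "autocorrelated":
            e = 0.8 * prev + rng.gauss(0, 1)  # AR(1) errors in observation order
        else:
            e = rng.gauss(0, 1)               # independent, constant variance
        errors.append(e)
        prev = e
    return x, [1 + 2 * xi + e for xi, e in zip(x, errors)]


diagnostics = {}
for kind in ("well-behaved", "heteroscedastic", "autocorrelated"):
    x, y = simulate(kind)
    b0, b1 = fit_simple_ols(x, y)
    fitted = [b0 + b1 * xi for xi in x]
    residuals = [yi - fi for yi, fi in zip(y, fitted)]
    diagnostics[kind] = (durbin_watson(residuals), spread_ratio(fitted, residuals))
    dw, ratio = diagnostics[kind]
    print(f"{kind:>16}: Durbin-Watson = {dw:.2f}, spread ratio = {ratio:.2f}")
```

In practice, established implementations are preferable. Examples include statsmodels in Python or the lmtest and car packages in R, which also provide variance inflation factors for checking multicollinearity.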

Q 3. Describe the Central Limit Theorem and its importance.

The Central Limit Theorem (CLT) is a cornerstone of inferential statistics. It applies to independent, identically distributed observations from a population with finite variance. For such data, the distribution of the sample mean approaches a normal distribution as the sample size grows, regardless of the shape of the original population distribution.

Imagine you’re measuring the height of adult women. The population distribution of heights might be slightly skewed. However, if you repeatedly take large samples and calculate the mean height for each sample, the distribution of these sample means will be approximately normal.

The CLT's importance lies in its ability to justify the use of normal-based statistical tests (like t-tests and z-tests) even when the population distribution is not normal, provided the sample size is large enough. A common rule of thumb is n ≥ 30, although strongly skewed or heavy-tailed populations may require larger samples. This makes it a powerful tool for inference in many real-world situations where the true population distribution is unknown.
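A short simulation makes the theorem concrete. In place of the height example, it assumes an exponential population with mean 1, a standard example of a strongly right-skewed distribution (skewness 2). It then compares the skewness of individual draws with the skewness of means of samples of size 30.

```python
import random
from statistics import fmean, stdev


def skewness(values):
    """Sample skewness (third standardized moment); 0 for a symmetric distribution."""
    m, s = fmean(values), stdev(values)
    return fmean([((v - m) / s) ** 3 for v in values])


rng = random.Random(7)
# Hypothetical skewed population: exponential with mean 1 and SD 1.
population_draws = [rng.expovariate(1.0) for _ in range(20_000)]

n = 30
sample_means = [fmean([rng.expovariate(1.0) for _ in range(n)]) for _ in range(5_000)]

print(f"skewness of individual draws: {skewness(population_draws):.2f}")
print(f"skewness of sample means:     {skewness(sample_means):.2f}")
print(f"SD of sample means: {stdev(sample_means):.3f} (sigma / sqrt(n) = {1 / n ** 0.5:.3f})")
```

The sample means are far less skewed than the raw draws. Their standard deviation is close to σ/√n, the standard error that normal-based tests rely on. A more skewed population would need a larger n to reach the same degree of normality.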

Q 4. How do you handle missing data in a dataset?

Missing data is a common problem in research. The best approach depends on the mechanism of missingness (missing completely at random (MCAR), missing at random (MAR), or missing not at random (MNAR)), the amount of missing data, and the nature of the variables. There’s no one-size-fits-all solution.

- Deletion methods: Listwise deletion (removing entire rows with missing data) is simple but can lead to a substantial loss of information, especially with many variables. Pairwise deletion uses all available data for each analysis but can lead to inconsistent results.

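- Imputation methods: These methods fill in missing values with plausible estimates. Mean/mode imputation is simple but underestimates variability and weakens relationships between variables. More sophisticated methods include regression imputation and multiple imputation (creating several plausible completed datasets and combining the results). Maximum likelihood estimation is a related model-based alternative that uses all available data without filling in individual values.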

Before deciding on a method, it’s crucial to understand the pattern of missingness and assess the potential impact of different methods on the results. For instance, if missingness is related to a key variable, simple imputation methods might lead to biased results.
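The variance-shrinking effect of mean imputation is easy to demonstrate. The sketch below uses a simulated variable and assumes values are missing completely at random (MCAR). Under MAR or MNAR the picture is worse, because the retained values are no longer representative.

```python
import random
from statistics import fmean, stdev

rng = random.Random(42)
complete = [rng.gauss(50, 10) for _ in range(1_000)]  # hypothetical fully observed variable

# Remove about 30% of values completely at random (MCAR); None marks a missing value.
observed = [v if rng.random() >= 0.3 else None for v in complete]

available = [v for v in observed if v is not None]    # what listwise deletion keeps
fill_value = fmean(available)
mean_imputed = [fill_value if v is None else v for v in observed]

print(f"complete data SD:      {stdev(complete):.2f}")
print(f"after deletion SD:     {stdev(available):.2f}  (n = {len(available)})")
print(f"after mean imputation: {stdev(mean_imputed):.2f}  (n = {len(mean_imputed)})")
```

Under MCAR, deletion keeps the spread roughly right at the cost of sample size. Mean imputation keeps every row but shrinks the standard deviation by roughly the square root of the observed fraction. This in turn makes standard errors and p-values too small. Multiple imputation avoids this by adding appropriate random variation to imputed values and pooling results across several completed datasets.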

Q 5. What is the difference between correlation and causation?

Correlation and causation are often confused, but they are distinct concepts. Correlation refers to a statistical association between two variables; when one variable changes, the other tends to change as well (either positively or negatively). Causation, on the other hand, implies a cause-and-effect relationship: one variable directly influences the other.

Example: Ice cream sales and crime rates are often positively correlated; both increase in the summer. This doesn’t mean that buying ice cream causes crime! A confounding variable – hot weather – affects both. Correlation does not imply causation. Establishing causation requires more rigorous methods, such as controlled experiments or longitudinal studies that account for potential confounding factors.
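A small simulation shows how a confounder can produce a correlation without any causal link. The numbers are invented for illustration. Temperature is generated first, and ice cream sales and crime each depend on temperature plus independent noise. Neither affects the other. The partial correlation removes the confounder's influence: each variable is regressed on temperature, and the two sets of residuals are correlated.

```python
import random
from statistics import fmean


def pearson(x, y):
    """Pearson correlation coefficient."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def residuals_on(z, y):
    """Residuals of y after a simple least-squares regression on z."""
    mz, my = fmean(z), fmean(y)
    slope = sum((a - mz) * (b - my) for a, b in zip(z, y)) / sum((a - mz) ** 2 for a in z)
    return [b - my - slope * (a - mz) for a, b in zip(z, y)]


rng = random.Random(3)
temperature = [rng.uniform(0, 35) for _ in range(2_000)]
# Hypothetical model: temperature drives both variables.
ice_cream = [10 + 3 * t + rng.gauss(0, 15) for t in temperature]
crime = [50 + 2 * t + rng.gauss(0, 10) for t in temperature]

raw_r = pearson(ice_cream, crime)
partial_r = pearson(residuals_on(temperature, ice_cream), residuals_on(temperature, crime))
print(f"raw correlation: {raw_r:.2f}; controlling for temperature: {partial_r:.2f}")
```

Under these assumptions the raw correlation is around 0.8, and it essentially vanishes once temperature is accounted for. Statistical adjustment only works for confounders that were measured. That is why randomized experiments remain the most direct route to causal claims.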

Q 6. Explain the concept of p-values and their interpretation.

A p-value is the probability of observing results as extreme as, or more extreme than, the results actually obtained, assuming the null hypothesis is true. It’s a measure of evidence *against* the null hypothesis.

A small p-value (below the chosen significance level α, commonly 0.05) indicates that the observed data would be unusual if the null hypothesis were true. This leads researchers to reject the null in favor of the alternative hypothesis. However, a large p-value doesn't mean the null hypothesis is true; it simply means there's not enough evidence to reject it.

It’s crucial to avoid misinterpreting p-values. They don’t measure the probability that the null hypothesis is true, nor do they measure the size or importance of an effect. The context, effect size, and the practical significance of the results should be considered alongside the p-value when making decisions.
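The definition above can be made concrete with a permutation test, which builds the null distribution directly. If the null hypothesis of no group difference is true, the group labels are interchangeable. Shuffling them many times therefore shows how large a difference chance alone tends to produce. The measurements below are made up. The permutation test illustrates the p-value definition here; it is not presented as a replacement for a t-test.

```python
import random
from statistics import fmean


def permutation_p_value(a, b, n_perm=10_000, seed=0):
    """Two-sided permutation p-value for a difference in means.

    Shuffles the pooled values, re-splits them into groups of the original sizes,
    and counts how often the shuffled difference is at least as extreme as the observed one."""
    rng = random.Random(seed)
    observed = abs(fmean(a) - fmean(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(fmean(pooled[:len(a)]) - fmean(pooled[len(a):]))
        if diff >= observed - 1e-12:  # small tolerance guards against floating-point ties
            extreme += 1
    return (extreme + 1) / (n_perm + 1)  # add-one correction keeps the estimate above 0


control = [12.1, 11.8, 12.5, 12.0, 11.6, 12.3, 11.9, 12.2]  # hypothetical measurements
treated = [12.9, 13.1, 12.6, 13.4, 12.8, 13.0, 12.7, 13.2]
p_value = permutation_p_value(control, treated)
print(f"p ≈ {p_value:.4f}")
```

The result estimates the probability of a difference at least as large as the observed one, computed assuming the null is true. It says nothing directly about how large or important the difference is.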

Q 7. What are the different types of sampling methods?

Sampling methods determine how a subset of individuals is selected from a larger population for study. Different methods have different strengths and weaknesses.

- Probability sampling: Every member of the population has a known, non-zero probability of being selected.

  - Simple random sampling: Every member has an equal chance of being selected. It is straightforward and unbiased. However, it requires a complete list of the population (a sampling frame) and can be impractical for geographically dispersed populations.

  - Stratified sampling: The population is divided into strata (groups) based on relevant characteristics, then random samples are taken from each stratum, ensuring representation from all groups.

  - Cluster sampling: The population is divided into clusters (often geographical), and a random sample of clusters is selected. All members of the selected clusters are included in the study.

  - Systematic sampling: Individuals are selected at regular intervals from a list or sequence (e.g., every 10th name after a random starting point).

- Non-probability sampling: The probability of selection is unknown, making generalizations to the population less reliable.

  - Convenience sampling: Individuals are selected based on ease of access.

  - Quota sampling: Samples are selected to meet pre-defined quotas for certain characteristics, often used in market research.

  - Snowball sampling: Participants recruit other participants, often used for hard-to-reach populations.

The choice of sampling method depends on factors like research question, budget, time constraints, and the accessibility of the population.
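The four probability designs can be sketched in a few lines. The population, its region and town labels, and the function names are all hypothetical. Real survey analyses also account for the design (weights, clustering) when computing estimates and standard errors.

```python
import random

REGIONS = ["north", "south", "east", "west"]
# Hypothetical sampling frame: 1,000 people in 50 towns of 20, each tagged with a region.
population = [{"id": i, "region": REGIONS[i % 4], "town": i // 20} for i in range(1_000)]


def simple_random(pop, n, rng):
    """Every unit has the same chance of selection."""
    return rng.sample(pop, n)


def stratified(pop, n, key, rng):
    """Proportional allocation; rounding may make the total differ slightly from n."""
    strata = {}
    for unit in pop:
        strata.setdefault(unit[key], []).append(unit)
    sample = []
    for members in strata.values():
        sample.extend(rng.sample(members, round(n * len(members) / len(pop))))
    return sample


def systematic(pop, n, rng):
    """Every k-th unit after a random start, with k = len(pop) // n."""
    step = len(pop) // n
    return pop[rng.randrange(step)::step][:n]


def cluster(pop, n_clusters, key, rng):
    """One-stage cluster sampling: pick whole clusters at random and keep all their members."""
    chosen = set(rng.sample(sorted({unit[key] for unit in pop}), n_clusters))
    return [unit for unit in pop if unit[key] in chosen]


rng = random.Random(0)
srs = simple_random(population, 100, rng)
strat = stratified(population, 100, "region", rng)
syst = systematic(population, 100, rng)
clus = cluster(population, 5, "town", rng)
print(len(srs), len(strat), len(syst), len(clus))
```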

Q 8. When would you use a t-test versus an ANOVA?

Both t-tests and ANOVAs (Analysis of Variance) are used to compare means, but they differ in the number of groups being compared. A t-test compares the means of two groups. Think of it like weighing two bags of apples to see if they have significantly different weights. An ANOVA, on the other hand, compares the means of three or more groups. Imagine comparing the average weight of apples from three different orchards.

Specifically, we use an independent samples t-test when comparing the means of two independent groups (e.g., comparing the test scores of students taught by two different methods). A paired samples t-test is used when comparing the means of two related groups (e.g., comparing a patient’s blood pressure before and after treatment). If we have more than two groups, we’d utilize ANOVA (One-way ANOVA for one independent variable, two-way ANOVA for two independent variables, etc.).

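Choosing between the two depends on the research question and the number of groups involved. With more than two groups, a single ANOVA is preferred over several pairwise t-tests, because each additional test adds another chance of a Type I error. Using an ANOVA when only two groups exist is not wrong, since it yields the same p-value as a pooled-variance t-test. The t-test is simply more direct to report and also allows one-sided hypotheses.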
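Why running an ANOVA on only two groups "is not wrong" can be checked numerically. With the hypothetical scores below, a one-way ANOVA on two groups gives an F statistic equal to the square of the pooled-variance t statistic, so both tests lead to the same p-value. With three groups, only the ANOVA applies directly. This sketch computes test statistics only; the p-values would come from the t and F distributions (e.g., via scipy.stats in Python).

```python
from statistics import fmean, variance


def pooled_t(a, b):
    """Student's two-sample t statistic with pooled variance."""
    na, nb = len(a), len(b)
    sp2 = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (fmean(a) - fmean(b)) / (sp2 * (1 / na + 1 / nb)) ** 0.5


def one_way_anova_f(*groups):
    """F statistic: between-group mean square divided by within-group mean square."""
    all_values = [v for g in groups for v in g]
    grand = fmean(all_values)
    k, n = len(groups), len(all_values)
    ss_between = sum(len(g) * (fmean(g) - grand) ** 2 for g in groups)
    ss_within = sum((v - fmean(g)) ** 2 for g in groups for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


method_a = [72, 75, 78, 71, 74, 77]  # hypothetical test scores under three teaching methods
method_b = [80, 79, 83, 78, 85, 81]
method_c = [76, 74, 79, 73, 78, 75]

t = pooled_t(method_a, method_b)
f_two = one_way_anova_f(method_a, method_b)
f_three = one_way_anova_f(method_a, method_b, method_c)
print(f"t = {t:.3f}, t^2 = {t * t:.3f}, F (two groups) = {f_two:.3f}, F (three groups) = {f_three:.3f}")
```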

Q 9. Describe your experience with different statistical software packages (e.g., R, SPSS, SAS).

I have extensive experience with several statistical software packages, including R, SPSS, and SAS. My proficiency extends beyond basic data entry and analysis. In R, I’m comfortable working with various packages like dplyr for data manipulation, ggplot2 for data visualization, and lme4 for mixed-effects modeling. I’ve used R extensively for complex statistical analyses, including creating custom functions and scripts for specific research needs. In SPSS, I’m adept at conducting various statistical tests, managing large datasets, and creating comprehensive reports. My experience with SAS focuses primarily on its strength in handling very large datasets and advanced statistical procedures, particularly in the context of clinical trials and longitudinal studies. I can effectively utilize PROC MIXED for longitudinal data analysis and PROC GLM for general linear modeling. My skillset ensures efficient and accurate analysis, irrespective of the chosen software.

Q 10. How do you determine the appropriate sample size for a study?

Determining the appropriate sample size is crucial for ensuring the validity and reliability of research findings. There is no one-size-fits-all answer; the required size depends on several factors:

- Effect size: The magnitude of the difference or relationship you expect to find. Larger expected effects require smaller sample sizes.

- Power: The probability of detecting a true effect if it exists. Typically, a power of 80% is considered acceptable.

- Significance level (alpha): The probability of rejecting the null hypothesis when it is true (usually set at 0.05).

- Type of study: Different study designs (e.g., experimental, observational) have different sample size requirements.

Several methods exist for sample size calculation, often employing statistical power analysis software or online calculators. These tools take the above factors as input and output the required sample size. I frequently use G*Power and PASS software for these calculations. Failing to account for these factors can lead to studies that are underpowered (unable to detect real effects) or overpowered (unnecessarily expensive and time-consuming).
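For the common case of comparing two group means, the factors above combine in a simple normal-approximation formula. This is a sketch, not a substitute for G*Power or PASS. Those tools use the t distribution and typically return slightly larger numbers: for d = 0.5 they give about 64 per group rather than the 63 computed here. The effect size is expressed as Cohen's d, the difference in means divided by the common standard deviation.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided, two-sample comparison of means.

    Normal approximation: n = 2 * (z_{1 - alpha/2} + z_{power})^2 / d^2, rounded up."""
    z = NormalDist().inv_cdf
    return math.ceil(2 * (z(1 - alpha / 2) + z(power)) ** 2 / effect_size_d ** 2)


for d in (0.2, 0.5, 0.8):  # Cohen's conventional small, medium and large effects
    print(f"d = {d}: {n_per_group(d)} per group")
```

The output illustrates the first bullet above. Halving the expected effect size roughly quadruples the required sample, because d enters the formula squared.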

Q 11. Explain the difference between parametric and non-parametric tests.

The choice between parametric and non-parametric tests hinges on the assumptions about the data. Parametric tests, like the classic t-test and ANOVA, assume that the data (or model residuals) are normally distributed. They also assume that the variances of the groups being compared are equal or approximately equal; Welch's t-test relaxes this second assumption. They are generally more powerful than non-parametric tests when these assumptions hold. Think of them as more precise instruments that work best under ideal conditions.

Non-parametric tests, such as the Mann-Whitney U test (analogous to the independent samples t-test) and the Kruskal-Wallis test (analogous to one-way ANOVA), make fewer assumptions about the data. They work with ranks rather than raw values, which makes them suitable for ordinal data and for data that are skewed or contain outliers. They are more robust, but somewhat less powerful when the parametric assumptions are actually met. These tests work like more versatile tools, suitable for a broader range of scenarios, although potentially less precise in ideal situations.

Violating the assumptions of parametric tests can lead to inaccurate conclusions, hence the importance of assessing the data’s distribution before choosing a test. Techniques like histograms, Q-Q plots, and Shapiro-Wilk tests help assess normality.
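The rank-based idea behind the Mann-Whitney U test fits in a few lines. The sketch below uses made-up data and the large-sample normal approximation without tie or continuity corrections. With samples this small, an exact test (available, e.g., in scipy.stats.mannwhitneyu) would be preferable.

```python
from statistics import NormalDist


def mann_whitney_u(a, b):
    """U for sample a: number of pairs (x from a, y from b) with x > y; ties count 0.5."""
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)


def mann_whitney_p(a, b):
    """Two-sided p-value from the normal approximation (no tie or continuity correction)."""
    n1, n2 = len(a), len(b)
    mean_u = n1 * n2 / 2
    sd_u = (n1 * n2 * (n1 + n2 + 1) / 12) ** 0.5
    z = (mann_whitney_u(a, b) - mean_u) / sd_u
    return 2 * NormalDist().cdf(-abs(z))


group_a = [3, 5, 7, 9, 11]  # hypothetical scores
group_b = [4, 6, 8, 10, 12, 14]
print(mann_whitney_u(group_a, group_b), mann_whitney_u(group_b, group_a))  # 10.0 20.0 (sum = n1 * n2)
print(round(mann_whitney_p(group_a, group_b), 3))

# U depends only on the ordering, so an extreme value leaves it unchanged.
group_b_outlier = [4, 6, 8, 10, 12, 1400]
print(mann_whitney_u(group_a, group_b_outlier))
```

The last line shows the robustness described above. Replacing 14 with 1400 would strongly affect a t-test on means, but it does not change U.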

Q 12. What is a confidence interval, and how is it calculated?

A confidence interval provides a range of plausible values for an unknown population parameter. For example, suppose we compute a 95% confidence interval for the average height of women. The 95% describes the procedure, not this particular interval. If we repeated the sampling many times and computed an interval each time, about 95% of those intervals would contain the true average height. Once a specific interval has been computed, the true value is either inside it or not. It is therefore not correct to say there is a 95% probability that this particular interval contains it.

The calculation involves the sample statistic (e.g., sample mean), the standard error of the statistic, and the critical value from the appropriate probability distribution (often the t-distribution for smaller sample sizes or the z-distribution for large samples). The formula for a confidence interval for a population mean is generally:

CI = sample mean ± (critical value * standard error)

The standard error reflects the variability of the sample mean; for a mean it is s/√n, where s is the sample standard deviation and n the sample size. A smaller standard error leads to a narrower confidence interval, indicating greater precision in estimating the population parameter. The critical value depends on the desired confidence level (e.g., 1.96 for a 95% confidence interval using the z-distribution).
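The formula can be turned into a short function and checked against the repeated-sampling meaning of "95%". The sketch assumes a hypothetical normal population with mean 165 and SD 7 (e.g., heights in cm). It uses the z critical value, which is reasonable for n = 50. For small samples, the t critical value should be used instead; it is not in Python's standard library, but scipy.stats.t.ppf provides it.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean, stdev


def mean_ci(sample, confidence=0.95):
    """Large-sample CI for a mean: sample mean ± z * s / sqrt(n)."""
    se = stdev(sample) / sqrt(len(sample))
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    m = fmean(sample)
    return m - z * se, m + z * se


# Repeated-sampling check: how often does the interval contain the true mean?
rng = random.Random(11)
true_mean, trials, hits = 165.0, 4_000, 0
for _ in range(trials):
    low, high = mean_ci([rng.gauss(true_mean, 7) for _ in range(50)])
    hits += low <= true_mean <= high
coverage = hits / trials
print(f"{coverage:.1%} of intervals contained the true mean")
```

Coverage comes out slightly below 95%, because the z critical value is paired with an estimated standard deviation. Using the t critical value would bring it to the nominal level.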

Q 13. How do you assess the reliability and validity of a measurement instrument?

Assessing the reliability and validity of a measurement instrument is critical for ensuring the quality of research data. Reliability refers to the consistency of the instrument in measuring the target construct. A reliable instrument produces similar results under similar conditions. We assess reliability through methods like:

- Test-retest reliability: Administering the instrument twice to the same group and correlating the scores.

- Internal consistency reliability (Cronbach’s alpha): Measuring the correlation among items within the instrument.

- Inter-rater reliability: Assessing the agreement between different raters using the same instrument.

Validity refers to the extent to which the instrument actually measures what it is intended to measure. We assess validity through various approaches:

- Content validity: Assessing whether the instrument covers all relevant aspects of the construct.

- Criterion validity: Correlating the instrument’s scores with an external criterion (e.g., comparing a new depression scale with an established one).

- Construct validity: Assessing whether the instrument measures the theoretical construct it is designed to measure (often involves factor analysis).

Both reliability and validity are essential for drawing meaningful conclusions from research data. A reliable but invalid instrument consistently measures something irrelevant, while an unreliable instrument provides inconsistent and thus uninterpretable results. Both need to be high for the instrument to be useful.
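Cronbach's alpha, listed above as a measure of internal consistency, is straightforward to compute from an item-response matrix. The ratings below are invented for illustration.

```python
from statistics import variance


def cronbach_alpha(responses):
    """responses: one row per respondent, one column per item.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)"""
    k = len(responses[0])
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point ratings from four respondents on a three-item scale.
ratings = [
    [2, 3, 3],
    [4, 4, 5],
    [3, 3, 4],
    [5, 4, 5],
]
print(round(cronbach_alpha(ratings), 3))  # 0.923
```

Alpha rises when respondents who score high on one item also tend to score high on the others. This happens because the total-score variance then grows faster than the sum of the individual item variances.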

Q 14. Describe your experience with experimental design.

My experience with experimental design encompasses a wide range of designs, from simple randomized controlled trials (RCTs) to more complex factorial designs and quasi-experimental designs. In RCTs, I have experience in randomizing participants to treatment and control groups, controlling for confounding variables, and analyzing the data using appropriate statistical methods. This includes understanding the importance of blinding, minimizing bias, and ensuring the integrity of the study. For example, I've worked on studies comparing the effectiveness of different teaching methodologies, using random assignment so that the groups were comparable before the intervention.

My experience extends to factorial designs, where we manipulate multiple independent variables simultaneously to investigate their individual and interactive effects. I am also familiar with quasi-experimental designs, which are used when random assignment isn’t feasible. For instance, I have worked on evaluating the impact of a policy change on a specific population using a pre-post design, acknowledging the limitations inherent in such designs. A thorough understanding of these designs and their strengths and weaknesses is vital to designing studies that answer research questions accurately and ethically.

Q 15. What are some common threats to internal and external validity?

Internal validity refers to the confidence we have that the observed relationship between variables in our study is real and not due to some other factor. External validity, on the other hand, concerns the generalizability of our findings to other populations, settings, and times. Threats to both are numerous and often intertwined.

Threats to Internal Validity:

- Confounding Variables: These are extraneous variables that correlate with both the independent and dependent variables, making it difficult to isolate the true effect of the independent variable. For example, in a study on the effect of a new drug on blood pressure, if participants in the drug group also happen to be exercising more than the control group, exercise becomes a confounding variable.

- Selection Bias: This occurs when the groups being compared are not equivalent at the start of the study, leading to spurious results. A poorly designed study might inadvertently assign healthier participants to one group, leading to biased results.

- History: External events that occur during the study can influence the results. Imagine a study on consumer spending habits interrupted by a major economic recession.

- Maturation: Changes within the participants themselves over time (e.g., aging, learning) can affect the dependent variable, confounding the results.

- Testing Effects: Repeated testing can influence participant responses, particularly if they learn from previous tests.

Threats to External Validity:

- Sampling Bias: If the sample is not representative of the population, the findings cannot be generalized to the broader population.

- Setting: The specific context of the study might limit the generalizability of the findings. A study conducted in a highly controlled laboratory setting might not translate well to real-world scenarios.

- History: Similar to its impact on internal validity, historical events can also limit the generalizability of findings across time.

- Interaction of Selection and Treatment: The effect of the independent variable might be specific to the sample selected and not generalizable to other populations.

Addressing these threats requires careful experimental design, including random assignment, control groups, and representative sampling. Rigorous analysis and thoughtful interpretation of results are also crucial.


Q 16. Explain the difference between qualitative and quantitative research methods.

Qualitative and quantitative research methods represent fundamentally different approaches to understanding the world. They differ in their goals, data collection techniques, and data analysis methods.

Qualitative Research: Focuses on exploring complex social phenomena through in-depth understanding of experiences, perspectives, and meanings. It emphasizes rich descriptive data and aims to generate hypotheses or theories rather than test them. Methods include interviews, focus groups, ethnography, and case studies. Data analysis is often iterative and involves identifying themes, patterns, and relationships within the data.

Example: Conducting in-depth interviews with teachers to understand their experiences with a new educational technology.

Quantitative Research: Emphasizes numerical data and statistical analysis to test hypotheses and establish relationships between variables. It aims to measure and quantify phenomena, often involving large sample sizes. Methods include surveys, experiments, and secondary data analysis. Data analysis relies on statistical tests to determine significance and relationships between variables.

Example: Conducting a survey to assess the effectiveness of a new marketing campaign by measuring changes in sales and brand awareness.

In practice, a mixed-methods approach, combining both qualitative and quantitative methods, can provide a more comprehensive understanding of a research problem. For instance, you might conduct a quantitative survey followed by qualitative interviews to explore unexpected findings.

Q 17. How do you interpret a regression coefficient?

In regression analysis, the regression coefficient represents the change in the dependent variable associated with a one-unit change in the independent variable, holding all other variables constant. It indicates the slope of the regression line.

For example, consider a simple linear regression model: y = β0 + β1x + ε, where y is the dependent variable, x is the independent variable, β0 is the y-intercept, β1 is the regression coefficient, and ε is the error term. If β1 = 2, this means that a one-unit increase in x is associated with a two-unit increase in y, assuming all other factors remain unchanged.

The sign of the coefficient indicates the direction of the relationship. A positive coefficient indicates a positive relationship (as x increases, y tends to increase), while a negative coefficient indicates a negative relationship (as x increases, y tends to decrease). The magnitude gives the expected change in y per one-unit change in x, so it depends on the units of measurement. It is not by itself a measure of how strong the association is; standardized coefficients or R² are better suited for that. Always interpret the magnitude in light of the units and the context of the analysis.

It’s crucial to remember that correlation does not equal causation. A significant regression coefficient only suggests an association; it does not necessarily imply that changes in the independent variable directly cause changes in the dependent variable.
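The dependence on units can be seen directly. In the hypothetical data below, expressing the same predictor in milligrams instead of grams divides the coefficient by 1,000 without changing the relationship. The standardized coefficient is unaffected. It is computed as slope × SD of x / SD of y, and in simple regression it equals Pearson's r.

```python
from statistics import fmean, stdev


def slope(x, y):
    """Least-squares slope of y on x."""
    mx, my = fmean(x), fmean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)


# Hypothetical data: dose in grams (x) and a measured response (y).
dose_g = [1.0, 1.5, 2.0, 2.5, 3.0]
response = [3.1, 4.0, 5.2, 5.9, 7.1]
dose_mg = [g * 1000 for g in dose_g]  # same information, different units

b_g, b_mg = slope(dose_g, response), slope(dose_mg, response)
# Standardized coefficient: slope * SD(x) / SD(y), which is unit-free
beta_g = b_g * stdev(dose_g) / stdev(response)
beta_mg = b_mg * stdev(dose_mg) / stdev(response)
print(f"per gram: {b_g:.3f}, per milligram: {b_mg:.6f}, standardized: {beta_g:.3f} / {beta_mg:.3f}")
```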

Q 18. What are some common methods for data visualization?

Data visualization is crucial for communicating insights from data effectively. Several methods exist, each suited for different types of data and objectives.

- Histograms: Show the distribution of a single continuous variable.

- Scatter plots: Display the relationship between two continuous variables.

- Box plots: Show the distribution of a variable, including its median, quartiles, and outliers.

- Bar charts: Compare different categories or groups.

- Line charts: Show trends over time or across different levels of a variable.

- Pie charts: Show the proportions of different categories within a whole.

- Heatmaps: Show the values of a matrix using color intensity.

- Geographic maps: Display data geographically, highlighting spatial patterns.

Choosing the right visualization depends on the data and the message you want to convey. A well-designed visualization should be clear, concise, and easy to interpret. Tools like R, Python (with libraries like Matplotlib and Seaborn), and Tableau are commonly used for creating visualizations.

Q 19. Describe your experience with data cleaning and preprocessing.

Data cleaning and preprocessing are essential steps in any data analysis project. My experience involves a systematic approach encompassing several key tasks:

- Handling Missing Data: I employ various techniques depending on the nature and extent of missing data. This can include imputation using mean/median/mode, more sophisticated methods like k-nearest neighbors imputation, or removal of rows/columns with excessive missing values. The choice of method is carefully considered based on the dataset’s characteristics and the potential impact on the analysis.

- Outlier Detection and Treatment: Identifying outliers through visual inspection (e.g., box plots, scatter plots) and statistical methods (e.g., z-scores, IQR). Strategies for handling them include removal, transformation (e.g., log transformation), or winsorizing/clipping. The choice depends on the nature of the outliers and the research question.

- Data Transformation: Applying transformations like standardization (z-score normalization), min-max scaling, or logarithmic transformations to improve model performance or meet the assumptions of statistical tests.

- Data Consistency and Formatting: Ensuring consistency in data formats (e.g., date formats, units of measurement), correcting inconsistencies and errors, and handling inconsistent data entry.

- Feature Engineering: Creating new variables from existing ones to improve model performance and capture relevant information. This could involve creating interaction terms or polynomial terms, or deriving new features from dates or categorical variables.

I am proficient in using programming languages like R and Python with relevant libraries (e.g., pandas, dplyr) to automate these tasks and ensure efficient data cleaning and preprocessing.
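Two of the steps above, rescaling and format consistency, are sketched below using only the standard library. The income figures, the accepted date formats, and the helper names are hypothetical. The date parser assumes that slash-separated dates are day/month/year. In practice, pandas or dplyr would typically apply the same operations to whole columns.

```python
import math
from datetime import datetime
from statistics import fmean, stdev


def standardize(values):
    """z-score normalization: mean 0, standard deviation 1."""
    m, s = fmean(values), stdev(values)
    return [(v - m) / s for v in values]


def min_max(values, lo=0.0, hi=1.0):
    """Rescale linearly so the minimum maps to lo and the maximum to hi (values must not all be equal)."""
    vmin, vmax = min(values), max(values)
    return [lo + (v - vmin) * (hi - lo) / (vmax - vmin) for v in values]


def log_transform(values):
    """log(1 + x), a common choice for right-skewed, non-negative data."""
    return [math.log1p(v) for v in values]


def parse_date(text, formats=("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")):
    """Normalize dates written in several formats; returns None for entries needing manual review."""
    for fmt in formats:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    return None


incomes = [28_000, 35_000, 41_000, 52_000, 250_000]  # hypothetical, right-skewed
print([round(z, 2) for z in standardize(incomes)])
print([round(v, 3) for v in min_max(incomes)])
print([round(v, 2) for v in log_transform(incomes)])
print(parse_date("2023-03-05"), parse_date("05/03/2023"), parse_date("Mar 5, 2023"))
```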

Q 20. How do you deal with outliers in your data?

Outliers are data points that significantly deviate from the rest of the data. Dealing with them requires careful consideration, as they can unduly influence the results of statistical analyses. My approach involves a multi-step process:

- Identification: First, I identify potential outliers using visual methods (box plots, scatter plots) and statistical methods (z-scores, interquartile range (IQR)). Two kinds of points are flagged as potential outliers. The first are points with z-scores beyond a chosen threshold (e.g., ±3). The second are points lying more than 1.5 × IQR above the third quartile or below the first quartile (Tukey's fences).

- Investigation: Once identified, I investigate the reason for the outlier. Is it a genuine observation, a data entry error, or a result of measurement error? This often involves examining the data collection process and checking for errors.

- Treatment: The treatment strategy depends on the cause and impact of the outlier. Options include:

  - Removal: If the outlier is determined to be due to a clear error, removing it might be appropriate. However, this should be done cautiously and documented.

  - Transformation: Transforming the data (e.g., using logarithmic transformation) can sometimes reduce the influence of outliers.

  - Winsorizing/Clipping: Replacing extreme values with less extreme values (e.g., the 95th or 5th percentile) can mitigate the outlier’s effect without completely removing it.

  - Robust Methods: Using statistical methods that are less sensitive to outliers (e.g., median instead of mean, robust regression techniques) can help minimize their impact.

- Documentation: Regardless of the chosen method, all steps taken in handling outliers should be clearly documented to ensure transparency and reproducibility.

The decision of how to handle outliers should be justified based on the context of the analysis and potential impact on the results.
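The identification and winsorizing steps can be sketched as follows. The data and helper names are hypothetical. The quartiles come from `statistics.quantiles` with its default method, and other software may interpolate quartiles slightly differently. In `winsorize`, `fraction=0.05` corresponds to the 5th/95th percentile limits mentioned above. The example uses 0.1 because the sample has only ten values.

```python
from statistics import fmean, quantiles, stdev


def iqr_outliers(values, k=1.5):
    """Tukey's fences: flag points below Q1 - k*IQR or above Q3 + k*IQR."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    return [v for v in values if v < q1 - k * iqr or v > q3 + k * iqr]


def z_outliers(values, threshold=3.0):
    """Flag points whose absolute z-score exceeds the threshold."""
    m, s = fmean(values), stdev(values)
    return [v for v in values if abs(v - m) / s > threshold]


def winsorize(values, fraction=0.1):
    """Replace the lowest and highest `fraction` of values with the nearest remaining value."""
    k = int(fraction * len(values))
    if k == 0:
        return list(values)
    ordered = sorted(values)
    lo, hi = ordered[k], ordered[-k - 1]
    return [min(max(v, lo), hi) for v in values]


data = [10, 12, 11, 13, 12, 11, 14, 12, 13, 95]  # hypothetical; 95 looks suspicious
print("IQR rule flags:", iqr_outliers(data))
print("z-score rule flags:", z_outliers(data))
print("winsorized:", winsorize(data))
```

The z-score rule flags nothing here. In a sample of n values, no |z| can exceed (n - 1)/√n, which is about 2.85 for n = 10. The outlier also inflates the standard deviation used to compute z. The IQR fences are not affected by this masking, which is one reason to combine several identification methods.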

Q 21. Explain the concept of statistical power.

Statistical power refers to the probability that a hypothesis test will correctly reject a false null hypothesis. In simpler terms, it’s the ability of a study to detect a real effect if one truly exists. A study with high power is more likely to find a significant result when there’s a genuine effect, while a study with low power might fail to detect an effect even if one is present.

Power is influenced by several factors:

- Effect size: The magnitude of the difference or relationship being studied. Larger effect sizes are easier to detect.

- Sample size: Larger samples generally lead to higher power. Larger samples provide more precise estimates and reduce the influence of random error.

- Significance level (alpha): The probability of rejecting the null hypothesis when it is actually true (Type I error). A lower alpha level (e.g., 0.01 instead of 0.05) reduces power.

- Variability in the data: High variability in the data makes it harder to detect an effect, thus reducing power.

Before conducting a study, researchers often perform a power analysis to determine the appropriate sample size needed to achieve a desired level of power (e.g., 80% or 90%). This helps ensure that the study has a reasonable chance of detecting a meaningful effect if one exists. Low power can lead to false negative results (Type II errors), where the study fails to detect a real effect, leading to potentially missed discoveries.

For example, a clinical trial testing a new drug might conduct a power analysis to determine how many participants are needed to detect a clinically meaningful improvement in patient outcomes with a sufficient level of confidence.
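Each factor listed above enters the usual normal-approximation power formula. The sketch assumes a two-sample comparison of means with a common, known standard deviation. The difference of 5 units, the SD of 10, and the 64 participants per group are illustrative numbers, not taken from a real trial.

```python
from statistics import NormalDist


def power_two_sample(delta, sigma, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test for a difference in means.

    delta: true difference in means; sigma: common SD within groups."""
    nd = NormalDist()
    z_crit = nd.inv_cdf(1 - alpha / 2)
    shift = delta / (sigma * (2 / n_per_group) ** 0.5)  # expected z statistic under H1
    return nd.cdf(shift - z_crit) + nd.cdf(-shift - z_crit)


baseline = power_two_sample(delta=5, sigma=10, n_per_group=64)
print(f"baseline:            {baseline:.2f}")
print(f"larger effect:       {power_two_sample(8, 10, 64):.2f}")
print(f"larger sample:       {power_two_sample(5, 10, 100):.2f}")
print(f"stricter alpha=0.01: {power_two_sample(5, 10, 64, alpha=0.01):.2f}")
print(f"more variability:    {power_two_sample(5, 15, 64):.2f}")
```

Changing one input at a time reproduces the qualitative statements above. Power rises with the effect size and the sample size, and it falls with a stricter α or noisier data.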

Q 22. What is your experience with A/B testing?

A/B testing, also known as split testing, is a randomized experiment where two or more versions of a variable (e.g., a webpage, an email, an advertisement) are shown to different groups of users to determine which version performs better based on a pre-defined metric (e.g., click-through rate, conversion rate). It’s a cornerstone of data-driven decision-making.

My experience with A/B testing spans various domains, from optimizing website conversion funnels to improving email marketing campaigns. I've designed and implemented A/B tests using tools like Optimizely and Google Optimize, paying close attention to potential sources of bias. For example, in a recent project, we tested two versions of a product landing page: Version A used a more concise headline, while Version B emphasized product features. Users were randomly assigned to version A or B, so apart from chance the two groups differed only in the page they saw. The difference in conversion rates could therefore be attributed to the page design. Version B produced a statistically significant increase in conversions, guiding us toward a more effective design. Beyond simple A/B tests, I have experience with multivariate testing, which involves testing multiple variables simultaneously to identify optimal combinations.

A crucial aspect of successful A/B testing is ensuring sufficient sample size to detect statistically significant differences, correctly interpreting p-values and confidence intervals, and understanding the limitations of the methodology. This ensures results are reliable and actionable.
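For a conversion-rate metric, the significance test and confidence interval mentioned above are commonly computed with a two-proportion z-test. The counts below are hypothetical and are not the results of the landing-page project. The Wald interval used for the lift is a simple large-sample approximation.

```python
from statistics import NormalDist


def two_proportion_test(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Two-sided z-test for a difference in conversion rates, plus a Wald CI for p_b - p_a."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5  # SE under H0: p_a == p_b
    z = (p_b - p_a) / se_pooled
    p_value = 2 * NormalDist().cdf(-abs(z))
    se_diff = (p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b) ** 0.5  # unpooled SE for the CI
    crit = NormalDist().inv_cdf(1 - alpha / 2)
    diff = p_b - p_a
    return z, p_value, (diff - crit * se_diff, diff + crit * se_diff)


# Hypothetical counts: 200 of 5,000 visitors converted on A, 250 of 5,000 on B.
z, p, ci = two_proportion_test(200, 5_000, 250, 5_000)
print(f"z = {z:.2f}, p = {p:.4f}, 95% CI for lift: [{ci[0]:.4f}, {ci[1]:.4f}]")
```

This calculation assumes the sample size was fixed in advance. Repeatedly checking the results and stopping as soon as p dips below 0.05 inflates the Type I error rate.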

Q 23. How do you evaluate the performance of a statistical model?

Evaluating the performance of a statistical model involves a multifaceted approach, going beyond simple accuracy metrics. It requires a deep understanding of the model’s purpose, the data used, and the context of the problem.

- Goodness of Fit: For models predicting continuous outcomes (like regression models), metrics like R-squared or adjusted R-squared assess how well the model fits the data. Lower values indicate a poorer fit. For classification models, metrics like accuracy, precision, recall, F1-score, and AUC-ROC (Area Under the Receiver Operating Characteristic Curve) are used to evaluate performance.

- Model Complexity vs. Generalization: Overfitting occurs when a model performs exceptionally well on training data but poorly on unseen data. Techniques like cross-validation help assess the model’s ability to generalize to new data. Regularization techniques such as L1 and L2 penalties can reduce overfitting.

- Residual Analysis: For regression models, analyzing residuals (differences between observed and predicted values) is crucial. Patterns or trends in residuals suggest model misspecification or violations of assumptions.

- Feature Importance: Understanding which features contribute most to the model’s predictions is essential for interpretability and insights. Techniques like SHAP (SHapley Additive exPlanations) values or variable importance plots from tree-based models can be used.

- Business Context: Ultimately, the best model is the one that solves the business problem effectively. While high accuracy is desirable, it must be balanced against other factors such as interpretability, computational cost, and ease of deployment. For example, a simpler model with slightly lower accuracy might be preferred over a complex model if it is easier to explain to stakeholders or requires less computational power.
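To make the goodness-of-fit metrics above concrete, the sketch below computes R-squared, adjusted R-squared, and precision, recall, and F1 from scratch in plain Python. The function names are hypothetical; in practice, libraries such as scikit-learn provide equivalents (for example `sklearn.metrics.r2_score` and `sklearn.metrics.precision_recall_fscore_support`). To reflect generalization rather than fit to the training data, these metrics are best computed on held-out data or within cross-validation folds.

```python
def r_squared(y_true, y_pred):
    """Proportion of variance in y_true explained by the predictions."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((y - yhat) ** 2 for y, yhat in zip(y_true, y_pred))
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n, k):
    """Penalize R-squared for k predictors fitted on n observations."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def precision_recall_f1(y_true, y_pred, positive=1):
    """Binary classification metrics computed from confusion-matrix counts."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical labels and predictions for a small binary classifier.
print(precision_recall_f1([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]))
```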

Q 24. What is your experience with time series analysis?

Time series analysis is a specialized field focusing on data points indexed in time order. My experience involves analyzing various time series data, including financial market data, website traffic, sensor readings, and sales figures. I’m proficient in various techniques for analyzing and forecasting such data.

I’ve worked extensively with techniques like:

- ARIMA modeling: Autoregressive Integrated Moving Average models are used to model and forecast time series that are stationary or can be made stationary by differencing (the integrated, I, component). This involves identifying the order of the autoregressive (AR), integrated (I), and moving average (MA) components. I’ve utilized software like R or Python’s statsmodels library for ARIMA modeling and diagnostics.

- Exponential Smoothing methods: These methods assign exponentially decreasing weights to older observations. Simple Exponential Smoothing suits series without a clear trend or seasonality, Holt’s linear trend method adds a trend component, and the Holt-Winters method adds seasonality as well, which makes the family suitable for forecasting non-stationary series with trends or seasonality.

- Decomposition: This involves separating a time series into its trend, seasonal, and residual components. This helps understand the underlying patterns driving the data. Seasonal adjustment is often used to remove seasonal effects before forecasting.

- Prophet (from Meta): This is a robust and user-friendly tool particularly effective for time series data with strong seasonality and trend components.
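The exponential smoothing recursions are short enough to write out directly. The sketch below implements Simple Exponential Smoothing and Holt’s linear trend method in plain Python. The function names and the naive initialization (first value as the level, first difference as the trend) are assumptions made for illustration; statsmodels offers fitted versions with optimized smoothing parameters (for example `ExponentialSmoothing` in `statsmodels.tsa.holtwinters`).

```python
def simple_exponential_smoothing(series, alpha):
    """One-step-ahead forecast for a series without trend or seasonality."""
    level = series[0]
    for y in series[1:]:
        # Blend the new observation with the previous level.
        level = alpha * y + (1 - alpha) * level
    return level


def holt_linear(series, alpha, beta, horizon):
    """Holt's linear trend method: smooth a level and a trend component.

    Requires at least two observations for the naive trend initialization.
    """
    level = series[0]
    trend = series[1] - series[0]  # naive initial trend estimate
    for y in series[1:]:
        prev_level = level
        level = alpha * y + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    # Extrapolate the final level along the final trend.
    return [level + h * trend for h in range(1, horizon + 1)]


# Hypothetical, perfectly linear monthly sales.
print(holt_linear([10, 12, 14, 16, 18], alpha=0.5, beta=0.5, horizon=3))
# prints [20.0, 22.0, 24.0]
```

Simple Exponential Smoothing would forecast a flat line for the same data, which is why Holt’s method is the better choice once a trend is present.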

For example, I helped a client forecast their monthly sales using a combination of ARIMA modeling and Prophet, considering past sales data and seasonal trends to optimize inventory management and staffing needs. Correctly identifying seasonality and trend is crucial for accurate forecasts.

Q 25. Explain different methods of hypothesis testing.

Hypothesis testing is a fundamental statistical method used to make inferences about a population based on sample data. It involves formulating a null hypothesis (H0), which is a statement of no effect, and an alternative hypothesis (H1), which is a statement contradicting the null hypothesis. The goal is to determine whether there is enough evidence to reject the null hypothesis in favor of the alternative.

Several methods exist, categorized based on the type of data and research question:

- t-tests: Compare the means of two groups. Independent samples t-test is used for independent groups, while paired samples t-test is used for related samples (e.g., before-and-after measurements).

- ANOVA (Analysis of Variance): Compares the means of three or more groups. One-way ANOVA is used for one independent variable (factor), while two-way ANOVA is used for two independent variables and can also test their interaction; designs with more factors use factorial (multi-way) ANOVA.

- Chi-square test: Tests for the association between two categorical variables. For example, it can determine if there is a relationship between gender and preference for a product.

- Z-tests: Used when the population standard deviation is known, or when samples are large enough for the normal approximation to hold. They can test for differences in means or proportions.

- Non-parametric tests: Used when the assumptions of parametric tests (like normality) are not met. Examples include the Mann-Whitney U test (for comparing two independent groups) and the Wilcoxon signed-rank test (for comparing two related groups).

The choice of test depends on the data type, the number of groups being compared, and the assumptions being made about the data. The p-value obtained from the test is compared to a pre-defined significance level (alpha, typically 0.05), and the null hypothesis is rejected if the p-value is less than alpha.
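As an example of one of these tests, the sketch below computes the independent samples t statistic (Student’s version, which assumes equal variances) in plain Python and returns its degrees of freedom. The function name and the sample data are hypothetical. The standard library has no t distribution, so the sketch stops at the statistic; `scipy.stats.ttest_ind` returns the p-value as well, and its `equal_var=False` option switches to Welch’s t-test when the equal-variance assumption is doubtful.

```python
from math import sqrt
from statistics import mean, variance


def independent_t_statistic(a, b):
    """Student's t for two independent samples, assuming equal variances.

    Returns (t, degrees_of_freedom).
    """
    n_a, n_b = len(a), len(b)
    # Pooled variance: weighted average of the two sample variances (n - 1).
    pooled_var = ((n_a - 1) * variance(a) + (n_b - 1) * variance(b)) / (n_a + n_b - 2)
    se = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean(a) - mean(b)) / se
    return t, n_a + n_b - 2


# Hypothetical measurements from two independent groups.
t, df = independent_t_statistic([1, 2, 3], [4, 5, 6])
print(f"t = {t:.3f}, df = {df}")
```

Here |t| is about 3.67 with 4 degrees of freedom, which exceeds the two-sided 5% critical value of roughly 2.776, so the null hypothesis of equal means would be rejected at alpha = 0.05.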

Q 26. Describe your familiarity with Bayesian statistics.

Bayesian statistics provides a powerful framework for statistical inference by incorporating prior knowledge or beliefs into the analysis. Unlike frequentist methods, which focus solely on sample data, Bayesian methods update prior beliefs based on observed data to generate a posterior distribution representing our updated belief about the parameters of interest.

My familiarity with Bayesian statistics includes using Markov Chain Monte Carlo (MCMC) methods, such as Gibbs sampling and Metropolis-Hastings algorithms, to estimate posterior distributions. I’ve worked with software like Stan and PyMC3 to implement Bayesian models.

For instance, in a clinical trial setting, I might have prior knowledge about the effectiveness of a treatment based on previous research. This prior information can be incorporated into a Bayesian model to improve the precision and reliability of the results. Bayesian methods are particularly useful when dealing with small sample sizes or when prior knowledge is readily available, offering a more nuanced and comprehensive approach to inference. The posterior distribution provides a complete picture of uncertainty, unlike frequentist p-values.
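The sketch below illustrates the MCMC idea with a random-walk Metropolis-Hastings sampler in plain Python instead of Stan or PyMC3. It estimates a treatment response rate under a Beta prior and a binomial likelihood. This conjugate setup is deliberately chosen so that the result can be checked against the exact posterior, Beta(a + successes, b + failures). The function names, the Beta(2, 2) prior, and the trial counts are hypothetical assumptions for illustration.

```python
import math
import random


def log_posterior(theta, successes, trials, a, b):
    """Unnormalized log posterior: Beta(a, b) prior times binomial likelihood."""
    if not 0.0 < theta < 1.0:
        return -math.inf  # zero posterior density outside (0, 1)
    return ((a - 1 + successes) * math.log(theta)
            + (b - 1 + trials - successes) * math.log(1.0 - theta))


def metropolis_hastings(successes, trials, a=2.0, b=2.0,
                        n_samples=20000, burn_in=2000, step=0.1, seed=0):
    """Random-walk Metropolis-Hastings sampler for a success probability."""
    rng = random.Random(seed)
    theta = 0.5
    current = log_posterior(theta, successes, trials, a, b)
    samples = []
    for i in range(n_samples):
        proposal = theta + rng.gauss(0.0, step)  # symmetric Gaussian proposal
        candidate = log_posterior(proposal, successes, trials, a, b)
        # Accept with probability min(1, posterior ratio).
        if rng.random() < math.exp(min(0.0, candidate - current)):
            theta, current = proposal, candidate
        if i >= burn_in:  # discard early draws taken before convergence
            samples.append(theta)
    return samples


# Hypothetical trial: 14 responders out of 20 patients, Beta(2, 2) prior.
draws = sorted(metropolis_hastings(14, 20))
print(sum(draws) / len(draws))  # should be close to the exact mean 16/24
print(draws[int(0.025 * len(draws))], draws[int(0.975 * len(draws))])  # ~95% interval
```

The sorted draws give a credible interval directly, which is the kind of full uncertainty summary the posterior distribution provides.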

Q 27. How do you choose the appropriate statistical test for a given research question?

Choosing the appropriate statistical test is crucial for valid and reliable research. The selection depends on several key factors:

- Research Question: What are you trying to find out? Are you comparing means, proportions, associations, or predicting an outcome?

- Data Type: What kind of data do you have? Is it continuous (e.g., height, weight), categorical (e.g., gender, color), or ordinal (e.g., Likert scale ratings)?

- Number of Groups/Variables: Are you comparing two groups, multiple groups, or examining the relationship between two or more variables?

- Assumptions of the Tests: Many tests make assumptions about the data (e.g., normality, independence). Are these assumptions met? If not, non-parametric tests might be necessary.

A flowchart or decision tree can help systematically navigate these factors. For example:

- Comparing two independent groups on a continuous outcome: Independent samples t-test (if data is approximately normally distributed) or Mann-Whitney U test (a rank-based alternative if data is not normally distributed).

- Comparing three or more independent groups on a continuous outcome: One-way ANOVA (if data is approximately normally distributed) or Kruskal-Wallis test (a rank-based alternative if data is not normally distributed).

- Analyzing the association between two categorical variables: Chi-square test of independence.

- Predicting a continuous outcome based on one or more predictors: Linear regression (if assumptions are met) or other regression techniques.
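The decision rules listed above can be encoded as a small rule-based function, which is a handy way to rehearse them before an interview. The sketch below is hypothetical: the function name and its string arguments are assumptions, it adds the paired-sample cases from the hypothesis-testing answer, and it deliberately raises an error for combinations it does not cover rather than guessing.

```python
def suggest_test(goal, outcome_type, n_groups=2, independent=True, normal=True):
    """Hypothetical helper mirroring the test-selection rules in this answer.

    goal: "compare", "association", or "predict"
    outcome_type: "continuous" or "categorical"
    """
    if goal == "association" and outcome_type == "categorical":
        return "Chi-square test of independence"
    if goal == "predict" and outcome_type == "continuous":
        return "Linear regression"
    if goal == "compare" and outcome_type == "continuous":
        if n_groups == 2 and independent:
            return "Independent samples t-test" if normal else "Mann-Whitney U test"
        if n_groups == 2:
            return "Paired samples t-test" if normal else "Wilcoxon signed-rank test"
        if n_groups >= 3 and independent:
            return "One-way ANOVA" if normal else "Kruskal-Wallis test"
    raise ValueError("combination not covered by this sketch")


print(suggest_test("compare", "continuous", n_groups=3, normal=False))
# prints Kruskal-Wallis test
```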

Incorrectly chosen tests can lead to misleading results. Consulting statistical literature and seeking expert advice when needed is critical to ensuring the correct test is selected and interpreted properly.

Key Topics to Learn for Research Methods and Statistical Analysis Interview

- Research Design: Understanding different research designs (experimental, quasi-experimental, correlational, qualitative) and their strengths and weaknesses. Knowing when to apply each design to different research questions is crucial.

- Data Collection Methods: Familiarity with various data collection techniques, including surveys, interviews, observations, and experiments. Be prepared to discuss the advantages and limitations of each method and how to ensure data quality.

- Descriptive Statistics: Mastering measures of central tendency (mean, median, mode), variability (standard deviation, variance), and distribution shape (skewness, kurtosis). Understand how to interpret and present descriptive statistics effectively.

- Inferential Statistics: A strong grasp of hypothesis testing, confidence intervals, and p-values. Be prepared to discuss different statistical tests (t-tests, ANOVA, regression analysis) and their appropriate applications.

- Regression Analysis: Understanding linear and multiple regression, including model building, interpretation of coefficients, and assessing model fit. Be ready to discuss assumptions and limitations of regression analysis.

- Data Visualization: Ability to effectively communicate findings through various data visualization techniques, including graphs, charts, and tables. Choosing the right visualization for the data is key.

- Ethical Considerations in Research: Understanding research ethics, including informed consent, confidentiality, and data integrity. This is vital for demonstrating responsible research practices.

- Interpreting and Communicating Results: Clearly and concisely explaining complex statistical findings to both technical and non-technical audiences. This showcases strong communication skills.

- Bias and Limitations: Critically evaluating research findings, identifying potential sources of bias, and understanding the limitations of different methods and analyses.
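For practicing the descriptive statistics topic, the sketch below gathers the listed measures in one place using Python’s standard `statistics` module. The helper name `describe` is hypothetical. Skewness and excess kurtosis are computed from population (biased) moments; statistical packages often apply small-sample corrections, so their values can differ slightly.

```python
import statistics


def describe(data):
    """Central tendency, spread, and moment-based shape measures.

    Requires at least two distinct values (skewness divides by the variance).
    """
    n = len(data)
    mean = statistics.mean(data)
    # Population central moments, used for the shape measures below.
    m2 = sum((x - mean) ** 2 for x in data) / n
    m3 = sum((x - mean) ** 3 for x in data) / n
    m4 = sum((x - mean) ** 4 for x in data) / n
    return {
        "mean": mean,
        "median": statistics.median(data),
        "mode": statistics.mode(data),
        "variance": statistics.variance(data),  # sample variance (n - 1)
        "stdev": statistics.stdev(data),        # sample standard deviation
        "skewness": m3 / m2 ** 1.5,             # 0 for symmetric data
        "excess_kurtosis": m4 / m2 ** 2 - 3,    # 0 for a normal distribution
    }


print(describe([1, 2, 2, 3]))
```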

Next Steps

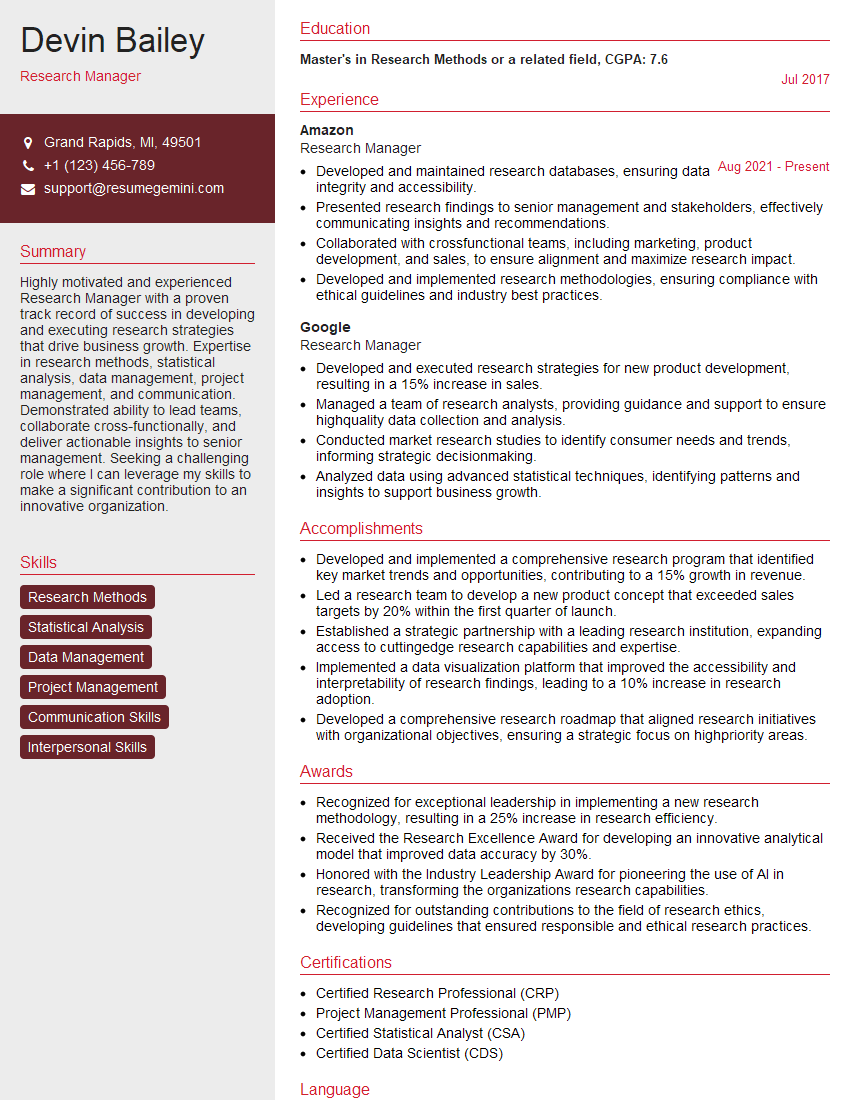

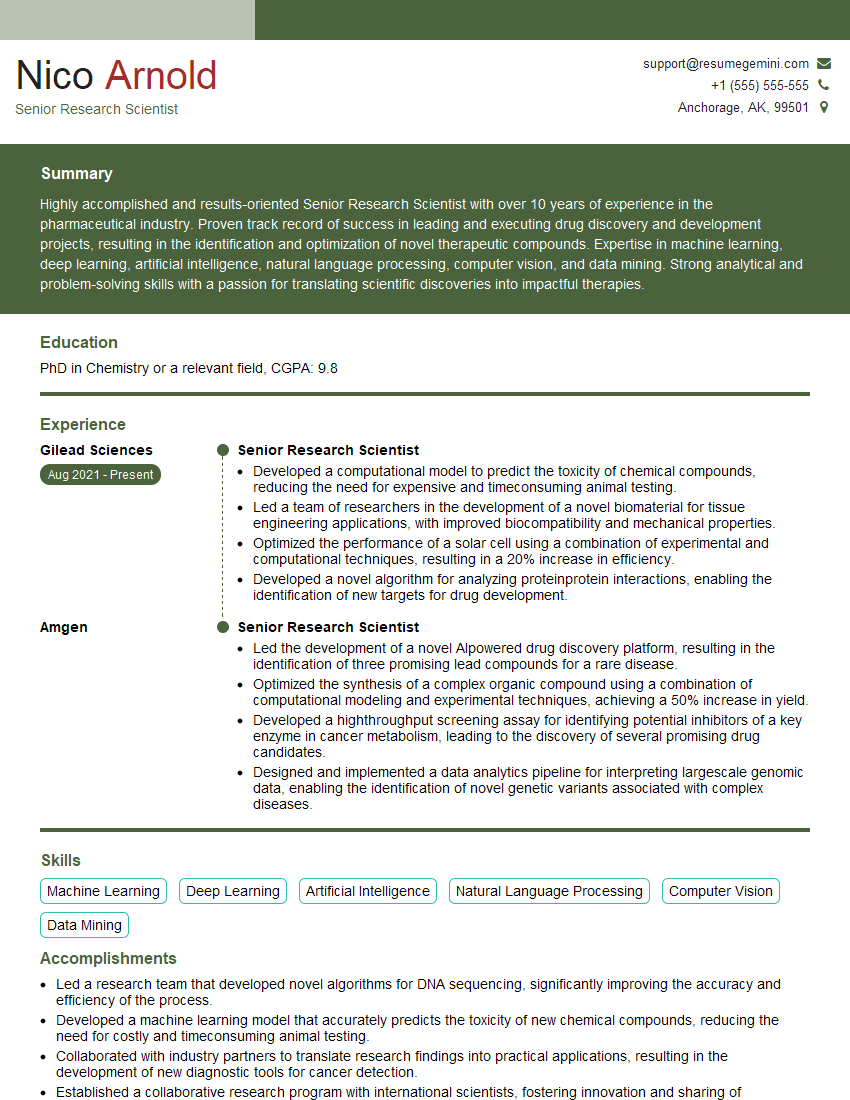

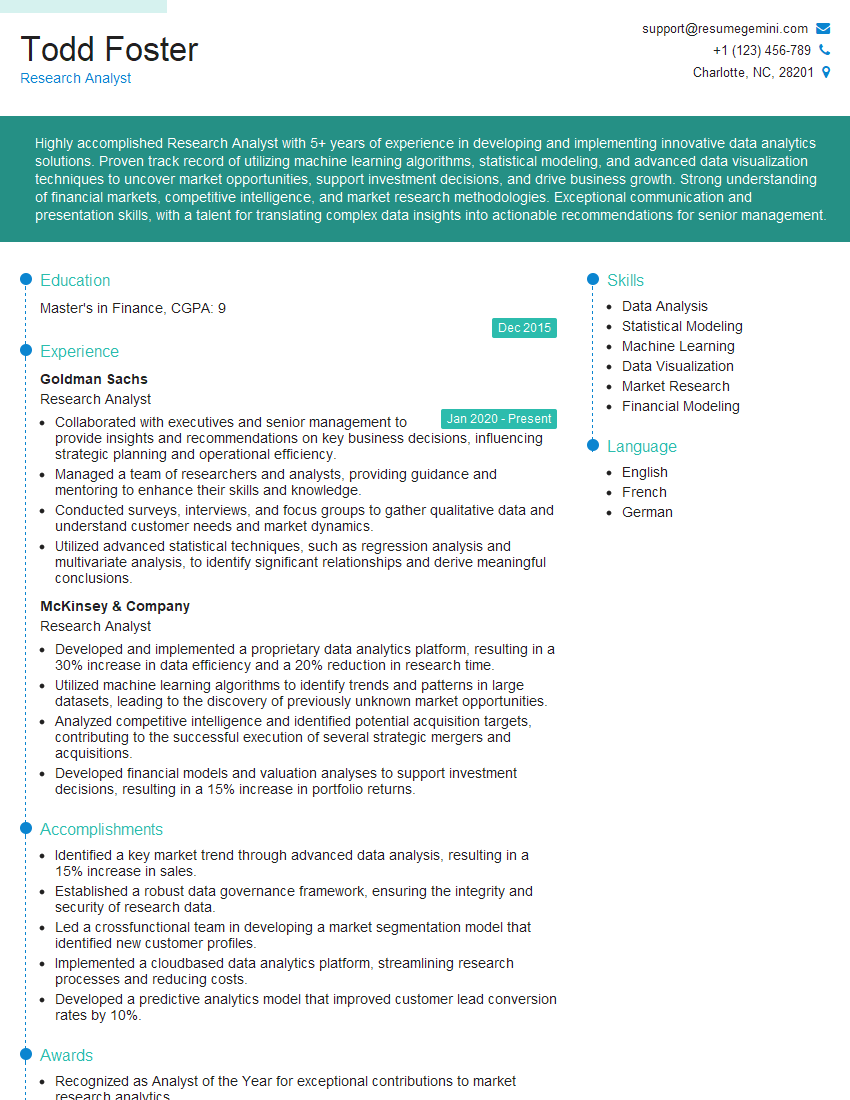

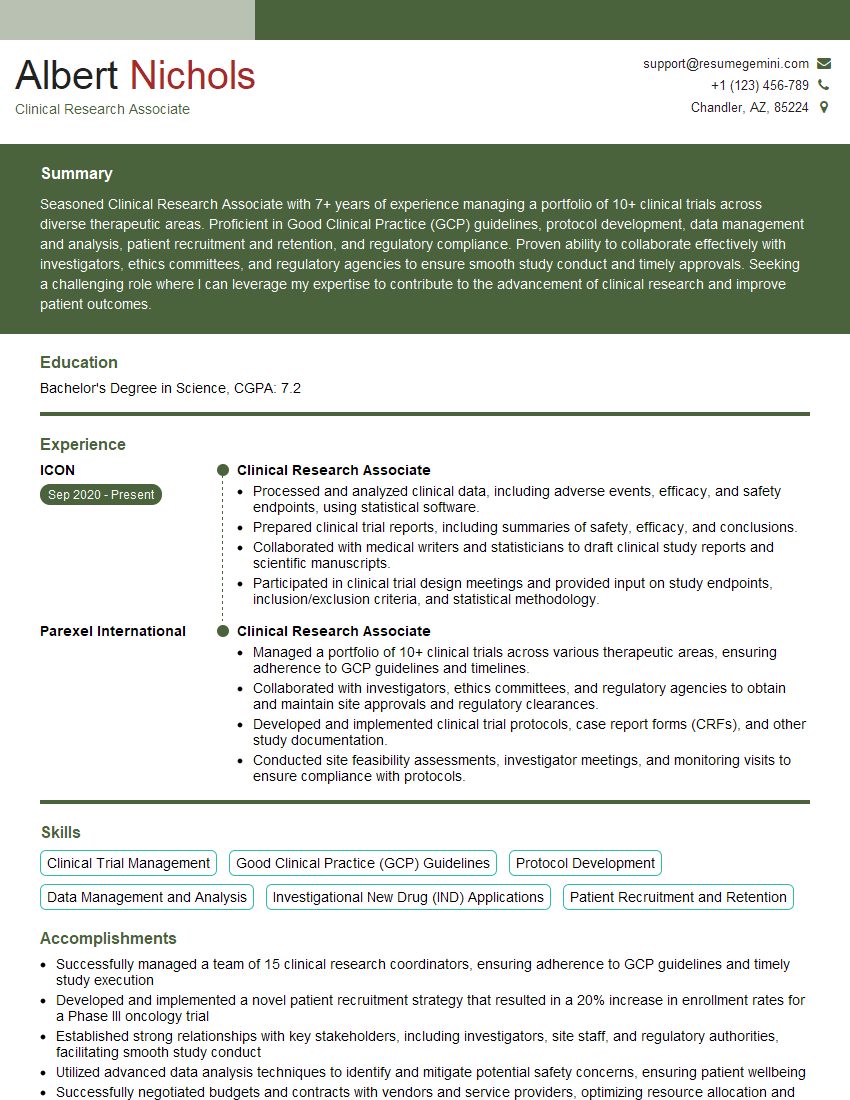

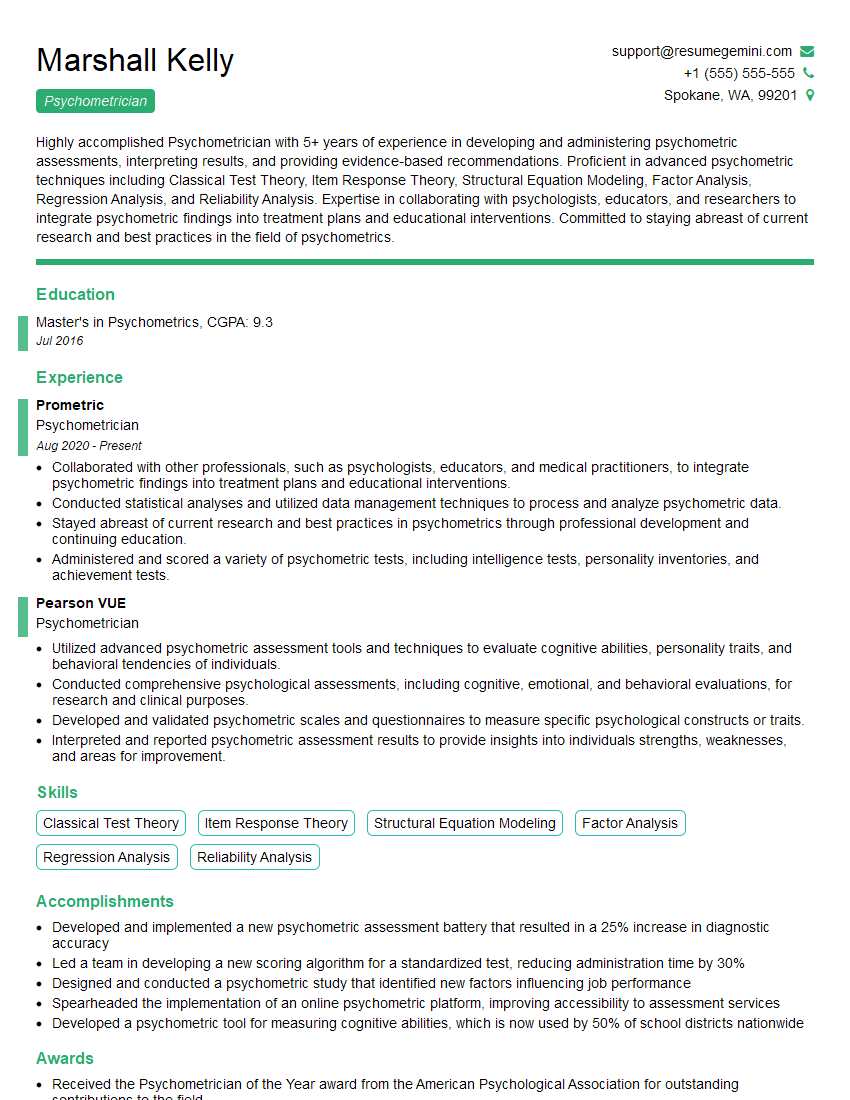

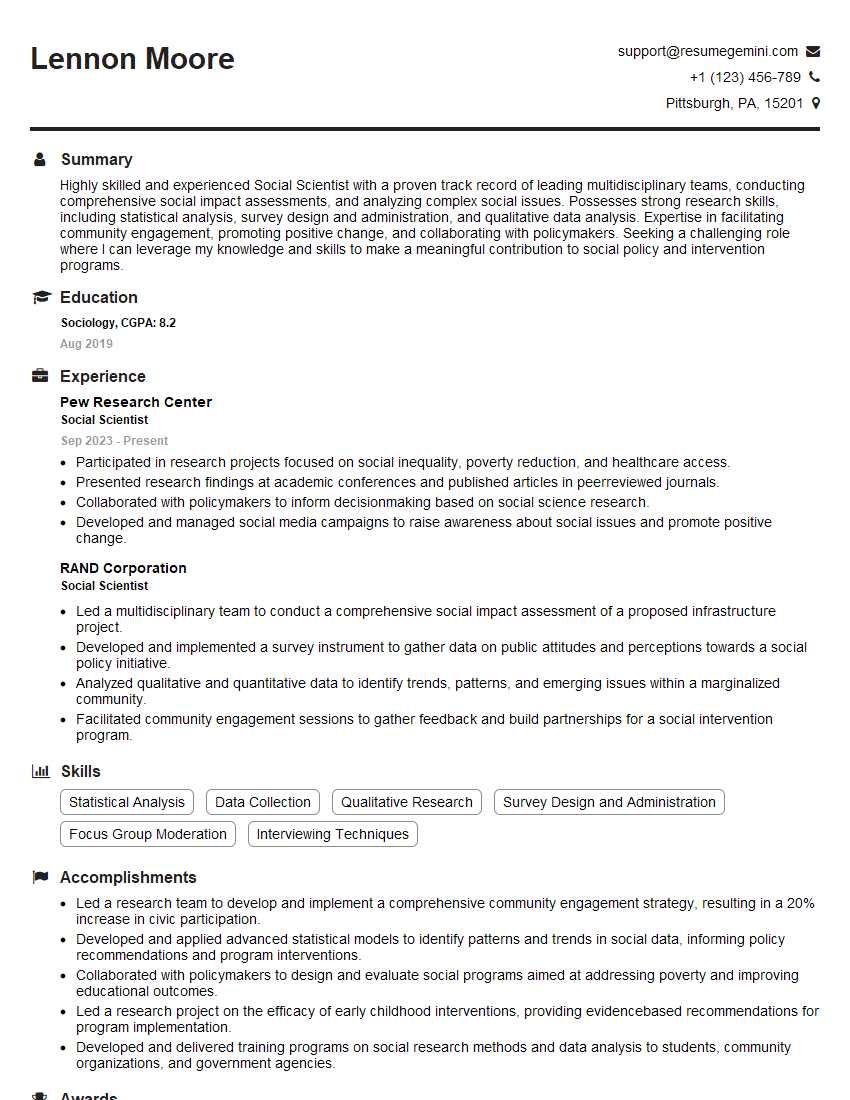

Mastering Research Methods and Statistical Analysis significantly enhances your career prospects in various fields, opening doors to exciting opportunities and higher earning potential. A strong foundation in these areas demonstrates valuable analytical skills highly sought after by employers. To maximize your job search success, focus on building an ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource for creating compelling and impactful resumes, and we provide examples of resumes tailored to Research Methods and Statistical Analysis to help guide you. Invest in your professional presentation – it’s an investment in your future.
