The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Seismic Velocity Model Building interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Seismic Velocity Model Building Interview

Q 1. Explain the different methods for building seismic velocity models.

Building accurate seismic velocity models is crucial for proper seismic imaging and interpretation. Several methods exist, each with its strengths and weaknesses, and often a combination is used. They range from velocity analysis on the seismic data itself, through integration of well data, to inversion-based approaches such as tomography and full-waveform inversion.

- Velocity Analysis from Seismic Data: This involves analyzing the travel times of seismic reflections to estimate subsurface velocities. Common techniques include:

- Normal Moveout (NMO) velocity analysis: This is a fundamental method that analyzes the relationship between reflection travel times and offset (distance between source and receiver). It’s often the first step in velocity analysis and provides a relatively smooth, average velocity profile.

- Velocity spectrum analysis: This method computes a coherence measure (typically semblance) over a range of trial velocities at each zero-offset time and displays the result as a panel. The interpreter then picks the velocity that best flattens each reflection event.

- Constant velocity stacks: This involves stacking seismic traces after applying a constant velocity to correct for moveout. By comparing the quality of the stacked sections for different velocities, the best-fitting velocity model can be found.

- Well Log Data Integration: This involves incorporating direct velocity measurements from wells (sonic logs, checkshot surveys, vertical seismic profiles) into the seismic velocity model. This provides crucial ground truth information for calibration and validation of the seismic-derived velocities.

- Tomography: This is an advanced method that uses travel times from numerous seismic events to create a 3D velocity model. It’s computationally intensive but can handle complex velocity variations.

- Full-waveform inversion (FWI): This is a sophisticated technique that inverts the entire seismic waveform to create a high-resolution velocity model. While it yields detailed models, it’s also highly computationally expensive and requires considerable expertise.

The choice of method depends on factors like data quality, geological complexity, and computational resources available.
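As a minimal sketch of the NMO and velocity spectrum ideas above, the following scans trial velocities along the hyperbola t(x)² = t0² + x²/v² on a synthetic CMP gather and keeps the one with the highest semblance. The gather, the function names, and every parameter value are illustrative assumptions, not taken from any particular processing package.

```python
import numpy as np

def nmo_time(t0, offset, v):
    """Hyperbolic two-way reflection time for zero-offset time t0 (s),
    source-receiver offset (m) and stacking velocity v (m/s)."""
    return np.sqrt(t0**2 + (offset / v) ** 2)

def semblance_scan(gather, offsets, dt, t0, trial_velocities, half_window=2):
    """Semblance along the NMO hyperbola at t0 for each trial velocity.
    gather has shape (n_samples, n_traces)."""
    n_samples, n_traces = gather.shape
    traces = np.arange(n_traces)
    scores = []
    for v in trial_velocities:
        idx = np.rint(nmo_time(t0, offsets, v) / dt).astype(int)
        num = den = 0.0
        for shift in range(-half_window, half_window + 1):
            k = np.clip(idx + shift, 0, n_samples - 1)
            amps = gather[k, traces]          # amplitudes along the trial hyperbola
            num += amps.sum() ** 2            # energy of the stacked trace
            den += (amps ** 2).sum()          # total energy of the input traces
        scores.append(num / (n_traces * den) if den > 0 else 0.0)
    return np.array(scores)

# Hypothetical synthetic gather: one reflection at t0 = 1.0 s, true velocity 2500 m/s
dt = 0.004
offsets = np.arange(0.0, 3000.0, 100.0)
gather = np.zeros((751, offsets.size))
true_idx = np.rint(nmo_time(1.0, offsets, 2500.0) / dt).astype(int)
gather[true_idx, np.arange(offsets.size)] = 1.0

trial_velocities = np.arange(1500.0, 4001.0, 100.0)
scores = semblance_scan(gather, offsets, dt, 1.0, trial_velocities)
best_velocity = trial_velocities[np.argmax(scores)]
```

On this noise-free gather the semblance peaks at the true velocity. On real data the peak is broader and the pick needs interpretation.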

Q 2. Describe the role of well velocity data in velocity model building.

Well velocity data plays a critical role in building reliable seismic velocity models. It provides direct measurements of subsurface velocity, acting as a ‘ground truth’ against which the seismic-derived velocities can be checked and calibrated.

Sonic logs, in particular, measure the interval transit time (slowness) of sound waves through the formation. Its reciprocal gives the interval velocity, and integrating the transit time over depth yields a time-depth relationship, usually calibrated with checkshot data. This directly observed velocity information helps to constrain the velocity model, improve its accuracy, and correct inconsistencies in velocities derived purely from seismic data.

For instance, if seismic velocity analysis suggests a certain velocity, but the corresponding well log shows a significantly different value, we can adjust the seismic model to better match the well log’s accurate velocity measurements. This process helps to minimize errors and improves the overall reliability of the velocity model, leading to more accurate seismic imaging.
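The conversion from a sonic log to interval velocities and a time-depth curve can be sketched as follows. This is a simplified illustration: it assumes the log is given in microseconds per metre (field logs are often in microseconds per foot) and that the two-way time at the top of the log, here `t_start_s`, is known from a hypothetical checkshot tie.

```python
import numpy as np

def sonic_to_time_depth(depth_m, slowness_us_per_m, t_start_s=0.0):
    """Convert a sonic log into interval velocity and a two-way time-depth curve.

    depth_m: sample depths in metres, increasing.
    slowness_us_per_m: interval transit time at each sample (microseconds/metre).
    t_start_s: two-way time at the first sample (assumed known, e.g. from a checkshot).
    """
    depth_m = np.asarray(depth_m, dtype=float)
    slowness = np.asarray(slowness_us_per_m, dtype=float) * 1e-6  # s/m
    velocity = 1.0 / slowness                                     # m/s
    dz = np.diff(depth_m)
    # One-way time across each depth step, using the mean slowness of its two samples
    mean_slowness = 0.5 * (slowness[:-1] + slowness[1:])
    one_way = np.concatenate(([0.0], np.cumsum(mean_slowness * dz)))
    twt = t_start_s + 2.0 * one_way
    return velocity, twt

# Hypothetical three-sample log
velocity, twt = sonic_to_time_depth([1000.0, 1100.0, 1200.0],
                                    [400.0, 400.0, 250.0],
                                    t_start_s=0.8)
```

Comparing `twt` at known markers with the seismic times of the same horizons is one simple way to spot where the seismic-derived velocities need calibration.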

Q 3. How do you handle velocity anomalies in seismic data?

Velocity anomalies, representing areas of unexpectedly high or low seismic velocity, are common in seismic data and often indicate complex geological features such as faults, salt domes, or changes in lithology. Handling these anomalies requires careful consideration and often involves iterative model building.

- Identification: Velocity anomalies often show up as inconsistencies between different velocity analysis methods or deviations from regional trends.

- Investigation: If the anomaly is supported by geological or well log data, it should be incorporated into the model. For instance, if a salt dome is known to exist, a high-velocity body should be incorporated into the velocity model at that location, since salt is typically much faster than the surrounding sediments.

- Refinement: If the anomaly is not supported by other data and is deemed to be an artifact, it can be smoothed or modified. This often involves iterative processes, comparing the improved seismic image with the original.

- Local Model adjustments: Complex anomalies might require locally refined velocity models within the area of the anomaly, tying the local changes to the regional model.

The key is to balance the need to accurately represent the true subsurface velocity with the avoidance of overfitting the data to spurious noise. A thorough understanding of the geological setting and careful interpretation of the data are crucial for effectively handling velocity anomalies.

Q 4. What are the common challenges in building velocity models for complex geological settings?

Building accurate velocity models in complex geological settings presents numerous challenges. These areas often exhibit significant lateral and vertical variations in velocity, making it difficult to obtain a reliable representation of the subsurface.

- Rapid velocity changes: Sharp changes in lithology or the presence of faults can cause abrupt velocity variations, making it difficult for standard velocity analysis techniques to accurately capture the velocity structure.

- Multiple reflections and interference: Complex geology can lead to multiple reflections and interference effects, making it harder to isolate primary reflections for velocity analysis.

- Limited well control: The sparse distribution of wells in some areas can limit the ability to calibrate and constrain the seismic velocity model.

- Difficulties in velocity analysis algorithms: Standard velocity analysis techniques can struggle to resolve velocity variations in complex settings. More advanced techniques like tomography or FWI may be needed, but they are computationally expensive and require significant expertise.

- High data noise levels: Noise can affect the accuracy of seismic velocity analysis, especially in areas with complex geology. Careful pre-processing and advanced noise-attenuation techniques might be necessary.

In such settings, integrating various data sources (seismic, well logs, geological information) and employing advanced velocity modeling techniques are essential for building a reliable velocity model.

Q 5. Discuss the impact of inaccurate velocity models on seismic imaging.

Inaccurate velocity models have a significant impact on seismic imaging, leading to various artifacts and misinterpretations. The consequence of a poorly constructed model can range from minor inaccuracies in reflection positioning to major misinterpretations of the subsurface structure.

- Incorrect positioning of reflectors: Errors in velocity lead to incorrect depth conversion, misplacing the reflections and distorting the geological structures. This can affect the interpretation of fault locations, stratigraphic horizons, and reservoir boundaries.

- Distorted amplitudes: Velocity errors can lead to inaccurate amplitude recovery, which is crucial for reservoir characterization. This can lead to incorrect estimation of reservoir properties, such as porosity and saturation.

- Poor image resolution: Inaccurate velocity models can reduce the resolution of seismic images, obscuring subtle geological features. This makes it difficult to detect small faults or thin layers which may have hydrocarbon implications.

- Difficulties in interpretation: The resulting image can be complex to interpret, leading to possible errors in identifying geological structures and formations.

Therefore, creating an accurate velocity model is a critical step to ensure the success of seismic imaging projects.

Q 6. Explain the difference between tomography and other velocity analysis methods.

Tomography is a powerful 3D velocity analysis method distinct from simpler techniques like NMO velocity analysis. NMO analysis fits a hyperbolic moveout curve to reflections in common midpoint (CMP) gathers, one location at a time, and assumes roughly flat layering with mild lateral velocity variation. Tomography instead uses travel-time information from a multitude of raypaths crossing the model in different directions to build a more comprehensive and detailed velocity model.

In simpler terms, imagine trying to map the depth of a lake using only a few soundings. NMO velocity analysis is like taking these limited soundings, whereas tomography is like using a vast network of sonar readings to create a detailed bathymetric map. This approach allows tomography to resolve complex velocity variations that might be missed by simpler methods. It creates a 3D grid and uses travel-time information from many seismic traces to simultaneously solve for velocities at each grid node. This iterative approach allows for the construction of higher-resolution models in complex settings. Other simpler methods tend to give smoothed velocity fields; tomography offers a significant increase in resolution.

Q 7. How do you validate a seismic velocity model?

Validating a seismic velocity model is a crucial step to ensure its accuracy and reliability. This process involves comparing the model’s predictions with independent data sources and assessing the quality of the resulting seismic images.

- Comparison with well log data: The most direct validation method is to compare the velocities from the seismic model to the interval velocities derived from well logs at the well locations. Discrepancies could point to areas requiring further model refinement.

- Checking for consistency across different velocity analysis methods: If different velocity analysis methods (e.g., NMO and tomography) yield significantly different results, it may indicate a problem with the data or the model. This should trigger investigation into potential issues.

- Assessment of seismic image quality: A well-built velocity model should produce high-quality seismic images with well-defined and continuous reflections. Poor image quality, including artifacts and smearing, can be indicative of errors in the velocity model.

- Comparison with geological information: The velocity model should be consistent with existing geological knowledge and interpretations. Major discrepancies might hint at inaccuracies in the model, prompting re-evaluation and potential revision of the model.

- Residual moveout analysis: After applying the velocity model to correct moveout, analyzing the remaining moveout (residual moveout) can identify areas where the model needs improvement.

Through this comprehensive validation process, we can ensure the reliability and accuracy of the seismic velocity model, leading to more confident interpretations and improved decision-making in exploration and production projects.
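To illustrate the residual moveout check, here is a small sketch for a single flat reflector. It assumes purely hyperbolic moveout and uses hypothetical velocities. The sign of the residual shows the direction of the velocity error: a model velocity that is too low overcorrects the event (negative residual at far offsets), and one that is too high undercorrects it (positive residual).

```python
import numpy as np

def residual_moveout(t0, offsets, v_true, v_model):
    """Time shift left on each trace after NMO correction with v_model.

    t0: zero-offset two-way time (s); offsets: source-receiver offsets (m);
    v_true: velocity that generated the data; v_model: velocity being tested.
    """
    offsets = np.asarray(offsets, dtype=float)
    t_observed = np.sqrt(t0**2 + (offsets / v_true) ** 2)
    # NMO correction maps an observed time t to sqrt(t^2 - x^2 / v_model^2)
    t_corrected_sq = t_observed**2 - (offsets / v_model) ** 2
    t_corrected = np.sqrt(np.maximum(t_corrected_sq, 0.0))
    return t_corrected - t0

offsets = np.array([0.0, 1000.0, 2000.0])
rmo_correct = residual_moveout(1.0, offsets, 2500.0, 2500.0)   # flat event
rmo_too_slow = residual_moveout(1.0, offsets, 2500.0, 2300.0)  # overcorrected
rmo_too_fast = residual_moveout(1.0, offsets, 2500.0, 2700.0)  # undercorrected
```

In a depth-migration workflow the same idea is applied to common-image gathers rather than to NMO-corrected CMP gathers.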

Q 8. Describe the steps involved in building a pre-stack depth migration velocity model.

Building a pre-stack depth migration (PSDM) velocity model is a crucial step in seismic imaging, aiming to accurately position subsurface reflectors in their correct depth positions. It’s an iterative process, often involving several steps and significant interaction with geologists and other subsurface specialists.

- Initial Velocity Model: This often starts with a simple velocity model derived from well logs, existing seismic data (e.g., a simpler velocity model from a previous survey), or regional geological information. This provides a starting point for the more complex iterative processes.

- Velocity Analysis: Various techniques are employed to analyze the seismic data and estimate interval velocities. Common methods include semblance analysis, normal moveout (NMO) velocity analysis, and other more advanced techniques. These methods analyze the travel times of reflections to determine the velocity structure.

- Pre-stack Depth Migration: The initial velocity model is used to perform a pre-stack depth migration. This process ‘migrates’ the seismic reflections to their correct depth positions based on the provided velocities.

- Velocity Model Updating: After the migration, the resulting image is analyzed for residual moveouts or other imaging artifacts. These indicate inaccuracies in the velocity model. This process often involves using residual moveout analysis to identify areas requiring velocity adjustments. This step is often repeated in iterations, creating a feedback loop between migration and velocity updates.

- Iteration and Refinement: The process of migration and velocity update is repeated iteratively. Each iteration refines the velocity model, leading to a progressively more accurate image. This process often continues until a satisfactory image quality is achieved, often balanced with the computational costs involved.

- Model Validation: Once the velocity model is built, various validation steps are undertaken. This could include comparing the final depth image to well log data, geological information, or even synthetic seismograms generated from the final velocity model to check the accuracy of the predicted reflections.

Think of it like assembling a 3D jigsaw puzzle. The initial velocity model is a rough guess at the picture. Depth migration is like trying to fit the pieces together using that guess. The residual moveouts are the pieces that don’t fit, and adjusting the velocity model is like finding the right pieces and shifting them accordingly to improve the overall picture.

Q 9. What are the key quality indicators for a good velocity model?

A good velocity model is characterized by several key quality indicators, all contributing to a high-quality seismic image. These include:

- Accurate Reflection Positioning: The most important indicator. The model should correctly position reflectors at their true subsurface depths. Incorrect positioning can lead to misinterpretation of the subsurface structures.

- Flat Events in Common-Image Gathers: After pre-stack depth migration, reflection events in the common-image gathers should appear flat across offset or angle. Events that curve up or down indicate residual moveout and therefore remaining velocity errors. The stacked section, in turn, should show continuous, well-focused reflectors.

- Geologically Reasonable Model: The velocity model should be consistent with known geology. Unrealistic velocity variations (e.g., extremely high or low velocities in unexpected locations) are usually indicators of problems.

- Well-Tie Consistency: If well logs are available, the velocity model should be consistent with the velocities derived from the well logs. This helps to ground truth the model and ensure its reliability.

- Resolution and Detail: The model should allow for accurate representation of the fine-scale geological details within the region of interest. This often depends on the quality and resolution of the input seismic data and the capabilities of the velocity estimation algorithms used.

- Robustness: The velocity model should be robust to noise and uncertainties in the input data. Small variations in the input data should not cause drastic changes to the velocity model.

In essence, a good velocity model is one that produces a geologically plausible and accurate representation of the subsurface while effectively mitigating the impact of uncertainties and noise.

Q 10. How do you handle uncertainties in velocity model building?

Uncertainties are inherent in velocity model building. Several strategies are used to mitigate their impact:

- Multiple Velocity Models: Building multiple velocity models using different methodologies and assumptions can reveal the range of uncertainty. Stochastic methods can also be used to quantify this uncertainty.

- Sensitivity Analysis: This tests the sensitivity of the final image to variations in the velocity model. This helps to identify areas where the model is most uncertain and where further refinement may be beneficial.

- Regularization Techniques: These methods constrain the velocity model to be smooth or consistent with prior knowledge, reducing the impact of noise and local uncertainties.

- Bayesian Approaches: These use probabilistic frameworks to incorporate prior geological knowledge and seismic data uncertainties to produce velocity estimates along with associated uncertainties.

- Inversion Techniques: Full-waveform inversion and other advanced inversion methods can produce velocity models directly from seismic data. While computationally expensive, these techniques often incorporate uncertainties more effectively.

- Ensemble Methods: Several methods, such as full-waveform inversion and tomography, are used to build multiple velocity models, which are then combined and compared to identify potential biases.

It’s important to remember that a velocity model is never perfect. Quantifying and understanding the uncertainties is crucial for proper interpretation of the final seismic image.

Q 11. Explain the concept of velocity analysis and its importance in seismic processing.

Velocity analysis is the process of determining the velocity of seismic waves as they travel through the subsurface. It’s a fundamental step in seismic processing because the accurate determination of seismic velocity is essential for correctly positioning subsurface reflectors and improving image quality.

Imagine throwing a pebble into a still pond; the ripples (seismic waves) travel outwards at a speed related to the properties of the water (subsurface). Velocity analysis helps us determine the speed of those seismic ripples based on the time it takes for them to return to the surface after bouncing off underground layers. Incorrect velocity leads to incorrectly positioned reflectors, resulting in a blurry or inaccurate picture of the subsurface.

The importance of accurate velocity analysis cannot be overstated. It directly impacts:

- Seismic Imaging: Accurate velocity is critical for pre-stack depth migration, ensuring accurate positioning of reflectors and a high-quality image.

- Subsurface Interpretation: An inaccurate velocity model can lead to misinterpretation of faults, structures, and reservoir properties.

- Reservoir Characterization: Velocity is directly related to rock properties (e.g., porosity, density), making it essential for reservoir characterization.

Q 12. Describe different types of velocity functions (e.g., RMS, interval, average).

Several types of velocity functions are used in seismic processing, each providing different information about the subsurface. The key distinction is what each one averages over: a single layer, or the whole path from the surface down to a reflector.

- Root Mean Square (RMS) Velocity: The square root of the travel-time-weighted mean of the squared interval velocities from the surface down to a particular reflector. For horizontal layering and small offsets it approximates the stacking velocity used in the NMO correction of seismic data. It’s often what is first estimated in velocity analysis, allowing for an initial pre-stack time migration.

- Interval Velocity: This represents the true velocity of seismic waves within a specific layer or interval between two reflectors. This is the velocity we aim to solve for and model, often in a 3D grid. It is derived from RMS velocities using Dix’s equation, often forming the basis of many interval velocity models.

- Average Velocity: The depth of a reflector divided by the one-way travel time to it, which is equivalent to a travel-time-weighted arithmetic mean of the interval velocities above the reflector. It’s less commonly used directly in migration but is the natural quantity for time-to-depth conversion and for comparison with checkshot and well log data.

The relationship between these velocity types is fundamental. RMS velocities are readily measurable, but interval velocities are what ultimately allow us to build a more accurate representation of the subsurface properties within each individual layer. The choice of which velocity function is more appropriate depends on the application. For instance, interval velocities are essential for building detailed depth models, whereas RMS velocities are commonly used for initial processing steps like NMO corrections.
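The relationships among the three velocity types can be made concrete with a short sketch. It assumes a horizontally layered model with hypothetical interval velocities, computes RMS and average velocities from them, and then recovers the interval velocities with Dix’s equation, v_int² = (v_rms,n² t_n − v_rms,n−1² t_n−1) / (t_n − t_n−1). The function names are illustrative.

```python
import numpy as np

def rms_and_average(v_int, dt):
    """RMS and average velocity at the base of each layer.

    v_int: interval velocities (m/s); dt: two-way time thickness of each layer (s).
    """
    v_int = np.asarray(v_int, dtype=float)
    dt = np.asarray(dt, dtype=float)
    t = np.cumsum(dt)                                  # two-way time to each layer base
    v_rms = np.sqrt(np.cumsum(v_int**2 * dt) / t)      # time-weighted mean of v^2, then root
    v_avg = np.cumsum(v_int * dt) / t                  # time-weighted mean of v
    return t, v_rms, v_avg

def dix_interval(v_rms, t):
    """Dix conversion: interval velocities from RMS velocities at two-way times t."""
    v_rms = np.asarray(v_rms, dtype=float)
    t = np.asarray(t, dtype=float)
    numerator = np.diff(v_rms**2 * t, prepend=0.0)
    denominator = np.diff(t, prepend=0.0)
    return np.sqrt(numerator / denominator)

# Hypothetical three-layer model
t, v_rms, v_avg = rms_and_average([2000.0, 3000.0, 4000.0], [0.5, 0.5, 0.5])
v_int_recovered = dix_interval(v_rms, t)
```

Note that the RMS velocity is never smaller than the average velocity for the same reflector. Also, Dix conversion amplifies errors in the RMS picks when the time interval between them is short, which is one reason picked velocities are usually smoothed first.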

Q 13. How do you incorporate geological information into your velocity model?

Incorporating geological information is critical for building a realistic and accurate velocity model. Geological information can constrain the model, reduce ambiguity, and improve the reliability of the final velocity model.

Here are several ways geological information is integrated:

- Well Logs: Well logs provide direct measurements of velocity and other rock properties (e.g., porosity, density) at specific locations. These are often used to constrain the velocity model, especially near wells. Velocity from sonic logs is frequently incorporated directly into the starting velocity model.

- Geological Maps and Cross-Sections: These maps show the distribution of different rock units in the subsurface. This information is used to define the layering in the velocity model and guide velocity variations. This provides an initial framework on which the seismic data is analyzed and compared.

- Geological Interpretation: Geologists can use their expertise to guide the velocity model construction. For example, they can identify areas where velocity variations are expected due to geological features like faults or unconformities.

- Stratigraphic Information: Understanding the layering and sequence of rock units in a region is invaluable. This helps to determine which velocities are realistic and assists in interpreting any anomalies found during velocity analysis.

Think of geological information as providing a ‘skeleton’ for the velocity model. The seismic data then ‘fills in the flesh’ providing the specific velocity values consistent with the overall structure.

Q 14. Discuss the impact of noise on velocity analysis.

Noise in seismic data significantly impacts velocity analysis, leading to inaccurate velocity estimates and ultimately poor seismic imaging. Noise can manifest in various forms, including random noise, coherent noise (e.g., multiples), and ground roll.

The effects of noise are:

- Biased Velocity Estimates: Noise can obscure the true seismic events, leading to the velocity analysis algorithms picking up false events and resulting in inaccurate velocity estimates.

- Reduced Resolution: Noise reduces the signal-to-noise ratio, making it difficult to identify subtle velocity variations and hindering the resolution of the velocity model.

- Unrealistic Velocity Variations: Noise can introduce spurious high-frequency variations in the velocity model, making it less geologically realistic.

- Difficulties in Picking Events: Noise makes it harder to manually pick events, which is frequently required in many velocity analysis workflows.

Strategies for mitigating noise effects include:

- Pre-processing: Applying various filtering techniques (e.g., noise attenuation, deconvolution, multiple suppression) prior to velocity analysis to improve the signal-to-noise ratio.

- Robust Velocity Analysis Techniques: Employing techniques that are less sensitive to noise, such as semblance analysis methods.

- Careful Data Editing: Manually editing the seismic data to remove obvious noise artifacts before velocity analysis can be beneficial, although it can be very time consuming.

Noise management is an ongoing effort. Understanding its impact and employing suitable noise-reduction techniques are essential for producing accurate and reliable velocity models.

Q 15. How do you address the effects of anisotropy on velocity modeling?

Anisotropy, the directional dependence of seismic wave velocities, significantly impacts velocity model building. Ignoring it leads to inaccurate imaging and interpretation. We address anisotropy by incorporating it directly into the velocity model. This often involves using techniques that estimate the parameters of an anisotropic model, such as Thomsen parameters (ε, δ, γ), which describe the strength of anisotropy.

One common approach is to run an anisotropic pre-stack depth migration (PSDM), which accounts for anisotropy during the imaging process. This requires careful analysis of the data to determine the appropriate anisotropic parameters. For P-wave data, δ is usually constrained by mis-ties between seismic depths and well markers, and ε by non-hyperbolic moveout at long offsets. Where shear-wave data are available, analyzing shear-wave splitting (the separation of shear waves into faster and slower components due to anisotropy) provides additional constraints, particularly on fracture-related azimuthal anisotropy. The model itself is most often transversely isotropic (TI): properties are the same in every direction perpendicular to a symmetry axis and differ along it, with the axis vertical (VTI) for flat layering or tilted (TTI) for dipping beds. We often start with an isotropic model and then iteratively refine it by adding anisotropic parameters based on seismic data and well log information. The selection of the appropriate anisotropic model is crucial and depends on the geological setting and the quality of the data.

For example, in a shale gas reservoir, strong vertical transverse isotropy (VTI) is expected due to the layered nature of shale. Therefore, we’d utilize a VTI model in our workflow. Incorrectly neglecting anisotropy in such a case would result in inaccurate depth conversion and potentially missed or poorly imaged reservoir features.
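As a rough illustration of what the Thomsen parameters do in a VTI medium, the sketch below evaluates Thomsen’s weak-anisotropy approximation for the P-wave phase velocity, v(θ) ≈ v0 (1 + δ sin²θ cos²θ + ε sin⁴θ), with θ measured from the vertical symmetry axis, and the short-spread NMO velocity v0 √(1 + 2δ) for a horizontal reflector. The parameter values are hypothetical, and the approximation only holds for weak anisotropy.

```python
import numpy as np

def vti_p_phase_velocity(vp0, epsilon, delta, theta_deg):
    """Weak-anisotropy P-wave phase velocity in a VTI medium.
    theta_deg is the phase angle from the vertical symmetry axis."""
    theta = np.radians(theta_deg)
    sin2 = np.sin(theta) ** 2
    cos2 = np.cos(theta) ** 2
    return vp0 * (1.0 + delta * sin2 * cos2 + epsilon * sin2**2)

def vti_nmo_velocity(vp0, delta):
    """Short-spread P-wave NMO velocity for a horizontal reflector in VTI."""
    return vp0 * np.sqrt(1.0 + 2.0 * delta)

vp0, epsilon, delta = 3000.0, 0.2, 0.1       # hypothetical shale values
v_vertical = vti_p_phase_velocity(vp0, epsilon, delta, 0.0)
v_45 = vti_p_phase_velocity(vp0, epsilon, delta, 45.0)
v_horizontal = vti_p_phase_velocity(vp0, epsilon, delta, 90.0)
v_nmo = vti_nmo_velocity(vp0, delta)
depth_stretch = v_nmo / vp0                  # depth error factor if v_nmo is used as vertical velocity
```

With a positive δ the NMO velocity exceeds the vertical velocity, so an isotropic model built from moveout alone places reflectors too deep. This is the depth mis-tie that well markers reveal.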

Q 16. Explain the relationship between velocity model accuracy and seismic resolution.

The accuracy of a velocity model directly impacts the seismic resolution. Think of it like focusing a camera: a precise velocity model is like having a sharp focus, providing clear and detailed images of subsurface structures. Conversely, an inaccurate velocity model acts like a blurry lens, obscuring the details and hindering our ability to resolve fine-scale features. Errors in velocity can lead to lateral and vertical smearing of reflections, making it difficult to interpret faults, fractures, or subtle changes in lithology.

For instance, a velocity model with significant errors in a high-resolution survey aimed at detecting thin hydrocarbon layers would result in an inability to resolve these layers. The reflections would be poorly positioned and potentially blended together, preventing accurate layer identification and thickness estimation. High-frequency seismic data inherently provide greater detail, but the benefit is lost if the velocity model is not similarly accurate and properly accounts for all relevant geological features.

Q 17. What software packages are you familiar with for velocity model building?

I have extensive experience with several industry-standard software packages for velocity model building. These include:

- Petrel: A comprehensive platform offering a wide range of functionalities, from seismic interpretation to velocity modeling, depth conversion, and reservoir characterization.

- OpendTect: A powerful and flexible open-source package suitable for various seismic processing and interpretation tasks, including velocity model construction.

- Kingdom: An integrated seismic interpretation suite with tools for velocity modeling and depth conversion.

- GeoProbe: Often utilized for its robust functionalities in handling large seismic datasets and building high-resolution velocity models.

My proficiency extends beyond individual software to the underlying principles and methodologies that drive effective velocity model construction. I’m comfortable adapting my workflow to different software platforms as needed.

Q 18. Describe your experience with depth conversion using velocity models.

Depth conversion is crucial in transforming seismic data from the time domain to the depth domain. This transformation is directly dependent on the accuracy of the velocity model. I routinely perform depth conversion using velocity models generated from various techniques, including tomography, well-log analysis, and velocity analysis from seismic data. In its simplest form the process is a vertical stretch that multiplies the one-way travel time by the average velocity at each location. Where lateral velocity variation is strong, ray-based approaches such as image-ray map migration are used so that lateral positioning is corrected as well.

I’ve used this extensively for pre-stack depth migration where the velocity model is critical for accurate image construction. A common workflow includes iterative updates to the velocity model based on the migrated image. Inaccurate depth conversion can lead to mis-positioning of faults, horizons, and other geological structures, significantly affecting reservoir characterization and well planning. For example, an error in depth conversion could lead to drilling a well in the wrong location relative to the anticipated reservoir, potentially resulting in a dry hole.
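A vertical-stretch depth conversion, the simplest case described above, can be sketched as follows. The layered velocity function and the horizon pick are hypothetical. The second call shows how a 5% velocity error moves the same time pick in depth.

```python
import numpy as np

def depth_at_times(layer_base_twt, layer_velocity, pick_twt):
    """Convert two-way time picks to depth by vertical stretch.

    layer_base_twt: two-way time at the base of each layer (s), increasing.
    layer_velocity: interval velocity of each layer (m/s).
    pick_twt: two-way time(s) of the horizon pick(s) to convert (s).
    """
    base_twt = np.asarray(layer_base_twt, dtype=float)
    velocity = np.asarray(layer_velocity, dtype=float)
    # Layer thickness = interval velocity * one-way time spent in the layer
    thickness = velocity * np.diff(base_twt, prepend=0.0) / 2.0
    base_depth = np.cumsum(thickness)
    # Depth is piecewise linear in time for constant-velocity layers
    times = np.concatenate(([0.0], base_twt))
    depths = np.concatenate(([0.0], base_depth))
    return np.interp(pick_twt, times, depths)

base_twt = [1.0, 2.0]                 # hypothetical layer bases (s)
velocity = np.array([2000.0, 3000.0]) # hypothetical interval velocities (m/s)
depth = depth_at_times(base_twt, velocity, 1.5)
depth_fast = depth_at_times(base_twt, velocity * 1.05, 1.5)
depth_error = depth_fast - depth
```

Here a 5% velocity error shifts the horizon by tens of metres, which is the kind of mis-positioning that matters for well planning.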

Q 19. How do you handle multiple velocity solutions during model building?

Multiple velocity solutions are often encountered during velocity model building, particularly in complex geological settings. This ambiguity arises from the non-uniqueness of the inverse problem of determining velocity from travel times. I use a combination of techniques to resolve this. Firstly, I use available well data to constrain the model. Next, I cross-check the candidate solutions with more than one velocity analysis technique, such as semblance spectra and constant velocity stacks, to assess the plausibility of each. Furthermore, integrating geological information, such as fault locations and known lithologies, helps in eliminating unrealistic velocity models. A detailed understanding of the subsurface geology is crucial in selecting the most geologically realistic model among the multiple possibilities.

If uncertainties still remain, I would quantify these uncertainties and propagate them through subsequent analyses. This involves creating an ensemble of velocity models that encapsulate the range of possible solutions. This provides a more complete and robust representation of our subsurface understanding.

Q 20. Discuss the trade-offs between different velocity modeling techniques.

Various velocity modeling techniques exist, each with its strengths and weaknesses. The choice depends on data quality, geological complexity, and project objectives.

- Tomography: A powerful method that uses traveltime information from numerous seismic events to iteratively refine a velocity model. It’s robust but computationally intensive and can be sensitive to noise in the data.

- Well-log velocity analysis: Provides high-resolution velocities but is limited to the locations of boreholes. Extrapolating these velocities to the entire survey area requires careful consideration and potentially relies on other data types.

- Velocity analysis from seismic data: Techniques like semblance analysis and velocity spectra can be used directly on seismic data, but may be less accurate in complex areas and requires careful interpretation.

The trade-off often lies between accuracy, computational cost, and data requirements. A sophisticated tomography model might be highly accurate but extremely expensive and time-consuming, while a simpler method based on well logs might be faster but less spatially representative. Therefore, an optimal strategy usually involves combining multiple techniques to leverage their respective strengths and mitigate their limitations.

Q 21. How do you quantify the uncertainty associated with your velocity model?

Quantifying uncertainty in a velocity model is paramount to provide a realistic assessment of its reliability. I employ several strategies for this. Firstly, I conduct sensitivity analysis, assessing how changes in input parameters (e.g., seismic data noise levels) affect the final velocity model. This helps determine which aspects of the model are most uncertain. Secondly, I use statistical measures such as variance and standard deviation to characterize the uncertainty in velocity estimates at each point in the model. Thirdly, I build multiple velocity models using different input parameters or methodologies to create an ensemble of models. The spread of this ensemble provides a visual and quantitative representation of the uncertainty.

Furthermore, we use Monte Carlo simulations to propagate uncertainties through the entire workflow. This involves running numerous simulations with varying input parameters, sampled randomly from their probability distributions. The resulting ensemble of final results provides a range of possible outcomes and a robust measure of uncertainty. This allows us to assess the risk associated with decisions based on our velocity model, improving the reliability and accuracy of subsequent interpretations and reservoir management decisions.
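As a rough illustration of the Monte Carlo idea, the sketch below propagates an uncertain average velocity into the depth of a single horizon. It assumes a normal distribution for the velocity and a simple vertical time-to-depth conversion. The function name `monte_carlo_depth` and the numbers used (2.0 s two-way time, 3000 m/s mean, 100 m/s standard deviation) are hypothetical.

```python
import numpy as np

def monte_carlo_depth(twt_s, v_mean, v_std, n_samples=10_000, seed=0):
    """Propagate average-velocity uncertainty into depth for one horizon.

    twt_s  : two-way travel time to the horizon, in seconds
    v_mean : assumed mean average velocity, in m/s
    v_std  : assumed standard deviation of that velocity, in m/s
    """
    rng = np.random.default_rng(seed)
    # Sample velocities from an assumed normal distribution.
    velocities = rng.normal(v_mean, v_std, n_samples)
    # Vertical time-to-depth conversion: depth = v * t / 2.
    depths = velocities * twt_s / 2.0
    pct10, pct50, pct90 = np.percentile(depths, [10, 50, 90])
    return {
        "mean": depths.mean(),
        "std": depths.std(),
        "pct10": pct10,
        "pct50": pct50,
        "pct90": pct90,
    }

result = monte_carlo_depth(twt_s=2.0, v_mean=3000.0, v_std=100.0)
print({key: round(float(value), 1) for key, value in result.items()})
```

In a real workflow the sampled inputs would be full velocity models rather than a single number, and each sample would be pushed through migration or depth conversion, but the structure of the loop is the same.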

Q 22. Explain the role of iterative inversion in velocity model building.

Iterative inversion is the cornerstone of modern velocity model building. It’s a process where we repeatedly refine our initial velocity model by comparing its predictions to the observed seismic data. Think of it like sculpting: you start with a rough block (initial model) and progressively chip away (adjust velocities) until you achieve a desired shape (accurate model that matches the data).

The process typically involves these steps:

- Initial Model: We begin with a starting velocity model, often derived from well logs, regional studies, or even simple assumptions.

- Forward Modeling: We use the current velocity model to predict the seismic data. This involves simulating wave propagation through the model.

- Residual Calculation: We compare the predicted data to the actual observed seismic data. The difference is the residual, which represents the errors in our current model.

- Inversion: We use sophisticated algorithms (e.g., least-squares, tomography) to adjust the velocity model based on these residuals. The goal is to minimize the difference between the predicted and observed data.

- Iteration: We repeat the forward modeling, residual calculation, and inversion steps until the residuals are sufficiently small, indicating a good fit to the observed data, or until another convergence criterion (such as a maximum number of iterations) is met. This iterative process progressively improves the accuracy of the velocity model.

For instance, in a full-waveform inversion (FWI) workflow, this iterative process might involve many iterations (often tens to hundreds), with each iteration making subtle adjustments to the velocity model until a high-fidelity match to the observed seismic data is obtained.
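The loop described above can be sketched on a toy problem. The example below assumes straight rays through three cells, so that travel times are a linear function of slowness (`t = G @ s`), and uses a plain gradient step as the update. This is a simplified stand-in for production tomography or FWI, and the matrix `G`, the velocities, and the function name `iterative_inversion` are all hypothetical.

```python
import numpy as np

def iterative_inversion(G, t_obs, s0, tol=1e-6, max_iter=1000):
    """Refine a slowness model (s/m) so that G @ s matches observed travel times.

    G     : ray path lengths per cell, shape (n_rays, n_cells), in metres
    t_obs : observed travel times, in seconds
    s0    : initial slowness model, in s/m
    """
    s = s0.copy()
    # Step size from the largest singular value of G keeps the update stable.
    step = 1.0 / np.linalg.norm(G, 2) ** 2
    n_updates = 0
    while True:
        t_pred = G @ s                           # forward modeling
        residual = t_obs - t_pred                # residual calculation
        rms = np.sqrt(np.mean(residual ** 2))
        if rms < tol or n_updates >= max_iter:   # convergence criterion
            break
        s = s + step * (G.T @ residual)          # model update (gradient step)
        n_updates += 1
    return s, n_updates, rms

# Toy setup: four straight rays crossing three 1000 m cells (assumed geometry).
G = np.array([
    [1000.0, 0.0, 0.0],
    [1000.0, 1000.0, 0.0],
    [1000.0, 1000.0, 1000.0],
    [0.0, 1000.0, 1000.0],
])
v_true = np.array([2000.0, 2500.0, 3000.0])
t_obs = G @ (1.0 / v_true)          # noise-free synthetic travel times
s0 = np.full(3, 1.0 / 2500.0)       # constant-velocity initial model

s_est, n_updates, rms = iterative_inversion(G, t_obs, s0)
v_est = 1.0 / s_est
print(np.round(v_est, 1), n_updates)
```

With noisy data or a nonlinear forward model (as in FWI), regularization and a more careful step-length search would normally be needed; the sketch only shows the predict, compare, update cycle.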

Q 23. How do you assess the impact of velocity errors on reservoir characterization?

Velocity errors significantly impact reservoir characterization. Inaccurate velocities lead to incorrect positioning of reflectors, distorted amplitudes, and flawed estimations of reservoir properties. Think of it like looking at a map with incorrect scaling – you’ll misjudge distances and overall geography.

The effects are multifaceted:

- Incorrect Depth Conversion: Errors in velocity lead to incorrect depths of reflectors and consequently, incorrect locations of the reservoir boundaries. This directly affects the estimation of reservoir volume.

- Amplitude Distortion: Velocity errors can distort seismic amplitudes, which are crucial for estimating reservoir properties like porosity and hydrocarbon saturation. Incorrect amplitudes lead to inaccurate predictions of reservoir quality.

- Migration Artifacts: Inaccurate velocities lead to migration artifacts (smearing or focusing of reflectors), which can obscure reservoir features and complicate interpretation.

- Seismic Attribute Errors: Many seismic attributes (e.g., reflection strength, curvature) are sensitive to velocity. Inaccurate velocities lead to unreliable attribute maps and consequently, poor predictions of reservoir heterogeneity.

A common example is in seismic imaging: under simple vertical time-to-depth conversion, depth scales linearly with average velocity, so a 5% velocity error produces roughly a 5% depth error. For a reservoir horizon at 3,000 m, that is a mispositioning of about 150 m, which can lead to a miscalculation of reserves. Therefore, rigorous quality control and iterative refinement are crucial to minimize the impact of velocity errors.
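The arithmetic behind this kind of estimate is short enough to write down. The sketch below assumes a simple vertical time-to-depth conversion (depth = v × t / 2) and hypothetical values of 3000 m/s average velocity and 2.0 s two-way time; migration effects in dipping or laterally varying geology are ignored.

```python
def depth_error(true_velocity, twt_s, relative_error):
    """Depth mispositioning caused by a fractional error in average velocity,
    assuming vertical time-to-depth conversion (depth = v * t / 2)."""
    true_depth = true_velocity * twt_s / 2.0
    wrong_depth = true_velocity * (1.0 + relative_error) * twt_s / 2.0
    return wrong_depth - true_depth

# Hypothetical horizon: 2.0 s two-way time, 3000 m/s average velocity, 5% error.
err = depth_error(3000.0, 2.0, 0.05)
print(f"depth error: {err:.0f} m")
```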

Q 24. Describe your experience with velocity model updates based on new data.

In my experience, velocity model updates based on new data are routine. This typically happens when additional data becomes available, such as new seismic surveys, well logs from newly drilled wells, or even results from production testing. This iterative approach is key to continuously improving our understanding of the subsurface.

The process generally involves:

- Data Integration: We integrate the new data into our existing workflow. This might involve incorporating new seismic data into our tomography inversion, or using well logs to constrain velocities in specific areas.

- Model Re-evaluation: We reassess the existing velocity model in light of the new information, looking for inconsistencies or areas of improvement.

- Iterative Refinement: We use iterative inversion techniques (similar to those described in Q 22) to update the velocity model and incorporate the new information. This might involve adding new layers or refining existing ones.

- Quality Control: Rigorous quality control checks are performed at each step to validate the updated model and ensure that it accurately reflects the new data and prior information.

For example, in one project, we incorporated new 3D seismic data into our existing velocity model. This resulted in a significant improvement in the resolution of reservoir features, leading to a more accurate estimate of the hydrocarbon in place and subsequent optimization of drilling plans.

Q 25. How do you handle missing data in velocity model building?

Missing data is a common challenge in velocity model building. The approach depends on the extent and nature of the missing data. We often utilize a combination of strategies to address this issue.

Common methods include:

- Interpolation: Simple interpolation techniques (linear, nearest-neighbor, etc.) can be used to fill small gaps in the data, but these methods might introduce artifacts and should be used cautiously. More advanced spatial interpolation methods, such as kriging, can provide better results.

- Extrapolation: Extrapolating from nearby well data or using regional velocity trends to estimate velocities in data-sparse areas is a common approach, but should be treated with caution due to potential inaccuracies.

- Constraint Inversion: We can use a priori information such as well logs, geological models, or regional velocity trends as constraints during the inversion process. This helps to stabilize the inversion and compensate for missing data.

- Bayesian Inversion Methods: Bayesian methods allow us to incorporate prior information and uncertainty about the data into the velocity estimation, providing a more robust solution in the presence of missing data.

The choice of method depends on the specific context and the amount of missing data. Careful consideration is required to avoid introducing biases or artifacts that might negatively impact the quality of the final velocity model.
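As a minimal sketch of the simplest option above, the following fills small gaps in a one-dimensional velocity profile by linear interpolation in depth and returns a mask of the filled samples so they can be flagged in later quality control. The function name `fill_velocity_gaps` and the sample values are hypothetical, and gaps are assumed to be marked with NaN.

```python
import numpy as np

def fill_velocity_gaps(depth_m, velocity_ms):
    """Fill NaN gaps in a velocity profile by linear interpolation in depth.

    Returns the filled profile and a boolean mask marking interpolated samples.
    Depths are assumed to be sorted in increasing order.
    """
    depth_m = np.asarray(depth_m, dtype=float)
    velocity_ms = np.asarray(velocity_ms, dtype=float)
    missing = np.isnan(velocity_ms)
    filled = velocity_ms.copy()
    # np.interp holds the end values constant outside the known range,
    # so gaps at either end are filled flat rather than along the trend.
    filled[missing] = np.interp(
        depth_m[missing], depth_m[~missing], velocity_ms[~missing]
    )
    return filled, missing

depth = [0.0, 500.0, 1000.0, 1500.0, 2000.0]
velocity = [1800.0, np.nan, 2200.0, np.nan, 2600.0]
filled, was_missing = fill_velocity_gaps(depth, velocity)
print(filled, was_missing)
```

For larger gaps or 2D and 3D models, geostatistical methods such as kriging or a constrained inversion are usually more appropriate than this kind of direct fill.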

Q 26. Explain your experience in working with different seismic data types for velocity model building.

My experience encompasses working with a variety of seismic data types, each with its own strengths and limitations in velocity model building.

- Reflection Seismic Data: This is the most common data type, providing reflections from subsurface interfaces. Different acquisition geometries (2D, 3D, and even 4D) offer varying degrees of resolution and coverage. Velocity analysis techniques like semblance and velocity spectra are widely used to estimate velocities from reflection data.

- Refraction Seismic Data: Refraction data are particularly valuable for estimating near-surface velocities. First-arrival times are used to construct velocity models, often using tomography techniques. This is crucial for accurate depth conversion of reflection data, especially in complex near-surface scenarios.

- Well Log Data: Although not seismic data in themselves, well logs (notably sonic logs) provide direct measurements of P-wave and S-wave velocities at the borehole, giving crucial ground truth information. These data are invaluable for calibrating and constraining seismic velocity models, particularly in areas with high well density.

- Full-Waveform Data for FWI: Full waveform inversion (FWI) uses the complete recorded seismic waveform, rather than picked travel times alone, to estimate subsurface properties, including velocities. This technique can achieve high resolution but is computationally demanding and requires high-quality seismic data.

The effective integration of these diverse data types is key for building robust and accurate velocity models. For example, combining well log velocities with reflection seismic data using tomographic techniques can create a more accurate velocity model than relying on any one data source alone.

Q 27. Describe your approach to quality control in velocity model building.

Quality control is paramount in velocity model building. It’s an iterative process that should be applied at every stage of the workflow.

My approach includes:

- Data Quality Checks: This involves examining the raw seismic data for noise, artifacts, and inconsistencies. Proper preprocessing is essential to ensure data quality.

- Velocity Analysis Checks: Careful analysis of velocity analysis results (e.g., semblance plots, velocity spectra) is needed to identify any outliers or inconsistencies in velocity estimates.

- Model Consistency Checks: We compare the estimated velocities to well log velocities and regional velocity trends, looking for significant discrepancies. This involves examining velocity-depth relationships for consistency and identifying potential errors.

- Migration Quality Control: After migration using the built velocity model, we inspect the seismic images for artifacts, focusing on the clarity of reflectors and their continuity. This helps us identify areas where the velocity model might need refinement.

- Sensitivity Analysis: We sometimes perform sensitivity analysis to determine the impact of velocity errors on key parameters, such as reservoir volume or hydrocarbon saturation. This analysis provides a measure of the uncertainty in our velocity model.

By systematically implementing these checks and regularly reviewing the progress, we can identify and rectify errors early, saving time and ensuring the reliability of the final velocity model.
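One of these checks, the comparison against well velocities, is easy to automate. The sketch below computes the relative misfit between model and well velocities at matching depths and flags samples that exceed a tolerance. The function name `flag_well_mismatch`, the 5% tolerance, and the sample values are assumptions for illustration.

```python
import numpy as np

def flag_well_mismatch(v_model, v_well, tolerance=0.05):
    """Compare model velocities with well velocities sampled at the same depths.

    Returns the relative misfit and a boolean mask where |misfit| > tolerance.
    """
    v_model = np.asarray(v_model, dtype=float)
    v_well = np.asarray(v_well, dtype=float)
    rel_misfit = (v_model - v_well) / v_well
    return rel_misfit, np.abs(rel_misfit) > tolerance

# Hypothetical velocities (m/s) at three depth levels along one well.
v_model = [2000.0, 2450.0, 3300.0]
v_well = [2050.0, 2500.0, 3000.0]
misfit, flagged = flag_well_mismatch(v_model, v_well)
print(np.round(misfit, 3), flagged)
```

Flagged intervals are candidates for review rather than automatic correction, since a mismatch can come from the model, the log, or a difference in measurement scale between sonic and seismic frequencies.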

Q 28. How do you communicate complex technical information related to velocity models to non-technical audiences?

Communicating complex technical information about velocity models to non-technical audiences requires clear, concise, and engaging communication. I avoid using jargon and focus on visual aids.

My approach involves:

- Analogies and Visualizations: Using simple analogies, like the sculpting analogy mentioned earlier, helps non-technical audiences understand the iterative process. Visual aids such as cross-sections, 3D visualizations, and maps of key parameters greatly enhance understanding.

- Focus on Key Outcomes: Instead of getting bogged down in technical details, I focus on the implications of velocity model accuracy for business decisions. For instance, I might explain how an accurate velocity model improves drilling success rates, reduces exploration risk, or leads to more accurate reserve estimations.

- Storytelling: Presenting the information as a narrative, highlighting the challenges overcome and the insights gained during the model building process, helps engage the audience.

- Interactive Presentations: Using interactive presentations, allowing questions and answers, helps foster two-way communication and clarifies any misunderstandings.

Ultimately, the goal is to translate complex technical concepts into easily digestible information that can be used to make informed decisions. For example, presenting a simple map showing potential hydrocarbon locations derived from the velocity model is much more effective for a non-technical audience than discussing intricate inversion algorithms.

Key Topics to Learn for Seismic Velocity Model Building Interview

- Seismic Wave Propagation: Understanding the principles of P-wave and S-wave propagation through different subsurface layers, including reflection and refraction phenomena.

- Velocity Analysis Techniques: Mastering various methods like Normal Moveout (NMO) correction, velocity spectrum analysis, and semblance analysis for accurate velocity determination.

- Well Log Integration: Learning how to integrate well log data (sonic, density logs) to constrain and improve the accuracy of the velocity model.

- Seismic Inversion: Exploring different seismic inversion techniques (e.g., full waveform inversion, least squares migration) to estimate subsurface properties from seismic data, focusing on their application to velocity model building.

- Model Building Workflows: Understanding the iterative process of building a velocity model, including initial model creation, model updating based on seismic data, and quality control measures.

- Dealing with Complex Geology: Learning how to handle challenges posed by complex geological structures like faults, salt bodies, and lateral velocity variations.

- Uncertainty Quantification: Understanding the inherent uncertainties in velocity models and strategies for quantifying and managing these uncertainties.

- Software and Tools: Familiarity with industry-standard software packages used for seismic velocity model building.

- Practical Applications: Understanding the role of accurate velocity models in various downstream applications, such as depth imaging, reservoir characterization, and 4D seismic monitoring.

- Problem-Solving Approaches: Developing strong analytical and problem-solving skills to troubleshoot common issues encountered during velocity model building.

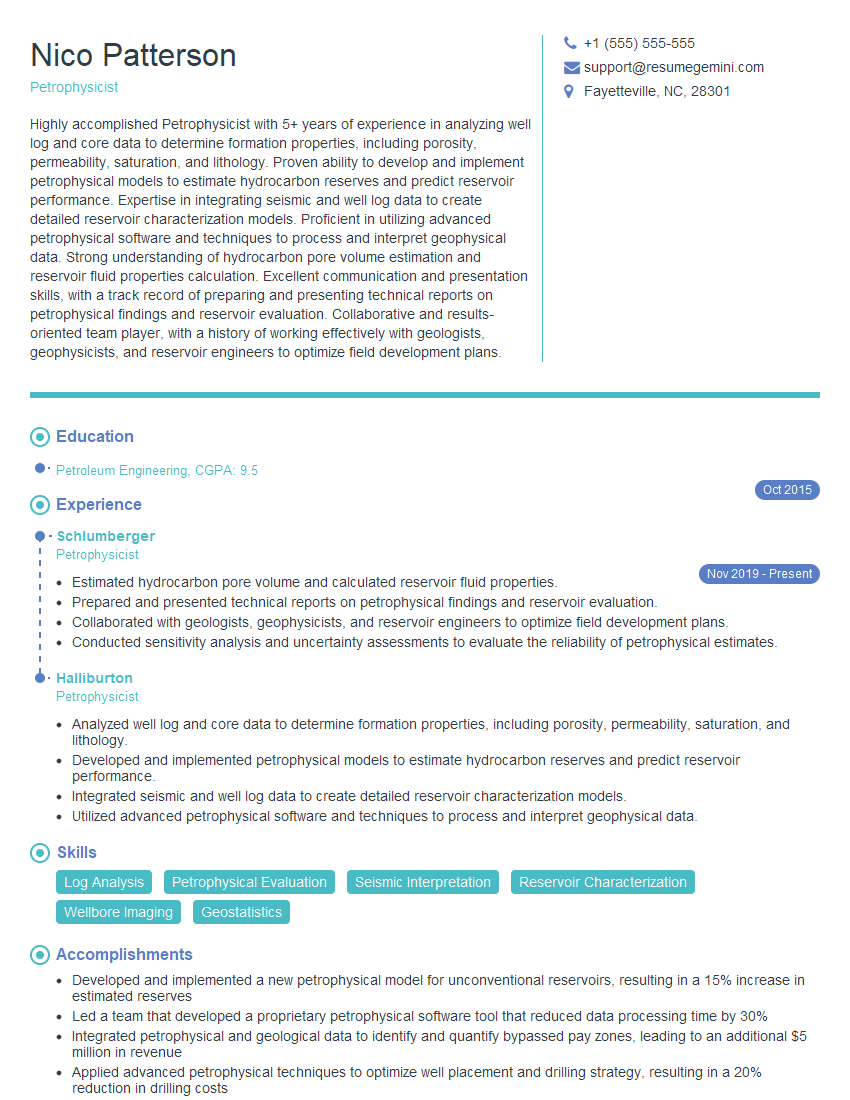

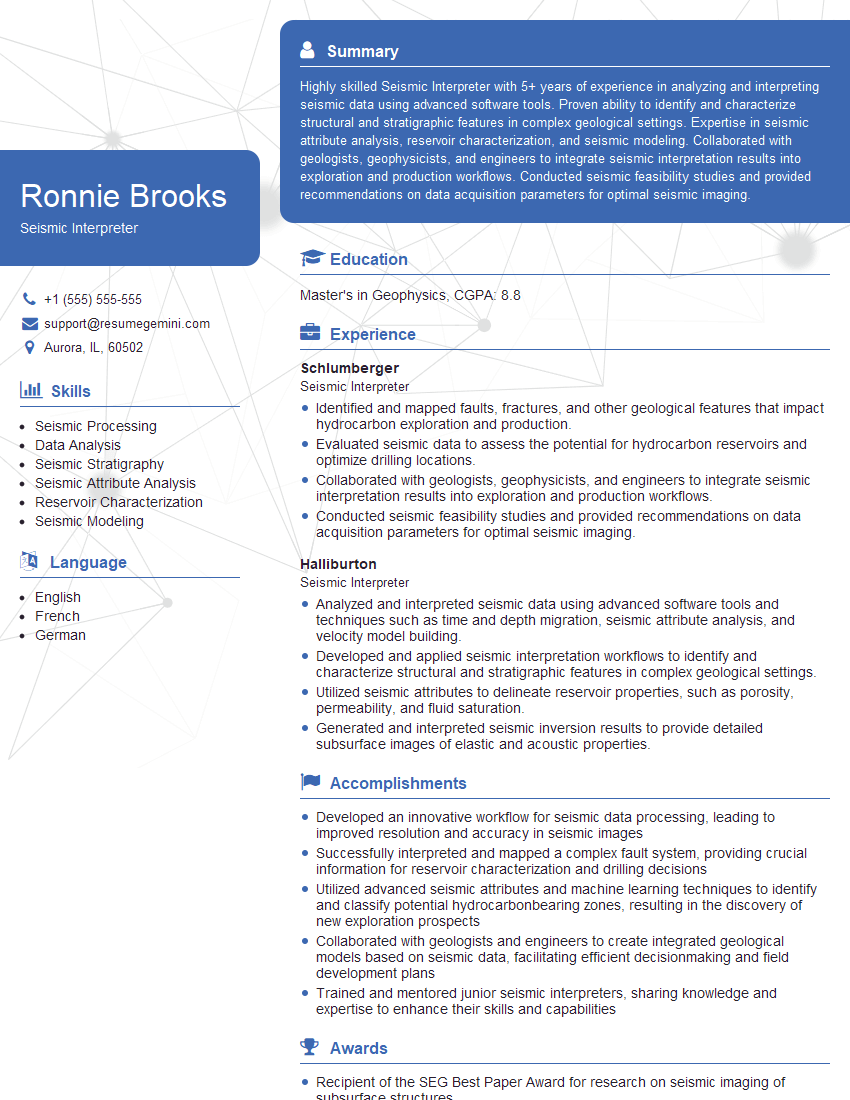

Next Steps

Mastering Seismic Velocity Model Building is crucial for career advancement in the geoscience industry. A strong understanding of these concepts opens doors to exciting opportunities in exploration, production, and research. To maximize your job prospects, focus on creating an ATS-friendly resume that effectively highlights your skills and experience. ResumeGemini is a trusted resource that can help you build a professional and impactful resume, tailored to the specific requirements of the jobs you’re targeting. We provide examples of resumes specifically tailored to Seismic Velocity Model Building to help guide you. Invest time in crafting a compelling resume – it’s your first impression on potential employers.
