The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Spatial Data Analysis and Visualization interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Spatial Data Analysis and Visualization Interview

Q 1. Explain the difference between vector and raster data.

Vector and raster data are two fundamental ways to represent geographic information. Think of it like drawing a map: vector uses points, lines, and polygons to define features, while raster uses a grid of cells (pixels) to represent data.

- Vector Data: Each feature is stored as a discrete object with defined coordinates. For example, a road is represented as a line, a building as a polygon, and a tree as a point. This approach is ideal when precise location and attributes are crucial. Think of a highly detailed CAD drawing of a city.

- Raster Data: Represents spatial data as a grid of cells, each containing a value. This value can be a continuous variable like elevation or temperature, or a categorical variable like land cover type. Think of an aerial photograph or a satellite image where each pixel holds color information.

Key Differences Summarized:

- Representation: Vector uses points, lines, and polygons; Raster uses a grid of cells.

- Data Storage: Vector stores coordinates and attributes; Raster stores cell values.

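- Scale Dependency: Vector features render crisply at any display scale, although their positional accuracy is still limited by how they were captured. Raster data has a fixed resolution set by its cell size, so zooming in eventually reveals individual cells.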

- Data Types: Vector is best for discrete features; Raster is good for continuous phenomena.

Example: Imagine mapping a city. Vector data would be ideal for representing individual buildings, roads, and rivers. Raster data would be suitable for representing elevation, land cover, or population density across the city.
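The fixed-resolution point can be made concrete with a minimal Python sketch. It assumes a north-up grid whose upper-left corner and cell size are known, and the coordinates are hypothetical. The sketch finds which raster cell a vector point falls into. Two trees that are distinct features in vector form land in the same 10 m cell, so the raster can no longer tell them apart.

```python
def point_to_cell(x, y, origin_x, origin_y, cell_size):
    """Return (row, col) of the raster cell containing point (x, y).

    Assumes a north-up grid: (origin_x, origin_y) is the upper-left corner,
    columns increase eastward and rows increase southward.
    """
    col = int((x - origin_x) // cell_size)
    row = int((origin_y - y) // cell_size)
    return row, col


# Hypothetical 10 m grid and two vector points 3 m apart.
origin_x, origin_y, cell = 500000.0, 4200000.0, 10.0
tree_a = (500012.0, 4199995.0)
tree_b = (500015.0, 4199995.0)
print(point_to_cell(*tree_a, origin_x, origin_y, cell))  # (0, 1)
print(point_to_cell(*tree_b, origin_x, origin_y, cell))  # (0, 1)
```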

Q 2. Describe different types of map projections and their applications.

Map projections are methods of transforming the curved, three-dimensional surface of the Earth onto a two-dimensional plane. No projection is perfect: no flat map can preserve area, shape, distance, and direction everywhere at once, so every projection trades some of these properties off. The choice depends on the application, the area being mapped, and which property matters most.

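- Cylindrical Projections (e.g., Mercator): Project the Earth onto a cylinder. Mercator is conformal. It preserves angles, and therefore local shapes and compass directions, but it greatly exaggerates area toward the poles (Greenland appears roughly as large as Africa). It is useful for navigation because lines of constant bearing (rhumb lines) are straight. Many familiar wall maps and web maps use Mercator or its Web Mercator variant.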

- Conic Projections (e.g., Albers Equal-Area): Project the Earth onto a cone. Good for mapping mid-latitude regions with minimal distortion. Preserves area, but distorts shape and direction.

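- Azimuthal Projections (e.g., Stereographic): Project the Earth onto a plane tangent at a point. All azimuthal projections preserve directions from the central point, which makes them useful for mapping polar regions. The stereographic projection is also conformal, so it preserves local shape, but area distortion grows with distance from the center.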

Applications:

- Navigation: Mercator projection for nautical charts.

- Thematic Mapping: Albers Equal-Area for showing population density across a continent.

- Polar Regions: Stereographic projection for mapping Antarctica.

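- Global Views: The Robinson projection is a compromise projection that is neither conformal nor equal-area. It balances shape and area distortion to give a visually pleasing world map rather than one suited to exact measurement.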

The choice of projection is a crucial decision in spatial analysis and map creation because it directly impacts the accuracy and interpretation of the spatial data.
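A short worked example shows how large Mercator's area distortion becomes. On the spherical Mercator projection, the local scale factor depends only on latitude φ. Because the projection is conformal, the same factor applies in every direction, so areas are inflated by its square:

$$
k(\varphi) = \sec\varphi, \qquad \text{area factor} = k(\varphi)^2 = \sec^2\varphi
$$

At φ = 60°, sec 60° = 2. Lengths are therefore drawn twice as long as at the equator, and areas appear four times as large.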

Q 3. What are the common spatial data formats (e.g., Shapefile, GeoJSON, GeoTIFF)?

Several common spatial data formats cater to different needs and software compatibilities. Each has strengths and weaknesses.

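- Shapefile (.shp): A popular vector format storing geometry (points, lines, polygons) and attributes. It’s widely supported but is actually a collection of files (at least .shp, .shx, .dbf, usually with a .prj describing the coordinate system). Each shapefile holds only one geometry type, attribute field names are limited to 10 characters, and each component file is limited to 2 GB.

- GeoJSON (.geojson): A text-based, human-readable format for representing geographic data. It is an open standard (RFC 7946), supports various geometry types, and is widely used for web mapping applications. It’s lightweight and easily parsable, and under RFC 7946 its coordinates are WGS 84 longitude/latitude.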

- GeoTIFF (.tif, .tiff): A raster format that stores georeferenced raster data, including satellite imagery, elevation models, and other gridded data. Supports various compression methods and metadata, which are crucial for large datasets.

- KML/KMZ (.kml, .kmz): Keyhole Markup Language, used primarily by Google Earth. It’s a versatile format for storing vector and raster data, annotations, and placemarks.

Choosing the right format depends on factors like:

- Data type (vector or raster): Shapefile for vector, GeoTIFF for raster

- Software compatibility: Shapefiles are nearly universally compatible.

- Data size and complexity: GeoJSON for smaller, less complex datasets; GeoTIFF for large raster datasets.

- Interoperability: GeoJSON for web applications; KML for Google Earth integration.
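GeoJSON is plain JSON, so a standard JSON parser is enough to read it. The minimal sketch below builds a small hypothetical FeatureCollection and lists each feature's name and geometry type. Note that the coordinates are written as [longitude, latitude].

```python
import json

# A hypothetical two-feature collection; coordinates are [longitude, latitude].
geojson_text = """
{
  "type": "FeatureCollection",
  "features": [
    {"type": "Feature",
     "geometry": {"type": "Point", "coordinates": [-0.1276, 51.5072]},
     "properties": {"name": "London"}},
    {"type": "Feature",
     "geometry": {"type": "LineString",
                  "coordinates": [[-0.13, 51.50], [-0.12, 51.51]]},
     "properties": {"name": "Sample road"}}
  ]
}
"""

collection = json.loads(geojson_text)
summary = [(f["properties"]["name"], f["geometry"]["type"]) for f in collection["features"]]
print(summary)  # [('London', 'Point'), ('Sample road', 'LineString')]
```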

Q 4. Explain the concept of spatial autocorrelation.

Spatial autocorrelation describes the degree to which values at nearby locations resemble each other. In simple terms, it measures how clustered or dispersed a spatial pattern is. Strong positive spatial autocorrelation means similar values tend to cluster together. Autocorrelation near zero means values are arranged randomly in space.

Types:

- Positive Spatial Autocorrelation: Similar values cluster together (e.g., high-income households in the same neighborhood).

- Negative Spatial Autocorrelation: Dissimilar values tend to be adjacent (e.g., alternating patterns of high and low elevation).

- No Spatial Autocorrelation: Values are randomly distributed.

Measuring Spatial Autocorrelation: Techniques like Moran’s I and Geary’s C are used to quantify spatial autocorrelation. These indices help us determine the strength and significance of the spatial pattern.
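Moran's I compares each location's deviation from the mean with the deviations of its neighbors: I = (n / W) * sum_i sum_j w_ij (x_i - mean)(x_j - mean) / sum_i (x_i - mean)^2. Here W is the sum of all weights w_ij. The minimal pure-Python sketch below computes only the statistic; it does no significance testing, which in practice you would get from a tool such as R's spdep::moran.test. The six-location layout and the values are hypothetical. Under randomness the expected value is -1/(n-1), which is -0.2 here.

```python
def morans_i(values, weights):
    """Global Moran's I for a list of values and an n x n weights matrix.

    weights[i][j] > 0 marks i and j as neighbors (the diagonal should be 0).
    Only the statistic is computed; there is no significance test here.
    """
    n = len(values)
    mean = sum(values) / n
    dev = [v - mean for v in values]
    w_total = sum(sum(row) for row in weights)
    cross = sum(weights[i][j] * dev[i] * dev[j] for i in range(n) for j in range(n))
    spread = sum(d * d for d in dev)
    return (n / w_total) * (cross / spread)


# Hypothetical: six locations in a row, each a neighbor of the ones beside it.
n = 6
weights = [[1 if abs(i - j) == 1 else 0 for j in range(n)] for i in range(n)]
clustered = [1, 1, 1, 9, 9, 9]    # similar values sit together
alternating = [1, 9, 1, 9, 1, 9]  # neighbors always differ

print(round(morans_i(clustered, weights), 3))    # 0.6  (positive: clustering)
print(round(morans_i(alternating, weights), 3))  # -1.0 (negative: dispersion)
```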

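Implications: Understanding spatial autocorrelation is vital in spatial analysis because most classical statistical tests assume independent observations. Ignoring positive spatial autocorrelation typically understates standard errors and overstates significance, which can lead to inaccurate conclusions. For instance, disease counts in neighboring districts often share a local cause, such as a contaminated water source. Analyzing each district in isolation can both miss that cluster and overstate the evidence for other explanatory factors.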

Q 5. How do you handle spatial data with missing values?

Missing values in spatial data are a common challenge. How you handle them depends on the nature of the data, the amount of missing data, and the research question.

Methods for Handling Missing Values:

- Deletion: Remove records or features with missing data. Simple but can lead to biased results if missing data is not randomly distributed.

- Imputation: Replace missing values with estimated values. Methods include:

  - Mean/Median Imputation: Replace missing values with the mean or median of the available values. Simple but can mask variability.

  - Spatial Interpolation (e.g., Kriging, IDW): Use surrounding values to predict missing values based on spatial relationships. More sophisticated but requires careful consideration of the interpolation method.

  - Regression Imputation: Use a regression model to predict missing values based on other variables. Requires knowing relationships between variables.

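- Model-based approaches: Multiple imputation, or models that handle missing data directly in their likelihood, carry the uncertainty about missing values into the final results. Filled-in values are not treated as if they had actually been observed.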

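Choosing a Method: The best approach depends on the context. Deletion may be acceptable if only a few values are missing and they are missing at random. If many values are missing, deletion discards too much information, and imputation is usually preferable. If the missingness is systematic (for example, sensors failing mostly in remote areas), simple methods bias the results, and more advanced, model-based techniques are needed.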
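A simple spatial alternative to mean imputation is to fill each gap with the average of its k nearest known neighbors. The minimal sketch below assumes planar (projected) coordinates and a hypothetical set of sensor readings. It does not quantify imputation uncertainty. The filled value (12.0) reflects the two adjacent stations, whereas the global mean of the known values would be about 24.7.

```python
import math


def knn_impute(points, k=2):
    """Fill None values with the mean of the k nearest points that have values.

    points: list of (x, y, value) tuples in a projected (planar) CRS;
    value may be None. Returns a new list with missing values filled.
    """
    known = [(x, y, v) for x, y, v in points if v is not None]
    filled = []
    for x, y, v in points:
        if v is None:
            # Rank known points by straight-line distance to the gap.
            nearest = sorted(known, key=lambda p: math.hypot(p[0] - x, p[1] - y))[:k]
            v = sum(p[2] for p in nearest) / len(nearest)
        filled.append((x, y, v))
    return filled


# Hypothetical sensor readings; the station at (1, 0) is missing its value.
readings = [(0, 0, 10.0), (2, 0, 14.0), (1, 0, None), (10, 10, 50.0)]
print(knn_impute(readings))
# [(0, 0, 10.0), (2, 0, 14.0), (1, 0, 12.0), (10, 10, 50.0)]
```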

Q 6. Describe different methods for spatial interpolation.

Spatial interpolation is the process of estimating values at unsampled locations based on known values at nearby sampled locations. It’s crucial for creating continuous surfaces from point data.

Common Methods:

- Inverse Distance Weighting (IDW): Estimates the value at an unsampled location as a weighted average of the known values, with closer points having greater weights. Simple but sensitive to outlier points.

- Kriging: A geostatistical method that models spatial autocorrelation with a variogram and uses it to weight the known values. Under that model it gives the best linear unbiased prediction, together with an estimate of prediction uncertainty (the kriging variance). It is more complex than IDW, but usually more accurate when the variogram is modeled well.

- Spline Interpolation: Fits a smooth surface through the known points. Different spline types (e.g., thin-plate splines) offer flexibility in the smoothness of the resulting surface.

- Nearest Neighbor: The value of the nearest known point is assigned to the unsampled location. Simple and fast but produces a discontinuous surface.

Choosing a Method: The best method depends on the characteristics of your data. IDW is a good starting point if you have a large dataset and limited knowledge of autocorrelation. Kriging is preferred when spatial autocorrelation is significant.
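The IDW weighting described above fits in a few lines. This is a minimal sketch assuming planar coordinates and the common default power of 2; the sample values are hypothetical. A location that coincides with a sample simply returns that sample's value.

```python
import math


def idw(sample_points, x, y, power=2.0):
    """Inverse Distance Weighting estimate at (x, y).

    sample_points: list of (x, y, value) with planar coordinates.
    Each sample is weighted by 1 / distance**power, so closer samples count more.
    """
    num = 0.0
    den = 0.0
    for sx, sy, value in sample_points:
        d = math.hypot(sx - x, sy - y)
        if d == 0.0:
            return value  # exact hit: return the observed value
        w = 1.0 / d ** power
        num += w * value
        den += w
    return num / den


samples = [(0.0, 0.0, 10.0), (4.0, 0.0, 20.0)]
print(idw(samples, 1.0, 0.0))  # close to 11.0: the nearer sample dominates
print(idw(samples, 2.0, 0.0))  # midway, so both samples weigh equally
```

Raising `power` makes the surface more local (nearer samples dominate even more), and lowering it smooths the result.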

Q 7. What are the advantages and disadvantages of different spatial analysis techniques (e.g., overlay, buffering, proximity analysis)?

Spatial analysis techniques manipulate and analyze spatial data to extract meaningful insights. Each technique has its advantages and disadvantages.

Overlay Analysis: Combines two or more layers to create a new layer.

- Advantages: Identifies relationships between features in different layers, such as finding areas where land use overlaps with floodplains.

- Disadvantages: Can be computationally intensive for large datasets, requires careful consideration of data alignment and coordinate systems.

Buffering: Creates a zone around a feature or set of features.

- Advantages: Useful for proximity analysis, such as identifying areas within a certain distance of a road or pollution source.

- Disadvantages: Buffers around nearby features can overlap and may need to be dissolved. A simple buffer also uses straight-line distance, ignoring barriers such as rivers or the actual road network.

Proximity Analysis: Measures the distance between spatial features.

- Advantages: Useful for understanding spatial relationships and connectivity, identifying nearest neighbors, or determining service areas.

- Disadvantages: Can be computationally expensive for large datasets, the choice of distance metric affects the results (e.g., Euclidean versus network distance).

General Considerations: Always consider the limitations of your data and the assumptions behind each technique. Visualizing the results is crucial for proper interpretation.
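For the common "select features within X meters of a road" case, you don't need to build the buffer polygon explicitly. A point lies inside a round-capped buffer exactly when its distance to the line is at most the buffer distance. The minimal sketch below assumes planar coordinates in meters; the road and houses are hypothetical. It uses straight-line distance, so it shares the buffer limitation noted above.

```python
import math


def point_segment_distance(px, py, ax, ay, bx, by):
    """Shortest planar distance from point P to segment AB."""
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)  # degenerate segment
    # Project P onto AB, then clamp the projection to the segment's ends.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def within_buffer(points, line, distance):
    """Return the points lying within `distance` of a polyline (list of vertices)."""
    selected = []
    for px, py in points:
        d = min(
            point_segment_distance(px, py, *line[i], *line[i + 1])
            for i in range(len(line) - 1)
        )
        if d <= distance:
            selected.append((px, py))
    return selected


road = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0)]
houses = [(50.0, 30.0), (50.0, 80.0), (130.0, 50.0), (-20.0, 0.0)]
print(within_buffer(houses, road, 40.0))
# [(50.0, 30.0), (130.0, 50.0), (-20.0, 0.0)]
```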

Q 8. Explain the concept of georeferencing.

Georeferencing is the process of assigning real-world coordinates (geographic latitude/longitude or projected x/y) to an image or map that doesn’t already have them. Think of it like adding a location stamp to a photograph: it tells you exactly where the picture was taken. This is crucial because it allows us to integrate the image or map with other spatially referenced data, enabling analysis and visualization within a geographic context. For example, a scanned historical map might lack coordinate information. Georeferencing aligns it with a modern base map using control points, which are identifiable features common to both (like intersections or landmarks), to mathematically transform its coordinates.

The process typically involves identifying control points on both the unreferenced image and a reference map with known coordinates. GIS software then fits a transformation (e.g., affine, polynomial) that maps image coordinates to map coordinates. The accuracy depends on the number, quality, and spatial spread of the control points and on the chosen transformation. It is commonly reported as the root-mean-square (RMS) error at the control points.
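The affine case can be sketched as a least-squares fit. Each map coordinate is modeled as a linear function of pixel column and row: x = a*col + b*row + c and y = d*col + e*row + f. With three or more non-collinear control points, the six parameters can be solved from the normal equations. This is a minimal pure-Python illustration, not how any particular GIS package implements it. The control points describe a hypothetical scan with 2 m pixels, so the fit should recover a = 2, e = -2, and an RMS error of essentially zero.

```python
import math


def _det3(m):
    """Determinant of a 3 x 3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m, v):
    """Solve m @ p = v for p (3 unknowns) using Cramer's rule."""
    d = _det3(m)
    solution = []
    for k in range(3):
        mk = [row[:] for row in m]
        for i in range(3):
            mk[i][k] = v[i]  # replace column k with the right-hand side
        solution.append(_det3(mk) / d)
    return solution


def fit_affine(gcps):
    """Least-squares affine transform from ground control points (GCPs).

    gcps: list of ((col, row), (x, y)) pairs; at least 3, not all collinear.
    Returns ([a, b, c], [d, e, f], rmse) where
        x = a*col + b*row + c  and  y = d*col + e*row + f.
    """
    design = [(c, r, 1.0) for (c, r), _ in gcps]
    # Normal equations (A^T A) p = A^T t, solved once for x and once for y.
    ata = [[sum(row[i] * row[j] for row in design) for j in range(3)] for i in range(3)]
    params = []
    for axis in (0, 1):
        targets = [xy[axis] for _, xy in gcps]
        atb = [sum(row[i] * t for row, t in zip(design, targets)) for i in range(3)]
        params.append(_solve3(ata, atb))
    # Residuals at the control points give an RMS error for the fit.
    sq_err = 0.0
    for (c, r), (x, y) in gcps:
        fx = params[0][0] * c + params[0][1] * r + params[0][2]
        fy = params[1][0] * c + params[1][1] * r + params[1][2]
        sq_err += (fx - x) ** 2 + (fy - y) ** 2
    return params[0], params[1], math.sqrt(sq_err / len(gcps))


# Hypothetical scan: 2 m pixels, upper-left corner at (500000, 4200000).
gcps = [
    ((0, 0), (500000.0, 4200000.0)),
    ((100, 0), (500200.0, 4200000.0)),
    ((0, 100), (500000.0, 4199800.0)),
    ((100, 100), (500200.0, 4199800.0)),
]
x_params, y_params, rmse = fit_affine(gcps)
print(x_params, y_params, rmse)
```

A polynomial transformation adds higher-order terms (col², col·row, and so on) in the same least-squares framework, at the cost of needing more control points.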

Q 9. How do you perform spatial joins?

A spatial join combines attributes from two spatial datasets based on the spatial relationship between their features. Imagine you have a map of cities and another of counties. A spatial join could add the county name to each city point based on which county the city falls within. This process requires defining the type of spatial relationship:

- Intersect: Features from both layers that overlap are joined.

- Contains: A target feature is joined to the join features that it completely contains (e.g., a county joined to the cities inside it).

- Within: A target feature is joined to the join feature that completely contains it (e.g., a city joined to its county). This is the inverse of Contains.

- Nearest: Features are joined based on proximity.

Most GIS software offers tools to perform spatial joins. In ArcGIS, for example, you would use the ‘Spatial Join’ geoprocessing tool. You specify the target and join features, the join operation (one-to-one or one-to-many), the match option (the spatial relationship, such as intersect or within), and the output fields. The specific implementation depends on the software used, but the underlying principle remains the same.
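The cities-to-counties example from above can be sketched with a ray-casting point-in-polygon test as the "within" predicate. This is a minimal illustration with hypothetical square counties and made-up city names. It checks every polygon for every point, whereas libraries such as geopandas (`geopandas.sjoin(..., predicate="within")`) use a spatial index to avoid that. Points lying exactly on a boundary may be assigned to either side.

```python
def point_in_polygon(x, y, ring):
    """Ray-casting test: is (x, y) inside the polygon ring [(x, y), ...]?"""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Does a horizontal ray from (x, y) toward +x cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def spatial_join_within(points, polygons):
    """Attach to each point the name of the first polygon containing it (or None)."""
    joined = []
    for name, (x, y) in points:
        match = next((pname for pname, ring in polygons if point_in_polygon(x, y, ring)), None)
        joined.append((name, match))
    return joined


counties = [
    ("West County", [(0, 0), (10, 0), (10, 10), (0, 10)]),
    ("East County", [(10, 0), (20, 0), (20, 10), (10, 10)]),
]
cities = [("Alton", (3, 4)), ("Brookfield", (15, 2)), ("Carver", (25, 5))]
print(spatial_join_within(cities, counties))
# [('Alton', 'West County'), ('Brookfield', 'East County'), ('Carver', None)]
```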

Q 10. Describe different types of spatial queries.

Spatial queries allow us to retrieve information from spatial datasets based on location or spatial relationships. They are fundamental to many GIS analyses. Common types include:

- Point-in-polygon: Determining if a point falls within a polygon (e.g., finding all houses within a flood zone).

- Polygon-on-polygon: Finding overlaps or intersections between polygons (e.g., identifying areas where land use conflicts arise).

- Buffering: Creating zones around features (e.g., finding all houses within 1km of a school).

- Nearest neighbor: Finding the closest feature to a given feature (e.g., finding the nearest hospital to an accident).

- Spatial selection: Selecting features based on their spatial location relative to other features or a defined area.

These queries are often implemented using SQL statements with spatial functions in databases like PostGIS, or through graphical user interfaces in software like ArcGIS or QGIS. For instance, a simple PostGIS query to find all points within a given polygon might look like: SELECT p.* FROM points AS p JOIN zones AS z ON ST_Contains(z.geom, p.geom) WHERE z.name = 'Flood Zone A'; (the table and column names here are illustrative).
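A nearest-neighbor query such as "nearest hospital to an accident" can be sketched with great-circle distances on longitude/latitude data. This minimal example assumes a spherical Earth (mean radius 6371 km) and hypothetical hospital locations. It scans every candidate, which is fine for a handful of features. For large tables, PostGIS supports index-assisted nearest-neighbor ordering with its `<->` operator.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical approximation


def haversine_km(lon1, lat1, lon2, lat2):
    """Great-circle distance in km between two lon/lat points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest(target, candidates):
    """Return (name, distance_km) of the candidate closest to target (lon, lat)."""
    return min(
        ((name, haversine_km(*target, *loc)) for name, loc in candidates),
        key=lambda item: item[1],
    )


hospitals = [("North", (-0.10, 51.60)), ("Central", (-0.12, 51.51)), ("South", (-0.10, 51.40))]
accident = (-0.13, 51.50)
name, dist = nearest(accident, hospitals)
print(name, round(dist, 2))
```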

Q 11. What are some common challenges in working with large spatial datasets?

Working with large spatial datasets presents significant challenges, primarily related to:

- Storage and retrieval: Large datasets require substantial storage capacity and efficient data structures (e.g., spatial indexes) for quick data retrieval. Relational databases without spatial extensions and indexes might struggle.

- Processing time: Spatial analysis operations on massive datasets can be computationally expensive and time-consuming. Parallel processing and optimized algorithms are crucial.

- Data visualization: Displaying large datasets without compromising performance or clarity requires specialized techniques like simplification, aggregation, or employing tiled map services.

- Data quality and consistency: Ensuring data accuracy and consistency across a large dataset is difficult; inconsistencies can lead to flawed analyses.

Addressing these challenges often involves using cloud-based solutions, distributed processing frameworks (like Spark), and employing techniques like data tiling and generalization to manage size and complexity.
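Simplification, one of the techniques mentioned above, is often done with the Douglas-Peucker algorithm. For example, shapely's `simplify()` is based on it. The minimal pure-Python sketch below recursively keeps the vertex farthest from the line joining the endpoints whenever that vertex deviates by more than a tolerance. The track coordinates are hypothetical, and the tolerance is in the same units as the coordinates.

```python
import math


def perpendicular_distance(p, a, b):
    """Distance from point p to the infinite line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * px - dx * py + bx * ay - by * ax) / math.hypot(dx, dy)


def douglas_peucker(points, tolerance):
    """Simplify a polyline, keeping vertices that deviate more than `tolerance`."""
    if len(points) < 3:
        return list(points)
    # Find the interior vertex farthest from the line joining the endpoints.
    index, max_dist = 0, 0.0
    for i in range(1, len(points) - 1):
        d = perpendicular_distance(points[i], points[0], points[-1])
        if d > max_dist:
            index, max_dist = i, d
    if max_dist <= tolerance:
        return [points[0], points[-1]]  # every interior vertex is close enough
    left = douglas_peucker(points[: index + 1], tolerance)
    right = douglas_peucker(points[index:], tolerance)
    return left[:-1] + right  # drop the split vertex duplicated in both halves


track = [(0, 0), (1, 0.2), (2, -0.1), (3, 0), (3, 3)]
print(douglas_peucker(track, 0.5))  # [(0, 0), (3, 0), (3, 3)]
```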

Q 12. How do you ensure the accuracy and quality of spatial data?

Maintaining the accuracy and quality of spatial data is paramount. This involves several steps throughout the data lifecycle:

- Data acquisition: Using high-precision instruments and reputable data sources.

- Data cleaning: Identifying and correcting errors, inconsistencies, and outliers. This can involve techniques like spatial error detection, attribute checks, and topological validation.

- Data validation: Comparing data with other reliable sources to confirm accuracy. Field verification is often essential.

- Metadata management: Carefully documenting data sources, processing steps, and potential limitations. Clear metadata helps assess data quality and reliability.

- Data transformation and projection: Ensuring all data uses a consistent coordinate system to avoid misalignment and errors during analysis.

Regular audits and quality control procedures are vital to maintain data integrity over time. It’s a continuous effort, not a one-time fix.

Q 13. Explain your experience with different GIS software (e.g., ArcGIS, QGIS, PostGIS).

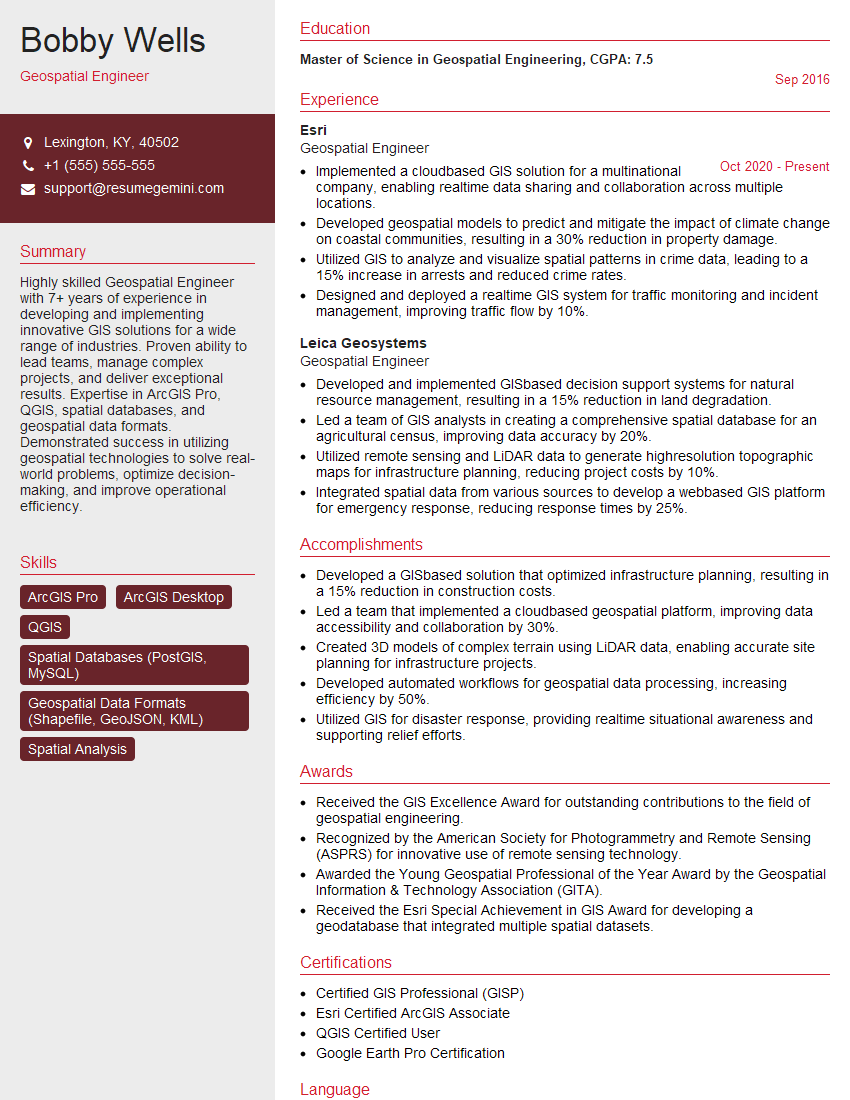

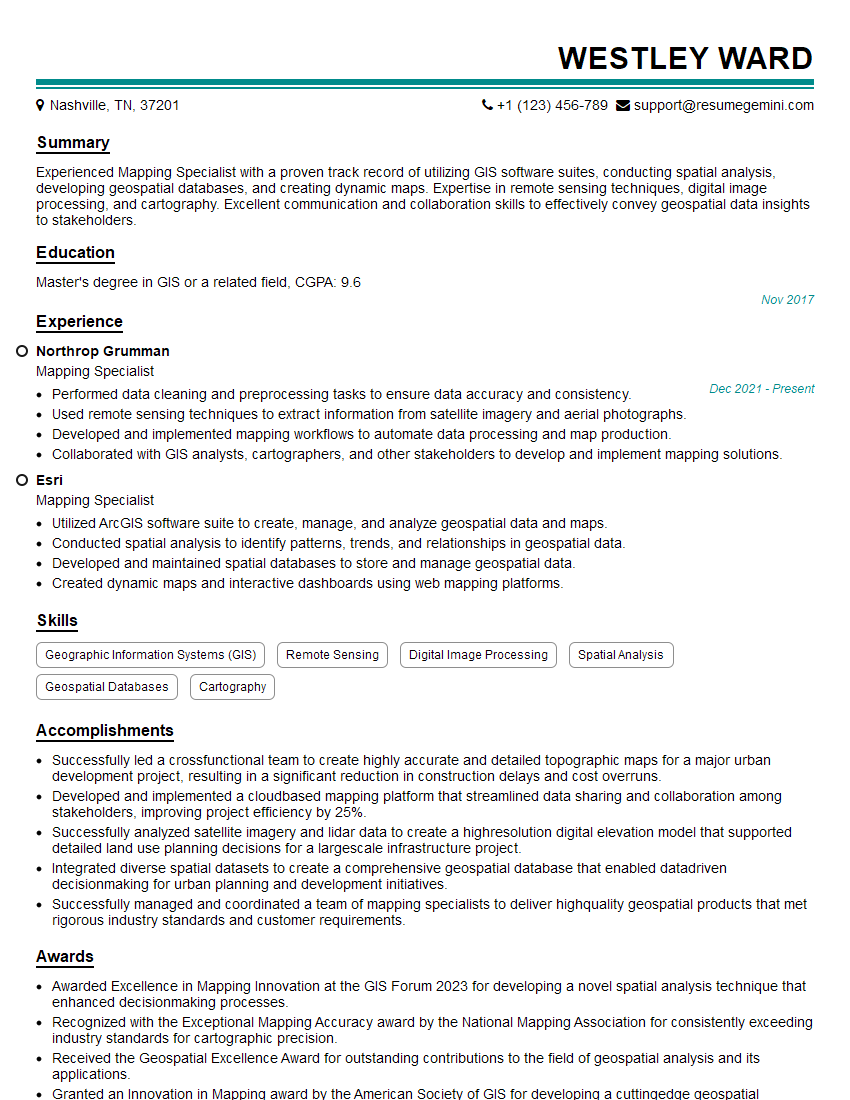

I have extensive experience with various GIS software packages. My work has primarily involved ArcGIS Pro and QGIS, complemented by experience with PostGIS for spatial database management.

ArcGIS Pro: I’m proficient in using its geoprocessing tools for spatial analysis, data management, and map creation. I’ve leveraged its advanced capabilities for tasks such as network analysis, 3D visualization, and geostatistical modeling. A recent project involved using ArcGIS Pro’s spatial analyst tools to model wildfire spread using elevation, wind patterns, and fuel type data.

QGIS: QGIS is my go-to open-source alternative for many tasks. Its flexibility and extensive plugin ecosystem make it valuable for specialized analyses and custom workflows. For example, I utilized QGIS’s processing toolbox and Python scripting capabilities for automating batch geoprocessing tasks on a large set of remotely sensed imagery.

PostGIS: I utilize PostGIS for managing and querying large spatial datasets efficiently. My skills encompass creating spatial indexes, implementing spatial functions within SQL queries, and building robust geospatial database schemas. This allows for efficient storage and retrieval of spatial data, and streamlined integration with other database systems.

Q 14. How do you visualize spatial data effectively?

Effective visualization of spatial data is crucial for communication and insight generation. The key is to choose the right visualization techniques for the specific data and objective. Techniques I frequently employ include:

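- Choropleth maps: Shading predefined areas by an aggregated value (e.g., population density by county). They work best with normalized values such as densities or rates, because raw counts tend to simply highlight the largest areas.

- Dot density maps: Representing a count within each area by dots placed inside it, with each dot standing for a fixed quantity (e.g., one dot per 1,000 residents), to show concentration and distribution.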

- Proportional symbol maps: Using symbols sized proportionally to a data value (e.g., city size based on population).

- Isoline maps: Displaying continuous data using contour lines (e.g., elevation or temperature).

- 3D visualizations: Providing a spatial context, especially useful for terrain modeling or showing building heights.

- Interactive maps: Enabling exploration and data discovery through user interaction (e.g., zoom, pan, selection).

Choosing appropriate color palettes, classification schemes, legends, and map projections is crucial to creating clear and effective visualizations. The goal is always to ensure the map tells a story and supports the intended message.
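The classification scheme behind a choropleth can change the story a map tells, especially for skewed data. The minimal sketch below compares two common schemes, quantile and equal interval, on hypothetical district densities. Other schemes such as natural breaks (Jenks) are not shown. With equal intervals, eight of the ten districts share the lowest class, while quantiles spread them across classes (ties keep the counts from being perfectly equal).

```python
def quantile_breaks(values, classes):
    """Upper boundary of each class so that classes hold roughly equal counts."""
    ordered = sorted(values)
    n = len(ordered)
    return [ordered[max(0, min(n - 1, n * k // classes - 1))] for k in range(1, classes + 1)]


def equal_interval_breaks(values, classes):
    """Upper boundary of each class when the value range is split evenly."""
    lo, hi = min(values), max(values)
    step = (hi - lo) / classes
    return [lo + step * k for k in range(1, classes + 1)]


def class_counts(values, breaks):
    """Count values per class; a value belongs to the first class whose upper bound it does not exceed."""
    counts = [0] * len(breaks)
    for v in values:
        index = next((i for i, upper in enumerate(breaks) if v <= upper), len(breaks) - 1)
        counts[index] += 1
    return counts


# Hypothetical, right-skewed population densities for ten districts.
densities = [1, 2, 2, 3, 4, 5, 8, 13, 40, 120]
q = quantile_breaks(densities, 5)
e = equal_interval_breaks(densities, 5)
print(q, class_counts(densities, q))  # [2, 3, 5, 13, 120] [3, 1, 2, 2, 2]
print(class_counts(densities, e))     # [8, 1, 0, 0, 1]
```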

Q 15. Describe your experience with spatial statistics software (e.g., R, Python with geospatial libraries).

My experience with spatial statistics software is extensive, encompassing both R and Python with its rich geospatial libraries. In R, I’m proficient with packages like sf, spdep, and raster for tasks ranging from data manipulation and spatial regression to point pattern analysis and raster processing. I’ve used sf extensively for its efficient handling of simple features, conducting analyses on everything from crime hotspots to disease outbreaks. spdep has been invaluable for exploring spatial autocorrelation and building spatial models. With raster, I’ve processed and analyzed satellite imagery and elevation data for environmental applications.

In Python, I leverage libraries such as geopandas (which mirrors much of sf’s functionality), shapely for geometric operations, and rasterio for raster data manipulation. I find Python’s flexibility and integration with other scientific computing libraries, particularly scikit-learn for machine learning, very powerful for complex spatial modeling projects. For example, I recently used Python to develop a predictive model for land-use change using satellite imagery and socio-economic data.

Beyond these core libraries, I’m familiar with many other specialized packages depending on the specific needs of the project. This broad experience allows me to choose the most appropriate tools for each task, optimizing efficiency and accuracy.


Q 16. What are some common spatial data errors and how can they be corrected?

Spatial data is prone to several errors, broadly categorized as positional, attribute, and topological errors. Positional errors arise from inaccuracies in the coordinates, stemming from limitations in GPS technology, digitization errors during map creation, or inconsistencies in surveying methods. For example, a slightly mis-located point representing a building could skew density calculations. These are typically corrected by re-surveying, applying differential GPS correction, snapping features to higher-accuracy reference data, or adjusting the dataset against control points (e.g., rubber-sheeting).

Attribute errors involve inaccuracies in the descriptive information associated with spatial features. This might include incorrect values in a database, missing information, or inconsistent data types. Data cleaning and validation processes are crucial, including checking for outliers, inconsistencies, and data plausibility.

Topological errors concern inconsistencies in how spatial features relate to each other, for instance overlapping polygons, gaps between adjacent polygons, self-intersecting boundaries, or dangling lines. These errors can disrupt spatial analysis. Software tools often include functions for detecting and correcting them. For example, QGIS offers the Check Validity and Fix Geometries tools and the Topology Checker plugin, and ArcGIS offers the Check Geometry and Repair Geometry tools as well as topology rules.

Correcting these errors often requires a multi-step approach, combining automated tools with manual inspection and validation. Careful data handling and quality control throughout the data lifecycle are essential to minimizing errors and improving the reliability of spatial analyses.
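Two of the simplest automated topology checks are verifying that a polygon ring is closed and that its edges do not cross each other (a "bow-tie" polygon). The minimal sketch below is a hypothetical helper, not how QGIS or ArcGIS implement their validity checks. It only detects proper crossings, so collinear overlapping edges and gaps between neighboring polygons would need additional tests.

```python
def segments_cross(p1, p2, q1, q2):
    """True if segments p1-p2 and q1-q2 properly cross (shared endpoints excluded)."""
    def orient(a, b, c):
        value = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
        return (value > 0) - (value < 0)  # +1 left turn, -1 right turn, 0 collinear

    d1, d2 = orient(q1, q2, p1), orient(q1, q2, p2)
    d3, d4 = orient(p1, p2, q1), orient(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0


def ring_problems(ring):
    """List simple topology problems in a polygon ring given as [(x, y), ...]."""
    problems = []
    if ring[0] != ring[-1]:
        problems.append("ring is not closed")
        ring = ring + [ring[0]]  # close it so the edge checks can still run
    edges = list(zip(ring[:-1], ring[1:]))
    for i in range(len(edges)):
        for j in range(i + 2, len(edges)):  # skip adjacent edges
            if i == 0 and j == len(edges) - 1:
                continue  # first and last edges share the closing vertex
            if segments_cross(*edges[i], *edges[j]):
                problems.append(f"edges {i} and {j} cross")
    return problems


square = [(0, 0), (4, 0), (4, 4), (0, 4), (0, 0)]
bowtie = [(0, 0), (4, 4), (4, 0), (0, 4), (0, 0)]  # self-intersecting
open_ring = [(0, 0), (4, 0), (4, 4), (0, 4)]
print(ring_problems(square))     # []
print(ring_problems(bowtie))     # ['edges 0 and 2 cross']
print(ring_problems(open_ring))  # ['ring is not closed']
```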

Q 17. Explain the concept of spatial indexing.

Spatial indexing is a technique used to speed up spatial queries and searches within large spatial datasets. Imagine searching for all houses within a 1km radius of a specific point. Checking every single house record would be computationally expensive, especially with millions of records. Spatial indexes avoid this by organizing features by location. R-trees and quadtrees build hierarchical structures of nested bounding boxes or cells, while grid indexes bucket features into fixed cells. Either way, a query only examines candidates in the relevant part of space and then applies the exact geometric test to those candidates.

Think of it like a library’s card catalog. Instead of searching through every single book, the catalog organizes books by subject, author, and title, allowing for quick access to the desired book. Similarly, spatial indexing structures organize spatial data in a way that allows for quick retrieval of data within a specified region or proximity to a specific point.

The choice of indexing structure depends on the characteristics of the spatial data and the types of queries performed. R-trees are widely used due to their ability to handle complex geometries and varied data densities. Using an appropriate spatial index significantly reduces query times and improves the overall performance of spatial applications, which is especially important in real-time applications or when dealing with very large datasets.
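The simplest structure mentioned above, a uniform grid index, shows the core idea in a few lines. Points are bucketed by cell, and a radius query only inspects the cells overlapping the query's bounding box before applying the exact distance test. This is a minimal in-memory sketch with a hypothetical 1 km cell size and planar coordinates in meters. Production systems typically use R-tree-style indexes instead; PostGIS, for example, builds them with GiST.

```python
import math
from collections import defaultdict


class GridIndex:
    """Uniform grid index for points: each point is stored in the cell it falls in."""

    def __init__(self, cell_size):
        self.cell_size = cell_size
        self.cells = defaultdict(list)

    def _cell(self, x, y):
        return (math.floor(x / self.cell_size), math.floor(y / self.cell_size))

    def insert(self, item_id, x, y):
        self.cells[self._cell(x, y)].append((item_id, x, y))

    def query_radius(self, x, y, radius):
        """Return ids of points within `radius` of (x, y)."""
        min_cx, min_cy = self._cell(x - radius, y - radius)
        max_cx, max_cy = self._cell(x + radius, y + radius)
        found = []
        for cx in range(min_cx, max_cx + 1):
            for cy in range(min_cy, max_cy + 1):
                # Only points in nearby cells are candidates for the exact test.
                for item_id, px, py in self.cells.get((cx, cy), []):
                    if math.hypot(px - x, py - y) <= radius:
                        found.append(item_id)
        return found


index = GridIndex(cell_size=1000.0)  # hypothetical 1 km cells, planar meters
houses = {"h1": (200.0, 300.0), "h2": (900.0, 950.0), "h3": (5200.0, 4100.0)}
for house_id, (hx, hy) in houses.items():
    index.insert(house_id, hx, hy)
print(sorted(index.query_radius(500.0, 500.0, 1000.0)))  # ['h1', 'h2']
```

The cell size is a tuning choice: cells much smaller than typical query radii mean many empty cells to visit, while very large cells put too many candidates in each bucket.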

Q 18. How do you handle coordinate systems and datum transformations?

Coordinate systems and datum transformations are critical for ensuring that spatial data from different sources can be accurately combined and analyzed. A coordinate reference system (CRS) defines how locations on the Earth’s surface are expressed as coordinates. A geographic CRS uses angular latitude/longitude, and a projected CRS uses planar x/y, optionally with z. A datum, on the other hand, ties those coordinates to a model of the Earth: a reference ellipsoid plus its position and orientation. Different datums exist because the Earth is neither a perfect sphere nor a perfect ellipsoid. Each datum is fitted either to the whole globe (e.g., WGS 84) or to a particular region (e.g., NAD27 for North America), and vertical datums additionally reference the geoid, a surface defined by the Earth’s gravity field. Using inconsistent coordinate systems or datums can lead to significant positional errors.

I handle these transformations using GIS software or programming libraries that provide tools for coordinate system conversions. In R, for example, the sf package converts between coordinate reference systems with the st_transform() function. In Python, geopandas provides the equivalent GeoDataFrame.to_crs() method. For instance, converting data from a geographic coordinate system (like WGS 84) to a projected coordinate system (like UTM) requires specifying the target CRS using its EPSG code (e.g., EPSG:32633 for UTM Zone 33N). The software handles the mathematical transformations necessary to accurately relocate the points from one system to another.

Understanding the implications of different datums is also crucial. Mixing datums can shift positions by tens of meters or more; NAD27 and NAD83 coordinates for the same point in North America can differ by that much. Such a shift is negligible on a world map but serious for detailed, large-scale work such as parcel mapping or engineering. Proper transformation is therefore not just about changing coordinate systems but about ensuring that all data refers to the same Earth model.
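To show what a projection step computes, here is the forward formula for spherical Web Mercator (EPSG:3857), one of the simplest projections. This is an illustrative sketch only. It performs a projection, not a datum transformation, and real workflows should rely on a maintained library built on PROJ (such as sf::st_transform() in R or pyproj in Python) rather than hand-written formulas.

```python
import math

# Spherical Web Mercator (EPSG:3857) uses the WGS 84 semi-major axis as a sphere radius.
WEB_MERCATOR_RADIUS = 6378137.0


def lonlat_to_web_mercator(lon_deg, lat_deg):
    """Project WGS 84 longitude/latitude in degrees to EPSG:3857 x/y in meters.

    y grows without bound toward the poles, which is why web maps cut off
    at roughly +/-85 degrees latitude.
    """
    x = WEB_MERCATOR_RADIUS * math.radians(lon_deg)
    y = WEB_MERCATOR_RADIUS * math.log(math.tan(math.pi / 4 + math.radians(lat_deg) / 2))
    return x, y


print(lonlat_to_web_mercator(180.0, 0.0))  # x is half the sphere's circumference
print(lonlat_to_web_mercator(0.0, 60.0))
```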

Q 19. Describe your experience with spatial modeling techniques.

My experience with spatial modeling techniques includes a wide range of approaches, tailored to the specific research question and data characteristics. I’ve extensively worked with spatial regression models, such as geographically weighted regression (GWR) and spatial autoregressive models (SAR), to understand the spatial relationships between variables. For example, I used GWR to model the influence of proximity to green spaces on property values, revealing that this relationship varied across a city.

I’m also experienced in point pattern analysis, using techniques like Ripley’s K-function to assess spatial clustering or randomness in point data. For instance, I analyzed crime incident data to identify spatial hotspots requiring targeted police interventions. Moreover, I’ve applied interpolation methods like kriging and inverse distance weighting (IDW) to estimate values at unsampled locations based on known data points. This is crucial for creating continuous surfaces from point data, such as interpolating rainfall measurements across a region.

Furthermore, I have experience with spatial statistical simulations to generate spatial random fields, allowing the comparison of observed patterns with expected patterns under certain assumptions. Finally, I am increasingly using machine learning techniques, integrating spatial information as predictor variables, for applications such as land-cover classification and prediction of environmental variables.
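Ripley's K, mentioned above, counts how many other points lie within distance r of each point, scaled by study-area size. Under complete spatial randomness (CSR), K(r) is close to πr², and values well above that suggest clustering at that distance. The naive estimator below is a sketch that applies no edge correction, so for random points it runs somewhat low near the study-area boundary. Tools such as R's spatstat provide edge-corrected estimates. The unit-square study area, random seed, and clustered points are hypothetical.

```python
import math
import random


def ripley_k(points, r, area):
    """Naive Ripley's K estimate at distance r, with no edge correction.

    K(r) = area / (n * (n - 1)) * (number of ordered pairs i != j
    whose distance is <= r).
    """
    n = len(points)
    pairs = sum(
        1
        for i in range(n)
        for j in range(n)
        if i != j and math.dist(points[i], points[j]) <= r
    )
    return area * pairs / (n * (n - 1))


r = 0.1
area = 1.0  # hypothetical unit-square study area
rng = random.Random(42)
random_pts = [(rng.random(), rng.random()) for _ in range(200)]
clustered_pts = [(0.5 + 0.01 * i, 0.5) for i in range(10)]

print("CSR expectation:", math.pi * r ** 2)
print("random:", ripley_k(random_pts, r, area))        # roughly the CSR value
print("clustered:", ripley_k(clustered_pts, r, area))  # 1.0, far above CSR
```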

Q 20. What are the ethical considerations when working with spatial data?

Ethical considerations when working with spatial data are paramount. The most important aspects revolve around privacy, data security, and representation. Spatial data often contains sensitive information that can be used to identify individuals or reveal private locations. For example, even aggregated crime data can occasionally reveal the location of a sensitive incident, such as when an area contains only one or two cases. Therefore, anonymization and aggregation techniques must be designed carefully to protect privacy.

Data security is also crucial, ensuring that data is protected from unauthorized access, modification, or disclosure. This involves appropriate access controls, data encryption, and secure data storage practices. Finally, spatial data often reflects existing social and environmental inequalities, and we must be mindful of avoiding biased representations and interpretations. Misuse of spatial data can reinforce existing biases and inequalities. Thorough consideration should be given to the potential impact on marginalized communities.

Openly communicating the limitations of the data and analysis, as well as the potential biases, is a critical part of ethical conduct. Transparency in data sources, methods, and conclusions builds trust and strengthens the integrity of spatial research and applications. Moreover, careful consideration of the potential impacts of my work on various stakeholders is essential to ensuring responsible use of this powerful information.

Q 21. How do you address scale and resolution issues in spatial analysis?

Scale and resolution are fundamental aspects of spatial analysis that significantly impact the results. Scale refers to the spatial extent of the analysis, while resolution refers to the level of detail captured in the data. These two are intertwined and must be carefully considered. Analyzing crime data at a city-wide scale will yield different results compared to an analysis at the neighborhood level.

Addressing scale issues involves choosing the appropriate scale for the research question. For example, if we’re interested in understanding regional variations in land use, a large study extent analyzed at a coarser resolution may suffice. Studying local environmental impacts, however, calls for finer resolution, usually over a smaller extent. This often necessitates data aggregation or disaggregation, with careful consideration of potential information loss or spurious patterns. Results from aggregated areal data can change with the size and boundaries of the zones used, a problem known as the modifiable areal unit problem (MAUP).

Resolution challenges relate to the level of detail in the spatial data. High-resolution data provides more detail but can be more computationally intensive and costly to acquire and process. Low-resolution data is cheaper but might miss important local variations. Choosing the appropriate resolution involves a trade-off between detail and practicality. Data resampling techniques, like aggregation or interpolation, can be used to adjust resolution, but again, it is important to be aware of the potential for introducing biases or errors.

Sensitivity analysis, exploring how results vary with changes in scale and resolution, is important to understand the robustness and limitations of conclusions drawn from spatial analysis. The choice of scale and resolution should always be explicitly justified in the context of the research questions and data limitations.
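Aggregation to a coarser resolution, one of the resampling options above, can be sketched as block averaging. This minimal example assumes a hypothetical 4 x 4 forest/cleared indicator grid at 10 m. At 20 m, the small cleared patch survives only as a fractional value (0.25). At 40 m, the whole area reads as a single value of about 56% forest, which is a simple illustration of information lost when resolution changes.

```python
def aggregate_mean(grid, factor):
    """Coarsen a raster (list of rows) by averaging factor x factor blocks.

    Assumes the grid's height and width are exact multiples of factor.
    """
    rows, cols = len(grid), len(grid[0])
    if rows % factor or cols % factor:
        raise ValueError("grid dimensions must be multiples of factor")
    coarse = []
    for r in range(0, rows, factor):
        coarse_row = []
        for c in range(0, cols, factor):
            block = [grid[i][j] for i in range(r, r + factor) for j in range(c, c + factor)]
            coarse_row.append(sum(block) / len(block))
        coarse.append(coarse_row)
    return coarse


# Hypothetical land-cover indicator (1 = forest, 0 = cleared) at 10 m cells.
fine = [
    [1, 1, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
print(aggregate_mean(fine, 2))  # [[1.0, 0.25], [0.0, 1.0]]
print(aggregate_mean(fine, 4))  # [[0.5625]]
```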

Q 22. Explain your experience with remote sensing data and its applications.

Remote sensing involves acquiring information about the Earth’s surface without making physical contact. I have extensive experience working with various remote sensing datasets, including satellite imagery (Landsat, Sentinel, MODIS) and aerial photography. My work has spanned diverse applications. For instance, I used Landsat imagery to monitor deforestation in the Amazon rainforest, analyzing changes in land cover over several decades using techniques like image classification and change detection. This involved pre-processing the imagery (atmospheric correction, geometric correction), classifying land cover types using supervised methods like support vector machines (SVMs) or random forests, and then quantifying the changes in forest cover area over time. In another project, I leveraged high-resolution aerial photography to create detailed 3D models of urban areas, which were then used for urban planning and infrastructure management. This involved photogrammetry techniques to generate point clouds and mesh models, allowing for precise measurements and analysis of buildings and streets.

- Application 1: Precision agriculture – analyzing multispectral imagery to assess crop health and optimize irrigation.

- Application 2: Disaster response – using satellite imagery to assess damage after natural disasters like floods or earthquakes.
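For the crop-health application above, a standard starting point is the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red). Healthy vegetation reflects strongly in near-infrared and absorbs red light, so it scores closer to 1. The minimal sketch below works on plain nested lists. The reflectance values are hypothetical, and in real imagery the band numbers depend on the sensor (for example, B8 and B4 for Sentinel-2, and B5 and B4 for Landsat 8 OLI).

```python
def ndvi(nir, red):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red).

    nir and red are same-shaped lists of rows of reflectance values.
    Pixels where NIR + Red == 0 are returned as None.
    """
    result = []
    for nir_row, red_row in zip(nir, red):
        row = []
        for n, r in zip(nir_row, red_row):
            total = n + r
            row.append((n - r) / total if total != 0 else None)
        result.append(row)
    return result


# Hypothetical surface reflectance for a 2 x 2 patch of a field.
nir = [[0.50, 0.45], [0.30, 0.0]]
red = [[0.08, 0.10], [0.25, 0.0]]
result = ndvi(nir, red)
for row in result:
    print([None if v is None else round(v, 2) for v in row])
```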

Q 23. How do you perform spatial regression analysis?

Spatial regression analysis is used to model the relationship between a dependent variable and one or more independent variables, while accounting for the spatial dependence between observations. Unlike traditional regression, it acknowledges that nearby locations are often more similar than distant ones. I typically use tools like GeoDa or R packages such as spdep and spatialreg to perform these analyses. The process often begins with exploring spatial autocorrelation using Moran’s I statistic to determine if spatial dependence is present and needs to be accounted for. Then, I choose the appropriate spatial regression model, such as spatial lag or spatial error models. The selection depends on whether the spatial dependence is in the dependent or independent variables. For example, in a study on housing prices, I might use spatial lag model to consider the influence of neighboring house prices on a particular house’s value. Model diagnostics are crucial to assess model fit and identify any potential problems, including spatial heteroskedasticity.

# Example R code snippet (illustrative; assumes your_data is an sf polygon layer):
library(spdep); library(spatialreg)
lw <- nb2listw(poly2nb(your_data), style = "W")  # contiguity neighbours -> row-standardized weights
moran.test(your_data$dependent_variable, lw)

Q 24. Describe your experience with creating interactive maps and dashboards.

I possess significant experience in crafting interactive maps and dashboards using various tools, including ArcGIS Online, QGIS, Tableau, and Power BI. My focus is always on user experience and ensuring the information is presented clearly and effectively. For example, I developed an interactive dashboard for a city’s public works department to visualize real-time traffic flow, allowing them to identify congestion hotspots and optimize traffic management strategies. This involved integrating data from various sources, including GPS trackers and traffic sensors. Another project involved creating an interactive map to showcase the distribution of different types of businesses in a specific region, enabling users to filter by business type, size, and other relevant parameters. The key is to design for clarity and intuitive interaction, using effective symbology, legends, and tooltips to maximize understanding.

- Key Considerations: User needs, data visualization best practices, platform capabilities, and accessibility.
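Many web-mapping libraries, such as Leaflet, can consume GeoJSON directly, so one way to back a filter like the business-type map described above is to build a GeoJSON FeatureCollection whose feature properties double as tooltip content and filter fields. The sketch below assumes hypothetical business records with `name`, `type`, `employees`, `lon`, and `lat` fields; it only prepares the data and does not render a map.

```python
import json

def to_feature_collection(records, business_type=None, min_employees=0):
    """Build a GeoJSON FeatureCollection, optionally filtered by type and size."""
    features = []
    for rec in records:
        if business_type is not None and rec["type"] != business_type:
            continue
        if rec["employees"] < min_employees:
            continue
        features.append({
            "type": "Feature",
            # GeoJSON (RFC 7946) orders coordinates as [longitude, latitude].
            "geometry": {"type": "Point", "coordinates": [rec["lon"], rec["lat"]]},
            # Properties drive tooltips and client-side filtering in the web map.
            "properties": {k: rec[k] for k in ("name", "type", "employees")},
        })
    return {"type": "FeatureCollection", "features": features}

businesses = [  # hypothetical sample records
    {"name": "Corner Cafe", "type": "food", "employees": 6, "lon": -0.1276, "lat": 51.5072},
    {"name": "Byte Repairs", "type": "retail", "employees": 3, "lon": -0.1200, "lat": 51.5100},
    {"name": "Noodle Hall", "type": "food", "employees": 25, "lon": -0.1300, "lat": 51.5050},
]

geojson_text = json.dumps(
    to_feature_collection(businesses, business_type="food", min_employees=10)
)
```

Filtering server-side like this keeps payloads small; for small datasets, sending everything and filtering in the browser is an equally reasonable design.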

Q 25. Explain your experience with spatial databases.

Spatial databases are crucial for efficiently storing and managing geospatial data. I’m proficient with various spatial database management systems (DBMS), including PostGIS (an extension to PostgreSQL) and Oracle Spatial. My experience encompasses designing and implementing spatial databases, optimizing queries for performance, and ensuring data integrity. For example, in a project involving a large-scale transportation network, I used PostGIS to store the network data, including road segments, intersections, and points of interest. This allowed for efficient querying and analysis of the network, including route planning and network analysis. The choice of spatial database depends on factors such as data volume, complexity, performance requirements, and budget. Understanding spatial indexing techniques (like R-trees and quadtrees) is also critical for optimizing query performance.
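To show why spatial indexing speeds up queries, here is a minimal point quadtree supporting rectangular range queries: whole branches whose bounds do not overlap the query box are skipped. It is an illustrative sketch only; PostGIS builds R-tree-style indexes through GiST rather than a structure like this, and the node capacity of 4 and maximum depth of 16 are arbitrary assumptions.

```python
class QuadTree:
    """Point quadtree over a bounding box (xmin, ymin, xmax, ymax)."""

    def __init__(self, bounds, capacity=4, depth=0, max_depth=16):
        self.bounds = bounds
        self.capacity = capacity    # points held before a node splits (assumed value)
        self.depth = depth
        self.max_depth = max_depth  # stops endless splitting on duplicate points
        self.points = []
        self.children = None        # four sub-quadrants once split

    @staticmethod
    def _contains(box, x, y):
        xmin, ymin, xmax, ymax = box
        return xmin <= x <= xmax and ymin <= y <= ymax

    def insert(self, x, y):
        if not self._contains(self.bounds, x, y):
            return False
        if self.children is None:
            if len(self.points) < self.capacity or self.depth >= self.max_depth:
                self.points.append((x, y))
                return True
            self._split()
        # any() stops at the first child that accepts the point, so it is stored once.
        return any(child.insert(x, y) for child in self.children)

    def _split(self):
        xmin, ymin, xmax, ymax = self.bounds
        xm, ym = (xmin + xmax) / 2, (ymin + ymax) / 2
        self.children = [
            QuadTree(box, self.capacity, self.depth + 1, self.max_depth)
            for box in ((xmin, ymin, xm, ym), (xm, ymin, xmax, ym),
                        (xmin, ym, xm, ymax), (xm, ym, xmax, ymax))
        ]
        for px, py in self.points:  # push existing points down to the children
            for child in self.children:
                if child.insert(px, py):
                    break
        self.points = []

    def query(self, box, found=None):
        """Collect points inside box, pruning nodes whose bounds do not overlap it."""
        found = [] if found is None else found
        qxmin, qymin, qxmax, qymax = box
        xmin, ymin, xmax, ymax = self.bounds
        if qxmax < xmin or qxmin > xmax or qymax < ymin or qymin > ymax:
            return found
        found.extend(p for p in self.points if self._contains(box, *p))
        if self.children is not None:
            for child in self.children:
                child.query(box, found)
        return found
```

In a database the same idea is applied to bounding boxes of arbitrary geometries, with an exact geometry test run only on the candidates the index returns.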

Q 26. How do you use spatial data to solve real-world problems?

Spatial data analysis is invaluable for solving diverse real-world problems. For example, I used spatial interpolation techniques (like kriging) to estimate air pollution levels across a city, based on measurements from a limited number of monitoring stations. This information helped public health officials identify pollution hotspots and implement targeted mitigation strategies. In another instance, I used spatial statistics to analyze crime patterns, identifying crime clusters and informing law enforcement resource allocation decisions. The process often involves understanding the underlying spatial processes, selecting appropriate analytical methods, and effectively communicating findings through maps and reports.
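The kriging step mentioned above can be sketched as ordinary kriging with a fixed exponential semivariogram, γ(h) = sill · (1 - exp(-h / range)), with no nugget. In practice the semivariogram is fitted to the monitoring data first (for example with gstat in R or PyKrige in Python); here the sill and range values are assumptions, and the kriging system is solved with a small Gaussian-elimination routine to keep the sketch dependency-free.

```python
import math

def exp_semivariogram(h, sill=1.0, rng=1000.0):
    """Exponential semivariogram with zero nugget (sill and range are assumptions)."""
    return sill * (1.0 - math.exp(-h / rng))

def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def ordinary_kriging(stations, target, gamma=exp_semivariogram):
    """Estimate the value at target from stations given as [(x, y, value), ...]."""
    def dist(p, q):
        return math.hypot(p[0] - q[0], p[1] - q[1])

    n = len(stations)
    # Semivariances between stations, bordered by the unbiasedness
    # constraint (weights sum to 1) enforced through a Lagrange multiplier.
    a = [[gamma(dist(si, sj)) for sj in stations] + [1.0] for si in stations]
    a.append([1.0] * n + [0.0])
    b = [gamma(dist(s, target)) for s in stations] + [1.0]
    weights = solve(a, b)[:n]
    estimate = sum(w * s[2] for w, s in zip(weights, stations))
    return estimate, weights
```

Evaluating `ordinary_kriging` over a grid of target points produces the interpolated surface; with a zero nugget the method is an exact interpolator, returning each station’s own reading at its location.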

- Example: Optimizing delivery routes using network analysis, identifying optimal locations for new facilities using spatial optimization techniques.
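Route optimization on a road network usually builds on shortest-path search. Below is a compact Dijkstra’s algorithm over a hypothetical weighted graph of intersections, with edge weights standing for travel times in minutes. Real delivery problems with many stops add a vehicle-routing layer on top of repeated shortest-path queries, which this sketch does not attempt.

```python
import heapq

def shortest_path(graph, source, target):
    """Dijkstra's algorithm; graph maps node -> {neighbour: non-negative cost}."""
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale heap entry
        visited.add(node)
        if node == target:
            break
        for nbr, cost in graph.get(node, {}).items():
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if target not in dist:
        return float("inf"), []
    # Walk predecessors back from the target to rebuild the route.
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[target], path[::-1]

# Hypothetical road network: intersections A-E, costs in minutes.
roads = {
    "A": {"B": 4, "C": 2},
    "B": {"D": 5},
    "C": {"B": 1, "D": 8, "E": 10},
    "D": {"E": 2},
    "E": {},
}
```

Here `shortest_path(roads, "A", "E")` returns a cost of 10 via A, C, B, D, E, which beats the more direct-looking A, C, E (12 minutes).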

Q 27. Describe your experience with cloud-based GIS platforms (e.g., AWS, Azure).

I have experience working with cloud-based GIS platforms like AWS (Amazon Web Services) and Azure (Microsoft Azure). This involves using services like Amazon S3 for data storage, Amazon EC2 for processing, and the geoprocessing tools available on these platforms. The advantages are scalability, cost-effectiveness (a pay-as-you-go model), and access to powerful computing resources for large-scale spatial analyses. For instance, I processed and analyzed terabytes of satellite imagery using cloud-based computing resources, which would have been impractical on a local machine. This work requires an understanding of data management in cloud environments, data security, and efficient management of computational resources.

Q 28. What are some future trends in spatial data analysis and visualization?

The field of spatial data analysis and visualization is rapidly evolving. Some key trends include:

- Increased use of AI and machine learning: AI/ML algorithms are being integrated into spatial analysis workflows for tasks like object detection in imagery, predictive modeling, and anomaly detection.

- Big data analytics: Handling and analyzing massive geospatial datasets is becoming increasingly important, requiring specialized techniques and cloud-based infrastructure.

- 3D spatial analysis: The use of 3D models and analysis techniques is growing rapidly, especially in areas like urban planning and environmental modeling.

- Enhanced visualization techniques: New methods are continuously emerging to represent complex spatial data more effectively and interactively, improving communication and understanding.

- Integration of spatial data with other data types: Combining spatial data with other data sources (e.g., social media, sensor data) is leading to richer insights and new possibilities for applications.

Key Topics to Learn for Spatial Data Analysis and Visualization Interview

- Spatial Data Structures: Understanding various data structures like vector (points, lines, polygons) and raster data, their strengths and weaknesses, and appropriate selection for different analyses.

- Geospatial Data Wrangling: Mastering data cleaning, transformation, and projection techniques using tools like QGIS, ArcGIS, or Python libraries (e.g., GeoPandas, Shapely).

- Spatial Analysis Techniques: Familiarize yourself with methods like spatial autocorrelation (e.g., Moran’s I), spatial interpolation (e.g., Kriging), proximity analysis, overlay analysis, and network analysis.

- Spatial Statistics: Grasping fundamental statistical concepts within a spatial context, including understanding spatial randomness and pattern detection.

- Geographic Information Systems (GIS) Software: Demonstrating proficiency in at least one major GIS software package (ArcGIS, QGIS) including data management, analysis, and map creation.

- Data Visualization: Creating effective and informative maps and charts to communicate spatial patterns and insights. This includes understanding map design principles and choosing appropriate visualization techniques for different datasets and audiences.

- Cartography and Map Design: Understanding map projections, symbolization, and layout principles for creating clear maps that communicate spatial information effectively.

- Programming for Spatial Analysis: Familiarity with programming languages like Python (with relevant libraries) for automating spatial analysis tasks and developing custom solutions.

- Remote Sensing and Image Analysis: Understanding the principles of remote sensing and how imagery can be used for spatial analysis (optional, depending on the specific role).

- Problem-Solving Approach: Practice articulating your thought process when tackling spatial analysis problems. Focus on clearly defining the problem, selecting appropriate methods, interpreting results, and communicating findings.
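Two of the techniques listed above, overlay analysis and proximity analysis, can be illustrated with plain Python: a ray-casting point-in-polygon test (the core of a points-in-polygon overlay) and a haversine great-circle distance for a radius search. This is a simplified sketch assuming a single polygon without holes and a spherical Earth with a mean radius of 6,371 km; in practice, libraries such as Shapely and GeoPandas handle these operations more robustly.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical approximation

def point_in_polygon(x, y, polygon):
    """Ray casting: count edge crossings of a ray cast rightwards from (x, y)."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through y?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def haversine_km(lon1, lat1, lon2, lat2):
    """Great-circle distance between two lon/lat points in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def within_radius(points, center, radius_km):
    """Proximity query: (lon, lat) points within radius_km of center."""
    return [p for p in points
            if haversine_km(center[0], center[1], p[0], p[1]) <= radius_km]
```

As a sanity check, one degree of longitude at the equator comes out at roughly 111.2 km.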

Next Steps

Mastering Spatial Data Analysis and Visualization opens doors to exciting and rewarding careers in various fields, including urban planning, environmental science, public health, and market research. To maximize your job prospects, crafting a strong, ATS-friendly resume is crucial. This ensures your application gets noticed by recruiters and hiring managers. ResumeGemini is a trusted resource to help you build a professional and impactful resume. They provide examples of resumes tailored to Spatial Data Analysis and Visualization roles to help you get started. Invest the time in creating a resume that showcases your skills and experience effectively – it’s a key step in your job search.
