Are you ready to stand out in your next interview? Preparing for interview questions on the use of design and simulation software can make a real difference. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Use of Design and Simulation Software Interview

Q 1. Explain the difference between FEA and CFD.

FEA (Finite Element Analysis) and CFD (Computational Fluid Dynamics) are both powerful simulation techniques used in engineering, but they address different physical phenomena. FEA is primarily used to analyze structural mechanics problems, such as stress, strain, and deflection in solid objects under load. Think of it like virtually testing the strength of a bridge under traffic. CFD, on the other hand, focuses on fluid flow, analyzing parameters like pressure, velocity, and temperature in liquids and gases. Imagine simulating the airflow around an airplane wing.

The key difference lies in the governing equations: FEA solves the equations of solid mechanics (e.g., equilibrium equations), while CFD solves the Navier-Stokes equations, which describe fluid motion. While they are distinct, they can be coupled in some applications, such as analyzing the fluid-structure interaction of a dam during a flood.

Q 2. What are the limitations of Finite Element Analysis?

While FEA is incredibly powerful, it does have limitations. One significant limitation is the reliance on model assumptions. Simplifying complex geometries or material properties can introduce inaccuracies. For example, assuming a perfectly linear elastic material might not accurately reflect the behavior of a component under high stress.

Another limitation is mesh dependency. The accuracy of the results is highly sensitive to the quality and density of the mesh used to discretize the geometry. A mesh that is too coarse or poorly shaped can lead to inaccurate or even completely wrong results. At the same time, the computational cost increases drastically with mesh refinement, sometimes making very detailed simulations impractical.

Finally, highly non-linear phenomena such as large deformations or material failure are difficult for standard FEA: they call for advanced modeling techniques and can require significant computational resources.

Q 3. Describe your experience with meshing techniques.

My experience encompasses a wide range of meshing techniques, from structured to unstructured meshes, and I’m proficient in both manual and automated mesh generation. I’ve used structured meshes, which are easy to generate but are best suited for simple geometries, in applications like analyzing heat transfer in a rectangular heat sink.

For more complex geometries, like a turbine blade or a car chassis, unstructured meshes offer greater flexibility and accuracy. I’ve extensively used unstructured meshing techniques, including tetrahedral and hexahedral elements. The choice of element type depends on the specific application and desired accuracy. For instance, hexahedral elements often provide better accuracy for stress analysis than tetrahedral elements, but are more challenging to generate for complex geometries.

Furthermore, I understand the importance of mesh refinement in critical areas where high gradients in stress or flow are expected. Adaptive mesh refinement techniques allow for automated refinement in these regions, ensuring solution accuracy without unnecessarily increasing computational cost. I’ve applied these techniques in various projects to optimize computational efficiency and achieve reliable results.

Q 4. How do you validate simulation results?

Validation of simulation results is crucial to ensure their reliability. This involves comparing the simulation predictions to experimental data or established theoretical solutions. For example, if I’m simulating the stress on a component, I might compare my FEA results to the results obtained from a physical tensile test on a prototype.

Several techniques are used for validation. Direct comparison of key parameters like stress, strain, or flow velocity is often the first step. A quantitative measure, such as a percentage difference or R-squared value, is used to assess the level of agreement. If the differences are significant, investigating potential sources of error in the model (boundary conditions, material properties, mesh quality) is necessary.
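
The two agreement measures named above can be computed in a few lines. This is a minimal sketch: the function names and the stress values are hypothetical and only illustrate the arithmetic.

```python
def percent_difference(simulated, measured):
    """Relative difference of a simulated value from a measured one, in percent."""
    return 100.0 * (simulated - measured) / measured


def r_squared(simulated, measured):
    """Coefficient of determination of simulated values against measurements."""
    mean_measured = sum(measured) / len(measured)
    ss_res = sum((m - s) ** 2 for s, m in zip(simulated, measured))
    ss_tot = sum((m - mean_measured) ** 2 for m in measured)
    return 1.0 - ss_res / ss_tot


# Hypothetical measurements and FEA predictions at the same locations (MPa).
measured_stress = [102.0, 148.0, 201.0, 251.0]
simulated_stress = [100.0, 150.0, 200.0, 250.0]

for s, m in zip(simulated_stress, measured_stress):
    print(f"measured {m:6.1f}  simulated {s:6.1f}  diff {percent_difference(s, m):+.2f} %")
print(f"R^2 = {r_squared(simulated_stress, measured_stress):.4f}")
```

What counts as acceptable agreement depends on the application and on the measurement uncertainty, so any threshold should be agreed before the comparison is made.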

Beyond direct comparison, I often use uncertainty quantification techniques to assess the impact of uncertainties in input parameters on the simulation results. This helps in determining the confidence level we can place on the predictions.

Q 5. What software packages are you proficient in (e.g., ANSYS, Abaqus, COMSOL)?

I am proficient in several leading simulation software packages. My expertise includes ANSYS Mechanical and Fluent for structural and fluid simulations, respectively. I have extensive experience using Abaqus for advanced nonlinear finite element analysis, particularly in applications involving contact and large deformations. I’ve also worked with COMSOL Multiphysics, leveraging its capabilities for coupled physics simulations, such as fluid-thermal interactions in microfluidic devices.

My proficiency extends beyond basic usage; I’m familiar with advanced features like custom user-defined materials, submodeling techniques, and parallel processing for improved efficiency. I’m comfortable creating complex models, running simulations, and analyzing results within these environments.

Q 6. Explain the concept of boundary conditions in simulation.

Boundary conditions are essential inputs in any simulation that define the interaction of the model with its surroundings. They specify the values of relevant variables (e.g., displacement, pressure, temperature) at the boundaries of the simulation domain. Think of them as the constraints or inputs that dictate the behavior of the simulated system.

Examples include fixed displacement (e.g., a component clamped to a wall), prescribed pressure (e.g., inlet pressure in a pipe), convective heat transfer (e.g., heat loss from a surface to the surrounding air), and symmetry conditions (used to reduce computational costs by exploiting symmetry in the geometry). The accurate definition of boundary conditions is critical; incorrect boundary conditions can lead to erroneous or meaningless results.
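
To make the idea concrete, here is a small sketch of a one-dimensional bar clamped at one end (a fixed-displacement condition) and pulled at the other (a prescribed force). The function name, the material values, and the hand-written tridiagonal solver are assumptions chosen for illustration; a commercial package handles these steps internally.

```python
def solve_bar(n_elements, length, youngs_modulus, area, tip_force):
    """Axial bar: node 0 is clamped (u = 0), a point force acts on the last node.

    Returns the nodal displacements from a linear finite element model.
    """
    k = youngs_modulus * area / (length / n_elements)  # element stiffness
    n_free = n_elements  # node 0 is removed by the fixed-displacement condition

    # Tridiagonal stiffness matrix of the free nodes 1..n.
    diag = [2.0 * k] * n_free
    diag[-1] = k  # the tip node belongs to one element only
    off = [-k] * (n_free - 1)
    rhs = [0.0] * n_free
    rhs[-1] = tip_force  # prescribed force at the tip

    # Thomas algorithm: forward elimination, then back substitution.
    for i in range(1, n_free):
        factor = off[i - 1] / diag[i - 1]
        diag[i] -= factor * off[i - 1]
        rhs[i] -= factor * rhs[i - 1]
    u = [0.0] * n_free
    u[-1] = rhs[-1] / diag[-1]
    for i in range(n_free - 2, -1, -1):
        u[i] = (rhs[i] - off[i] * u[i + 1]) / diag[i]

    return [0.0] + u  # put the clamped node back with its prescribed value


# Hypothetical steel bar: 1 m long, 1 cm^2 cross-section, 1 kN tip load.
displacements = solve_bar(4, 1.0, 210e9, 1e-4, 1000.0)
print("tip displacement [m]:", displacements[-1])
print("analytical PL/(EA) [m]:", 1000.0 * 1.0 / (210e9 * 1e-4))
```

Without the clamp, the stiffness matrix would be singular and the bar could drift freely, which is one way an incomplete set of boundary conditions shows up in practice.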

Q 7. How do you handle convergence issues in your simulations?

Convergence issues, where the solution fails to stabilize or reaches an incorrect result, are common in simulations. My approach involves a systematic troubleshooting process. First, I examine the mesh quality: poorly shaped or excessively skewed elements are common causes of convergence problems. Re-meshing with improved element quality often resolves the issue.

Next, I check the boundary conditions for inconsistencies or unrealistic values. Sometimes, poorly defined or conflicting boundary conditions can prevent convergence. I carefully review and adjust the boundary conditions, ensuring physical consistency. The choice of solver parameters, such as time step size or under-relaxation factors, can also play a significant role. I often experiment with these parameters to find a stable solution.

Finally, I scrutinize the material properties and the constitutive models used. Inappropriate or inconsistent material definitions can lead to numerical instability. If the problem persists after exploring these aspects, I might investigate whether a different solution technique or a more sophisticated numerical model is required.
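
The effect of an under-relaxation factor, mentioned above among the solver parameters, can be shown on a toy problem. In this sketch `toy_update` is a hypothetical stand-in for one solver sweep; it is chosen so that the plain iteration diverges while a relaxed one converges.

```python
def fixed_point(update, x0, relaxation=1.0, tol=1e-10, max_iter=200):
    """Iterate x <- x + relaxation * (update(x) - x) until the change is below tol.

    Returns (x, iterations, converged).
    """
    x = x0
    for iteration in range(1, max_iter + 1):
        x_new = x + relaxation * (update(x) - x)
        if abs(x_new - x) < tol:
            return x_new, iteration, True
        if abs(x_new) > 1e12:  # treat runaway growth as divergence
            return x_new, iteration, False
        x = x_new
    return x, max_iter, False


def toy_update(x):
    """Hypothetical stand-in for one solver sweep; its fixed point is x = 1."""
    return -2.0 * x + 3.0


print("no relaxation:  ", fixed_point(toy_update, 0.0, relaxation=1.0))
print("relaxation 0.3: ", fixed_point(toy_update, 0.0, relaxation=0.3))
```

Smaller factors trade speed for stability, so in practice the factor is usually raised again once the solution has settled.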

Q 8. Describe your experience with different element types (e.g., linear, quadratic).

Choosing the right element type in finite element analysis (FEA) is crucial for accuracy and efficiency. Linear elements, the simplest type, use straight lines to approximate geometry and solution variations. They’re computationally inexpensive but can be less accurate for complex geometries or solutions with significant curvature. Think of them like using LEGO bricks to build a curved wall – you’ll get a decent approximation, but it’s not perfectly smooth.

Quadratic elements, on the other hand, use curved lines (parabolas) for approximation, providing much higher accuracy, particularly in regions with rapid changes. This is like using molding clay to build the same wall; you can achieve a smoother, more accurate representation of the curvature. However, they come with a higher computational cost due to more degrees of freedom.

In my experience, I’ve used both extensively. For a preliminary design or a quick feasibility study where speed is prioritized, I might opt for linear elements. But for critical applications like stress analysis around complex features or fluid flow simulations with significant gradients, quadratic or higher-order elements are essential to capture the detailed physics accurately. The choice often involves a trade-off between computational resources and desired accuracy, and the project’s requirements dictate this balance.
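
The difference between the two element types can be seen on a single one-dimensional element. The sketch below uses the standard Lagrange shape functions on the reference interval from -1 to 1; the curved field `xi ** 2` is a hypothetical example.

```python
def linear_shape(xi):
    """Shape functions of a 2-node line element on -1 <= xi <= 1."""
    return [(1.0 - xi) / 2.0, (1.0 + xi) / 2.0]


def quadratic_shape(xi):
    """Shape functions of a 3-node line element (nodes at xi = -1, 0, 1)."""
    return [xi * (xi - 1.0) / 2.0, 1.0 - xi * xi, xi * (xi + 1.0) / 2.0]


def interpolate(shape, nodal_values, xi):
    """Value inside the element: sum of shape function times nodal value."""
    return sum(n * v for n, v in zip(shape(xi), nodal_values))


def field(xi):
    """Hypothetical curved solution field to be represented by one element."""
    return xi ** 2


xi = 0.5
linear_value = interpolate(linear_shape, [field(-1.0), field(1.0)], xi)
quadratic_value = interpolate(quadratic_shape, [field(-1.0), field(0.0), field(1.0)], xi)
print(f"exact {field(xi):.3f}  linear {linear_value:.3f}  quadratic {quadratic_value:.3f}")
```

The quadratic element reproduces this field exactly with three nodes, while the linear element would need many small elements to approach it.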

Q 9. What are your preferred methods for post-processing simulation data?

Post-processing simulation data is where you extract meaningful insights from your analysis. My preferred methods involve a combination of visualization and data extraction techniques. I often start with graphical representations, like contour plots, deformed shapes, and vector fields to get a general understanding of the results. Software like ANSYS, Abaqus, and COMSOL offer powerful visualization tools that enable interactive exploration.

Beyond visualization, I extract quantitative data through various means. This could involve generating tables of key results (e.g., maximum stress, displacement, etc.), creating XY plots to analyze trends, or exporting data into spreadsheet software for further analysis and reporting. For complex results, I might use scripting (discussed further in a later question) to automate the extraction of specific data points of interest and to easily generate formatted reports. The choice of post-processing method depends highly on the specific goals of the simulation and the type of data generated.

Q 10. How do you determine the appropriate level of mesh refinement?

Mesh refinement, or deciding how fine your mesh needs to be, is critical for accuracy without excessive computation. It’s a balancing act. Too coarse a mesh can lead to inaccurate results, missing important details. Too fine a mesh dramatically increases computational time and resources, often with negligible improvement in accuracy.

My approach is iterative. I typically start with a relatively coarse mesh for a preliminary run to get a feel for the solution and identify areas of high gradients (e.g., high stress concentrations). Then, I selectively refine the mesh in those critical areas, leaving coarser meshes in less sensitive regions. Mesh independence studies are vital; I perform multiple runs with successively finer meshes until the quantities of interest converge, meaning further refinement yields no significant change in the results. This demonstrates that the solution is not significantly affected by the mesh density, giving confidence in the results.

Adaptive mesh refinement techniques also help automate this process; the software automatically refines the mesh based on pre-defined criteria, such as solution error estimates, saving significant time and effort.
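
A mesh independence study boils down to a simple loop. In the sketch below `solve_on_mesh` is a hypothetical stand-in for a real solver run (it integrates a sine curve with the trapezoidal rule), and the 0.1 % tolerance is an assumed value.

```python
import math


def solve_on_mesh(n_elements):
    """Hypothetical stand-in for a solver run: trapezoidal integral of sin on [0, pi].

    The exact answer is 2; the error shrinks as the 'mesh' gets finer.
    """
    h = math.pi / n_elements
    nodes = [math.sin(i * h) for i in range(n_elements + 1)]
    return h * (sum(nodes) - 0.5 * (nodes[0] + nodes[-1]))


def mesh_independence_study(n_start=4, rel_tol=1e-3, max_levels=12):
    """Double the element count until the result changes by less than rel_tol."""
    history = [(n_start, solve_on_mesh(n_start))]
    for _ in range(max_levels):
        n = history[-1][0] * 2
        value = solve_on_mesh(n)
        change = abs(value - history[-1][1]) / abs(value)
        history.append((n, value))
        if change < rel_tol:
            break
    return history


for n, value in mesh_independence_study():
    print(f"{n:5d} elements -> {value:.6f}")
```

In a real study the monitored value would be the quantity the design decision depends on, such as a peak stress or a pressure drop, rather than a global average that may converge sooner.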

Q 11. Explain the concept of model order reduction.

Model order reduction (MOR) is a technique used to reduce the complexity of large-scale simulations, which is incredibly useful when dealing with models with millions of degrees of freedom. Imagine trying to simulate the entire airflow around a car—the computational cost would be enormous. MOR comes to the rescue!

It works by creating a smaller, simpler model that accurately captures the essential dynamics of the original system. This reduced-order model is much faster to solve, enabling quicker design iterations and ‘what-if’ scenarios. Various MOR techniques exist, including proper orthogonal decomposition (POD) and Krylov subspace methods. Each method has its strengths and weaknesses, depending on the system’s characteristics.

In practice, MOR allows engineers to perform rapid design optimizations and parameter studies that would be computationally intractable with the full-order model. For instance, I’ve used MOR in the optimization of vibration characteristics of large structures, significantly speeding up the design process.
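
As a rough illustration of proper orthogonal decomposition, the sketch below extracts the leading mode from a set of solution snapshots. It uses a plain power iteration instead of the singular value decomposition a production tool would normally use, and the snapshot data are synthetic.

```python
import math


def dominant_pod_mode(snapshots, iterations=100):
    """Leading POD mode of a set of snapshots via power iteration.

    Each snapshot is a list of nodal values. The mode is the dominant
    eigenvector of C = sum_k u_k u_k^T, applied here without forming C.
    Returns (mode, captured_energy_fraction).
    """
    n = len(snapshots[0])
    mode = [1.0 / math.sqrt(n)] * n
    for _ in range(iterations):
        new = [0.0] * n
        for u in snapshots:
            weight = sum(ui * mi for ui, mi in zip(u, mode))
            for i in range(n):
                new[i] += weight * u[i]
        norm = math.sqrt(sum(x * x for x in new))
        mode = [x / norm for x in new]
    total_energy = sum(ui * ui for u in snapshots for ui in u)
    mode_energy = sum(sum(ui * mi for ui, mi in zip(u, mode)) ** 2 for u in snapshots)
    return mode, mode_energy / total_energy


# Synthetic snapshots: one strong spatial shape plus a weak second one.
n_nodes = 11
xs = [i / (n_nodes - 1) for i in range(n_nodes)]
snapshots = [
    [math.cos(t) * math.sin(math.pi * x) + 0.05 * math.sin(t) * math.sin(2.0 * math.pi * x)
     for x in xs]
    for t in [0.1 * k for k in range(20)]
]
mode, energy = dominant_pod_mode(snapshots)
print(f"energy captured by the first mode: {energy:.4f}")
```

When a handful of modes capture nearly all of the energy, the full model can be projected onto them, which is where the reduction in degrees of freedom comes from.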

Q 12. Describe your experience with scripting or automation in simulation software.

Scripting and automation are essential skills for efficient simulation workflows. I’m proficient in Python and have used it extensively with various simulation packages. For instance, I’ve written scripts to automate mesh generation based on design parameters, run parametric studies across a range of input values, post-process results, and generate reports.

    # Example Python snippet for automating a parameter study.
    # run_simulation, extract_results and save_results are placeholders for
    # the package-specific calls.
    for i in range(10):
        # Set parameter value
        parameter = i * 0.1
        # Run simulation
        run_simulation(parameter)
        # Extract results
        results = extract_results()
        # Save results
        save_results(results)

Automating these tasks frees up my time for more creative and higher-level tasks, reduces the risk of human error, and allows for consistent and repeatable simulations. This is crucial for projects involving numerous simulations or repetitive tasks.

Q 13. How do you ensure the accuracy and reliability of your simulations?

Ensuring accuracy and reliability is paramount. It’s not just about getting numbers; it’s about having confidence in those numbers. My approach is multi-faceted.

- Verification and Validation: I verify the simulation code itself by comparing the results to known analytical solutions or simpler models. Validation involves comparing simulation results to experimental data whenever possible. This builds confidence in both the model and the simulation process.

- Mesh Convergence Studies: As mentioned earlier, these are crucial to demonstrate that the solution is not significantly dependent on the mesh resolution.

- Code Review: When working in teams, code review is vital to catch potential bugs or errors early on.

- Documentation: Meticulous documentation of all assumptions, inputs, and the simulation process is essential for transparency and repeatability.

Ultimately, a rigorous and systematic approach, combined with a healthy dose of skepticism, is vital to ensure the reliability of simulation results. It’s about understanding the limitations of the model and interpreting the results carefully.

Q 14. What are some common sources of error in simulation?

Errors in simulation can stem from various sources. Some common culprits include:

- Modeling Errors: Simplifying assumptions made during model creation can introduce significant inaccuracies. For instance, neglecting certain physical effects or using idealized material properties can lead to deviations from reality.

- Meshing Errors: Poor mesh quality, such as skewed elements or excessively large aspect ratios, can lead to inaccurate or unstable solutions.

- Numerical Errors: These are inherent in numerical methods and can accumulate during the computation. Choosing appropriate solution methods and convergence criteria helps mitigate these errors.

- Boundary Condition Errors: Incorrect specification of boundary conditions can drastically alter the simulation results. Carefully defining and validating boundary conditions is essential.

- Input Data Errors: Inaccurate or incomplete input data (material properties, geometry dimensions) can directly propagate to the results. Double-checking all inputs is a critical step.

Being aware of these potential sources of error and employing strategies to minimize them, like those discussed above, are critical for generating reliable simulation results. A thorough understanding of the underlying physics and the numerical methods used is key to identifying and addressing these issues.
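
Mesh quality checks of the kind listed above are easy to script. The sketch below computes two common measures for a triangle: a normalized shape quality that equals 1 for an equilateral triangle, and the ratio of longest to shortest edge. The function name and the example coordinates are hypothetical, and each solver defines its own quality metrics.

```python
import math


def triangle_quality(p0, p1, p2):
    """Return (shape_quality, edge_ratio) for a triangle given by 2D points.

    shape_quality = 4 * sqrt(3) * area / (sum of squared edge lengths):
    1 for an equilateral triangle, close to 0 for a sliver.
    edge_ratio = longest edge / shortest edge.
    """
    a = math.dist(p1, p2)
    b = math.dist(p0, p2)
    c = math.dist(p0, p1)
    area = 0.5 * abs((p1[0] - p0[0]) * (p2[1] - p0[1]) - (p2[0] - p0[0]) * (p1[1] - p0[1]))
    shape_quality = 4.0 * math.sqrt(3.0) * area / (a * a + b * b + c * c)
    edge_ratio = max(a, b, c) / min(a, b, c)
    return shape_quality, edge_ratio


equilateral = [(0.0, 0.0), (1.0, 0.0), (0.5, math.sqrt(3.0) / 2.0)]
sliver = [(0.0, 0.0), (1.0, 0.0), (0.5, 0.02)]
print("equilateral:", triangle_quality(*equilateral))
print("sliver:     ", triangle_quality(*sliver))
```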

Q 15. How do you handle non-linear material behavior in your simulations?

Handling non-linear material behavior in simulations is crucial for accurate results, as many real-world materials don’t behave linearly under stress. Linearity implies a proportional relationship between stress and strain; however, many materials exhibit plasticity (permanent deformation), viscoelasticity (time-dependent behavior), or other complex phenomena.

To address this, we employ advanced constitutive models within the simulation software. These models mathematically describe the material’s non-linear response. For example, for metals undergoing plastic deformation, we might use a plasticity model like the von Mises yield criterion with isotropic or kinematic hardening rules. For polymers exhibiting viscoelasticity, we’d utilize models like the Maxwell or Kelvin-Voigt models, often incorporating temperature and rate dependence. The choice of model depends heavily on the material and the loading conditions.

The simulation software then uses numerical integration techniques to solve the governing equations, often requiring iterative approaches due to the non-linearity. Convergence criteria are set to ensure accuracy and stability. Proper mesh refinement in regions of high stress gradients is also vital for capturing the complex behavior accurately. It’s often an iterative process: we compare simulation results to experimental data, refine the model if necessary (adjusting material parameters or selecting a more appropriate model), and re-run the simulation until satisfactory agreement is achieved.
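
For a feel of what a plasticity model does at each integration point, here is a sketch of the textbook return-mapping update for one-dimensional plasticity with linear isotropic hardening. The material values are hypothetical. In this simple case the update has a closed form; general models need a local Newton iteration instead.

```python
def update_stress(strain, plastic_strain, hardening_var, E, yield_stress, H):
    """One strain-driven update of 1D plasticity with linear isotropic hardening.

    Returns (stress, plastic_strain, hardening_var) after the return mapping.
    """
    trial_stress = E * (strain - plastic_strain)
    overshoot = abs(trial_stress) - (yield_stress + H * hardening_var)
    if overshoot <= 0.0:
        return trial_stress, plastic_strain, hardening_var  # elastic step
    sign = 1.0 if trial_stress > 0.0 else -1.0
    d_gamma = overshoot / (E + H)  # plastic multiplier from the consistency condition
    stress = trial_stress - E * d_gamma * sign
    return stress, plastic_strain + d_gamma * sign, hardening_var + d_gamma


# Hypothetical material in MPa: E = 200 GPa, yield at 250 MPa, H = 10 GPa.
E, YIELD, H = 200000.0, 250.0, 10000.0
plastic_strain, hardening_var = 0.0, 0.0
for strain in [0.001, 0.002, 0.003, 0.002]:
    stress, plastic_strain, hardening_var = update_stress(
        strain, plastic_strain, hardening_var, E, YIELD, H
    )
    print(f"strain {strain:.3f}  stress {stress:8.2f} MPa  plastic strain {plastic_strain:.6f}")
```

The last step unloads the material: the stress drops along the elastic slope while the plastic strain stays where it was, which is the permanent deformation described above.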

Q 16. Explain your experience with different solver types (e.g., implicit, explicit).

Solver types are fundamental to simulation. Implicit and explicit solvers each have strengths and weaknesses depending on the problem being modeled. Implicit solvers work by solving for the equilibrium state at the end of a time step. The common implicit schemes are unconditionally stable for linear problems, so the time step is limited by accuracy and non-linear convergence rather than by stability, and much larger steps can be taken. This makes them efficient for quasi-static or low-speed dynamic problems, such as structural analysis under static loads or slow creep. However, each time step is computationally expensive, because it involves solving a large system of equations.

Explicit solvers, on the other hand, directly calculate the state at the end of a time step based on the state at the beginning. They are well-suited for high-speed impact and crash simulations where large deformations occur rapidly. They are conditionally stable and require small time steps: the Courant-Friedrichs-Lewy (CFL) condition limits the step to roughly the time a stress wave needs to cross the smallest element. While each time step is computationally cheap, the need for many small time steps can lead to long overall simulation times.

My experience includes using both implicit solvers (like those found in ANSYS Mechanical) for structural analyses and explicit solvers (like LS-DYNA) for impact simulations. The choice of solver is always driven by the nature of the problem and the desired level of accuracy within available computational resources.
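
The time-step limit of an explicit solver can be estimated by hand. The sketch below assumes a one-dimensional bar wave speed of sqrt(E / rho), typical steel properties, and a safety factor of 0.9; commercial codes use their own element-specific estimates.

```python
import math


def wave_speed(youngs_modulus, density):
    """Speed of a longitudinal stress wave in a slender bar."""
    return math.sqrt(youngs_modulus / density)


def stable_time_step(min_element_length, youngs_modulus, density, safety_factor=0.9):
    """CFL-type estimate: time for a wave to cross the smallest element, scaled down."""
    return safety_factor * min_element_length / wave_speed(youngs_modulus, density)


# Assumed values for steel: E = 210 GPa, density = 7850 kg/m^3.
c = wave_speed(210e9, 7850.0)
dt = stable_time_step(0.005, 210e9, 7850.0)
print(f"wave speed {c:.0f} m/s, stable step for 5 mm elements {dt:.2e} s")
print(f"steps needed for a 0.1 s event: {0.1 / dt:.0f}")
```

Because the smallest element controls the step for the whole model, a single tiny element can multiply the run time, which is why explicit meshes are usually kept as uniform as the geometry allows.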

Q 17. Describe your experience with optimization techniques in simulation.

Optimization techniques are essential for improving design efficiency and achieving optimal performance. In simulation, optimization algorithms help find the best design parameters to meet specific objectives, such as minimizing weight while maintaining strength or maximizing efficiency.

I’ve extensively used optimization techniques, including gradient-based methods (like steepest descent or conjugate gradient) and evolutionary algorithms (like genetic algorithms). Gradient-based methods rely on calculating the sensitivity of the objective function to changes in design parameters, making them efficient for smooth functions. However, they can get stuck in local optima. Evolutionary algorithms are robust in navigating complex design spaces with multiple local optima but are computationally more expensive.

For example, in designing a lightweight car component, I used a genetic algorithm to optimize its geometry, minimizing weight while ensuring it could withstand specified loads. The software automatically iterated through many design variations, evaluating each using finite element analysis and selecting the best designs based on predefined criteria.

Response Surface Methodology (RSM) is another valuable technique I’ve employed. RSM builds a statistical model of the objective function using a limited number of simulations and then uses the model to find the optimum. This is particularly useful when computationally expensive simulations are involved.
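
The response-surface idea can be reduced to its smallest form: sample the expensive model at three points, fit a parabola, and jump to its stationary point. In the sketch below `expensive_simulation` is a hypothetical stand-in for a solver run, and a real study would fit a regression model over a full set of DOE points.

```python
def expensive_simulation(x):
    """Hypothetical stand-in for a costly solver run (e.g. mass vs. wall thickness)."""
    return (x - 2.0) ** 2 + 1.0


def quadratic_surrogate_optimum(x0, x1, x2, y0, y1, y2):
    """Stationary point of the parabola through three sampled points.

    It is a minimum only if the fitted parabola opens upwards.
    """
    num = (x1 - x0) ** 2 * (y1 - y2) - (x1 - x2) ** 2 * (y1 - y0)
    den = (x1 - x0) * (y1 - y2) - (x1 - x2) * (y1 - y0)
    return x1 - 0.5 * num / den


samples = [0.0, 1.0, 3.0]
responses = [expensive_simulation(x) for x in samples]
x_best = quadratic_surrogate_optimum(*samples, *responses)
print(f"surrogate optimum at x = {x_best:.3f}, predicted with only 3 runs")
```

The surrogate's prediction should still be confirmed with one more run of the full model at the proposed optimum.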

Q 18. How do you interpret and present simulation results to non-technical stakeholders?

Communicating complex simulation results to non-technical stakeholders requires a clear and concise approach that avoids jargon. I always start with the big picture: what were we trying to achieve with the simulation, and what are the key findings?

Visualizations are critical. Instead of showing complex data tables, I use charts, graphs, and animations to illustrate key trends and results. For instance, a simple bar graph comparing the strength of different design options is much more effective than a detailed stress contour plot. Animations showing the deformation of a structure under load can also help convey critical information effectively.

I focus on explaining the implications of the results in terms of the business goals. For example, rather than saying “the maximum stress is 150 MPa,” I would say, “this design exceeds the safety limit and needs to be revised to prevent failure.” Using relatable analogies—such as comparing stress to pressure in a water pipe—can further enhance understanding. Finally, I always allow time for questions and ensure the audience understands the limitations and uncertainties associated with the simulation results.

Q 19. What are your experiences with parallel computing in simulations?

Parallel computing is essential for handling the large computational demands of complex simulations. My experience includes leveraging parallel processing capabilities in various software packages. This involves distributing the computational workload across multiple processors or cores, significantly reducing simulation time.

For instance, in finite element analysis, the mesh can be partitioned, and each sub-domain can be processed concurrently on different processors. The results are then combined to obtain the overall solution. I am familiar with using MPI (Message Passing Interface) and OpenMP (Open Multi-Processing) programming paradigms to achieve parallel processing, and I’ve utilized cloud-based high-performance computing resources (like AWS or Azure) when dealing with exceptionally large models.

The efficiency gains from parallel computing are significant. A simulation that might take days on a single processor can often be completed in hours or even minutes when using multiple processors. However, the overhead of communication between processors needs to be carefully managed to avoid performance degradation. Choosing the appropriate parallel computing strategy depends on the specific software, hardware, and the problem’s nature.
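
One common way to reason about these gains is Amdahl's law, which is brought in here as an illustration and bounds the speedup by the fraction of the work that can run in parallel. The 95 % parallel fraction below is an assumed value, and the formula ignores communication overhead, so real speedups are lower.

```python
def amdahl_speedup(parallel_fraction, n_processors):
    """Upper bound on speedup when only part of the work can be parallelized."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_processors)


for n in (1, 4, 16, 64, 256):
    print(f"{n:4d} processors -> speedup at most {amdahl_speedup(0.95, n):5.2f}")
```

With 5 % of the run time serial, the speedup can never exceed 20 however many processors are added, which is why reducing serial steps such as file I/O and meshing often matters as much as adding cores.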

Q 20. Describe a challenging simulation project you worked on and how you overcame the obstacles.

One challenging project involved simulating the fluid-structure interaction of a marine propeller during cavitation. Cavitation, the formation and collapse of vapor bubbles around the propeller blades, leads to erosion and reduced efficiency. Accurately simulating this phenomenon is computationally very demanding due to the complex interaction between the fluid and the solid, and the need for extremely fine mesh resolution around the blades.

The initial simulations experienced convergence difficulties and unrealistic results. We overcame these obstacles by employing a multi-physics approach, carefully coupling the Computational Fluid Dynamics (CFD) solver with a Finite Element Analysis (FEA) solver. We also implemented advanced turbulence models and used adaptive mesh refinement to resolve the fine-scale details of the cavitation bubbles. Finally, we validated our simulation results against experimental data from cavitation tunnel tests. Through iterative refinement of the model, mesh, and solver settings, we successfully produced accurate and reliable predictions of propeller performance and cavitation patterns. The project demonstrated the importance of a thorough understanding of the underlying physics, careful model selection, and meticulous validation when tackling such complex simulations.

Q 21. What are your experiences with different types of simulations (e.g., static, dynamic, transient)?

My experience encompasses various simulation types, each suitable for different applications:

- Static simulations analyze structures under constant loads. These are useful for determining stresses, strains, and displacements in components under static loading conditions. Examples include analyzing the strength of a bridge under its own weight or the stress distribution in a pressure vessel.

- Dynamic simulations model the behavior of structures subjected to time-varying loads or impacts. They are used to study vibrations, shocks, and impacts. Examples include analyzing the response of a building to an earthquake or the crashworthiness of a vehicle.

- Transient simulations model systems that change with time, including temperature variations and dynamic events. They’re crucial for capturing time-dependent behavior. Examples include analyzing the heat transfer in an electronic component during operation or the thermal stress in a turbine blade.

I have used these simulation types in various projects, from designing aerospace components that can withstand high-speed airflows to modeling the dynamic response of structures during extreme events. The choice of simulation type is dictated by the specifics of the problem, the desired level of detail, and the computational resources available.

Q 22. Explain your understanding of uncertainty quantification in simulation.

Uncertainty quantification (UQ) in simulation acknowledges that models are never perfect representations of reality. Input parameters, material properties, and even the governing equations themselves contain inherent uncertainties. UQ aims to quantify and propagate these uncertainties through the simulation to understand the range of possible outcomes. This is crucial because relying solely on a single simulation result, ignoring uncertainty, can lead to flawed design decisions and potentially catastrophic consequences.

For example, imagine simulating the stress on a bridge. We might have uncertainties in the material strength of the steel, the exact loading conditions (weight of vehicles, wind speed), and the precise geometry of the bridge. UQ techniques allow us to account for these uncertainties by using probabilistic methods, like Monte Carlo simulations. This will produce a range of possible stress values rather than a single deterministic value, giving us a much more realistic and robust picture of the bridge’s safety.

Common UQ methods include:

- Monte Carlo simulation: Repeatedly running the simulation with different random inputs sampled from probability distributions.

- Latin Hypercube Sampling: A stratified sampling scheme that covers the input space more evenly than plain Monte Carlo, so fewer runs are usually needed for the same accuracy.

- Sensitivity analysis: Identifying which input parameters have the most significant impact on the output.

By understanding the range of possible outcomes, we can make more informed decisions, perhaps by increasing safety factors or conducting further investigations.
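
A Monte Carlo study can be sketched with the standard library alone. The model below is deliberately trivial (stress equals force divided by area), and the input distributions are hypothetical; in a real study each sample would be a full simulation run or a surrogate evaluation.

```python
import random
import statistics


def monte_carlo_stress(n_samples=10000, seed=0):
    """Sample uncertain inputs and return the resulting stresses in MPa."""
    rng = random.Random(seed)  # fixed seed so the study is repeatable
    stresses = []
    for _ in range(n_samples):
        force = rng.gauss(10000.0, 500.0)  # N, assumed mean and standard deviation
        area = rng.gauss(100.0, 2.0)       # mm^2, assumed mean and standard deviation
        stresses.append(force / area)
    return stresses


stresses = monte_carlo_stress()
mean_stress = statistics.mean(stresses)
std_stress = statistics.stdev(stresses)
p95_stress = sorted(stresses)[int(0.95 * len(stresses))]
print(f"mean {mean_stress:.1f} MPa, std {std_stress:.1f} MPa, 95th percentile {p95_stress:.1f} MPa")
```

Reporting the 95th percentile next to the mean gives decision makers a direct view of how much margin the nominal result hides.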

Q 23. How do you choose the appropriate simulation method for a given problem?

Choosing the right simulation method is crucial for accurate and efficient results. The selection depends on several factors:

- Problem physics: Is it structural mechanics, fluid dynamics, heat transfer, or a multiphysics problem? For example, analyzing fluid flow requires Computational Fluid Dynamics (CFD), while analyzing stress in a solid would use Finite Element Analysis (FEA).

- Geometry complexity: Simple geometries might be handled by analytical methods, while complex ones demand numerical methods like FEA or CFD.

- Required accuracy: High-accuracy simulations generally require more computational resources and more sophisticated methods.

- Available software and resources: The choice of simulation method also depends on what software you have access to and the computational power at your disposal.

For example, simulating the aerodynamic performance of a car would necessitate using CFD software, potentially employing techniques like Reynolds-Averaged Navier-Stokes (RANS) or Large Eddy Simulation (LES), depending on the level of detail required. In contrast, analyzing the stress distribution in a simple beam under load might be sufficiently addressed with a simpler FEA approach.

A systematic approach is key: start by clearly defining the problem, identifying the relevant physics, assessing geometry complexity, and then selecting the appropriate method based on these factors. Software selection often comes last, chosen based on its capability to handle the selected method and the problem at hand.

Q 24. Describe your experience with experimental validation of simulation results.

Experimental validation is paramount to ensure the reliability of simulation results. It’s the process of comparing simulation predictions with real-world experimental data. This comparison helps to assess the accuracy of the simulation model, identify potential errors, and improve the model’s predictive capabilities.

In my experience, I’ve validated simulation results using various experimental techniques, including:

- Strain gauge measurements: To verify stress and strain predictions in structural analysis.

- Pressure transducers: To validate pressure predictions in fluid dynamics simulations.

- Temperature sensors: To validate temperature predictions in thermal simulations.

For example, in a project involving the simulation of a car crash, I used high-speed cameras to record the deformation of the vehicle during a controlled crash test. These experimental results were then compared against the FEA simulations, which included material models calibrated with material testing data. The comparison allowed us to refine our material model and ensure the accuracy of the simulated crash behavior.

Discrepancies between simulation and experiment highlight areas for improvement, like refining the model, improving input parameters, or re-evaluating assumptions. A well-defined validation plan, outlining experimental methods, data acquisition, and comparison techniques, is essential for a successful validation exercise.

Q 25. How familiar are you with design of experiments (DOE) techniques?

I am highly familiar with Design of Experiments (DOE) techniques. DOE is a powerful statistical methodology for planning experiments efficiently and effectively. It allows us to investigate the effects of multiple input parameters on the simulation output with a minimum number of runs.

Common DOE techniques I’ve used include:

- Full factorial designs: All possible combinations of input parameters are tested.

- Fractional factorial designs: A subset of the full factorial design is used to reduce the number of experiments.

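- Taguchi methods: Focus on making a design robust by minimizing the influence of noise factors, typically using orthogonal arrays.

- Response surface methodology (RSM): Fits a statistical model (usually a low-order polynomial) to the responses and uses it to optimize the design parameters.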

For instance, in optimizing the design of a heat sink, I employed a fractional factorial DOE to assess the impact of fin height, fin thickness, and base material on heat dissipation. This allowed us to identify the most influential parameters and determine optimal design configurations more efficiently than exhaustive testing.

DOE significantly improves simulation efficiency, reduces the number of simulations needed to achieve meaningful results, and helps in identifying important design factors and interactions. Choosing the right DOE strategy depends on the specific problem, the number of input variables, and the desired level of detail.
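
To make the difference between full and fractional factorial designs concrete, here is a minimal sketch for three two-level factors in coded units (-1 for the low level, +1 for the high level). The factor labels A, B, and C and the generator C = AB are assumptions made for illustration; they are not taken from a specific project.

```python
from itertools import product

def full_factorial(n_factors):
    """All 2**n_factors combinations of the coded levels -1 and +1."""
    return [list(run) for run in product((-1, 1), repeat=n_factors)]

def half_fraction_3():
    """2^(3-1) design: vary A and B fully and set C = A * B."""
    return [[a, b, a * b] for a, b in product((-1, 1), repeat=2)]

full = full_factorial(3)   # e.g. A = fin height, B = fin thickness, C = material
half = half_fraction_3()

print(f"full factorial: {len(full)} runs")
print(f"half fraction:  {len(half)} runs")
for run in half:
    print(run)
```

The full design needs 8 runs and the half fraction only 4. The price is that the main effect of C is confounded with the A×B interaction, so this kind of design suits screening studies where interactions are expected to be small.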

Q 26. What are your experiences with multiphysics simulations?

Multiphysics simulations involve solving coupled equations that describe the interactions between different physical phenomena. My experience includes coupling various physics, such as:

- Fluid-structure interaction (FSI): Simulating the interaction between a fluid and a deformable structure. For example, analyzing the effect of wind loads on a bridge or the flow of blood in arteries.

- Thermal-structural coupling: Modeling the interaction between temperature changes and structural deformations. This is critical in applications like the design of electronic components or engines.

- Electro-mechanical coupling: Simulating the interaction between electrical fields and mechanical deformations. This is important in the design of actuators and sensors.

One project involved simulating the FSI of a propeller in water. This required coupling CFD (to simulate water flow) and FEA (to simulate propeller deformation). The results accurately predicted cavitation and propeller performance, providing valuable insights for design optimization.

Multiphysics simulations are often computationally intensive and require specialized software and expertise in coupled physics. They offer a more realistic representation of real-world phenomena compared to single-physics simulations, making them invaluable in numerous engineering applications.
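
One common way to couple two solvers is a partitioned scheme: each solver runs in turn and exchanges interface data until the values stop changing. The sketch below shows that loop with under-relaxation. The two "solvers" are deliberately trivial scalar stand-ins (a pressure that drops linearly with deflection and a linear spring), and every name and constant is hypothetical; a real FSI setup would call CFD and FEA codes at these points.

```python
def fluid_solver(displacement, p0=300.0, k_fluid=50.0):
    """Toy fluid model: interface pressure falls as the structure deflects."""
    return p0 - k_fluid * displacement

def structure_solver(pressure, k_struct=100.0):
    """Toy structural model: linear spring loaded by the interface pressure."""
    return pressure / k_struct

def coupled_solve(relaxation=0.8, tol=1e-10, max_iter=100):
    """Fixed-point iteration between the two solvers with under-relaxation."""
    d = 0.0  # initial guess for the interface displacement
    for iteration in range(1, max_iter + 1):
        p = fluid_solver(d)            # fluid step with current displacement
        d_new = structure_solver(p)    # structural step with new pressure
        d_next = (1.0 - relaxation) * d + relaxation * d_new
        if abs(d_next - d) < tol:
            return d_next, fluid_solver(d_next), iteration
        d = d_next
    raise RuntimeError("coupling iteration did not converge")

disp, pres, n_iter = coupled_solve()
print(f"displacement = {disp:.6f}, pressure = {pres:.4f}, iterations = {n_iter}")
```

For this toy problem the exact coupled solution is a displacement of 2.0 and a pressure of 200.0. The relaxation factor trades speed for stability, and strongly coupled problems often need smaller values or more advanced acceleration schemes.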

Q 27. Explain the concept of mesh independence.

Mesh independence refers to the condition where the simulation results are no longer significantly affected by further refinement of the computational mesh. The mesh is a discretization of the geometry used in numerical methods like FEA and CFD.

In simpler terms, imagine trying to approximate the area of a circle using smaller and smaller squares. Initially, the approximation is coarse, but as you use smaller squares (finer mesh), the approximation improves. At some point, using even smaller squares doesn’t significantly improve the accuracy. That’s mesh independence. It ensures that the results are not artifacts of the mesh but represent the true solution of the underlying physical problem.

Achieving mesh independence involves performing a series of simulations with increasingly finer meshes. The results are then compared, and if the difference is negligible (within a predefined tolerance), mesh independence is achieved. This is typically done by plotting a key simulation output (e.g., stress, displacement, pressure) against the mesh density. Once the plot plateaus, it suggests that the solution has converged, and mesh independence has been reached.

Ignoring mesh independence can lead to inaccurate and unreliable results. An overly coarse mesh might result in significant errors, whereas an excessively fine mesh might be computationally expensive without providing any substantial improvement in accuracy.
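
A mesh independence check can be sketched in a few lines. The example below compares a monitored quantity on three meshes, computes the relative change between the two finest, and applies Richardson extrapolation to estimate the observed order of convergence and the mesh-independent value. The stress values and the refinement ratio of 2 are made up for illustration, and the formula assumes a constant refinement ratio and monotone convergence.

```python
import math

def relative_change(previous, current):
    """Relative change of a monitored result between two successive meshes."""
    return abs(current - previous) / abs(current)

def richardson(coarse, medium, fine, ratio):
    """Observed order of convergence and extrapolated value from three meshes.

    Assumes a constant refinement ratio and monotone convergence, so that
    (coarse - medium) and (medium - fine) have the same sign.
    """
    order = math.log((coarse - medium) / (medium - fine)) / math.log(ratio)
    extrapolated = fine + (fine - medium) / (ratio ** order - 1.0)
    return order, extrapolated

# Hypothetical peak stress (MPa) on coarse, medium, and fine meshes.
coarse, medium, fine = 108.0, 102.0, 100.5

change = relative_change(medium, fine)
order, estimate = richardson(coarse, medium, fine, ratio=2.0)
print(f"change medium -> fine: {change:.2%}")
print(f"observed order: {order:.2f}, extrapolated value: {estimate:.2f} MPa")
```

Here the fine-mesh result differs from the medium-mesh result by about 1.5%, the observed order is 2, and the extrapolated value is 100 MPa. Whether 1.5% counts as mesh independent depends on the tolerance set for the study.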

Q 28. Describe your experience with different types of contact definitions in FEA.

Finite Element Analysis (FEA) often involves defining contact between different parts of a model. The type of contact definition significantly influences the accuracy and computational cost of the simulation. Different types of contact definitions address different aspects of physical interaction.

I have experience with various contact definitions, including:

- Bonded contact: Simulates a perfect bond between two surfaces, implying no relative movement between them. This is used when two parts are rigidly connected.

- No separation contact: Allows small frictionless sliding along the contact surfaces but prevents them from separating in the normal direction. This is useful for situations where parts are expected to remain in contact under load.

- Frictionless contact: Allows sliding between surfaces without any frictional resistance.

- Frictional contact: Considers the frictional forces that arise between surfaces in contact. The friction coefficient is a crucial parameter here.

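- Rough contact: Prevents sliding between the surfaces (equivalent to an infinite friction coefficient) while still allowing them to separate. Despite the name, it does not explicitly model surface roughness.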

Choosing the appropriate contact definition is crucial for accurate simulations. For example, simulating a bolted joint would typically involve a combination of bonded contact (for the bolt threads) and frictional contact (between the bolt head and the clamped surfaces). Misrepresenting the contact type might lead to an inaccurate prediction of stress distribution and the overall structural integrity.

Proper contact definition also requires careful consideration of contact parameters like friction coefficients, surface roughness, and contact algorithms. The selection of these parameters is often based on experimental measurements or material properties data.
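
The role of the friction coefficient in frictional contact can be illustrated with the Coulomb friction law: a contact point sticks while the tangential force stays within the friction limit (coefficient times normal force) and slides once it exceeds it. The function below is a simplified sketch with hypothetical names and force values; FEA solvers evaluate an equivalent condition at each contact point using their own contact algorithms.

```python
def contact_status(normal_force, tangential_force, mu):
    """Classify a contact point using the Coulomb friction law.

    normal_force > 0 means the surfaces are pressed together.
    mu is the friction coefficient (mu = 0 gives frictionless contact).
    """
    if normal_force <= 0.0:
        return "open"       # surfaces are not pressed together
    if abs(tangential_force) <= mu * normal_force:
        return "sticking"   # tangential force is within the friction limit
    return "sliding"        # friction limit exceeded

print(contact_status(100.0, 20.0, 0.3))
print(contact_status(100.0, 40.0, 0.3))
print(contact_status(100.0, 1.0, 0.0))
print(contact_status(-5.0, 0.0, 0.3))
```

With a normal force of 100 and a friction coefficient of 0.3, the friction limit is 30, so a tangential force of 20 sticks and 40 slides. With a coefficient of zero, any tangential force produces sliding, which is the frictionless case.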

Key Topics to Learn for Use of Design and Simulation Software Interview

- Software Proficiency: Demonstrate mastery of specific software packages (e.g., AutoCAD, SolidWorks, ANSYS, MATLAB) relevant to the job description. Practice complex modeling and simulation tasks.

- Design Principles: Understand fundamental engineering design principles (e.g., material selection, stress analysis, tolerance analysis) and how they are applied within the software.

- Simulation Techniques: Explain various simulation methods (e.g., Finite Element Analysis (FEA) and Computational Fluid Dynamics (CFD)) and their applications in different engineering disciplines. Be prepared to discuss the limitations of each method.

- Data Analysis & Interpretation: Showcase your ability to interpret simulation results, identify trends, and draw meaningful conclusions. Practice presenting your findings clearly and concisely.

- Problem-Solving & Troubleshooting: Describe your approach to identifying and resolving errors or unexpected results during the simulation process. Highlight your experience with debugging and validation techniques.

- Collaboration & Teamwork: Discuss your experience working collaboratively on design and simulation projects, including version control and data sharing practices.

- Project Management: Demonstrate understanding of project timelines, resource allocation, and effective communication within a team environment related to simulations.

Next Steps

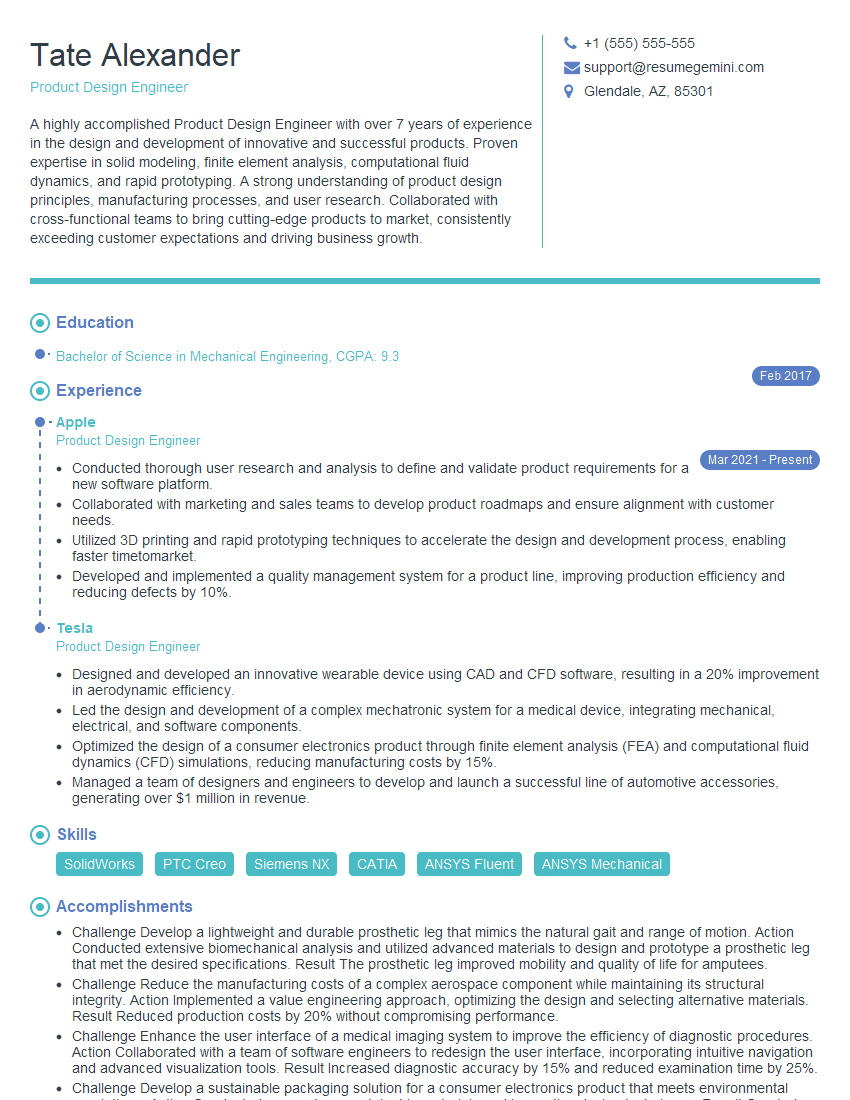

Mastering the use of design and simulation software is crucial for career advancement in today’s competitive engineering landscape. Proficiency in these tools opens doors to exciting opportunities and significantly enhances your problem-solving capabilities. To maximize your job prospects, creating a strong, ATS-friendly resume is paramount. ResumeGemini can help you craft a professional and impactful resume tailored to highlight your skills and experience. We offer examples of resumes specifically designed for candidates with expertise in Use of Design and Simulation Software to guide you through the process. Let ResumeGemini help you land your dream job!
